QoS Configuration and Integration

This chapter provides an overview of the principles of Riverbed Quality of Service (QoS). Additionally, it shows how to configure Riverbed QoS and how to integrate SteelHeads into existing QoS architectures.


Important: A QoS configuration generally requires the configuration of many SteelHeads. To avoid repetitive configuration steps on single SteelHeads, Riverbed strongly recommends that you use the SCC 9.0 or later to configure QoS on your SteelHeads. SCC enables you to configure one time and to send the configuration out to multiple SteelHeads instead of connecting to a SteelHead, performing the configuration, and repeating the same configuration for all SteelHeads in the network. For details, see the SteelCentral Controller for SteelHead User’s Guide.

Important: If you are using a RiOS release earlier than 9.0, the features described in this chapter do not apply. For information about releases before RiOS 9.0, see earlier versions of the SteelHead Deployment Guide on the Riverbed Support site at https://support.riverbed.com.

For more information about Riverbed QoS, see the SteelHead Management Console User’s Guide. For configuration examples, see QoS Configuration Examples.

This chapter assumes that you are familiar with Topology and Application Definitions.

Overview of Riverbed QoS

Riverbed QoS is complementary to RiOS WAN optimization. Whereas Scalable Data Referencing (SDR), transport streamlining, and application streamlining techniques perform best with bandwidth-hungry or thick applications (such as email, file sharing, and backup), QoS improves performance in latency-sensitive or thin applications (such as VoIP and interactive applications). QoS depends on accurate classification of traffic, bandwidth reservation, and proper traffic priorities.

Put another way, WAN optimization speeds up some traffic by reducing its bandwidth needs and accelerating it, whereas QoS slows down some traffic to guarantee latency and bandwidth to other traffic. A combination of both techniques is ideal.

Because the SteelHead acts as a TCP proxy, the appliance already works with traffic flows. Riverbed QoS is an extensive flow-based QoS system, which can queue traffic on a per-flow basis and uses standard TCP mechanics for traffic shaping to avoid packet loss on a congested link. SteelHeads support inbound and outbound QoS.

The major functionalities of QoS are as follows:

• Classification - Identifies and groups traffic. Riverbed QoS identifies traffic using TCP/UDP header information, the VLAN ID, or the Application Flow Engine (AFE). Identified traffic is grouped into classes, QoS marked with a differentiated services code point (DSCP) or Type of Service (ToS) value, or both.

• Policing - Defines the action against the classified traffic. Riverbed QoS can define a minimum and maximum bandwidth per class, the priority of a class relative to other classes, and a weight for the usage of excess bandwidth (unused bandwidth, which is allocated to other classes).

• Enforcement - Determines how the action takes place. Enforcement is performed using the SteelHead QoS scheduler, which is based on the Hierarchical Fair Service Curve (HFSC) algorithm.

You can perform policing and enforcement on a downstream networking device when classification and QoS marking are performed on the SteelHead. This is useful if the SteelHead must integrate with an existing QoS implementation.

Riverbed QoS is flow based. For TCP, the SteelHead must detect the three-way handshake or it cannot classify a traffic flow. If a traffic flow is not classified, it falls into the default class.

After a traffic flow is classified, it is registered and cannot be reclassified. If a flow changes to a different application and is not reset by the application, the classification stays the same as before the change.
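The following Python sketch illustrates the flow-based behavior described above: a flow is classified only when its handshake (SYN) is seen, flows without a visible handshake fall into the default class, and a registered flow keeps its class even if the application inside it changes. The `FlowClassifier` class, the 5-tuple key, and the `app` argument (standing in for application detection) are hypothetical illustrations, not a RiOS API.

```python
from dataclasses import dataclass, field

# Hypothetical flow key: (src_ip, src_port, dst_ip, dst_port, protocol).
FlowKey = tuple[str, int, str, int, str]

DEFAULT_CLASS = "default"


@dataclass
class FlowClassifier:
    """Sketch of sticky, handshake-based flow classification."""

    rules: dict[str, str]  # application name -> QoS class
    registered: dict[FlowKey, str] = field(default_factory=dict)

    def on_packet(self, key: FlowKey, is_syn: bool, app: str) -> str:
        # A registered flow keeps its class, even if the application
        # carried inside the flow changes later.
        if key in self.registered:
            return self.registered[key]
        # Without the handshake the flow cannot be classified,
        # so it falls into the default class.
        if not is_syn:
            return DEFAULT_CLASS
        qos_class = self.rules.get(app, DEFAULT_CLASS)
        self.registered[key] = qos_class
        return qos_class
```

In this model, a flow first seen as SSH stays in its interactive class even if it later carries FTP-like traffic, which mirrors the rule that a classified flow is not reclassified unless the application resets the connection.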

SteelHeads set up 1024-packet-deep packet buffers per configured class, regardless of the packet size. You can adjust the depths of the packet buffers using the CLI.

For information about adjusting packet buffers, see the SteelHead Management Console User’s Guide.

Riverbed QoS takes effect as soon as traffic congestion occurs on a link. Congestion occurs when multiple flows are sending data at the same time and the packets of the flows are not forwarded immediately, forming a queue.

There are two types of congestion: long term, which lasts a second or longer, and short term, which occurs for less than a second. Both types of congestion signal that one or more applications are slowed down because of other traffic.

You can best manage long-term congestion by shaping traffic: reserving bandwidth for the more important traffic. This is the most well-known implementation for QoS.

Short-term congestion can cause applications to hang for a second or reduce the quality of a VoIP conversation. You can manage short-term congestion by prioritizing latency-sensitive packets so that they move ahead in the queue.

A well-designed QoS environment uses both prioritization and traffic shaping to guarantee bandwidth and latency for applications.

Many QoS implementations use some form of packet fair queueing (PFQ), such as weighted fair queueing (WFQ) or class-based weighted fair queueing (CBWFQ). As long as high-bandwidth traffic requires a high priority (or vice versa), PFQ systems perform adequately. However, problems arise for PFQ systems when the traffic mix includes high-priority, low-bandwidth traffic (such as VoIP), or high-bandwidth traffic that does not require a high priority (such as email), particularly when both of these traffic types occur together.

Additional features such as low-latency queueing (LLQ) attempt to address these concerns by introducing a separate system of strict priority queueing that is used for high-priority traffic. However, LLQ is not a principled way of handling bandwidth and latency trade-offs. LLQ is a separate queueing mechanism meant as a workaround for PFQ limitations.

The Riverbed QoS system is based on a patented version of HFSC. HFSC allows bandwidth allocation for multiple applications of varying latency sensitivity. HFSC explicitly considers delay and bandwidth at the same time. Latency is described in six priority levels (real-time, interactive, business critical, normal, low, and best-effort) that you assign to classes.

When you assign a latency priority to a class, you define how much delay the class can tolerate. At the same time, bandwidth guarantees are respected. This enables Riverbed QoS to deliver low latency to traffic without wasting bandwidth, and to deliver high bandwidth to delay-insensitive traffic without disrupting delay-sensitive traffic.

The Riverbed QoS system achieves the benefits of LLQ without the complexity and potential configuration errors of separate, parallel queueing mechanisms.

For example, you can combine high-priority, low-bandwidth traffic (SSH, Telnet, Citrix, RDP, CRM systems, and so on) with lower-priority, high-bandwidth traffic (FTP, backup, replication, and so on). This enables you to protect delay-sensitive traffic such as VoIP alongside other delay-sensitive traffic such as video conferencing, RDP, and Citrix, without having to reserve large amounts of bandwidth for these traffic classes.

Additionally, HFSC provides a framework for the following:

• Link sharing - Specifies how excess bandwidth is allocated among sibling classes. By default, all link shares are equal. QoS classes with a larger link-share weight are allocated more of the excess bandwidth than QoS classes with a smaller link-share weight.

• Class hierarchy - A class hierarchy lets you create QoS classes as children of QoS classes other than the root class. This allows you to build a class tree with overall parameters for a certain traffic type and specific parameters for subtypes of that traffic.

For more information about QoS classes, see QoS Classes.

You can apply Riverbed QoS to both pass-through and optimized traffic; QoS does not require the optimization service. For optimized traffic, QoS classification occurs during connection setup, before optimization and compression, whereas QoS shaping and enforcement occur after optimization and compression. Pass-through traffic has QoS shaping and enforcement applied as well. However, with the introduction of the SteelHead CX and SteelHead EX, there are platform-specific limits for the following outbound QoS settings:

• Maximum configurable root bandwidth

• Maximum number of classes

• Maximum number of rules

• Maximum number of sites

There are no platform-specific limits for inbound QoS.

You can perform differentiated services code point (DSCP) marking and QoS enforcement on the same traffic. First mark the traffic, and then perform QoS classification and enforcement on the post-marked traffic.

For information about marking traffic, see QoS Marking.
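As a hedged illustration of marking before classification, the sketch below writes a DSCP value into the upper six bits of the IPv4 ToS byte (the DSCP layout defined in RFC 2474, with the two low-order ECN bits left untouched) and then classifies the post-marked packet on that DSCP. The packet dictionary and the `dscp_classes` map are hypothetical stand-ins, not SteelHead data structures.

```python
def dscp_to_tos(dscp: int) -> int:
    """DSCP occupies the upper six bits of the IPv4 ToS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0-63")
    return dscp << 2


def tos_to_dscp(tos: int) -> int:
    return (tos >> 2) & 0x3F


def mark(packet: dict, dscp: int) -> dict:
    # Rewrite the DSCP bits and keep the two ECN bits as they were.
    marked = dict(packet)
    marked["tos"] = dscp_to_tos(dscp) | (packet["tos"] & 0x03)
    return marked


def classify(packet: dict, dscp_classes: dict[int, str]) -> str:
    # Classification looks at the packet *after* marking.
    return dscp_classes.get(tos_to_dscp(packet["tos"]), "default")


pkt = mark({"tos": 0x01}, 46)  # DSCP 46 (EF), ECN bits preserved
print(hex(pkt["tos"]), classify(pkt, {46: "realtime"}))
```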

QoS Concepts

This section describes the concepts of Riverbed QoS.

Overview of QoS Concepts

In RiOS 9.0, Riverbed introduces a new process to configure QoS on SteelHeads. This new process greatly simplifies the configuration and administration efforts, compared to earlier RiOS versions.

A QoS configuration generally requires the configuration of many SteelHeads. To avoid repetitive configuration steps on single SteelHeads, Riverbed strongly recommends that you use the SCC 9.0 or later to configure QoS on your SteelHeads. SCC enables you to configure one time and to send the configuration out to multiple SteelHeads instead of connecting to a SteelHead, performing the configuration, and repeating the same configuration for all SteelHeads in the network.

For more information about using the SCC to configure SteelHeads, see the SteelCentral Controller for SteelHead Deployment Guide 9.0 or later.

QoS configuration consists of three building blocks, some of which are shared with the RiOS path selection and secure transport features.

• Topology - The topology combines a set of parameters that enable a SteelHead to build its view of the network. The topology consists of network and site definitions, including information about how a site connects to a network. With the concept of a topology, a SteelHead can automatically build paths to remote sites and calculate the bandwidth that is available on these paths.

For more information about topology, see Topology.

• Applications - An application is a set of criteria to classify traffic. The definition of an application enables the SteelHead to ensure that the traffic belonging to this application is treated according to how you have configured it. Technically, this means that the SteelHead can allocate the necessary bandwidth and priority for an application to ensure its optimal transport through the network. You can define an application based on manually configured criteria or by using the AFE, which can recognize over 1200 applications.

• QoS profile - A profile combines a set of QoS classes and rules that reflect how you intend to treat applications going to (outbound) or coming from (inbound) a site. To ensure that an application works optimally, you must classify it into a QoS class, which holds the parameters for bandwidth allocation and prioritization.

The classification of the application into a QoS class is done with QoS rules. A rule can be based on a single application or application groups.

You can assign a profile to one or multiple sites.

The information contained in the topology, application, and profile building blocks enables the SteelHead to apply QoS efficiently.

For more information about secure transport, see Overview of Secure Transport and the SteelCentral Controller for SteelHead Deployment Guide.

Application configuration and detection enables easy traffic classification. In topology, the configuration of networks, sites, and uplinks enables RiOS to build the QoS class tree at the site level and to calculate the available bandwidth to each configured site. This calculation also accounts for possible oversubscription of the local links and enables you to add or delete sites without having to recalculate the bandwidths to the sites. The profiles apply the site-specific QoS classes and rules to the sites to allocate bandwidth and priority to applications.

QoS Rules

In RiOS 9.0 and later, QoS rules assign traffic to a particular QoS class. Prior to RiOS 9.0, QoS rules defined an application; applications are now defined separately. For more information, see Application Definitions.

Note: You must define an application before you use it in a QoS rule.

A QoS rule is part of a profile and assigns an application or application group to a QoS class. If a QoS rule is based on an application group, it counts as a single rule. Grouping applications intelligently can significantly reduce the number of rules per site.

Including the QoS rule in the profile prevents the repetitive configuration of QoS rules, because you can assign a QoS profile to multiple sites.

The order in which the rules appear in the QoS rules table is important. Rules are evaluated from top to bottom, and evaluation stops at the first matching rule. Place the more granular QoS rules at the top of the QoS rules list.
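A minimal sketch of first-match evaluation, assuming a hypothetical `QosRule` type: rules are checked top to bottom, the first match wins, and a rule that names an application group still counts as one rule. The example shows why a granular rule must sit above a broader group rule that also contains the same application.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QosRule:
    # One application or an application group; either way it is one rule.
    applications: frozenset[str]
    qos_class: str


def match_rule(rules: list[QosRule], app: str, default_class: str = "default") -> str:
    """Return the class of the first rule, top to bottom, that matches app."""
    for rule in rules:
        if app in rule.applications:
            return rule.qos_class  # stop at the first match
    return default_class


specific = QosRule(frozenset({"crm-web"}), "business-critical")
web_group = QosRule(frozenset({"http", "https", "crm-web"}), "normal")

print(match_rule([specific, web_group], "crm-web"))  # granular rule first
print(match_rule([web_group, specific], "crm-web"))  # group rule shadows it
```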

For more information about QoS profiles, see QoS Profiles.

Riverbed QoS supports shaping and marking for multicast and broadcast traffic. To classify this type of traffic, you must configure a custom application based on IP header, because the AFE does not support multicast and broadcast traffic.

To configure a QoS rule

1. Choose Networking > Network Services: Quality of Service.

2. Click Edit for the QoS profile for which you want to configure a rule.

3. Select Add a Rule.

4. Type the first three or four letters of the application or application group you want to create a rule for, and select it from the drop-down list of available applications and application groups (Figure: New QoS Rule).

Figure: New QoS Rule

Optionally, mark traffic for this application with a DSCP value or preserve the existing one, and then click Save.

The newly created QoS rule displays in the QoS rules table of the QoS profile.

Figure: New QoS Rule Priority and Outbound DSCP Setting

QoS Classes

This section describes QoS classes.

A QoS class represents an aggregation of traffic that is treated the same way by the QoS scheduler. The QoS class specifies the constraints and parameters, such as minimum bandwidth guarantee and latency priority, to ensure the optimal performance of applications. Additionally, the queue type specifies whether packets belonging to the same TCP/UDP session are treated as a flow or as individual packets.

In RiOS 9.0 and later, QoS classes are part of a QoS profile.

For more information about QoS profiles, see QoS Profiles.

To configure a QoS class

1. Choose Networking > Network Services: Quality of Service.

2. Create a new profile or edit the QoS profile for which you want to configure QoS classes.

3. In the QoS classes section, click Edit.

RiOS 9.0 does not create a default class in the profiles. Make sure you create a default class and point the default rule to it.

In RiOS versions prior to 9.0, you were required to choose between different QoS modes. RiOS 9.0 automatically sets up the QoS class hierarchy based on the information contained in the topology and profile configuration.

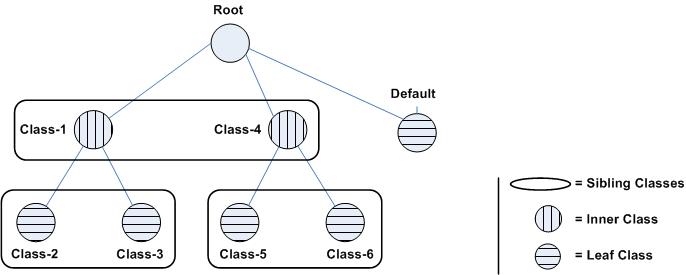

Class Hierarchy

A class hierarchy allows creating QoS classes as children of QoS classes other than the root class. This allows you to create overall parameters for a certain traffic type and specify parameters for subtypes of that traffic.

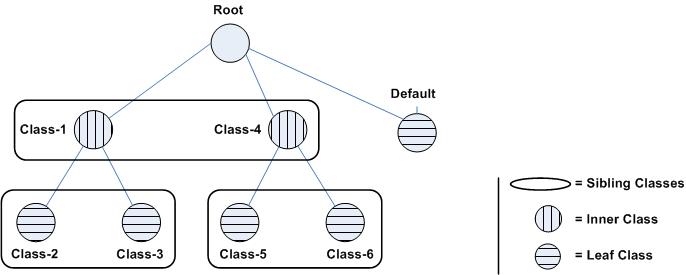

In a class hierarchy, the following relationships exist between QoS classes:

• Sibling classes - Classes that share the same parent class.

• Leaf classes - Classes at the bottom of the class hierarchy.

• Inner classes - Classes that are neither the root class nor leaf classes.

QoS rules can only specify leaf classes as targets for traffic.
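The relationships above can be derived from a simple parent map. In this sketch (hypothetical helper names, with a tree modeled on the class 1, 2, 3, 4, and 6 example later in this section), each class is labeled root, inner, or leaf, and a rule target is rejected unless it is a leaf class.

```python
from collections import defaultdict


def class_roles(parent_of: dict[str, str]) -> dict[str, str]:
    """Label each class as root, inner, or leaf.

    parent_of maps every non-root class to its parent class.
    """
    children = defaultdict(list)
    for child, parent in parent_of.items():
        children[parent].append(child)
    roles = {}
    for name in set(parent_of) | set(parent_of.values()):
        if name not in parent_of:
            roles[name] = "root"   # no parent
        elif children[name]:
            roles[name] = "inner"  # has a parent and children
        else:
            roles[name] = "leaf"   # bottom of the hierarchy
    return roles


def siblings(parent_of: dict[str, str], name: str) -> set[str]:
    parent = parent_of.get(name)
    return {c for c, p in parent_of.items() if p == parent and c != name}


def check_rule_target(parent_of: dict[str, str], target: str) -> None:
    # QoS rules may only point at leaf classes.
    if class_roles(parent_of).get(target) != "leaf":
        raise ValueError(f"rule target {target!r} is not a leaf class")


tree = {"1": "root", "2": "1", "3": "1", "4": "root", "6": "4", "default": "root"}
print(sorted(class_roles(tree).items()))
```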

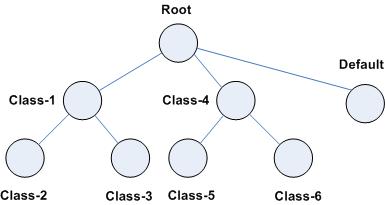

Figure: Hierarchical Mode Class Structure shows a class hierarchy's structure and the relationships between the QoS classes.

Figure: Hierarchical Mode Class Structure

QoS controls the traffic of hierarchical QoS classes in the following manner:

• QoS rules assign active traffic to leaf classes.

• The QoS scheduler:

– applies active leaf class parameters to the traffic.

– applies parameters to inner classes that have active leaf class children.

– continues this process up the class hierarchy.

– constrains the total output bandwidth to the WAN rate specified on the root class.

The following examples show how class hierarchy controls traffic.

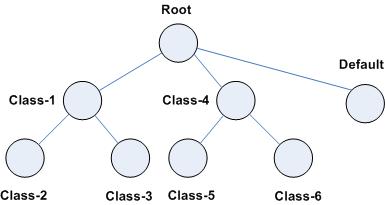

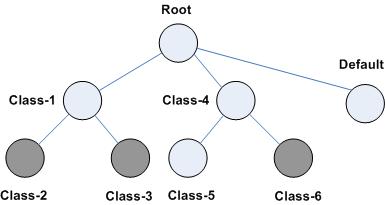

Figure: Example of QoS Class Hierarchy shows six QoS classes. The root and default QoS classes are built-in and are always present. This example shows the QoS class hierarchy structure.

Figure: Example of QoS Class Hierarchy

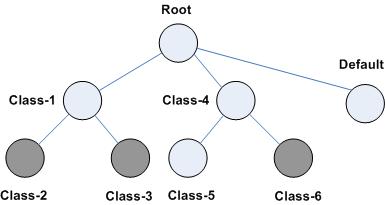

Figure: QoS Classes 2, 3, and 6 Have Active Traffic shows there is active traffic beyond the overall WAN bandwidth rate. This example shows a scenario in which the QoS rules place active traffic into three QoS classes: Classes 2, 3, and 6.

Figure: QoS Classes 2, 3, and 6 Have Active Traffic

Riverbed QoS rules place active traffic into QoS classes in the following manner.

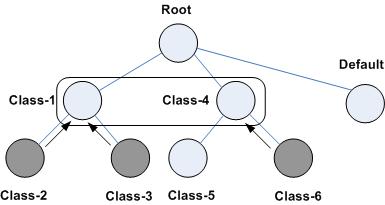

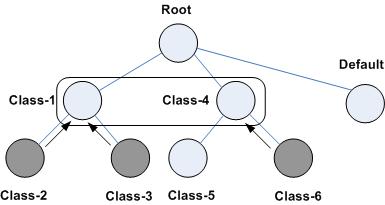

• The QoS scheduler:

• applies the constraints for the lower leaf classes.

• applies bandwidth constraints to all leaf classes. The QoS scheduler awards minimum guarantee percentages among siblings, after which the QoS scheduler awards excess bandwidth, after which the QoS scheduler applies upper limits to the leaf class traffic.

• applies latency priority to the leaf classes. For example, if class 2 is configured with a higher latency priority than class 3, the QoS scheduler gives traffic in class 2 the chance to be transmitted before class 3. Bandwidth guarantees still apply for the classes.

– Traffic from class 2 and class 3 is logically combined and treated as if it were class 1 traffic.

– Because class 4 only has active traffic from class 6, the QoS scheduler treats the traffic as if it were class 4 traffic.

Figure: How the QoS Scheduler Applies Constraints of Parent Class to Child Classes
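The bandwidth steps the scheduler applies to siblings can be approximated with the sketch below. It is a simplified, single-level model and not the HFSC algorithm itself: demands are assumed to be known, minimum guarantees are awarded first, excess is shared in proportion to the link-share weight, and no class exceeds its upper limit. The `ClassParams` fields, percentages taken relative to the parent capacity, and the equal-share fallback for zero weights are all assumptions.

```python
from typing import NamedTuple


class ClassParams(NamedTuple):
    demand: float   # bandwidth the class currently wants (assumed known)
    min_pct: float  # guaranteed minimum, % of parent bandwidth
    max_pct: float  # upper limit, % of parent bandwidth
    weight: float   # link-share weight (RiOS derives it from min_pct)


def allocate(capacity: float, classes: dict[str, ClassParams]) -> dict[str, float]:
    """Split a parent's bandwidth among sibling classes."""
    # Step 1: minimum guarantees, never more than a class demands.
    alloc = {n: min(c.demand, capacity * c.min_pct / 100) for n, c in classes.items()}
    ceiling = {n: min(c.demand, capacity * c.max_pct / 100) for n, c in classes.items()}
    remaining = capacity - sum(alloc.values())
    active = {n for n in classes if alloc[n] < ceiling[n]}

    # Steps 2 and 3: share the excess by weight, capped at each ceiling.
    while remaining > 1e-9 and active:
        weights = {n: classes[n].weight for n in active}
        total = sum(weights.values())
        if total == 0:  # assumption: zero-weight classes split excess equally
            weights = {n: 1.0 for n in active}
            total = float(len(active))
        spent = 0.0
        for n in list(active):
            grant = min(remaining * weights[n] / total, ceiling[n] - alloc[n])
            alloc[n] += grant
            spent += grant
            if alloc[n] >= ceiling[n] - 1e-9:
                active.discard(n)  # saturated: demand or upper limit reached
        remaining -= spent
    return alloc


result = allocate(1000, {
    "A": ClassParams(demand=800, min_pct=50, max_pct=100, weight=50),
    "B": ClassParams(demand=800, min_pct=30, max_pct=35, weight=30),
    "C": ClassParams(demand=50, min_pct=20, max_pct=100, weight=20),
})
print(result)
```

In this example, class C uses only 50 of its 200 guaranteed units, class B stops at its 35% upper limit, and the excess neither of them can use flows to class A. For a hierarchy, you could call `allocate` again for each inner class, using the parent's allocation as the capacity for its children.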

In RiOS 9.0 and later, you configure sites in the topology and traffic classes in profiles. With this information, RiOS 9.0 and later automatically set up the QoS class hierarchy.

The bandwidth of the local site uplink is assigned to the root class. The sites form the next layer of the class hierarchy, and the bandwidth of each site uplink is assigned to the corresponding site class. The next layer of the hierarchy is then obtained from the profile assigned to each site.
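A minimal sketch of this translation, assuming hypothetical `sites` and `profiles` structures: the local uplink bandwidth becomes the root, each site uplink bandwidth becomes a site class, and the profile classes are resolved to absolute minimum and maximum rates under their site. Oversubscription handling is not modeled.

```python
from dataclasses import dataclass


@dataclass
class ProfileClass:
    name: str
    min_pct: float  # % of the site bandwidth
    max_pct: float  # % of the site bandwidth


def build_hierarchy(local_uplink_kbps: float,
                    sites: dict[str, tuple[float, str]],
                    profiles: dict[str, list[ProfileClass]]) -> dict:
    """sites maps a site name to (site uplink kbps, profile name)."""
    tree = {"root": {"bandwidth_kbps": local_uplink_kbps, "sites": {}}}
    for site, (uplink_kbps, profile_name) in sites.items():
        # Profile classes become children of the site class, with
        # percentages resolved against the site uplink bandwidth.
        classes = {
            c.name: (uplink_kbps * c.min_pct / 100, uplink_kbps * c.max_pct / 100)
            for c in profiles[profile_name]
        }
        tree["root"]["sites"][site] = {"bandwidth_kbps": uplink_kbps,
                                       "classes": classes}
    return tree


branch = [ProfileClass("realtime", 20, 30), ProfileClass("default", 10, 100)]
tree = build_hierarchy(10000, {"NYC": (2000, "branch"), "LON": (4000, "branch")},
                       {"branch": branch})
print(tree["root"]["sites"]["NYC"])
```

Because the same profile is reused for both sites, the configuration is written once, while the absolute rates differ per site according to each uplink.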

Figure: Example of Topology and Profile Translating into Class Hierarchy shows how the configuration in the topology and the profile translates into a class hierarchy.

Figure: Example of Topology and Profile Translating into Class Hierarchy

For more information about topology, see Topology. For more information about profiles, see QoS Profiles.

Per-Class Parameters

The QoS scheduler uses the per-class configured parameters to determine how to treat traffic belonging to the QoS class. The per-class parameters are as follows:

• Guaranteed minimum bandwidth - When there is bandwidth contention, this parameter specifies the minimum amount of bandwidth as a percentage of the parent class bandwidth. The QoS class might receive more bandwidth if there is unused bandwidth remaining. The link-share weight determines how much of the unused bandwidth is allocated to a class relative to other classes. The total minimum guaranteed bandwidth of all QoS classes must be less than or equal to 100% of the parent class. You can set the value as low as 0%.

• Link share weight - Allocates excess bandwidth based on the minimum bandwidth guarantee for each class. This parameter is not exposed in the SteelHead Management Console; it is implicitly set from the minimum bandwidth guarantee of the class.

• Maximum bandwidth - Specifies the maximum allowed bandwidth a QoS class receives as a percentage of the parent class guaranteed bandwidth. The upper bandwidth limit is applied even if there is excess bandwidth available. The upper bandwidth limit must be greater than or equal to the minimum bandwidth guarantee for the class. The smallest value you can assign is 0.01%.

• Connection limit - Specifies the maximum number of optimized connections for the QoS class. When the limit is reached, all new connections are passed through unoptimized. In hierarchical mode, a parent class connection limit does not affect its children: each child class is limited only by the connection limit specified for its own class. For example, if B is a child of A, the connection limit for A is 5, and the connection limit for B is 10, class B can still have up to 10 optimized connections. Connection limit is supported only in in-path configurations. Connection limit is not supported in out-of-path or virtual-in-path configurations.

RiOS does not support a connection limit assigned to any QoS class that is associated with a QoS rule with an AFE component. An AFE component consists of a Layer-7 protocol specification. RiOS cannot honor the class connection limit because the QoS scheduler might subsequently reclassify the traffic flow after applying a more precise match using AFE identification.

In RiOS 9.0 and later, this parameter is available through the CLI only. For information about how to configure the connection limit, see the Riverbed Command-Line Interface Reference Manual.
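The constraints listed above for minimum and maximum bandwidth can be checked before a profile is applied. This is a hypothetical validation helper for one set of sibling classes, not a RiOS or SCC function; percentages are relative to the parent class.

```python
def validate_siblings(classes: dict[str, tuple[float, float]]) -> list[str]:
    """classes maps a class name to (min_pct, max_pct) for one set of siblings."""
    errors = []
    total_min = sum(min_pct for min_pct, _ in classes.values())
    if total_min > 100:
        errors.append(f"minimum guarantees add up to {total_min}%, more than 100%")
    for name, (min_pct, max_pct) in classes.items():
        if min_pct < 0:
            errors.append(f"{name}: minimum guarantee is below 0%")
        if max_pct < 0.01:
            errors.append(f"{name}: maximum bandwidth is below 0.01%")
        if max_pct < min_pct:
            errors.append(f"{name}: maximum bandwidth is below the minimum guarantee")
    return errors


print(validate_siblings({"voip": (70, 100), "backup": (40, 30)}))
```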

QoS Class Latency Priorities

Latency priorities indicate how delay-sensitive a traffic class is. A latency priority does not control how bandwidth is used or shared among different QoS classes. You can assign a QoS class latency priority when you create a QoS class or modify it later.

Riverbed QoS has six QoS class latency priorities. The following table summarizes the QoS class latency priorities in descending order.

| Latency priority | Example |
|---|---|
| Real time | VoIP, video conferencing |
| Interactive | Citrix, RDP, Telnet, and SSH |
| Business critical | Thick client applications, ERPs, CRMs |
| Normal priority | Internet browsing, file sharing, email |
| Low priority | FTP, backup, replication, and other high-throughput data transfers; recreational applications such as audio file sharing |
| Best effort | Lowest priority |

Typically, applications such as VoIP and video conferencing are given real-time latency priority, whereas especially delay-insensitive applications, such as backup and replication, are given low latency priority.

Important: The latency priority describes only the delay sensitivity of a class, not how much bandwidth it is allocated, nor how important the traffic is compared to other classes. Therefore, it is common to configure low latency priority for high-throughput, delay-insensitive applications such as FTP, backup, and replication.

QoS Queue Types

Each QoS class has a configured queue type parameter. The following queue types are available:

• Stochastic Fairness Queueing (SFQ) - Determines SteelHead behavior when the number of packets in a QoS class outbound queue exceeds the configured queue length. When SFQ is used, packets are dropped from within the queue, among the present traffic flows. SFQ ensures that each flow within the QoS class receives a fair share of output bandwidth relative to each other, preventing bursty flows from starving other flows within the QoS class. SFQ is the default queue parameter.

• First-in, First-Out (FIFO) - Determines SteelHead behavior when the number of packets in a QoS class outbound queue exceeds the configured queue length. When FIFO is used, packets received after this limit is reached are dropped, hence the first packets received are the first packets transmitted.

• MX-TCP - Not a queueing algorithm but a TCP transport type. For details, see MX-TCP.
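To illustrate the difference in drop behavior, the following sketch compares a FIFO queue with tail drop and a simplified SFQ. The SFQ model uses an exact per-flow queue instead of hashed buckets, drops from the flow with the most queued packets when the class queue is full, and serves flows round robin; these are simplifying assumptions, not the SteelHead implementation.

```python
from collections import OrderedDict, deque


class FifoQueue:
    """Tail drop: once the queue is full, arriving packets are discarded."""

    def __init__(self, limit: int):
        self.limit = limit
        self.packets = deque()

    def enqueue(self, flow: str, pkt) -> bool:
        if len(self.packets) >= self.limit:
            return False  # the arriving packet is dropped
        self.packets.append((flow, pkt))
        return True

    def dequeue(self):
        return self.packets.popleft() if self.packets else None


class SfqQueue:
    """Simplified SFQ: per-flow queues served round robin; on overflow,
    a packet is dropped from the flow with the most queued packets."""

    def __init__(self, limit: int):
        self.limit = limit
        self.flows = OrderedDict()  # flow -> deque of packets, in service order
        self.size = 0

    def enqueue(self, flow: str, pkt) -> None:
        self.flows.setdefault(flow, deque()).append(pkt)
        self.size += 1
        if self.size > self.limit:
            longest = max(self.flows, key=lambda f: len(self.flows[f]))
            self.flows[longest].pop()  # drop from within the queue
            self.size -= 1
            if not self.flows[longest]:
                del self.flows[longest]

    def dequeue(self):
        if not self.flows:
            return None
        flow, pkts = self.flows.popitem(last=False)  # next flow in turn
        pkt = pkts.popleft()
        self.size -= 1
        if pkts:
            self.flows[flow] = pkts  # re-queue the flow at the back
        return flow, pkt
```

With a queue limit of four packets, a burst of four bulk packets fills the FIFO queue and the VoIP packet that arrives next is dropped, whereas SFQ drops one of the queued bulk packets instead and sends the VoIP packet second.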

QoS Queue Depth

RiOS assigns each configured QoS class a queue with a depth of 100 packets. Therefore, the queue size in bytes depends on the packet size. You can modify the queue size using the CLI.
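Because the depth is counted in packets, both the byte capacity and the time needed to drain a full queue depend on packet size. The arithmetic below uses the 100-packet depth from this section and a hypothetical class rate of 1,000 kbps; the function names are illustrative.

```python
def queue_bytes(depth_packets: int, packet_bytes: int) -> int:
    # The depth is counted in packets, so byte capacity scales with packet size.
    return depth_packets * packet_bytes


def full_queue_delay_ms(depth_packets: int, packet_bytes: int, rate_kbps: float) -> float:
    # Time to drain a full queue at the class rate: bits / (kbit/s) = milliseconds.
    return queue_bytes(depth_packets, packet_bytes) * 8 / rate_kbps


for size in (64, 1500):
    print(size, queue_bytes(100, size), full_queue_delay_ms(100, size, 1000))
```

With 1,500-byte packets, a full queue holds 150,000 bytes and takes 1,200 ms to drain at 1,000 kbps; with 64-byte packets it holds 6,400 bytes and drains in about 51 ms.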

For more information about how to configure the queue size of a QoS class, see the Riverbed Command-Line Interface Reference Manual.

MX-TCP

MX-TCP is a QoS class queue parameter, but with very different use cases than the other queue parameters. MX-TCP also has secondary effects that you must understand before you configure it.

When optimized traffic is mapped into a QoS class with the MX-TCP queueing parameter, the TCP congestion control mechanism for that traffic is altered on the SteelHead. The normal TCP behavior of reducing the outbound sending rate when detecting congestion or packet loss is disabled, and the outbound rate is made to match the minimum guaranteed bandwidth configured on the QoS class.

You can use MX-TCP to achieve high throughput rates even when the physical medium carrying the traffic has high loss rates. For example, a common usage of MX-TCP is for ensuring high throughput on satellite connections where no lower-layer loss recovery technique is in use.

Another usage of MX-TCP is to achieve high throughput over high-bandwidth, high-latency links, especially when intermediate routers do not have properly tuned interface buffers. Improperly tuned router buffers cause TCP to perceive congestion in the network, resulting in unnecessarily dropped packets, even when the network can support high throughput rates.
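To show why this matters on lossy or long links, the sketch below compares the well-known Mathis et al. steady-state estimate for loss-based TCP, throughput ~ (MSS / RTT) * sqrt(3/2) / sqrt(p), with a simplified MX-TCP model that sends at the class's minimum guaranteed bandwidth regardless of loss. The link figures (600 ms RTT, 1% loss, 10 Mbit/s class) are hypothetical.

```python
import math


def loss_based_tcp_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Mathis et al. estimate for loss-based (Reno-style) TCP throughput."""
    return (mss_bytes * 8 / rtt_s) * math.sqrt(1.5) / math.sqrt(loss_rate)


def mx_tcp_bps(min_guarantee_bps: float, loss_rate: float) -> float:
    # Simplified model: MX-TCP does not back off on loss, so its sending
    # rate is the class's minimum guaranteed bandwidth whatever loss_rate is.
    return min_guarantee_bps


# Hypothetical satellite link: 600 ms RTT, 1% loss, 10 Mbit/s MX-TCP class.
tcp = loss_based_tcp_bps(1460, 0.6, 0.01)
mx = mx_tcp_bps(10_000_000, 0.01)
print(f"standard TCP ~ {tcp / 1e6:.2f} Mbit/s, MX-TCP ~ {mx / 1e6:.2f} Mbit/s")
```

Under these assumptions, loss-based TCP settles at roughly 0.24 Mbit/s, while the MX-TCP class keeps sending at its configured 10 Mbit/s.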

MX-TCP is incompatible with the AFE. A traffic flow that is classified as MX-TCP cannot subsequently be reclassified into a different queue, yet AFE identification can trigger such a reclassification when it finds a more exact match for the traffic.

Follow these guidelines when you enable MX-TCP:

• The QoS rule for MX-TCP is at the top of QoS rules list

• The rule does not use AFE identification

• Use MX-TCP for optimized traffic only
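These guidelines can be expressed as a configuration check. The sketch below is a hypothetical helper, not a RiOS feature; it interprets "at the top of the list" as "no MX-TCP rule appears below a non-MX-TCP rule", and the `Rule` fields (`uses_afe`, `optimized_only`) are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    qos_class: str
    uses_afe: bool        # rule relies on AFE identification
    optimized_only: bool  # rule matches optimized traffic only


def check_mx_tcp_rules(rules: list[Rule], mx_tcp_classes: set[str]) -> list[str]:
    problems = []
    seen_non_mx = False
    for position, rule in enumerate(rules):
        if rule.qos_class not in mx_tcp_classes:
            seen_non_mx = True
            continue
        if seen_non_mx:
            problems.append(f"{rule.name}: MX-TCP rule below other rules (position {position})")
        if rule.uses_afe:
            problems.append(f"{rule.name}: MX-TCP rule uses AFE identification")
        if not rule.optimized_only:
            problems.append(f"{rule.name}: MX-TCP rule also matches unoptimized traffic")
    return problems


rules = [Rule("web", "normal", True, True), Rule("satellite", "mx", True, False)]
for problem in check_mx_tcp_rules(rules, {"mx"}):
    print(problem)
```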

As with any other QoS class, you can configure MX-TCP to scale from the specified minimum bandwidth up to a specific maximum. This allows MX-TCP to use available bandwidth during nonpeak hours. During traffic congestion, MX-TCP scales back to the configured minimum bandwidth.

For information about configuring MX-TCP before RiOS 8.5, see earlier versions of the SteelHead Deployment Guide on the Riverbed Support site at https://support.riverbed.com.

You can configure a specific rule for an MX-TCP class for packet-mode UDP traffic. An MX-TCP rule for packet-mode UDP traffic is useful for UDP bulk transfer and data-replication applications (for example, Aspera and Veritas Volume Replicator).

Packet-mode traffic matching any non-MX-TCP class is classified into the default class because QoS does not support packet-mode optimization.

For more information about MX-TCP as a transport streamlining mode, see Transport Streamlining. For an example of how to configure QoS and MX-TCP, see Configuring QoS and MX-TCP.

QoS Profiles

The QoS profile combines QoS classes and rules into a building block for QoS.

You can create a hierarchical QoS class structure within a profile to segregate traffic based on flow source or destination, and you can apply different shaping rules and priorities to each leaf-class.

The SteelHead Management Console supports configuring three levels of hierarchy. If you need more levels of hierarchy, you can configure them using the CLI.

A profile can be used for inbound and outbound QoS.

To configure a QoS profile

1. Choose Networking > Network Services: Quality of Service.

Figure: Add a New QoS Profile

2. Create a new QoS profile, either from a blank template or based on an existing profile. Basing a new QoS profile on an existing one simplifies the configuration because you can adjust the parameters of the profile instead of starting from nothing.

3. Specify a name for the QoS profile and click Save.

The newly created QoS profile is displayed in the list.

Figure: QoS Profile Details

A blank QoS profile comes with a root class and the default rule. To configure QoS classes, see QoS Classes. To configure QoS rules, see QoS Rules.

After you create the QoS profile, you can use the configured set of QoS classes and rules for multiple sites.

To assign a QoS profile to a site

1. Choose Networking > Topology: Sites & Networks.

2. Click Edit Site in the Sites section.

3. In the QoS Profiles section of the configuration page, open the Outbound QoS Profile drop-down menu and select the profile for this site.

You can assign the same QoS profile for inbound and outbound QoS. However, inbound and outbound QoS usually serve different purposes, so you will likely need to configure a separate QoS profile for inbound QoS. For more information about inbound QoS, see Inbound QoS.

For more information about configuring profiles, see Creating QoS Profiles and MX-TCP.

Configuring QoS

This section shows how to configure Riverbed QoS.

This section assumes that you are familiar with QoS Concepts, Topology, and Application Definitions.

QoS Configuration Workflow

In RiOS 9.0 and later, you configure QoS modularly. Some configuration tasks needed for QoS are also needed for the path selection and secure transport features. You configure modules independently of each other, and RiOS combines the information from the modules into a QoS configuration. This greatly simplifies QoS configuration compared to previous RiOS versions.

To configure QoS you must complete the following tasks:

1. Configure the applications - Define the applications that QoS is to handle. For details, see Application Definitions.

2. Configure the profiles - Configure the classes and rules that prioritize and shape the applications. For details, see QoS Profiles.

3. Configure the topology - Set up the local site and the remote sites to which QoS applies, configure the bandwidths to and from the sites, and assign a profile to each site. For details, see Topology.

4. Enable QoS on the SteelHead.
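The four workflow steps can be sanity-checked in order with a sketch like the following. The `Profile` structure and `check_qos_config` helper are hypothetical; they encode requirements stated in this chapter: applications must be defined before rules use them, a profile needs a default class that the default rule points to, each site needs a profile, and QoS must be enabled on the appliance.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Profile:
    classes: set               # configured class names
    rules: list                # [(application, qos_class)], top to bottom
    default_rule_class: Optional[str] = None


def check_qos_config(applications: set, profiles: dict, sites: dict,
                     qos_enabled: bool) -> list:
    """Walk the workflow steps and report what is missing."""
    issues = []
    for pname, prof in profiles.items():
        # Step 1: applications must exist before a rule references them.
        for app, qos_class in prof.rules:
            if app not in applications:
                issues.append(f"{pname}: rule references undefined application {app!r}")
            if qos_class not in prof.classes:
                issues.append(f"{pname}: rule targets unknown class {qos_class!r}")
        # Step 2: RiOS 9.0 does not create a default class for you.
        if prof.default_rule_class not in prof.classes:
            issues.append(f"{pname}: default rule does not point to a configured class")
    # Step 3: every site in the topology needs an existing profile.
    for site, pname in sites.items():
        if pname not in profiles:
            issues.append(f"site {site}: profile {pname!r} is not defined")
    # Step 4: QoS must be enabled on the SteelHead.
    if not qos_enabled:
        issues.append("QoS is not enabled on the SteelHead")
    return issues


branch = Profile(classes={"realtime", "default"}, rules=[("voip", "realtime")],
                 default_rule_class="default")
print(check_qos_config({"voip", "ssh"}, {"branch": branch}, {"NYC": "branch"}, True))
```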

A QoS configuration generally requires the configuration of many SteelHeads. To avoid repetitive configuration steps on single SteelHeads, Riverbed strongly recommends that you use the SCC 9.0 or later to configure QoS on your SteelHeads. SCC enables you to configure one time and to send the configuration out to multiple SteelHeads instead of connecting to a SteelHead, performing the configuration and repeating the same configuration for all SteelHeads in the network.

Enabling QoS

This section describes how to enable QoS.

To enable QoS on a SteelHead

1. Choose Networking > Network Services: Quality of Service.

2. Select the check box next to the QoS feature you want to enable.

Figure: Enabling QoS

You can enable outbound QoS marking without having to enable inbound or outbound QoS shaping.

After you enable QoS globally, verify that QoS is also enabled on the SteelHead in-path interface.

3. In the Manage QoS Per Interface section of the configuration page, enable QoS on the in-path interface.

4. Click Save.

Note: Enabling QoS marking is a global configuration setting. You cannot enable it per in-path interface.

Important: RiOS QoS needs to be part of the TCP three-way handshake to be able to classify traffic. If you enable QoS on a SteelHead, all existing TCP flows are classified into the default site. Riverbed recommends that you enable QoS during nonproduction hours in a network.

Inbound QoS

This section explains how Riverbed inbound QoS works and how you configure it.

This section requires that you be familiar with QoS Concepts.

Introduction to Inbound QoS

Inbound QoS enables you to allocate bandwidth and prioritize traffic flowing from the WAN into the LAN network behind the SteelHead. This configuration provides the benefits of QoS for environments that cannot meet their QoS requirements with only outbound QoS.

Reasons to configure inbound QoS include the following:

• Many business applications, such as VoIP and desktop video conferencing, now run over any-to-any mesh topologies.

The traffic generated by these applications typically travels directly from one branch office to another; for example, if a user in Branch Office A calls a user in Branch Office B, the VoIP call is routed directly, without having to traverse the data center. This traffic might compete with other traffic that is coming from the data center or from other sites, but it bypasses any QoS that is deployed at those sites. As a result, there is no network location from which you can use outbound QoS to control all incoming traffic going to Branch Office B. The only place where you can control all incoming traffic is at the branch itself. You can use inbound QoS at Branch Office B to guarantee bandwidth for critical applications and slow down traffic from other, less critical applications.

• Software as a Service (SaaS) applications and public cloud services accessed over the internet.

These applications compete with recreational internet traffic for bandwidth at the branch office. When users watch online videos or browse social networking sites, business applications can struggle to get the resources they need. With inbound QoS, you can ensure that business applications have enough room to get through.

Inbound QoS is used the same way as outbound QoS: to prioritize traffic by site and by type of traffic, using rules and classes. You define the applications on the local SteelHead and then create a QoS profile that corresponds to your shaping and prioritization policies.

For information about how to configure inbound QoS, see the SteelHead Management Console User’s Guide.

Inbound QoS applies HFSC (Hierarchical Fair Service Curve) shaping policies to ingress traffic. This behavior addresses environments in which bandwidth constraints exist at the downstream location. The downstream SteelHead (where inbound QoS is enabled) dynamically signals these bandwidth constraints to the sender of the traffic. The sender slows down its throughput, and the traffic adheres to the configured inbound QoS rules. Like outbound QoS, inbound QoS is not a dual-ended SteelHead solution: a single SteelHead can control inbound WAN traffic on its own.

For information about the HFSC queueing technology, see Overview of Riverbed QoS and the SteelHead Management Console User’s Guide.
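Inbound shaping works because a TCP sender slows down when its packets are delayed or dropped. The following Python sketch illustrates the rate-limiting idea with a plain single-rate token bucket. This is a much simpler technique than HFSC, swapped in for illustration only, and it is not Riverbed's implementation. The class `TokenBucket`, its parameters, and the injectable clock are hypothetical, and the sketch assumes that refused packets are queued or dropped so that the sender backs off.

```python
import time


class TokenBucket:
    """Single-rate token bucket (illustrative only; NOT the HFSC scheduler)."""

    def __init__(self, rate_bps: float, burst_bytes: int, clock=time.monotonic):
        self.rate_bytes_per_s = rate_bps / 8.0  # refill rate in bytes per second
        self.capacity = float(burst_bytes)      # maximum burst size in bytes
        self.tokens = float(burst_bytes)        # start with a full bucket
        self.clock = clock                      # injectable clock for testing
        self.last = clock()

    def allow(self, packet_bytes: int) -> bool:
        """Return True if the packet conforms to the configured rate."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill tokens for the elapsed time, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_bytes_per_s)
        if self.tokens >= packet_bytes:
            self.tokens -= packet_bytes
            return True
        # Nonconforming packet: a shaper would queue or drop it, and the
        # TCP sender would eventually slow down (assumed behavior).
        return False


# Usage with a fake clock: limit a flow to 8 kbps (1,000 bytes/s).
now = [0.0]
bucket = TokenBucket(rate_bps=8000, burst_bytes=1500, clock=lambda: now[0])
print(bucket.allow(1500))  # True: the bucket starts full
print(bucket.allow(1500))  # False: no tokens left
now[0] = 1.5
print(bucket.allow(1500))  # True: 1.5 s refilled 1,500 bytes
```

HFSC adds a class hierarchy and separate service curves for bandwidth and delay on top of this basic idea of admitting traffic at a configured rate.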

RiOS 9.0 lets you apply inbound QoS per site (hierarchical inbound QoS), which earlier RiOS versions do not support.

Assigning an Inbound QoS Profile to a Site

You configure an inbound QoS profile the same way as an outbound QoS profile. Assigning an inbound QoS profile to a site, however, requires you to think backwards. For example, suppose you want to restrict certain traffic coming into the data center from a branch office. When you configure the data center SteelHead for inbound QoS, assign the inbound QoS profile to the site that sends the traffic (the branch office site). Do not assign the inbound QoS profile to the data center site itself on the data center SteelHead.

To assign an inbound QoS profile to a site

1. Choose Networking > Topology: Sites & Networks.

2. Click Edit Site in the Sites section.

3. In the QoS Profiles section of the configuration page, open the Inbound QoS Profile drop-down menu and select the profile for this site (see Figure: Edit an Existing Site).

Figure: Edit an Existing Site

LAN Bypass

Virtual in-path topologies in which LAN-bound traffic traverses the WAN interface might require you to configure the SteelHead to bypass LAN-bound traffic so that it is not subject to the maximum root bandwidth limit. Examples include WCCP deployments and deployments with a WAN-side default gateway. The LAN bypass feature enables you to exempt certain subnets from QoS enforcement. You can configure LAN bypass for both inbound and outbound QoS.

For information about LAN bypass, see the SteelHead Management Console User’s Guide.
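The effect of LAN bypass can be pictured as a subnet membership test performed before shaping. The following Python sketch assumes that a flow is exempt when its destination address falls inside a bypass subnet. The subnet list and the function `qos_action` are hypothetical; on a SteelHead you configure bypass subnets in the Management Console, not in code.

```python
import ipaddress

# Hypothetical LAN bypass list (configured in the Management Console in practice).
LAN_BYPASS_SUBNETS = [
    ipaddress.ip_network("10.1.0.0/16"),
    ipaddress.ip_network("10.2.5.0/24"),
]


def qos_action(dst_ip: str, bypass_subnets=LAN_BYPASS_SUBNETS) -> str:
    """Return 'bypass' if the destination is in a bypass subnet, else 'shape'.

    Assumption: matching uses the destination address only.
    """
    addr = ipaddress.ip_address(dst_ip)
    if any(addr in net for net in bypass_subnets):
        return "bypass"  # exempt from QoS enforcement and the root bandwidth limit
    return "shape"       # subject to normal QoS shaping


print(qos_action("10.1.4.20"))    # bypass
print(qos_action("192.168.1.1"))  # shape
```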

QoS for IPv6

IPv6 traffic is not currently supported for QoS shaping or AFE-based classification. If you enable QoS shaping for a specific interface, all IPv6 packets for that interface are classified to the default class.

You can mark IPv6 traffic with an IP ToS value. You can also configure the SteelHead to reflect an existing traffic class from the LAN side to the WAN side of the SteelHead.

For more information about IPv6, see IPv6.

QoS in Virtual In-Path and Out-of-Path Deployments

You can use QoS enforcement, on both inbound and outbound traffic, in virtual in-path deployments (for example, WCCP and PBR) and in out-of-path deployments. In both types of deployment, the SteelHead connects to the network through a single interface: the WAN interface for WCCP deployments, and the primary interface for out-of-path deployments. To enable QoS in these deployments:

• Configure subnet side rules that identify your LAN IP subnets as LAN side. Subnet side rules define which traffic on the single interface belongs to the LAN side.

For information about subnet side rules, see the SteelHead Management Console User’s Guide.

• Set the WAN throughput for the network interfaces to the total speed of the LAN and WAN interfaces or to the speed of the local link, whichever number is lower.

• Configure QoS as if you have deployed the SteelHead in a physical in-path mode.

Figure: Virtual In-Path Example shows two SteelHeads deployed virtual in-path. The LAN-side IP subnets (192.168.10.0/24 for the client-side SteelHead and 172.30.10.0/24 for the server-side SteelHead) must be configured as LAN-side subnets in the subnet side rules table.

Figure: Virtual In-Path Example
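Subnet side rules can be thought of as an ordered table that is evaluated top-down until the first match. The following Python sketch models the rules for the client-side SteelHead in the figure. It assumes that rules match on the packet's source address and that unmatched traffic is treated as WAN-side; the rule table and `packet_side` are hypothetical illustrations, not SteelHead configuration syntax.

```python
import ipaddress

# Hypothetical subnet side rules for the client-side SteelHead in the figure,
# evaluated top-down; the first matching rule wins.
SUBNET_SIDE_RULES = [
    (ipaddress.ip_network("192.168.10.0/24"), "LAN"),
    (ipaddress.ip_network("0.0.0.0/0"), "WAN"),  # catch-all
]


def packet_side(src_ip: str, rules=SUBNET_SIDE_RULES) -> str:
    """Classify a packet seen on the single interface as LAN- or WAN-side."""
    addr = ipaddress.ip_address(src_ip)
    for network, side in rules:
        if addr in network:
            return side
    return "WAN"  # no rule matched: treat as WAN-side (assumed default)


print(packet_side("192.168.10.25"))  # LAN
print(packet_side("172.30.10.7"))    # WAN
```

On the server-side SteelHead, the first rule would list 172.30.10.0/24 instead.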

QoS in Multiple SteelHead Deployments

You can use QoS when multiple SteelHeads are optimizing traffic for the same WAN link. In these cases, you must configure the QoS settings so that the SteelHeads share the available WAN bandwidth. For example, if traffic is to be load balanced evenly across two SteelHeads, then the maximum WAN bandwidth configured for each SteelHead is one-half of the total available WAN bandwidth. This scenario is often found in multiple SteelHead data protection deployments.
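The one-half rule for two evenly loaded SteelHeads generalizes to any number of appliances and to uneven load shares. The following Python sketch divides the shared WAN bandwidth in proportion to each SteelHead's expected share of the traffic. The function name and the proportional-split approach are illustrative assumptions; the guide itself only states the even-split case.

```python
from typing import List, Sequence


def per_appliance_wan_kbps(total_kbps: int, load_shares: Sequence[float]) -> List[int]:
    """Split the shared WAN bandwidth in proportion to each SteelHead's load share."""
    if not load_shares or any(share < 0 for share in load_shares):
        raise ValueError("load shares must be a non-empty list of non-negative numbers")
    total_share = sum(load_shares)
    if total_share == 0:
        raise ValueError("at least one load share must be positive")
    # Round down so the sum never exceeds the physical WAN bandwidth.
    return [int(total_kbps * share / total_share) for share in load_shares]


print(per_appliance_wan_kbps(20000, [1, 1]))  # [10000, 10000]
print(per_appliance_wan_kbps(30000, [2, 1]))  # [20000, 10000]
```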

For information about data protection deployments, see Designing for Scalability and High Availability.

QoS and Multiple WAN Interfaces

You can enable QoS on WAN interfaces using different bandwidths. For example, you can configure the uplink for the inpath0_0 interface for 10 Mbps to connect to a 10-Mbps terrestrial link and configure the uplink for inpath0_1 for 1 Mbps to connect to a 1-Mbps satellite backup link.

Keep in mind that the outbound WAN capacity limit applies per SteelHead, regardless of the number of interfaces. For example, on a SteelHead EX1160, QoS bandwidth is limited to 100 Mbps regardless of the number of interfaces installed or enabled for QoS.

The bandwidth and latency allocations apply across all interfaces. Continuing with the previous example, if you allocate 10% of the bandwidth to CIFS traffic, CIFS receives 10% of the 10-Mbps link (1 Mbps) and 10% of the 1-Mbps link (100 kbps).
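The per-interface arithmetic in the CIFS example can be written out as a small calculation. The following Python sketch applies one class percentage to every QoS-enabled interface. The function `class_share_kbps` is a hypothetical helper for checking your own numbers, not a SteelHead API.

```python
from typing import Dict


def class_share_kbps(interface_kbps: Dict[str, float], class_percent: float) -> Dict[str, float]:
    """Apply one class bandwidth percentage to every QoS-enabled interface."""
    return {name: kbps * class_percent / 100.0 for name, kbps in interface_kbps.items()}


uplinks = {"inpath0_0": 10000, "inpath0_1": 1000}  # kbps, from the example above
print(class_share_kbps(uplinks, 10))  # {'inpath0_0': 1000.0, 'inpath0_1': 100.0}
```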

Integrating SteelHeads into Existing QoS Architectures

This section describes how to integrate SteelHeads into existing QoS architectures.

When you integrate SteelHeads into your QoS architecture, you can:

• retain the original DSCP or IP precedence values.

• choose the DSCP or IP precedence values.

• retain the original destination TCP port.

• choose the destination TCP port.

• retain all of the original IP addresses and TCP ports.

You do not have to use all of the SteelHead functions on your optimized connections. You can selectively apply functions to different optimized traffic, based on attributes such as IP addresses, TCP ports, DSCP, VLAN tags, and payload.

WAN-Side Traffic Characteristics and QoS

When you integrate SteelHeads into an existing QoS architecture, it is helpful to understand how optimized and pass-through traffic appear to the WAN or any WAN-side infrastructure.

Figure: How Traffic Appears to the WAN When SteelHeads Are Present shows how traffic appears on the WAN when SteelHeads are present.

Figure: How Traffic Appears to the WAN When SteelHeads Are Present

When SteelHeads are present in a network:

• the optimized data for each LAN-side connection is carried on a unique WAN-side TCP connection.

• the IP addresses, TCP ports, and DSCP or IP precedence values of the WAN connections are determined by the SteelHead WAN visibility mode, and the QoS marking settings configured for the connection.

• the amount of bandwidth and delay assigned to traffic when you enable Riverbed QoS enforcement is determined by the Riverbed QoS enforcement configuration. This configuration applies to both pass-through and optimized traffic. However, this configuration is separate from configuring SteelHead WAN visibility modes.

For information about WAN visibility modes, see Overview of WAN Visibility.

QoS Integration Techniques

This section provides examples of different QoS integration techniques, depending on the environment.

These examples assume that the post-integration goal is to treat optimized and nonoptimized traffic the same way with respect to QoS policies. Alternatively, you might want to allocate different network resources to optimized traffic.

For information about QoS marking, see QoS Marking.

In networks in which both classification (or marking) and enforcement are performed on traffic after it passes through the SteelHead, you have the following configuration options:

• In a network in which classification and enforcement are based only on TCP ports, you can use port mapping or the port transparency WAN visibility mode.

• In a network in which classification and enforcement are based on IP addresses, you can use the full address transparency WAN visibility mode.

QoS Policy Differentiating Voice Versus Nonvoice Traffic

In some networks, QoS policies do not differentiate traffic that is optimized by the SteelHead. For example, a QoS policy that prioritizes only VoIP traffic and treats all other traffic the same is unaffected by the introduction of SteelHeads, because VoIP traffic is passed through the SteelHead. In these networks, you do not need to change the QoS configuration to maintain the existing policy, because the existing configuration treats all non-VoIP traffic identically, regardless of whether the SteelHead optimizes it.

SteelHead Honoring Premarked LAN-Side Traffic

Another example of a network that might not require QoS configuration changes to integrate SteelHeads is one in which traffic is marked with DSCP or ToS values before it reaches the SteelHead, and enforcement, based only on DSCP or ToS, takes place after the traffic passes the SteelHead. By default, the SteelHead reflects the DSCP or ToS value from the LAN side to the WAN side of an optimized connection.

For example, if you mark DSCP values at the source or on LAN-side switches and enforce QoS on WAN routers, the WAN routers detect the same DSCP values for all classes of traffic, whether optimized or not.
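Reflection works on the DSCP field, which occupies the upper six bits of the former IPv4 ToS byte; IP precedence is the upper three bits. The following Python sketch shows how the values relate and how a LAN-side DSCP value could be copied onto the WAN-side packet of an optimized connection. The function names are hypothetical, and leaving the two low-order (ECN) bits of the WAN-side packet unchanged is an assumption made for illustration.

```python
def dscp_from_tos(tos: int) -> int:
    """DSCP is the upper six bits of the former IPv4 ToS byte."""
    return (tos >> 2) & 0x3F


def precedence_from_tos(tos: int) -> int:
    """IP precedence is the upper three bits of the ToS byte."""
    return (tos >> 5) & 0x07


def reflect_dscp(lan_tos: int, wan_tos: int) -> int:
    """Copy the LAN-side DSCP into the WAN-side ToS byte.

    Illustrative assumption: the two low-order (ECN) bits of the
    WAN-side packet are left unchanged.
    """
    return (lan_tos & 0xFC) | (wan_tos & 0x03)


lan_tos = 0xB8                            # DSCP 46 (EF), typical for voice
print(dscp_from_tos(lan_tos))             # 46
print(precedence_from_tos(lan_tos))       # 5
print(hex(reflect_dscp(lan_tos, 0x01)))   # 0xb9
```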

SteelHead Remarking Traffic from the LAN Side

You can mark or remark a packet with a different DSCP or ToS value as it enters the SteelHead. This remarking process is sometimes necessary because an end host can set an inappropriate DSCP value for traffic that might otherwise receive a lower priority. In this scenario, the QoS enforcement remains the responsibility of the WAN router.
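Remarking can be modeled as a lookup in which a configured value overrides the host's marking and an unset value means "preserve". The following Python sketch illustrates this with a hypothetical per-application policy; the application names, the DSCP choices, and `marked_dscp` are assumptions, not SteelHead defaults.

```python
from typing import Dict, Optional

# Hypothetical marking policy keyed by application name;
# None means "preserve" (reflect the value set by the host).
MARKING_POLICY: Dict[str, Optional[int]] = {
    "voip": 46,    # EF
    "backup": 8,   # CS1: demote bulk traffic even if the host marked it higher
    "web": None,   # preserve
}


def marked_dscp(app: str, host_dscp: int, policy=MARKING_POLICY) -> int:
    """Return the DSCP value with which the packet leaves the SteelHead."""
    configured = policy.get(app)
    return host_dscp if configured is None else configured


print(marked_dscp("backup", 46))  # 8: the host's EF marking is overridden
print(marked_dscp("web", 26))     # 26: preserved
```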

SteelHead Enforcing the QoS Policy

Instead of enforcing the QoS policy on the WAN router, you can configure the SteelHead to enforce it. However, Riverbed recommends that you also maintain a QoS policy on the WAN router so that traffic quality is still guaranteed if the SteelHead becomes unavailable.

QoS Marking

This section describes how to use SteelHead QoS marking when integrating SteelHeads into an existing QoS architecture.

SteelHeads can retain or alter the DSCP or IP ToS value of both pass-through traffic and optimized traffic. The DSCP or IP ToS values can be set in the QoS profile per QoS class and per QoS rule or per application.

Note: You can enable QoS marking without enabling QoS shaping.

QoS reporting does not show any output if QoS marking is enabled without enabling QoS shaping. To get reporting for marked traffic, you must enable QoS shaping.

For example QoS marking configurations, see Configuring QoS Marking on SteelHeads.

Note: In RiOS 7.0, the DSCP or IP ToS value definition changed significantly from earlier versions of RiOS. If you are running an earlier version of RiOS, see an earlier version of the SteelHead Deployment Guide for instructions on how to configure the DSCP or IP ToS value.

QoS Marking for SteelHead Control Traffic

By default, out-of-band (OOB) control connections between SteelHeads (OOB splice) are not marked with a DSCP value. If you integrate a SteelHead into a network that provides multiple classes of service, SteelHead control traffic is therefore classified into the default class, which is usually treated with the lowest priority. If the link is congested, this classification can lead to packet loss and high latency for SteelHead control traffic, which impacts optimization performance.

For optimized connections (inner channel), the client-side SteelHead sets a configured DSCP value from the very start of the connection, according to the first fully matching QoS rule. Riverbed Application Flow Engine (AFE) cannot classify traffic on the first packets (TCP handshake), so only QoS rules based on the IP protocol header (IP address, TCP port number, UDP port number, and so on) match on the first packet (TCP SYN). On the server-side SteelHead, the DSCP marking value seen on the TCP SYN packet is reflected onto the TCP SYN-ACK packet, so the packets of the TCP session setup (TCP handshake) are correctly marked with the configured DSCP.
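The first-packet behavior can be sketched as a first-match search that skips any rule requiring AFE, because a TCP SYN carries no payload for AFE to inspect. In the following Python sketch, `QosRule`, `dscp_for_syn`, and the example rule names are hypothetical, and matching on destination port stands in for the full set of IP header fields.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class QosRule:
    name: str
    dscp: int
    dst_port: Optional[int] = None  # header field; None matches any port
    afe_app: Optional[str] = None   # application that only AFE can identify


def dscp_for_syn(dst_port: int, rules: Sequence[QosRule], default_dscp: int = 0) -> int:
    """Pick the DSCP for a TCP SYN using the first fully matching rule."""
    for rule in rules:
        if rule.afe_app is not None:
            # AFE needs payload, which a SYN does not carry, so this
            # rule cannot fully match the first packet.
            continue
        if rule.dst_port is None or rule.dst_port == dst_port:
            return rule.dscp
    return default_dscp


rules = [
    QosRule("saas-app", dscp=26, afe_app="ExampleSaaS"),  # hypothetical AFE rule
    QosRule("https", dscp=18, dst_port=443),
]
print(dscp_for_syn(443, rules))  # 18: the header-based rule matches the SYN
print(dscp_for_syn(80, rules))   # 0: falls through to the default
```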

For more information about AFE, see Application Flow Engine.

In RiOS versions earlier than 9.1, you used the Global DSCP feature to set a DSCP value on the first packets of a session (the TCP handshake). This feature is no longer needed. For more information about Global DSCP, see an earlier version of the SteelHead Deployment Guide.

If your existing network provides multiple classes of service based on DSCP values, and you are integrating a SteelHead into your environment, you can use the out-of-band (OOB) DSCP marking feature to prevent dropped packets and other undesired effects for SteelHead control traffic.

The OOB DSCP marking feature enables you to explicitly set a DSCP value for the control channel between SteelHeads (OOB splice).

To configure OOB DSCP marking, create a new rule in the QoS profile in use, which is based on the Riverbed control traffic application group, and configure the desired DSCP value.

Figure: Riverbed Control Traffic Application Group

If you want to set a DSCP value for only one direction, choose either the Riverbed Control Traffic (Client) or Riverbed Control Traffic (Server) application.

For more information about how to configure OOB DSCP marking, see the SteelHead Management Console User’s Guide.

QoS Marking Default Setting

By default, SteelHeads reflect the DSCP or IP ToS value found on pass-through traffic and optimized connections. The default DSCP or IP ToS settings are:

• preserve in the QoS class.

• preserve in the QoS rules.

• Any in the application rules configuration (this means do not change anything).

By default, the DSCP or IP ToS value on pass-through or optimized traffic is unchanged when it passes through the SteelHead.

If a DSCP or IP ToS value is set for certain traffic, this value is significant only for the SteelHead on which it is configured. Another SteelHead on the same network can set the DSCP or IP ToS value differently.

Figure: Local Significance of DSCP or IP ToS Value shows the local significance of DSCP or IP ToS values.

Figure: Local Significance of DSCP or IP ToS Value

QoS Marking Design Considerations

Consider the following when using QoS marking:

• You can use a DSCP or IP ToS value to classify traffic or even to define an application. Instead of specifying individual header or application flow rules, you can define an application based on an existing DSCP or IP ToS value. This approach simplifies the configuration when traffic is already marked before the SteelHead classifies it.

• You cannot use QoS marking for traffic exiting the LAN interface. The LAN interface reflects the QoS marking value it receives from the WAN interface, for both optimized and unoptimized traffic.

• QoS marking behavior is the same for in-path, virtual in-path, and out-of-path deployments. The one exception is that in a virtual in-path deployment you can set a DSCP or IP ToS value for traffic destined to the LAN, because only the WAN interface of the SteelHead is active in a virtual in-path deployment.

QoS Enforcement Best Practices

Riverbed recommends the following actions to ensure optimal performance with the least amount of initial and ongoing configuration:

• Configure QoS while the QoS functionality is disabled and only enable it after you are ready for the changes to take effect.

• Configure an uplink for the default class and assign a reasonable bandwidth to it.

The built-in default class initially has no uplinks configured, so it uses the physical bandwidth of the in-path interface. This can lead to significant, unintended oversubscription of the local site's uplink and can therefore undermine the minimum bandwidth guarantees of the remote sites.

A typical indication that you must adjust the default class uplink bandwidth is that traffic specified in the sites or QoS rules is slow during periods of congestion, while other traffic not specified in sites or QoS rules (for example, web browsing and routing updates) works fine.

• When configuring a profile, create a default class and point the default rule (Any) to the default class.

• Be aware that flows can be incorrectly classified if there are asymmetric routes in the network in which you have enabled the QoS features.
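The default class uplink recommendation above comes down to comparing the bandwidth the default class may use against the real WAN link. The following Python sketch performs that comparison; the function names are hypothetical, and the fallback to the in-path interface speed mirrors the behavior described for a default class without uplinks.

```python
from typing import Optional


def default_class_ceiling_kbps(uplink_kbps: Optional[int], inpath_speed_kbps: int) -> int:
    """Bandwidth the default class may use: its uplink, else the in-path link speed."""
    return inpath_speed_kbps if uplink_kbps is None else uplink_kbps


def default_class_oversubscribes(uplink_kbps: Optional[int],
                                 inpath_speed_kbps: int,
                                 wan_link_kbps: int) -> bool:
    """True if the default class could send more than the real WAN link carries."""
    return default_class_ceiling_kbps(uplink_kbps, inpath_speed_kbps) > wan_link_kbps


# A 1-Gbps in-path interface in front of a 20-Mbps WAN link:
print(default_class_oversubscribes(None, 1_000_000, 20_000))   # True: no uplink configured
print(default_class_oversubscribes(5_000, 1_000_000, 20_000))  # False: 5-Mbps uplink
```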

Upgrading to RiOS Version 9.0

RiOS 9.0 provides a mechanism to convert the QoS configuration of earlier RiOS versions into the RiOS 9.0 configuration format. The conversion is transparent to the user and takes place during the first boot of RiOS 9.0 on a SteelHead.

The QoS concepts and resulting configuration in RiOS 9.0 are very different from those of earlier RiOS versions. In some cases, creating a new QoS configuration in RiOS 9.0 can be easier than migrating an existing one. Handle any migration of an advanced QoS configuration from earlier RiOS versions with care.

Riverbed highly recommends that you closely analyze a migrated QoS configuration after you upgrade a SteelHead to RiOS 9.0.

For more information about migrations, see RiOS 9.0 QoS Migration at https://splash.riverbed.com/docs/DOC-5443.

Guidelines for the Maximum Number of QoS Classes, Sites, and Rules

The number of QoS classes, sites, and rules you can create on a SteelHead depends on the appliance model number, the traffic flow, and other RiOS features you enable.

Important: If you are using a release earlier than RiOS 8.5.1, some of the features described in this chapter might not be applicable. For information about QoS before RiOS 8.5.1, see earlier versions of the SteelHead Deployment Guide on the Riverbed Support site at https://support.riverbed.com.

Riverbed recommends that you do not exceed the guidelines in this section.

RiOS 7.0.3 and later enforce a maximum configurable root bandwidth per appliance model. The model-specific limits were introduced in RiOS 6.5.4 and 7.0.1. When you save a configuration in which the sum of the configured QoS interface bandwidths exceeds the model-specific limit, RiOS displays a warning. If you receive the warning, reduce the sum of the configured QoS interface bandwidths to a value lower than or equal to the model-specific limit, and save the configuration again.

Riverbed strongly recommends you configure the bandwidth at or below the appliance limit, because problems arise when you exceed it. Upgrading a SteelHead to RiOS 7.0.3 does not automatically fail when the configuration has a greater QoS bandwidth limit than the appliance supports. However, after the upgrade, RiOS begins enforcing the bandwidth limits and disallows any QoS configuration changes. This limitation could result in having to reconfigure all QoS policies to accommodate the bandwidth limit.
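Before upgrading or saving a configuration, you can check the same condition that triggers the warning. The following Python sketch sums the configured interface bandwidths and compares the total with a model limit. The function name and the 100-Mbps limit are hypothetical placeholders; take the real limit for your model from the specification sheet.

```python
from typing import Dict, List


def qos_bandwidth_warnings(interface_kbps: Dict[str, int], model_limit_kbps: int) -> List[str]:
    """Warn if the summed QoS interface bandwidth exceeds the model limit."""
    total = sum(interface_kbps.values())
    if total > model_limit_kbps:
        return [f"configured QoS bandwidth {total} kbps exceeds the "
                f"model limit of {model_limit_kbps} kbps"]
    return []  # at or below the limit: no warning


# Hypothetical 100-Mbps model limit.
print(qos_bandwidth_warnings({"inpath0_0": 60000, "inpath0_1": 50000}, 100000))
print(qos_bandwidth_warnings({"inpath0_0": 50000, "inpath0_1": 50000}, 100000))  # []
```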

In RiOS 8.5.1 and later, QoS limits are no longer enforced, except for the QoS bandwidth limit. To reduce the risk of exhausting system resources, stay within these safeguard limits:

• Maximum number of total rules: 2000

• Maximum number of sites in basic outbound QoS mode: 100

• Maximum number of sites in advanced outbound QoS mode: 200

In RiOS 6.5 and later, QoS performance depends on the number of new connections per second. The guidelines are as follows:

• Desktop models: 200 new connections per second

• 1U models: 500 new connections per second

• 3U models: 1,000 new connections per second

Important: Exceeding the recommended limits can lead to severe delays when you change your Riverbed QoS configuration or boot the SteelHead.
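The safeguard limits and connection-rate guidelines above can be checked against a planned configuration before you apply it. The following Python sketch encodes the numbers listed in this section. The function name, its parameters, and the form-factor keys are hypothetical, and the check only flags values that exceed the guidelines; it does not predict actual performance.

```python
from typing import List

# Values from the guidelines above.
MAX_RULES = 2000
MAX_SITES = {"basic": 100, "advanced": 200}
MAX_NEW_CONN_PER_SEC = {"desktop": 200, "1U": 500, "3U": 1000}


def guideline_violations(num_rules: int, num_sites: int, qos_mode: str,
                         form_factor: str, new_conn_per_sec: int) -> List[str]:
    """List the safeguard limits and guidelines a planned configuration exceeds."""
    problems = []
    if num_rules > MAX_RULES:
        problems.append("rules")
    if num_sites > MAX_SITES[qos_mode]:
        problems.append("sites")
    if new_conn_per_sec > MAX_NEW_CONN_PER_SEC[form_factor]:
        problems.append("connection rate")
    return problems


print(guideline_violations(150, 120, "basic", "1U", 400))     # ['sites']
print(guideline_violations(150, 120, "advanced", "1U", 400))  # []
```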

For more information about the bandwidth limits and the Riverbed recommended optimal performance guidelines for the SteelHead models, see

http://www.riverbed.com/content/dam/riverbed-www/global/en_US/Documents/fpo/Spec+Sheet+-+Steelhead+Family+-+05.06.2015.pdf.