Configuring in-path rules

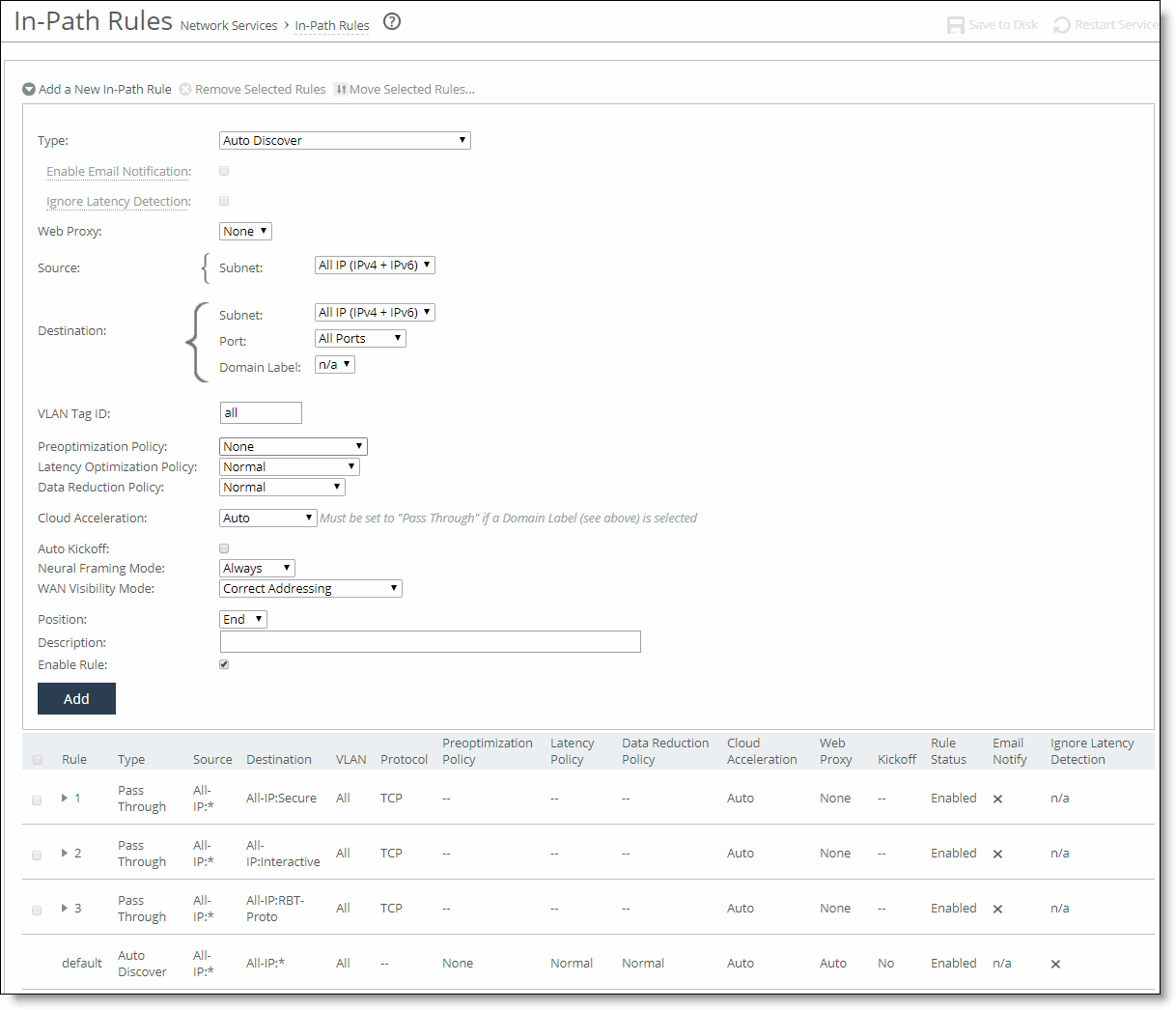

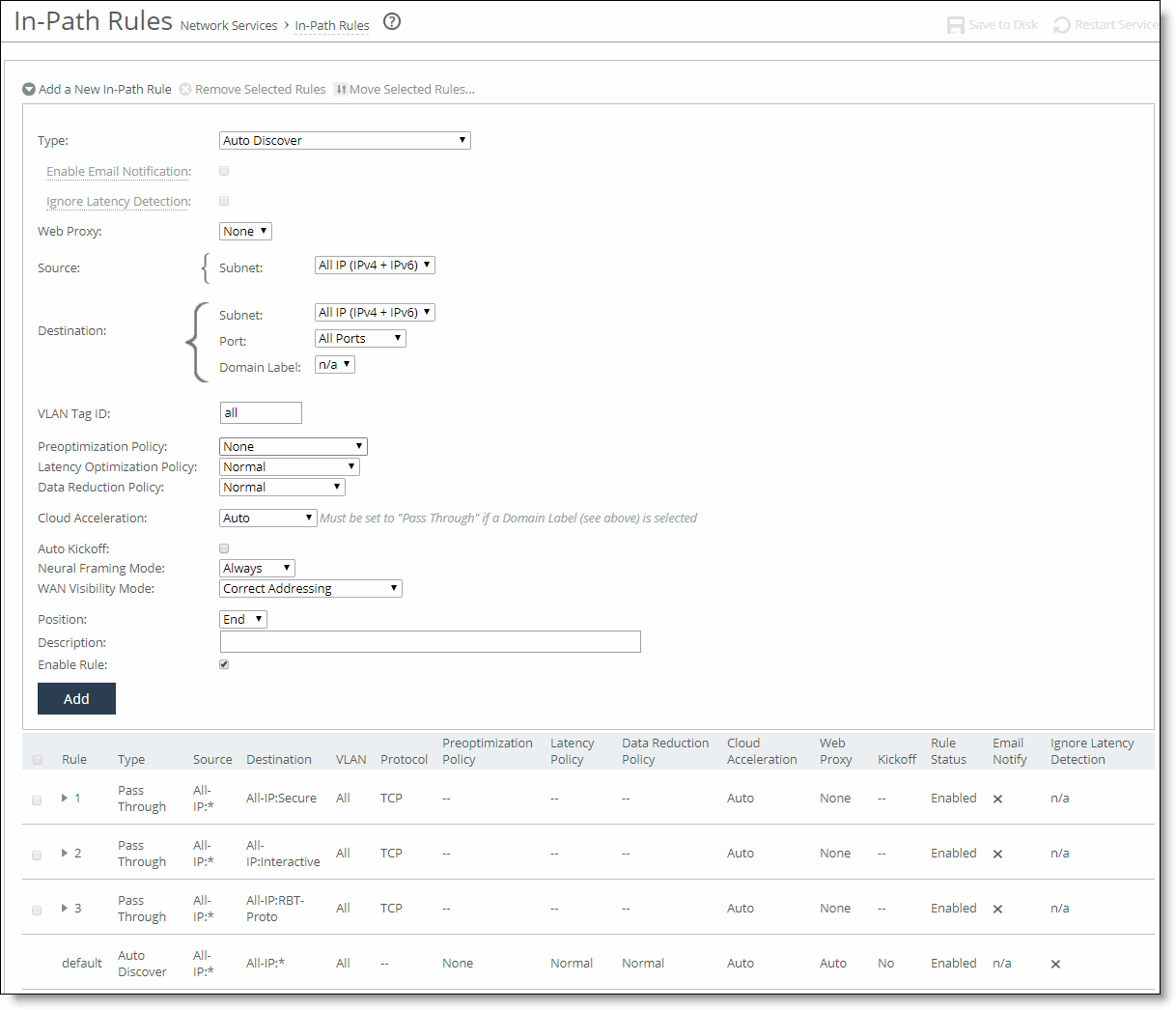

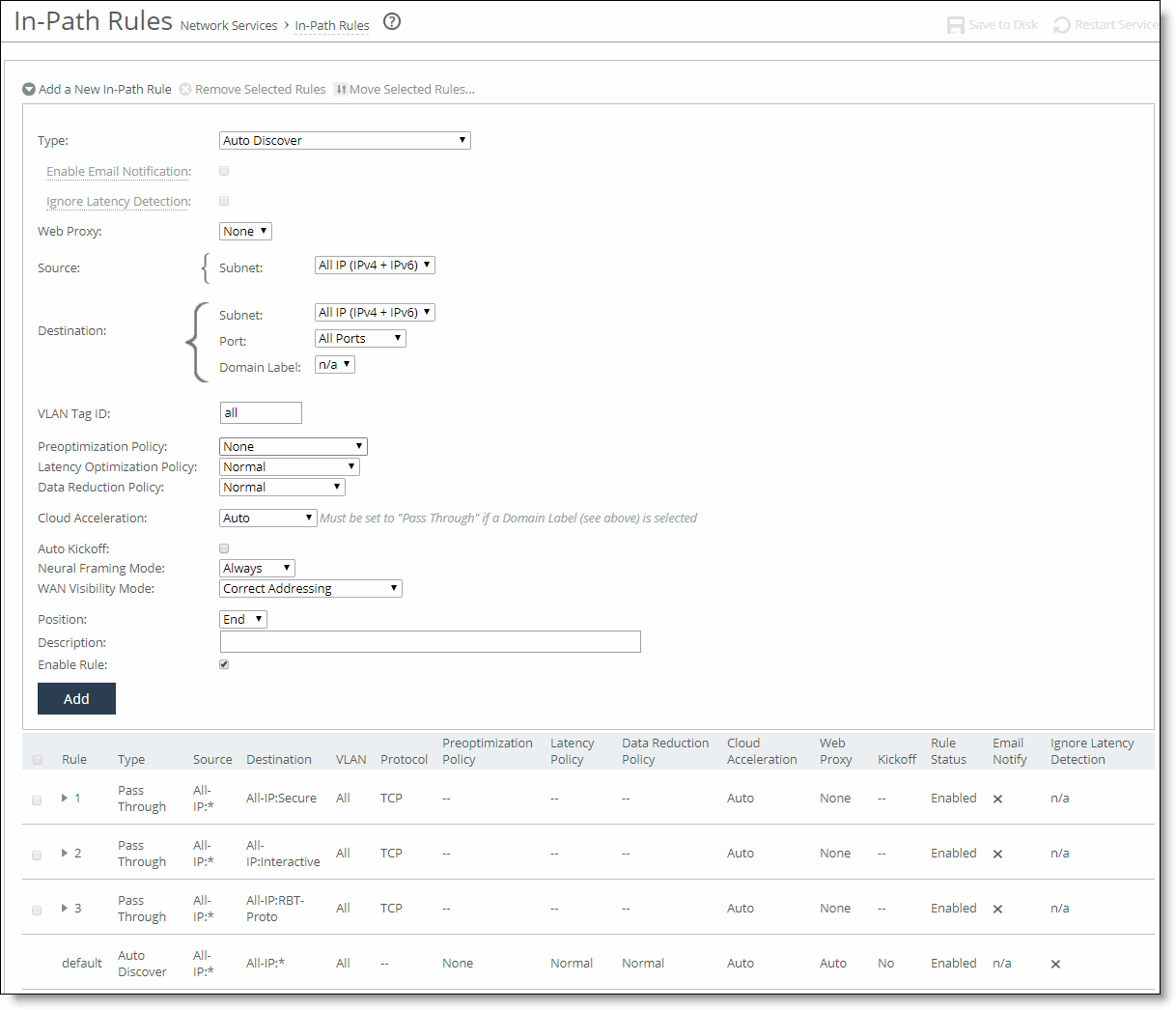

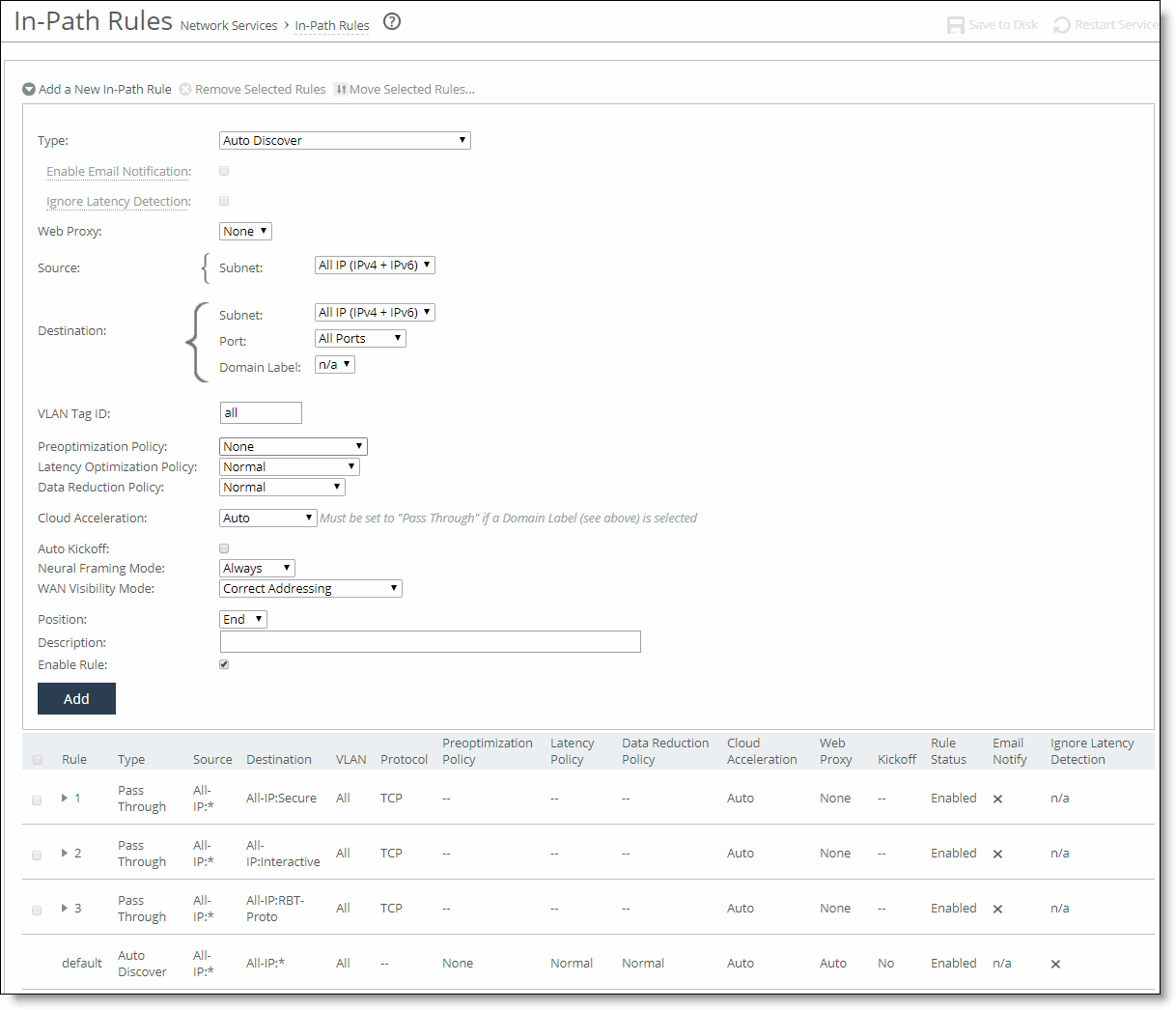

You review, add, edit, and remove in-path rules in the Optimization > Network Services: In-Path Rules page.

In-Path Rules page

The In-Path Rules table lists the order and properties of the rules set for the running configuration.

For an overview of in-path rules, see About In-Path Rules. For details on IPv6 deployment options, see the SteelHead Deployment Guide.

In-path rules settings

This section describes settings for in-path rules.

The default rule, Auto, which optimizes all traffic that has not been selected by another rule, can’t be removed and is always listed last.

In RiOS 8.5 and later, the default rule maps to all IPv4 and IPv6 addresses: All IP (IPv4 + IPv6). The default rule for TCP traffic, either IPv4 or IPv6, attempts autodiscovery with correct addressing as the WAN visibility mode. For details on IPv6 deployment options, see the SteelHead Deployment Guide.
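The ordering described above, where user-defined rules are evaluated top to bottom and the default Auto rule always sits last, can be sketched as a small first-match model in Python. The `Rule` class, its fields, and the rule-type strings are hypothetical names for illustration only; RiOS does not expose this as an API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


# Hypothetical model of the in-path rules table; not a RiOS API.
@dataclass
class Rule:
    name: str
    rule_type: str                    # e.g. "auto", "pass-through", "fixed-target"
    matches: Callable[[Dict], bool]   # predicate over a connection's attributes


def default_auto_rule() -> Rule:
    # The default rule covers All IP (IPv4 + IPv6), so it matches every connection.
    return Rule("default", "auto", lambda conn: True)


def build_table(user_rules: List[Rule]) -> List[Rule]:
    # User rules keep their configured order; the default Auto rule is always last.
    return list(user_rules) + [default_auto_rule()]


def first_match(table: List[Rule], conn: Dict) -> Rule:
    # Evaluate top to bottom and stop at the first rule that matches.
    for rule in table:
        if rule.matches(conn):
            return rule
    raise LookupError("no rule matched; a table from build_table always matches")
```

A rule placed below a broader rule that already matches the same traffic is never reached, so the position of each rule in the table matters as much as its settings.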

Type

Select one of these rule types from the drop-down list:

• Auto-Discover—Uses the autodiscovery process to determine if a remote SteelHead is able to optimize the connection attempting to be created by this SYN packet. By default, Auto-Discover is applied to all IP addresses and ports that aren’t secure, interactive, or default Riverbed ports. Defining in-path rules modifies this default setting.

• Fixed-Target—Skips the autodiscovery process and uses a specified remote SteelHead as an optimization peer.

You must specify at least one remote target SteelHead (and, optionally, which ports and backup SteelHeads), and add rules to specify the network of servers, ports, port labels, and out-of-path SteelHeads to use.

In RiOS 8.5 and later, a fixed-target rule enables you to optimize traffic end to end using IPv6 addresses. To do so, change the All IP (IPv4 + IPv6) setting to All IPv6.

If you don’t change to All IPv6, use specific source and destination IPv6 addresses. The inner channel between SteelHeads forms a TCP connection using the manually assigned IPv6 address. This method is similar to an IPv4 fixed-target rule, and you configure it the same way.

• Fixed-Target (Packet Mode Optimization)—Skips the autodiscovery process and uses a specified remote SteelHead as an optimization peer to perform bandwidth optimization on TCPv4, TCPv6, UDPv4, or UDPv6 connections.

Packet-mode optimization rules support both physical in-path and master/backup SteelHead configurations.

You must specify which TCP or UDP traffic flows need optimization, at least one remote target SteelHead, and, optionally, which ports and backup SteelHeads to use.

In addition to adding fixed-target packet-mode optimization rules, you must go to Optimization > Network Services: General Service Settings, enable packet-mode optimization, and restart the optimization service.

Packet-mode optimization rules are unidirectional; a rule on the client-side SteelHead optimizes traffic to the server only. To optimize bidirectional traffic, define two rules:

– A fixed-target packet-mode optimization rule on the client-side SteelHead to the server.

– A fixed-target packet-mode optimization rule on the server-side SteelHead to the client.
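As a sketch of the two-rule requirement, the hypothetical helper below builds the client-side and server-side rule definitions for one pair of sites. `PacketModeRule` and its fields are illustrative names, not RiOS configuration keys; each rule uses local subnets rather than All IP.

```python
from dataclasses import dataclass
from typing import List


# Hypothetical description of a fixed-target packet-mode rule; field names are illustrative.
@dataclass(frozen=True)
class PacketModeRule:
    appliance: str   # SteelHead the rule is configured on
    src: str         # source subnet
    dst: str         # destination subnet
    target: str      # remote target SteelHead (optimization peer)


def bidirectional_rules(client_sh: str, server_sh: str,
                        client_net: str, server_net: str) -> List[PacketModeRule]:
    # Packet-mode rules are unidirectional, so bidirectional optimization
    # needs one rule on each side, each pointing at the opposite peer.
    return [
        PacketModeRule(client_sh, client_net, server_net, target=server_sh),
        PacketModeRule(server_sh, server_net, client_net, target=client_sh),
    ]
```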

Packet-mode optimization rules perform packet-by-packet optimization, as opposed to traffic-flow optimization. After you create the in-path rule to intercept the connection, the traffic flows enter the SteelHead. The SteelHead doesn’t terminate the connection; instead, it rearranges the packet headers and payload for SDR optimization, applies SDR optimization, and sends the packets through a TCPv4 or TCPv6 channel to the peer SteelHead. The peer SteelHead decodes the packets and routes them to the destination server. The optimized packets are sent to the peer through a dedicated channel that depends on which in-path rule the packet's flow matched.

To view packet-mode optimized traffic, choose Reports > Networking: Current Connections or Connection History. You can also enter the show flows CLI command at the system prompt.

• Pass-Through—Allows the SYN packet to pass through the SteelHead unoptimized. No optimization is performed on the TCP connection initiated by this SYN packet. You define pass-through rules to exclude subnets from optimization. Traffic is also passed through when the SteelHead is in bypass mode. (Pass-through of traffic might occur because of in-path rules or because the connection was established before the SteelHead was put in place or before the optimization service was enabled.)

• Discard—Drops the SYN packets silently. The SteelHead filters out traffic that matches the discard rules. This process is similar to how routers and firewalls drop disallowed packets: the connection-initiating device has no knowledge that its packets were dropped until the connection times out.

• Deny—Drops the SYN packets, sends a message back to its source, and resets the TCP connection being attempted. Using an active reset process rather than a silent discard allows the connection initiator to know that its connection is disallowed.
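The practical difference between these rule types is what happens to the SYN packet. The following Python sketch summarizes that mapping; the enum and its value strings are hypothetical labels, not RiOS terms. Packet-mode rules are left out because they also handle UDP flows, which have no SYN.

```python
from enum import Enum


class SynAction(Enum):
    OPTIMIZE = "optimize"        # Auto-Discover / Fixed-Target
    FORWARD = "forward"          # Pass-Through: forward the SYN unoptimized
    DROP_SILENT = "drop"         # Discard: no feedback to the initiator
    DROP_AND_RESET = "reset"     # Deny: drop, notify the source, reset the connection


_ACTIONS = {
    "auto-discover": SynAction.OPTIMIZE,
    "fixed-target": SynAction.OPTIMIZE,
    "pass-through": SynAction.FORWARD,
    "discard": SynAction.DROP_SILENT,
    "deny": SynAction.DROP_AND_RESET,
}


def syn_action(rule_type: str) -> SynAction:
    return _ACTIONS[rule_type]


def initiator_learns_immediately(rule_type: str) -> bool:
    # Only Deny tells the initiator right away; with Discard it waits for a timeout.
    return syn_action(rule_type) is SynAction.DROP_AND_RESET
```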

Enable email notification

Allows notification emails to be sent when this in-path rule is configured. Reminder emails are also sent every 15 days.

In addition to selecting this check box, make or verify the following changes in the Administration > System Settings: Email page to enable email notifications:

• Select the Send Reminder of Passthrough Rules via Email check box.

• Select the Report Events via Email check box and specify an email address.

This field is active only if you specify a pass-through rule. You cannot create notifications for other types of rules.

You can select one or more pass-through rules for notification. Notifications are sent only for those rules that have this check box selected.

To change the frequency of reminder emails, enter the email notify passthrough rule notify-timer <notification-time-in-days> command. For more information, see the Riverbed Command-Line Interface Reference Manual.

To disable reminder emails for pass-through rules, clear the Send Reminder of Passthrough Rules via Email check box in the Administration > System Settings: Email page or enter the no email notify passthrough rule command.

Ignore latency detection

Does not perform latency detection for this in-path rule when global latency detection is enabled.

Web proxy

Enable on a client-side appliance with Auto Discover and Pass-Through rules to use a single-ended web proxy to transparently intercept all traffic bound to the internet. Enabling the web proxy improves performance by providing optimization services such as web object caching and SSL decryption to enable content caching and logging services.

Because this is a client-side feature, it’s controlled and managed from a SteelCentral Controller for SteelHead (SCC). You can configure the in-path rule on the client-side SteelHead running the web proxy or on the SCC. You must also enable the web proxy globally on the SCC and add domains to the global HTTPS whitelist. For details, see the SteelCentral Controller for SteelHead User Guide.

You can use the same SteelHead for optimizing dual-ended connections (between clients and servers in the data center) and web proxy connections destined for internet-based servers.

Web object caching includes all objects delivered through HTTP(S) that can be cached, including large video objects like static video on demand (VoD) objects and YouTube video. YouTube video caching is enabled by default.

The number of objects that can be cached is limited only by the total available cache space, determined by the SteelHead model. The cache sizes range from 50 GB to 500 GB.

The maximum size of a single object is unlimited. An object remains in the cache for the amount of the time specified in the cache control header. When the time limit expires, the SteelHead evicts the object from the cache.
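Cache lifetime is driven by the cache control header. A minimal sketch, assuming the lifetime comes from the standard `max-age` directive of an HTTP `Cache-Control` header, is shown below; the function names are hypothetical, and the handling of a missing directive is an assumption rather than documented SteelHead behavior.

```python
import re
from typing import Optional

# Matches a max-age directive anywhere in a comma-separated Cache-Control value.
_MAX_AGE = re.compile(r'(?:^|,)\s*max-age\s*=\s*"?(\d+)"?\s*(?:,|$)', re.IGNORECASE)


def max_age_seconds(cache_control: str) -> Optional[int]:
    """Return the max-age directive (in seconds) from a Cache-Control value, if any."""
    m = _MAX_AGE.search(cache_control)
    return int(m.group(1)) if m else None


def is_expired(stored_at: float, now: float, cache_control: str) -> bool:
    """True when the object has outlived its max-age and should be evicted."""
    age_limit = max_age_seconds(cache_control)
    if age_limit is None:
        return True  # assumption: without max-age, the object is not kept
    return now - stored_at >= age_limit
```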

The proxy cache is separate from the RiOS data store. When objects for a given website are already present in the cache, the system terminates the connection locally and serves the content from the cache. This saves the connection setup time and also reduces the bytes to be fetched over the WAN.

The proxy cache is persistent; its contents remain intact after service restarts and appliance reboots.

Select one of these options from the drop-down list:

• None—Do not direct traffic through the web proxy.

• Force—Select with a pass-through rule to direct any private or intranet IP address and port matching this rule through the web proxy. You can also specify port labels to proxy. When enabled, the full and port transparency WAN visibility modes have no impact.

• Auto—Automatically directs all internet-bound traffic destined to public IP addresses on ports 80 and 443 through the web proxy. This is the default setting. Only IPv4 traffic is supported.

When enabled on an Auto Discover rule, and the SteelHead is prioritizing the traffic through the web proxy, the full or port transparency WAN visibility modes have no impact. When the traffic can’t be prioritized through the web proxy, autodiscovery occurs and the full or port transparency modes are used.

You can enable the web proxy in a single-ended or asymmetric SteelHead deployment model. A server-side SteelHead is not required.

The client-side SteelHead must be able to access internet traffic from the in-path interface. For the interface that is configured to access the internet, choose Networking > In-Path Interfaces: In-Path Interface Settings, and add the in-path gateway IP address. The xx60 models don’t support this feature. VCX-10 through VCX-90 SteelHead-v models running RiOS 9.6 and later software support Web Proxy; other models do not.

You can’t enable the web proxy with these rule types:

• Fixed-target

• Fixed-target packet mode optimization

• Discard

• Deny
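The web proxy constraints above can be expressed as two checks: whether the rule type allows the web proxy at all, and whether a connection qualifies under the Auto option. This is a hypothetical Python sketch; it approximates "public IP address" with the `is_global` property from the standard `ipaddress` module, which might not match exactly how the SteelHead classifies addresses.

```python
import ipaddress

# Rule types that can't be combined with the web proxy, per this page.
_INCOMPATIBLE = {"fixed-target", "fixed-target-packet-mode", "discard", "deny"}


def web_proxy_allowed(rule_type: str) -> bool:
    return rule_type not in _INCOMPATIBLE


def auto_mode_proxies(dst_ip: str, dst_port: int) -> bool:
    """Approximate the Auto option: internet-bound IPv4 traffic on ports 80 and 443.

    Assumption: "public IP address" is approximated with ipaddress.is_global.
    """
    addr = ipaddress.ip_address(dst_ip)
    if addr.version != 4:          # Auto supports IPv4 traffic only
        return False
    return addr.is_global and dst_port in (80, 443)
```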

To view the connections going through the web proxy, choose Reports > Networking: Current Connections on the client-side SteelHead. The report shows the optimized HTTP (destination port 80) connections with a W in the connection type (CT) column and Web Proxy in the Application column.

Source

Subnet—Specify the subnet IP address and netmask for the source network:

• All IP (IPv4 + IPv6)—Maps to all IPv4 and IPv6 networks.

• All IPv4—Maps to 0.0.0.0/0.

• All IPv6—Maps to ::/0.

• IPv4—Prompts you for a specific IPv4 subnet address. Use this format for an individual subnet IP address and netmask: xxx.xxx.xxx.xxx/xx

• IPv6—Prompts you for a specific IPv6 subnet address. Use this format for an individual subnet IP address and netmask: x:x:x::x/xxx
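The Subnet choices map directly onto IP networks. The sketch below uses Python's standard `ipaddress` module to turn each choice into the networks it covers and to test an address against it. The selector strings and function names are hypothetical, and parsing with `strict=False` (which accepts host bits, as in 10.11.10.10/24) is an assumption about how the field is interpreted.

```python
import ipaddress
from typing import List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def parse_selector(selector: str) -> List[Network]:
    """Turn a Source/Destination subnet setting into the networks it covers."""
    if selector == "All IP (IPv4 + IPv6)":
        return [ipaddress.ip_network("0.0.0.0/0"), ipaddress.ip_network("::/0")]
    if selector == "All IPv4":
        return [ipaddress.ip_network("0.0.0.0/0")]
    if selector == "All IPv6":
        return [ipaddress.ip_network("::/0")]
    # Specific subnet in address/prefix form, e.g. 10.1.0.0/16 or 2001:db8::/32.
    # strict=False tolerates host bits in the address, e.g. 10.11.10.10/24.
    return [ipaddress.ip_network(selector, strict=False)]


def selector_matches(selector: str, ip: str) -> bool:
    addr = ipaddress.ip_address(ip)
    # Membership tests across IP versions return False rather than raising.
    return any(addr in net for net in parse_selector(selector))
```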

In a virtual in-path configuration using packet-mode optimization, don’t use the wildcard All IP option for both the source and destination IP addresses on the server-side and client-side SteelHeads. Doing so can create a loop between the SteelHeads if the server-side SteelHead forms an inner connection with the client-side SteelHead before the client-side SteelHead forms an inner connection with the server-side SteelHead. Instead, configure the rule using the local subnet on the LAN side of the SteelHead.

When creating a fixed-target packet-mode optimization rule, you must configure an IPv6 address and route for each interface, unless you are optimizing UDP traffic.

Destination

Subnet—Specify the subnet IP address and netmask for the destination network:

• All IP (IPv4 + IPv6)—Maps to all IPv4 and IPv6 networks.

• All IPv4—Maps to 0.0.0.0/0.

• All IPv6—Maps to ::/0.

• IPv4—Prompts you for a specific IPv4 address. Use this format for an individual subnet IP address and netmask: xxx.xxx.xxx.xxx/xx

• IPv6—Prompts you for a specific IPv6 address. Use this format for an individual subnet IP address and netmask: x:x:x::x/xxx

• Host Label—Choose a destination host label to selectively optimize connections to specific services. A host label includes a fully qualified domain name (hostname) and/or a list of subnets.

Host labels replace the destination. When you select a host label, RiOS ignores any destination IP address specified within the in-path rule.

The Management Console dims the host label selection when there aren’t any host labels.

Host labels aren’t compatible with IPv6. When you add a host label to an in-path rule, you must change the source to All IPv4 or specify an IPv4 subnet.

You can use both host labels and domain labels within a single in-path rule.

The rules table shows any host label, domain label, and/or port label name in use in the Destination column.

• SaaS Application—Defines an in-path rule for SaaS optimization through SteelConnect Manager. When you choose SaaS Application, you also need to specify an application (such as SharePoint for Business) or an application group (such as Microsoft Office 365).

Only applications set up for SaaS optimization on the associated SteelConnect Manager are available.

In a virtual in-path configuration using packet-mode optimization, don’t use the wildcard All IP option for both the source and destination IP addresses on the server-side and client-side SteelHeads. Doing so can create a loop between the SteelHeads if the server-side SteelHead forms an inner connection with the client-side SteelHead before the client-side SteelHead forms an inner connection with the server-side SteelHead. Instead, configure the rule using the local subnet on the LAN side of the SteelHead.

When creating a fixed-target packet-mode optimization rule, you must configure an IPv6 address and route for each interface.
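The host label notes above amount to a few validation checks on a rule. The following hypothetical sketch reports problems with a rule's source and host label, and shows that a host label replaces the destination. The function names, the `host-label:` prefix, and the list of defined labels are illustrative assumptions.

```python
import ipaddress
from typing import List, Optional


def validate_host_label_rule(source: str, host_label: Optional[str],
                             defined_labels: List[str]) -> List[str]:
    """Return problems with a rule's host label settings (empty list if valid)."""
    problems: List[str] = []
    if host_label is None:
        return problems
    if host_label not in defined_labels:
        problems.append(f"unknown host label {host_label!r}")
    if source == "All IPv4":
        return problems
    try:
        net = ipaddress.ip_network(source, strict=False)
    except ValueError:
        net = None  # e.g. "All IP (IPv4 + IPv6)" or "All IPv6"
    if net is None or net.version != 4:
        problems.append("host labels require an IPv4 source (All IPv4 or an IPv4 subnet)")
    return problems


def effective_destination(dest_subnet: str, host_label: Optional[str]) -> str:
    # A host label replaces the destination; any destination IP in the rule is ignored.
    return f"host-label:{host_label}" if host_label else dest_subnet
```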

Port—Select All Ports, Specific Port, or Port Label. Select All Ports to use all ports, which is the default setting. For Specific Port, specify the destination port number. Valid port numbers are from 1 to 65535. When you select Port Label, a list of port labels appears. Select a label from the drop-down list.

The rules table shows any host label, domain label, and/or port label name in use in the Destination column. When the port is using the default setting of all ports, * appears in the Destination column.

See Default ports for a description of the SteelHead default ports.

Domain Label—Select a domain label to optimize a specific service or application with an Auto-Discover, Pass-Through, or Fixed-Target rule. Domain labels are names given to sets of hostnames to streamline configuration.

An in-path rule with a domain label uses two layers of match conditions. The in-path rule still sets a destination IP address and subnet (or uses a host label or port). Traffic that matches the destination must also be going to a domain that matches an entry in the domain label: the connection must match both the destination and the domain label. When the entries in the domain label don’t match, the system looks to the next matching rule. Exceptions are listed in the notes that follow.

Choose Networking > App Definitions: Domain Labels to create a domain label.

You can use both host and domain labels within a single in-path rule.

The rules table shows any host label, domain label, and/or port label name in use in the Destination column.

Domain labels are compatible with IPv4 only. Both the server-side and the client-side SteelHeads must be running RiOS 9.2 or later.

Domain labels apply only to HTTP and HTTPS traffic. Therefore, when you add a domain label to an in-path rule and set the destination port to All, the in-path rule defaults to ports HTTP (80) and HTTPS (443) for optimization.

To specify another port or port range, use the Specific Port option instead of All Ports.
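The two-layer match and the port defaults can be sketched together. In the hypothetical Python model below, a connection must first match the destination subnet and port; if the rule has a domain label, the requested hostname must also match, otherwise the connection falls through to the next rule. Port labels are not modeled, and the wildcard syntax for domain entries (`*.example.com`) is an assumption, not a documented format.

```python
import ipaddress
from typing import Iterable, Optional, Set


def effective_ports(port_setting: str, has_domain_label: bool) -> Optional[Set[int]]:
    """Ports a rule applies to; None means every port."""
    if port_setting == "all":
        # Domain labels cover only HTTP and HTTPS, so "All" narrows to 80 and 443.
        return {80, 443} if has_domain_label else None
    port = int(port_setting)
    if not 1 <= port <= 65535:
        raise ValueError("valid port numbers are 1 to 65535")
    return {port}


def domain_matches(hostname: str, entries: Iterable[str]) -> bool:
    # Assumption: an entry matches exactly, or "*.example.com" matches its subdomains.
    host = hostname.lower().rstrip(".")
    for entry in entries:
        entry = entry.lower()
        if entry.startswith("*.") and host.endswith(entry[1:]):
            return True
        if host == entry:
            return True
    return False


def rule_matches(dst_ip: str, dst_port: int, hostname: str, dest_subnet: str,
                 port_setting: str, domain_label: Optional[Set[str]]) -> bool:
    # Layer 1: the destination subnet and port must match.
    if ipaddress.ip_address(dst_ip) not in ipaddress.ip_network(dest_subnet, strict=False):
        return False
    ports = effective_ports(port_setting, domain_label is not None)
    if ports is not None and dst_port not in ports:
        return False
    # Layer 2: with a domain label, the hostname must match too;
    # otherwise the connection falls through to the next rule.
    if domain_label is not None:
        return domain_matches(hostname, domain_label)
    return True
```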

When you add a domain label to an in-path rule that has cloud acceleration enabled, the system automatically sets cloud acceleration to Pass Through, and connections to the subscribed SaaS platform are no longer optimized by the Akamai network. However, you can add in-path rules so that other SteelHeads in the network optimize SaaS connections.

To allow the Akamai network to optimize SaaS connections, complete one of the following tasks:

• Create an in-path rule with Cloud Acceleration set to Auto and specify the _cloud-accel-saas_ host label. This label automatically detects the IP addresses being used by SaaS applications. See Using the _cloud-accel-saas_ host label for details.

• Place domain label rules lower than cloud acceleration rules in your rule list so the cloud rules match before the domain label rules.

For a complete list of domain label compatibility and dependencies, see Configuring domain labels.

Target Appliance IP Address

Specify the target appliance address for a fixed-target rule. When the protocol is TCP and you don’t specify an IP address, the rule defaults to all IPv6 addresses.

Port—Specify the target port number for a fixed-target rule.

Backup Appliance IP Address

Specify the backup appliance address for a fixed-target rule.

Port—Specify the backup destination port number for a fixed-target rule.

VLAN tag ID

Specify a VLAN identification number from 0 to 4094, enter all to apply the rule to all VLANs, or enter untagged to apply the rule to nontagged connections.

RiOS supports IEEE 802.1Q VLAN tagging. To configure VLAN tagging, configure in-path rules to apply to all VLANs or to a specific VLAN. By default, rules apply to all VLAN values unless you specify a particular VLAN ID. Pass-through traffic maintains any preexisting VLAN tagging between the LAN and WAN interfaces.
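The three forms of the VLAN tag ID field can be parsed and matched as sketched below. The function names are hypothetical, and an untagged frame is represented as `None`.

```python
from typing import Optional, Union

VlanSetting = Union[int, str]   # 0-4094, "all", or "untagged"


def parse_vlan(value: str) -> VlanSetting:
    value = value.strip().lower()
    if value in ("all", "untagged"):
        return value
    vid = int(value)
    if not 0 <= vid <= 4094:
        raise ValueError("VLAN ID must be between 0 and 4094")
    return vid


def vlan_matches(setting: VlanSetting, packet_vlan: Optional[int]) -> bool:
    """packet_vlan is the 802.1Q VLAN ID, or None for an untagged frame."""
    if setting == "all":            # the default: every VLAN value matches
        return True
    if setting == "untagged":
        return packet_vlan is None
    return packet_vlan == setting
```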

Protocol

This setting appears only for fixed-target packet-mode optimization rules. Select a traffic protocol from the drop-down list:

• TCP—Specifies the TCP protocol. Supports TCP-over-IPv6 only.

• UDP—Specifies the UDP protocol. Supports UDP-over-IPv4 only.

• Any—Specifies all TCP-based and UDP-based protocols. This is the default setting.
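Because the TCP and UDP choices are each tied to one IP version, the Protocol setting behaves like a filter over (transport, IP version) pairs. A minimal sketch of that filter, with hypothetical names and lowercase setting strings:

```python
def protocol_allows(protocol: str, l4: str, ip_version: int) -> bool:
    """Whether a fixed-target packet-mode rule's Protocol setting covers a flow."""
    if protocol == "any":          # default: TCP-based and UDP-based protocols
        return l4 in ("tcp", "udp")
    if protocol == "tcp":          # TCP-over-IPv6 only
        return l4 == "tcp" and ip_version == 6
    if protocol == "udp":          # UDP-over-IPv4 only
        return l4 == "udp" and ip_version == 4
    raise ValueError(f"unknown protocol setting {protocol!r}")
```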

Preoptimization policy

Select a traffic type from the drop-down list:

• None—If the Oracle Forms, SSL, or Oracle Forms-over-SSL preoptimization policy is enabled and you want to disable it for a port, select None. This is the default setting.

Port 443 always uses a preoptimization policy of SSL, even if an in-path rule on the client-side SteelHead sets the preoptimization policy to None. To disable SSL preoptimization for traffic to port 443, do one of the following:

– Disable SSL optimization on the client-side or server-side SteelHead.

– Modify the peering rule on the server-side SteelHead by setting the SSL Capability control to No Check.
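The port 443 exception can be modeled as a function that returns the preoptimization policy actually applied. This is a simplified, hypothetical sketch: the two boolean parameters stand for the two workarounds listed above and are not RiOS setting names.

```python
def effective_preopt_policy(rule_policy: str, dst_port: int,
                            ssl_optimization_enabled: bool = True,
                            ssl_capability_no_check: bool = False) -> str:
    """Preoptimization policy applied to a connection (simplified model).

    ssl_optimization_enabled: False if SSL optimization is disabled on either SteelHead.
    ssl_capability_no_check: True if the server-side peering rule sets SSL Capability
    to No Check.
    """
    if dst_port == 443 and rule_policy == "none":
        if ssl_optimization_enabled and not ssl_capability_no_check:
            return "ssl"   # port 443 keeps SSL despite a None rule
    return rule_policy
```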

• Oracle Forms—Enables preoptimization processing for Oracle Forms. This policy is not compatible with IPv6.

• Oracle Forms over SSL—Enables preoptimization processing for both the Oracle Forms and SSL encrypted traffic through SSL secure ports on the client-side SteelHead. You must also set the Latency Optimization Policy to HTTP. This policy is not compatible with IPv6.

If the server is running over a standard secure port—for example, port 443—the Oracle Forms over SSL in-path rule needs to be before the default secure port pass-through rule in the in-path rule list.

• SSL—Enables preoptimization processing for SSL encrypted traffic through SSL secure ports on the client-side SteelHead.

Latency optimization policy

Select one of these policies from the drop-down list:

• Normal—Performs all latency optimizations (HTTP is activated for ports 80 and 8080). This is the default setting.

• HTTP—Activates HTTP optimization on connections matching this rule.

• Outlook Anywhere—Activates RPC over HTTP(S) optimization for Outlook Anywhere on connections matching this rule.

To automatically detect Outlook Anywhere or HTTP on a connection, select the Normal latency optimization policy and enable the Auto-Detect Outlook Anywhere Connections option in the Optimization > Protocols: MAPI page. The auto-detect option is best for simple SteelHead configurations with only a single SteelHead at each site and when the Internet Information Services (IIS) server is also handling websites.

If the IIS server is used only as an RPC Proxy, and for configurations with asymmetric routing, connection forwarding, or Interceptor installations, add in-path rules that identify the RPC Proxy server IP addresses and select this latency optimization policy. After adding the in-path rule, disable the auto-detect option in the Optimization > Protocols: MAPI page.

• Citrix—Activates Citrix-over-SSL optimization on connections matching this rule. This policy is not compatible with IPv6. Add an in-path rule to the client-side SteelHead that specifies the Citrix Access Gateway IP address, select this latency optimization policy on both the client-side and server-side SteelHeads, and set the preoptimization policy to SSL, which this policy requires.

SSL must be enabled on the Citrix Access Gateway. On the server-side SteelHead, enable SSL and install the SSL server certificate for the Citrix Access Gateway.

The client-side and server-side SteelHeads establish an SSL channel between themselves to secure the optimized Independent Computing Architecture (ICA) traffic. End users log in to the Access Gateway through a browser (HTTPS) and access applications through the Web Interface site. Clicking an application icon starts the Online Plug-in, which establishes an SSL connection to the Access Gateway. The ICA connection is tunneled through the SSL connection.

The SteelHead decrypts the SSL connection from the user device, applies ICA latency optimization, and reencrypts the traffic over the internet. The server-side SteelHead decrypts the optimized ICA traffic and reencrypts the ICA traffic into the original SSL connection destined to the Access Gateway.

• Exchange Autodetect—Automatically detects MAPI transport protocols (Autodiscover, Outlook Anywhere, and MAPI over HTTP) and HTTP traffic. For MAPI transport protocol optimization, enable SSL and install the SSL server certificate for the Exchange Server on the server-side SteelHead. To activate MAPI over HTTP bandwidth and latency optimization, you must also choose Optimization > Protocols: MAPI and select Enable MAPI over HTTP optimization on the client-side SteelHead. Both the client-side and server-side SteelHeads must be running RiOS 9.2 for MAPI over HTTP latency optimization.

• None—Do not activate latency optimization on connections matching this rule. For Oracle Forms-over-SSL encrypted traffic, you must set the Latency Optimization Policy to HTTP.

Setting the Latency Optimization Policy to None excludes all latency optimizations, such as HTTP, MAPI, and SMB.

Data reduction policy

Optionally, if the rule type is Auto-Discover or Fixed Target, you can configure these types of data reduction policies:

• Normal—Performs LZ compression and SDR.

• SDR-Only—Performs SDR; doesn’t perform LZ compression.

• SDR-M—Performs data reduction entirely in memory, which prevents the SteelHead from reading and writing to and from the disk. Enabling this option can yield high LAN-side throughput because it eliminates all disk latency. This data reduction policy is useful for:

– a very small amount of data: for example, interactive traffic.

– point-to-point replication during off-peak hours when both the server-side and client-side SteelHeads are the same (or similar) size.

• Compression-Only—Performs LZ compression; doesn’t perform SDR.

• None—Doesn’t perform SDR or LZ compression.
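The five data reduction policies differ only in which techniques they apply. The sketch below records what this page states about each; `Reduction` and the policy keys are hypothetical names, and LZ compression for SDR-M is left as `None` because this page doesn't say.

```python
from typing import NamedTuple, Optional


class Reduction(NamedTuple):
    sdr: bool              # scalable data referencing
    lz: Optional[bool]     # LZ compression; None where this page doesn't say
    in_memory: bool        # SDR-M keeps data reduction entirely in memory


DATA_REDUCTION = {
    "normal": Reduction(sdr=True, lz=True, in_memory=False),
    "sdr-only": Reduction(sdr=True, lz=False, in_memory=False),
    "sdr-m": Reduction(sdr=True, lz=None, in_memory=True),
    "compression-only": Reduction(sdr=False, lz=True, in_memory=False),
    "none": Reduction(sdr=False, lz=False, in_memory=False),
}


def data_reduction_for(rule_type: str, policy: str) -> Reduction:
    # The policy is configurable only on Auto-Discover and Fixed-Target rules.
    if rule_type not in ("auto-discover", "fixed-target"):
        raise ValueError(f"no data reduction policy for {rule_type!r} rules")
    return DATA_REDUCTION[policy]
```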

To configure data reduction policies for the FTP data channel, define an in-path rule with the destination port 20 and set its data reduction policy. Setting QoS for port 20 on the client-side SteelHead affects passive FTP, while setting the QoS for port 20 on the server-side SteelHead affects active FTP.

To configure optimization policies for the MAPI data channel, define an in-path rule with the destination port 7830 and set its data reduction policy.

Cloud acceleration

After you subscribe to a SaaS platform and enable it, ensure that cloud acceleration is ready and enabled. When cloud acceleration is enabled, connections to the subscribed SaaS platform are optimized by the SteelHead SaaS. You don’t need to add an in-path rule unless you want to optimize specific users and exclude others. Select one of these choices from the drop-down list:

• Auto—If the in-path rule matches, the connection is optimized by SteelHead SaaS.

• Pass Through—If the in-path rule matches, the connection is not optimized by the SteelHead SaaS, but it follows the other rule parameters so that the connection might be optimized by this SteelHead with other SteelHeads in the network, or it might be passed through.

Domain labels and cloud acceleration are mutually exclusive. When using a domain label, the Management Console dims this control and sets it to Pass Through. You can set cloud acceleration to Auto when domain label is set to n/a.

Using host labels is not recommended for SteelHead SaaS traffic. This applies to the legacy cloud acceleration service and not the SaaS Accelerator.
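The interaction between domain labels and cloud acceleration, together with the rule-ordering advice given for domain labels earlier, can be sketched as two helpers. Both are hypothetical; the ordering check looks only at table position, not at whether the rules actually cover the same traffic.

```python
from typing import List, Optional, Tuple


def effective_cloud_accel(requested: str, domain_label: Optional[str]) -> str:
    """Return the cloud acceleration value a rule ends up with."""
    if requested not in ("auto", "pass-through"):
        raise ValueError(f"unknown cloud acceleration setting {requested!r}")
    # Domain labels and cloud acceleration are mutually exclusive:
    # adding a domain label forces Pass Through.
    return "pass-through" if domain_label is not None else requested


def domain_rule_above_cloud_rule(rules: List[Tuple[Optional[str], str]]) -> bool:
    """rules: (domain_label, cloud_accel) pairs in table order.

    True if a domain-label rule sits above a cloud acceleration Auto rule
    and could therefore match first.
    """
    seen_domain_rule = False
    for domain_label, cloud_accel in rules:
        if domain_label is not None:
            seen_domain_rule = True
        elif cloud_accel == "auto" and seen_domain_rule:
            return True
    return False
```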

Auto kickoff

Enables kickoff, which resets pre-existing connections to force them to go through the connection creation process again. If you enable kickoff, connections that pre-exist when the optimization service is started are reestablished and optimized.

Generally, connections are short-lived and kickoff is not necessary. Kickoff is suitable for certain long-lived connections, such as data replication. In very challenging remote environments, however, such as a remote branch office with a T1 and a 35-ms round-trip time, you would want connections to migrate to optimization gracefully rather than risk interruption with kickoff.

RiOS provides three ways to enable kickoff:

• Globally for all existing connections in the Optimization > Network Services: General Service Settings page.

• For a single pass-through or optimized connection in the Current Connections report, one connection at a time.

• For all existing connections that match an in-path rule and the rule has kickoff enabled.

In most deployments, you don’t want to set automatic kickoff globally because it disrupts all existing connections. When you enable kickoff using an in-path rule, once the SteelHead detects a packet flow that matches the IP and port specified in the rule, it sends an RST packet to the client and server maintaining the connection to try to close it. Next, it sets an internal flag to prevent any further kickoffs until the optimization service is restarted.

If no data is being transferred between the client and server, the connection is not reset immediately. It resets the next time the client or server tries to send a message. Therefore, when the application is idle, it might take a while for the connection to reset.

By default, automatic kickoff per in-path rule is disabled.

The service applies the first matching in-path rule for an existing connection that matches the source and destination IP and port; it doesn’t consider a VLAN tag ID when determining whether to kick off the connection. Consequently, the service automatically kicks off connections with matching source and destination addresses and ports on different VLANs.

The SteelHead can’t determine the source and destination of a preexisting connection because it did not see the initial TCP handshake, whereas an in-path rule specifies the source and destination IP addresses to which the rule applies. Hence, the connection for this IP address pair is matched twice to find an in-path rule: once as source to destination and once as destination to source.

As an example, the following in-path rule will kick off connections from 10.11.10.10/24 to 10.12.10.10/24 and 10.12.10.10/24 to 10.11.10.10/24:

Src 10.11.10.10/24 Dst 10.12.10.10/24 Auto Kickoff enabled

The first matching in-path rule will be considered during the kickoff check for a preexisting connection. If the first matching in-path rule has kickoff enabled, then that preexisting connection will be reset.

Specifying automatic kickoff per in-path rule enables kickoff even when you disable the global kickoff feature. When global kickoff is enabled, it overrides this setting. You set the global kickoff feature using the Reset Existing Client Connections on Start Up feature, which appears in the Optimization > Network Services: General Service Settings page.

This feature pertains only to autodiscover and fixed-target rule types and is disabled for the other rule types.
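The kickoff behavior described above can be condensed into one decision function. In this hypothetical sketch, each rule is tried in both orientations because the source is unknown, only the first matching rule counts, VLAN tags are ignored, and global kickoff overrides per-rule settings. Ports and the internal flag that blocks repeat kickoffs are not modeled, and trying both orientations per rule (rather than scanning the whole table once per orientation) is an assumption.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass
class KickoffRule:
    src: str
    dst: str
    rule_type: str
    auto_kickoff: bool = False   # per-rule kickoff is disabled by default


def _covers(rule: KickoffRule, a: str, b: str) -> bool:
    return (ipaddress.ip_address(a) in ipaddress.ip_network(rule.src, strict=False)
            and ipaddress.ip_address(b) in ipaddress.ip_network(rule.dst, strict=False))


def should_kick_off(rules: List[KickoffRule], ip1: str, ip2: str,
                    global_kickoff: bool = False) -> bool:
    """Decide whether a preexisting connection between ip1 and ip2 is reset.

    The source is unknown, so each rule is tried in both orientations.
    VLAN tags are not considered.
    """
    if global_kickoff:
        return True   # global kickoff resets all preexisting connections
    for rule in rules:
        if _covers(rule, ip1, ip2) or _covers(rule, ip2, ip1):
            # Only the first matching rule counts; kickoff applies only to
            # Auto-Discover and Fixed-Target rules.
            return rule.auto_kickoff and rule.rule_type in ("auto-discover", "fixed-target")
    return False
```

Using the example rule from this section, a connection between 10.11.10.5 and 10.12.10.7 is kicked off regardless of which side opened it.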

Neural Framing Mode

Optionally, if the rule type is Auto-Discover or Fixed Target, you can select a neural framing mode for the in-path rule. Neural framing enables the system to select the optimal packet framing boundaries for SDR. Neural framing creates a set of heuristics to intelligently determine the optimal moment to flush TCP buffers. The system continuously evaluates these heuristics and uses the optimal heuristic to maximize the amount of buffered data transmitted in each flush, while minimizing the amount of idle time that the data sits in the buffer.

Select a neural framing setting:

• Never—Do not use the Nagle algorithm. The Nagle algorithm is a means of improving the efficiency of TCP/IP networks by reducing the number of packets that need to be sent over the network. It works by combining a number of small outgoing messages and sending them all at once. All the data is immediately encoded without waiting for timers to fire or application buffers to fill past a specified threshold. Neural heuristics are computed in this mode but aren’t used. In general, this setting works well with time-sensitive and chatty or real-time traffic.

• Always—Use the Nagle algorithm. This is the default setting. All data is passed to the codec, which attempts to coalesce consume calls (if needed) to achieve better fingerprinting. A timer (6 ms) backs up the codec and causes leftover data to be consumed. Neural heuristics are computed in this mode but aren’t used. This mode is not compatible with IPv6.

• TCP Hints—If data is received from a partial frame packet or a packet with the TCP PUSH flag set, the encoder encodes the data instead of immediately coalescing it. Neural heuristics are computed in this mode but aren’t used. This mode is not compatible with IPv6.

• Dynamic—Dynamically adjust the Nagle parameters. In this option, the system discerns the optimum algorithm for a particular type of traffic and switches to the best algorithm based on traffic characteristic changes. This mode is not compatible with IPv6.

For different types of traffic, one algorithm might be better than others. The considerations include: latency added to the connection, compression, and SDR performance.
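The difference between the Never, Always, and TCP Hints modes is when buffered data is handed to the encoder. The following simplified Python model illustrates those flush conditions; it is not the RiOS codec. The 6 ms backup timer comes from this page, while the byte threshold is a hypothetical parameter, and applying the timer in TCP Hints mode is an assumption. Dynamic mode is omitted because its heuristics are not documented here.

```python
from dataclasses import dataclass, field
from typing import List

TIMER_MS = 6.0   # backup timer for "Always" mode, per this page


@dataclass
class FramingBuffer:
    mode: str                     # "never", "always", or "tcp-hints"
    threshold: int = 8192         # hypothetical coalescing target in bytes
    pending: bytearray = field(default_factory=bytearray)
    first_byte_ms: float = 0.0
    encoded: List[bytes] = field(default_factory=list)

    def _flush(self) -> None:
        if self.pending:
            self.encoded.append(bytes(self.pending))
            self.pending.clear()

    def on_data(self, data: bytes, now_ms: float, push: bool = False,
                partial_frame: bool = False) -> None:
        if not self.pending:
            self.first_byte_ms = now_ms
        self.pending += data
        if self.mode == "never":
            self._flush()                 # encode immediately, no coalescing
        elif self.mode == "tcp-hints" and (push or partial_frame):
            self._flush()                 # the TCP hint says not to wait
        elif len(self.pending) >= self.threshold:
            self._flush()                 # enough data coalesced

    def on_timer(self, now_ms: float) -> None:
        # Leftover data is consumed once the backup timer expires
        # (assumption: the same timer also backs up TCP Hints mode).
        if self.pending and now_ms - self.first_byte_ms >= TIMER_MS:
            self._flush()
```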

To configure neural framing for an FTP data channel, define an in-path rule with the destination port 20 and set its neural framing mode. To configure neural framing for a MAPI data channel, define an in-path rule with the destination port 7830 and set its neural framing mode.

WAN Visibility Mode

Enables WAN visibility, which pertains to how packets traversing the WAN are addressed. RiOS provides three types of WAN visibility: correct addressing, port transparency, and full address transparency.

You configure WAN visibility on the client-side SteelHead (where the connection is initiated).
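The three modes differ in which TCP/IP header fields of optimized traffic remain visible on the WAN. The sketch below models the client-to-server direction only. The SteelHead-side ports are illustrative: 7800 is used as the SteelHead in-path port and 40000 as an arbitrary source port, and VLAN tag preservation in full transparency is not modeled.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Header:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def wan_header(mode: str, original: Header, client_sh_ip: str, server_sh_ip: str,
               sh_src_port: int = 40000, sh_dst_port: int = 7800) -> Header:
    """Header fields seen on the WAN for optimized client-to-server traffic.

    sh_src_port and sh_dst_port are illustrative SteelHead-chosen ports.
    """
    steelhead_hdr = Header(client_sh_ip, sh_src_port, server_sh_ip, sh_dst_port)
    if mode == "correct-addressing":
        return steelhead_hdr              # SteelHead addresses and ports only
    if mode == "port-transparency":
        # Only the server port is preserved; IPs and the client port are not.
        return replace(steelhead_hdr, dst_port=original.dst_port)
    if mode == "full-transparency":
        # Client and server IP addresses and ports are all preserved.
        return original
    raise ValueError(f"unknown WAN visibility mode {mode!r}")
```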

Select one of these modes from the drop-down list:

• Correct Addressing—Disables WAN visibility. Correct addressing uses SteelHead IP addresses and port numbers in the TCP/IP packet header fields for optimized traffic in both directions across the WAN. This is the default setting.

• Port Transparency—Port address transparency preserves your server port numbers in the TCP/IP header fields for optimized traffic in both directions across the WAN. Traffic is optimized while the server port number in the TCP/IP header field appears to be unchanged. Routers and network monitoring devices deployed in the WAN segment between the communicating SteelHeads can view these preserved fields.

Starting with RiOS 9.7, IPv6 addressing is supported with port transparency and full transparency modes; however, IPv6 transparency is supported only when you select the Enable Enhanced IPv6 Auto-Discovery check box in the Optimization > Network Services: Peering Rules page. See Configuring peering for more information.

Use port transparency if you want to manage and enforce QoS policies that are based on destination ports. If your WAN router follows traffic classification rules written in terms of client and network addresses, port transparency enables your routers to use existing rules to classify the traffic without any changes.

Port transparency enables network analyzers deployed within the WAN (between the SteelHeads) to monitor network activity and to capture statistics for reporting by inspecting traffic according to its original TCP port number.

Port transparency doesn’t require dedicated port configurations on your SteelHeads.

Port transparency only provides server port visibility. It doesn’t provide client and server IP address visibility, nor does it provide client port visibility.

• Full Transparency—Full address transparency preserves your client and server IP addresses and port numbers in the TCP/IP header fields for optimized traffic in both directions across the WAN. It also preserves VLAN tags. Traffic is optimized while these TCP/IP header fields appear to be unchanged. Routers and network monitoring devices deployed in the WAN segment between the communicating SteelHeads can view these preserved fields.

If both port transparency and full address transparency are acceptable solutions, port transparency is preferable. Port transparency avoids potential networking risks that are inherent to enabling full address transparency. For details, see the SteelHead Deployment Guide.

However, if you must see your client or server IP addresses across the WAN, full transparency is your only configuration option.

Enabling full address transparency requires symmetrical traffic flows between the client and server. If any asymmetry exists on the network, enabling full address transparency might yield unexpected results, up to and including loss of connectivity. For details, see the SteelHead Deployment Guide.

RiOS supports Full Transparency with a stateful firewall. A stateful firewall examines packet headers, stores information, and then validates subsequent packets against this information. If your system uses a stateful firewall, the following option is available:

• Full Transparency with Reset—Enables full address and port transparency and also sends a forward reset between receiving the probe response and sending the transparent inner channel SYN. This mode ensures the firewall doesn’t block inner transparent connections because of information stored in the probe connection. The forward reset is necessary because the probe connection and inner connection use the same IP addresses and ports and both map to the same firewall connection. The reset clears the probe connection created by the SteelHead and allows the fully transparent inner connection to traverse the firewall.
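To make the differences between the modes concrete, the following minimal sketch models which addresses and ports a router in the WAN segment would see in the header of client-to-server optimized traffic under each mode. It is an illustration of the descriptions above, not SteelHead code: the names `WanVisibility`, `Endpoint`, `wan_header`, and `sends_forward_reset` are hypothetical, all addresses are made up, the use of port 7800 for the server-side SteelHead is an assumption, and VLAN tags and the server-to-client direction are not modeled.

```python
from dataclasses import dataclass
from enum import Enum


class WanVisibility(Enum):
    CORRECT_ADDRESSING = "Correct Addressing"
    PORT_TRANSPARENCY = "Port Transparency"
    FULL_TRANSPARENCY = "Full Transparency"
    FULL_TRANSPARENCY_WITH_RESET = "Full Transparency with Reset"


@dataclass(frozen=True)
class Endpoint:
    ip: str
    port: int


@dataclass(frozen=True)
class WanHeader:
    src: Endpoint
    dst: Endpoint


def wan_header(mode: WanVisibility, client: Endpoint, server: Endpoint,
               client_sh: Endpoint, server_sh: Endpoint) -> WanHeader:
    """Model the header of client-to-server optimized traffic on the WAN."""
    if mode is WanVisibility.CORRECT_ADDRESSING:
        # SteelHead IP addresses and ports appear in both header fields.
        return WanHeader(src=client_sh, dst=server_sh)
    if mode is WanVisibility.PORT_TRANSPARENCY:
        # Only the server port is preserved; the IP addresses and the
        # client port still belong to the SteelHeads.
        return WanHeader(src=client_sh, dst=Endpoint(server_sh.ip, server.port))
    # Both full transparency variants preserve the client and server
    # IP addresses and ports (VLAN tags are not modeled here).
    return WanHeader(src=client, dst=server)


def sends_forward_reset(mode: WanVisibility) -> bool:
    """Only Full Transparency with Reset sends a forward reset between the
    probe response and the transparent inner channel SYN."""
    return mode is WanVisibility.FULL_TRANSPARENCY_WITH_RESET


# Hypothetical addresses; port 7800 on the server-side SteelHead is an assumption.
client = Endpoint("192.168.1.10", 51000)
server = Endpoint("10.20.0.5", 445)
client_sh = Endpoint("192.168.1.2", 40001)
server_sh = Endpoint("10.20.0.2", 7800)

for mode in WanVisibility:
    print(mode.value, wan_header(mode, client, server, client_sh, server_sh))
```

Only the port transparency branch mixes the two address sets, which reflects the point that port transparency preserves the server port but not the client port or either IP address.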

For details on configuring WAN visibility and its implications, see the SteelHead Deployment Guide.

WAN visibility works with autodiscover in-path rules only. It doesn’t work with fixed-target rules or server-side out-of-path SteelHead configurations.

To enable full transparency globally by default, create an in-path autodiscover rule, select Full, and place it above the default in-path rule and after the Secure, Interactive, and RBT-Proto rules.

You can configure a SteelHead for WAN visibility even if the server-side SteelHead doesn’t support it, but the connection is not transparent.

You can enable full transparency for servers in a specific IP address range and you can enable port transparency on a specific server. For details, see the SteelHead Deployment Guide.

The Top Talkers report displays statistics on the most active, heaviest users of WAN bandwidth, providing some WAN visibility without enabling a WAN Visibility Mode.

Position

Select Start, End, or a rule number from the drop-down list. SteelHeads evaluate rules in numerical order starting with rule 1. If the conditions set in the rule match, then the rule is applied, and the system moves on to the next packet. If the conditions set in the rule don’t match, the system consults the next rule. For example, if the conditions of rule 1 don’t match, rule 2 is consulted. If rule 2 matches the conditions, it’s applied, and no further rules are consulted.
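The first-match evaluation described above can be sketched as follows. This is a simplified model, assuming that a rule matches only on destination subnet and destination port (real in-path rules have more match fields); `InPathRule` and `select_rule` are hypothetical names used for illustration only.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class InPathRule:
    rule_type: str                    # e.g. "Pass-through", "Fixed-Target", "Auto-Discover"
    dst_subnet: Optional[str] = None  # None means any destination address
    dst_port: Optional[int] = None    # None means any destination port

    def matches(self, dst_ip: str, dst_port: int) -> bool:
        if self.dst_subnet is not None and ip_address(dst_ip) not in ip_network(self.dst_subnet):
            return False
        return self.dst_port is None or self.dst_port == dst_port


def select_rule(rules: List[InPathRule], dst_ip: str,
                dst_port: int) -> Optional[Tuple[int, InPathRule]]:
    """Return (rule number, rule) for the first matching rule, or None."""
    for number, rule in enumerate(rules, start=1):
        if rule.matches(dst_ip, dst_port):
            # First match wins: later rules are never consulted.
            return number, rule
    return None


rules = [
    InPathRule("Pass-through", dst_subnet="10.0.0.0/8", dst_port=22),
    InPathRule("Fixed-Target", dst_subnet="10.1.0.0/16"),
    InPathRule("Auto-Discover"),  # stands in for the default rule, listed last
]
```

With this list, a connection to 10.1.2.3 on port 22 stops at rule 1, the same destination on port 445 falls through to rule 2, and any other traffic reaches the catch-all rule 3, which is why the order of the rules matters.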

In general, list rules in this order:

1. Deny

2. Discard

3. Pass-through

4. Fixed-Target

5. Auto-Discover

Place rules that use domain labels below others.

The default rule, Auto-Discover, which optimizes all remaining traffic that has not been selected by another rule, can’t be removed and is always listed last.
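The ordering guideline above can be expressed as a sort key. This is a minimal sketch, assuming that "place rules that use domain labels below others" means after every rule that doesn’t use a domain label; the `Rule` type, its `name` field, and `recommended_order` are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import List

# Recommended position by rule type (lower value = evaluated earlier).
TYPE_ORDER = {
    "Deny": 0,
    "Discard": 1,
    "Pass-through": 2,
    "Fixed-Target": 3,
    "Auto-Discover": 4,
}


@dataclass(frozen=True)
class Rule:
    name: str                        # hypothetical label for illustration
    rule_type: str
    uses_domain_label: bool = False


DEFAULT_RULE = Rule("default", "Auto-Discover")


def recommended_order(rules: List[Rule]) -> List[Rule]:
    """Order user-defined rules by type, move domain-label rules below the
    others, and keep the default Auto-Discover rule last."""
    # sorted() is stable, so rules of the same type keep their relative order.
    ordered = sorted(rules, key=lambda r: (r.uses_domain_label, TYPE_ORDER[r.rule_type]))
    return ordered + [DEFAULT_RULE]
```

Because the default rule is appended rather than sorted, it always ends up last, matching the behavior of the Management Console described above.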

Description

Describe the rule to facilitate administration.

To configure and enable in-path rules

1. Choose Optimization > Network Services: In-Path Rules to display the In-Path Rules page.

2. Select an existing rule, or add a new one.

3. Adjust the settings to your needs.

4. Select Enable Rule.

5. Click Add if you are adding a new rule, or Apply to apply your changes.

6. Click Save to Disk to save your settings permanently.

After the Management Console has applied your settings, you can verify whether the changes have had the desired effect by reviewing related reports. When you have verified the changes, you can write the active configuration that is stored in memory to the active configuration file (or save it under any filename you choose). For details, see Managing configuration files.

Related topics