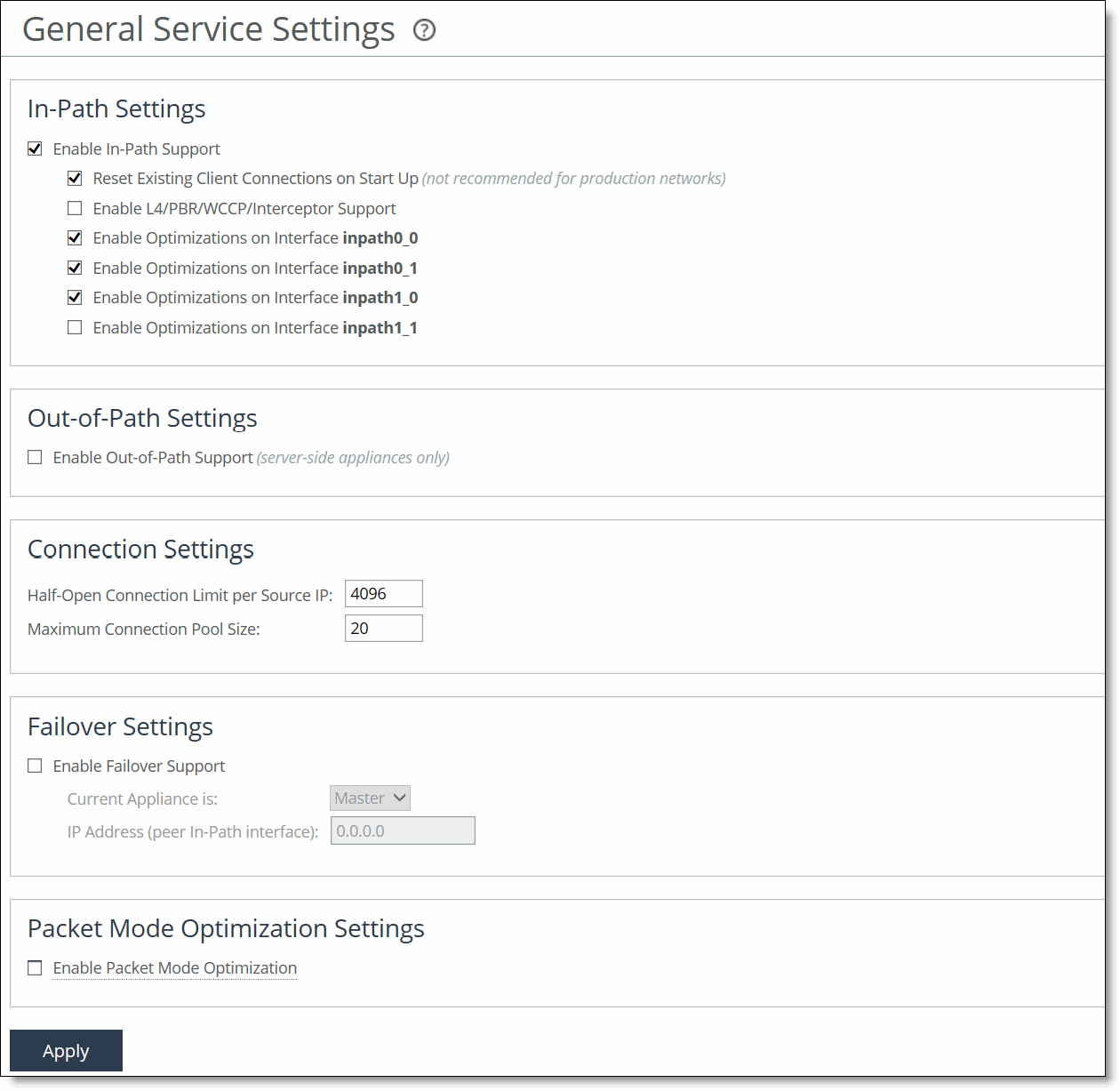

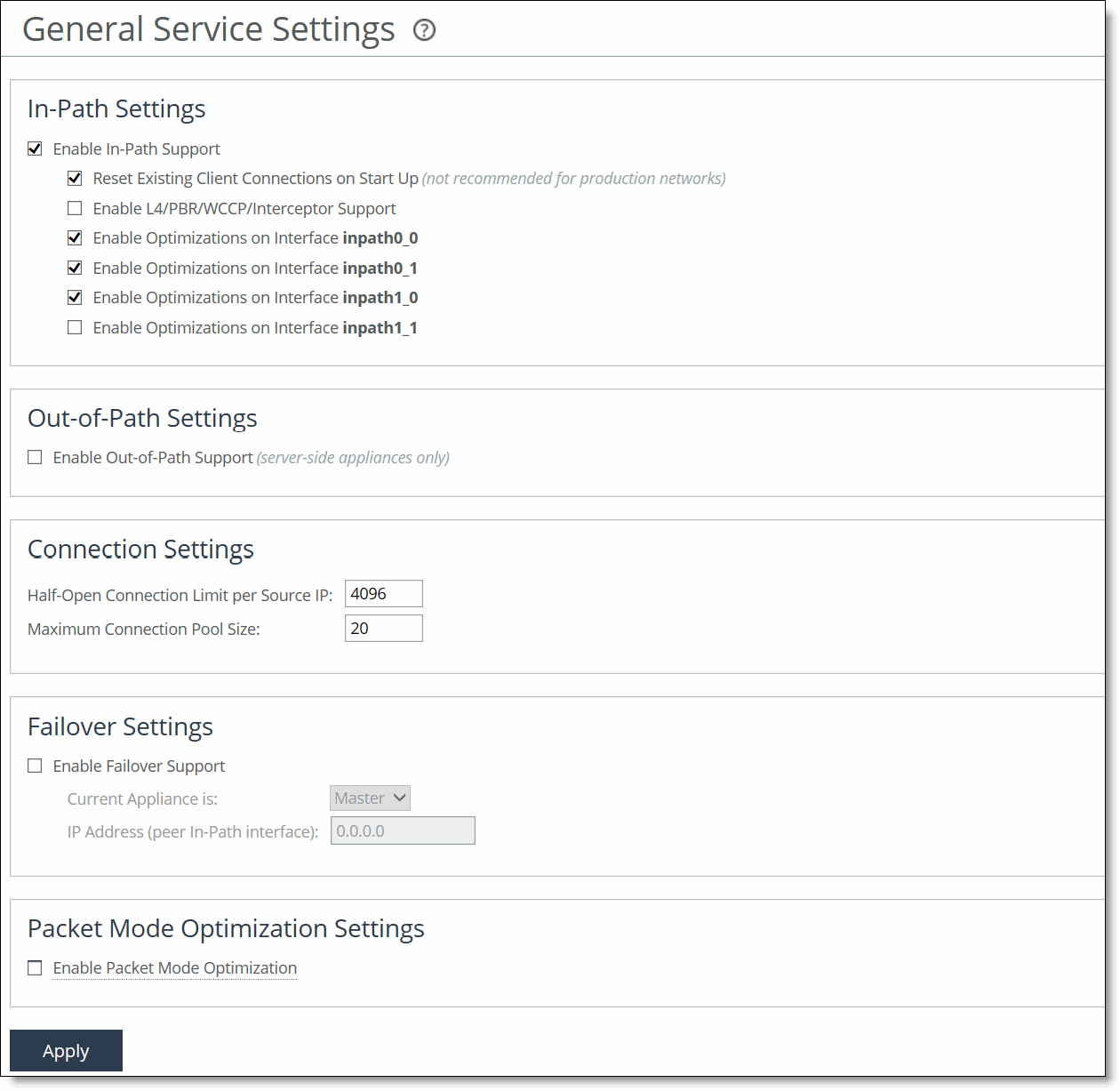

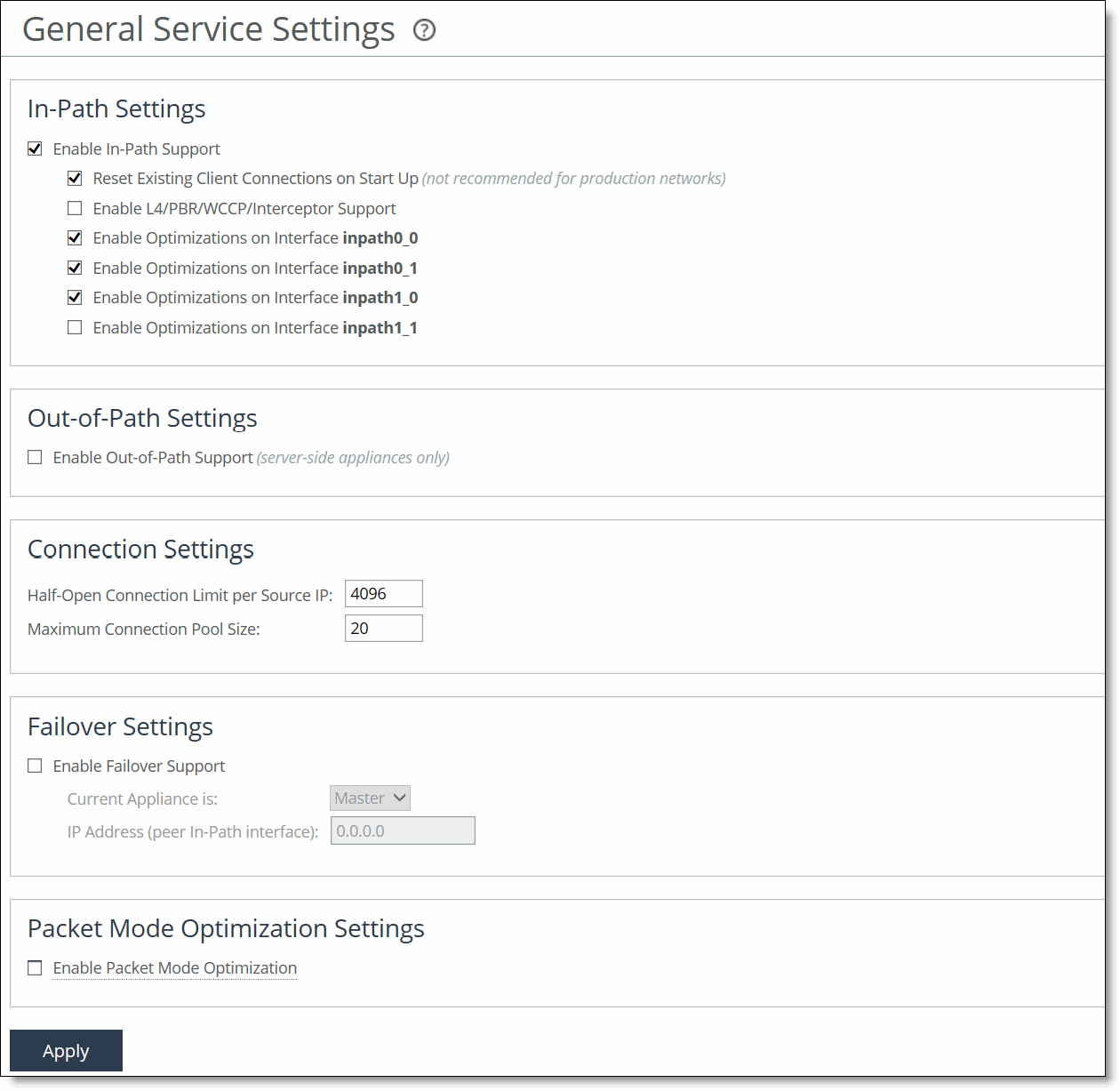

General Service Settings page

Control | Description |

Enable In-Path Support | Enables optimization on traffic that is in the direct path of the client, server, and SteelHead. |

Reset Existing Client Connections on Start Up | Enables kickoff globally. If you enable kickoff, connections that exist when the optimization service is started or restarted are disconnected. When the connections are retried, they’re optimized. Generally, connections are short-lived and kickoff is not necessary. It is suitable for very challenging remote environments. For example, in a remote branch office with a T1 and a 35-ms round-trip time, you would want connections to migrate to optimization gracefully rather than risk interruption with kickoff. RiOS also provides a way to reset preexisting connections that match an in-path rule that has kickoff enabled. In addition, you can reset a single pass-through or optimized connection in the Current Connections report, one connection at a time. Do not enable kickoff for in-path SteelHeads that use autodiscover or if you don’t have a SteelHead on the remote side of the network. If you don’t set any in-path rules, the default behavior is to autodiscover all connections. If kickoff is enabled, all connections that existed before the SteelHead started are reset. |

Enable L4/PBR/WCCP Interceptor Support | Enables optional, virtual in-path support on all the interfaces for networks that use Layer-4 switches, PBR, WCCP, and SteelHead Interceptor. External traffic redirection is supported only on the first in-path interface. These redirection methods are available: • Layer-4 Switch—You enable Layer-4 switch support when you have multiple SteelHeads in your network, so that you can manage large bandwidth requirements. • Policy-Based Routing (PBR)—PBR allows you to define policies to route packets instead of relying on routing protocols. You enable PBR to redirect traffic that you want optimized by a SteelHead that is not in the direct physical path between the client and server. • Web Cache Communication Protocol (WCCP)—If your network design requires you to use WCCP, a packet redirection mechanism directs packets to RiOS appliances that aren’t in the direct physical path to ensure that they’re optimized. For details about configuring Layer-4 switch, PBR, and WCCP deployments, see the SteelHead Deployment Guide. |

This feature is only supported by the Cloud Accelerator. | Enables configuration of the transparency mode in the Cloud Accelerator and transmits it to the Discovery Agent. The Discovery Agent in the server provides these transparency modes for client connections: • Restricted transparent—All client connections are transparent with these restrictions: – If the client connection is from a NATted network, the application server sees the private IP address of the client. – You can use this mode only if there’s no conflict between the private IP address ranges (there are no duplicate IP addresses) and ports. This is the default mode. • Safe transparent—If the client is behind a NAT device, the client connection to the application server is nontransparent—the application server sees the connection as a connection from the Cloud Accelerator IP address and not the client IP address. All connections from a client that is not behind a NAT device are transparent and the server sees the connections from the client IP address instead of the Cloud Accelerator IP address. • Non-transparent—All client connections are nontransparent—the application server sees the connections from the server-side SteelHead IP address and not the client IP address. We recommend that you use this mode as the last option. |
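The three transparency modes amount to a small decision table for the source address that the application server sees. The following Python sketch restates that table; it is an illustration only, not the Discovery Agent's implementation. The function and parameter names are hypothetical, and the sketch assumes that a single appliance address stands in for both the "Cloud Accelerator IP address" and the "server-side SteelHead IP address" named in the description.

```python
from enum import Enum


class TransparencyMode(Enum):
    RESTRICTED_TRANSPARENT = "restricted-transparent"  # default mode
    SAFE_TRANSPARENT = "safe-transparent"
    NON_TRANSPARENT = "non-transparent"


def source_ip_seen_by_server(mode: TransparencyMode,
                             client_ip: str,
                             client_behind_nat: bool,
                             appliance_ip: str) -> str:
    """Return the source address the application server sees.

    client_ip is the client's own (possibly private) address.
    appliance_ip is an assumed single address for the accelerator.
    """
    if mode is TransparencyMode.RESTRICTED_TRANSPARENT:
        # Always transparent; a NATted client shows up with its private IP.
        return client_ip
    if mode is TransparencyMode.SAFE_TRANSPARENT:
        # Only clients that are not behind NAT stay transparent.
        return appliance_ip if client_behind_nat else client_ip
    # Non-transparent: the server never sees the client address.
    return appliance_ip
```

Because restricted transparent mode always exposes the client's private address, it relies on the private ranges not overlapping, which is why the description limits it to networks without duplicate IP addresses.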

Enable Optimizations on Interface <interface-name> | Enables in-path support for additional bypass cards. If you have an appliance that contains multiple two-port, four-port, or six-port bypass cards, the Management Console displays options to enable in-path support for these ports. The number of these interface options depends on the number of pairs of LAN and WAN ports that you have enabled in your SteelHead. The interface names for the bypass cards are a combination of the slot number and the port pairs (inpath<slot>_<pair>, inpath<slot>_<pair>): for example, if a four-port bypass card is located in slot 0 of your appliance, the interface names are inpath0_0 and inpath0_1. Alternatively, if the bypass card is located in slot 1 of your appliance, the interface names are inpath1_0 and inpath1_1. For details about installing additional bypass cards, see the Network and Storage Card Installation Guide. |
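The naming pattern can be expressed as a short helper. This Python sketch is illustrative only: the function name is hypothetical, and it assumes that each LAN/WAN pair uses two ports and that pairs are numbered consecutively from 0, which matches the slot 0 and slot 1 examples above.

```python
def inpath_interface_names(slot: int, port_count: int) -> list[str]:
    """List the in-path interface names for one bypass card.

    Assumes two ports per LAN/WAN pair and pair numbering from 0.
    """
    if slot < 0:
        raise ValueError("slot must be zero or greater")
    if port_count not in (2, 4, 6):
        raise ValueError("expected a two-, four-, or six-port card")
    pairs = port_count // 2
    return [f"inpath{slot}_{pair}" for pair in range(pairs)]


# A four-port card in slot 0 yields two in-path interfaces.
print(inpath_interface_names(0, 4))
```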

Control | Description |

Enable Out-of-Path Support | Enables out-of-path support on a server-side SteelHead, where only the SteelHead primary interface connects to the network. The SteelHead can be connected anywhere in the LAN. There is no redirecting device in an out-of-path SteelHead deployment. Instead, you configure fixed-target in-path rules for the client-side SteelHead. The fixed-target in-path rules point to the primary IP address of the out-of-path SteelHead. The out-of-path SteelHead uses its primary IP address when communicating with the server. The remote SteelHead must be deployed in either physical or virtual in-path mode. If you set up an out-of-path configuration with failover support, you must set fixed-target rules that specify the master and backup SteelHeads. |

Control | Description |

Half-Open Connection Limit per Source IP | Restricts half-opened connections on a source IP address initiating connections (that is, the client machine). Set this feature to block a source IP address that is opening multiple connections to invalid hosts or ports simultaneously (for example, a virus or a port scanner). This feature doesn’t prevent a source IP address from connecting to valid hosts at a normal rate. Thus, a source IP address could have more established connections than the limit. The default value is 4096. The appliance counts the number of half-opened connections for a source IP address (connections that check if a server connection can be established before accepting the client connection). If the count is above the limit, new connections from the source IP address are passed through unoptimized. If you have a client connecting to valid hosts or ports at a very high rate, some of its connections might be passed through even though all of the connections are valid. |
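The counting behavior described above can be sketched as follows. This is a simplified Python illustration, not the appliance's implementation: the class and method names are hypothetical, and the way a half-opened connection leaves the count (here, an explicit `resolved` call) is an assumption.

```python
from collections import defaultdict

DEFAULT_HALF_OPEN_LIMIT = 4096  # default value stated in the description


class HalfOpenTracker:
    """Track half-opened connections per source IP (illustrative only)."""

    def __init__(self, limit: int = DEFAULT_HALF_OPEN_LIMIT):
        self.limit = limit
        self._half_open = defaultdict(int)

    def admit(self, source_ip: str) -> str:
        """Decide how to treat a new connection from source_ip."""
        if self._half_open[source_ip] > self.limit:
            # Count is above the limit: pass the connection through unoptimized.
            return "pass-through"
        self._half_open[source_ip] += 1
        return "optimize"

    def resolved(self, source_ip: str) -> None:
        """Record that a half-opened connection was established or failed."""
        if self._half_open[source_ip] > 0:
            self._half_open[source_ip] -= 1
```

Established connections are not counted, which is why a well-behaved source can hold more established connections than the limit. A client that opens valid connections faster than they complete can still exceed the count briefly and see some connections passed through.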

Maximum Connection Pool Size | Specify the maximum number of TCP connections in a connection pool. Connection pooling enhances network performance by reusing active connections instead of creating a new connection for every request. Connection pooling is useful for protocols that create a large number of short-lived TCP connections, such as HTTP. To optimize such protocols, a connection pool manager maintains a pool of idle TCP connections, up to the maximum pool size. When a client requests a new connection to a previously visited server, the pool manager checks the pool for unused connections and returns one if available. Thus, the client and the SteelHead don’t have to wait for a three-way TCP handshake to finish across the WAN. If all connections currently in the pool are busy and the maximum pool size has not been reached, the new connection is created and added to the pool. When the pool reaches its maximum size, all new connection requests are queued until a connection in the pool becomes available or the connection attempt times out. The default value is 20. A value of 0 specifies no connection pool. You must restart the SteelHead after changing this setting. Viewing the Connection Pooling report can help determine whether to modify the default setting. If the report indicates an unacceptably low ratio of pool hits per total connection requests, increase the pool size. |
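The pool manager logic described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the RiOS implementation: the class and method names are hypothetical, connections are represented by integer IDs, connections are created on demand rather than pre-established, and a request that would have to wait is reported as `"queued"` instead of blocking until a timeout.

```python
class ConnectionPoolSketch:
    """Simplified model of a bounded connection pool (illustrative only)."""

    def __init__(self, max_size: int = 20):  # 20 is the documented default
        self.max_size = max_size
        self.idle = []       # pooled connections available for reuse
        self.busy = set()    # pooled connections currently in use
        self.requests = 0
        self.hits = 0
        self._next_id = 0

    def _new_connection(self) -> int:
        self._next_id += 1
        return self._next_id

    def acquire(self):
        """Return (outcome, connection) for one connection request."""
        self.requests += 1
        if self.max_size == 0:
            # A value of 0 means no pooling: always open a fresh connection.
            return "unpooled", self._new_connection()
        if self.idle:
            conn = self.idle.pop()
            self.busy.add(conn)
            self.hits += 1  # reuse avoids a new handshake across the WAN
            return "reused", conn
        if len(self.busy) < self.max_size:
            conn = self._new_connection()
            self.busy.add(conn)
            return "created", conn
        # Pool is full and every connection is busy: the request must wait.
        return "queued", None

    def release(self, conn: int) -> None:
        """Return a pooled connection to the idle list."""
        if conn in self.busy:
            self.busy.remove(conn)
            self.idle.append(conn)

    def hit_ratio(self) -> float:
        """Pool hits per total connection requests."""
        return self.hits / self.requests if self.requests else 0.0
```

In this model, a low `hit_ratio()` corresponds to the low ratio of pool hits per total connection requests that the Connection Pooling report would show, which is the signal for increasing the pool size.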

Control | Description |

Enable Failover Support | Configures a failover deployment on either a master or backup SteelHead. In the event of a failure in the master appliance, the backup appliance takes its place with a warm RiOS data store and can begin delivering fully optimized performance immediately. The master and backup SteelHeads must be the same hardware model. |

Current Appliance is | Select Master or Backup from the drop-down list. A master SteelHead is the primary appliance; the backup SteelHead is the appliance that automatically optimizes traffic if the master appliance fails. |

IP Address (peer in-path interface) | Specify the IP address of the peer appliance: the backup SteelHead if this appliance is the master, or the master SteelHead if this appliance is the backup. You must specify the peer’s in-path IP address (inpath0_0), not its primary interface IP address. |

Control | Description |

Enable Packet Mode Optimization | Performs packet-by-packet SDR bandwidth optimization on TCP or UDP (over IPv4 or IPv6) flows. This feature uses fixed-target packet mode optimization in-path rules to optimize bandwidth for applications over these transport protocols. TCPv6 or UDPv4 flows are supported. TCPv4 and UDPv6 flows require a minimum RiOS version of 8.5. By default, packet-mode optimization is disabled. Enabling this feature requires an optimization service restart. |