Configuring High Availability

Overview

High availability (HA) maintains uninterrupted service in the event of a power, hardware, software, or WAN uplink failure. Configuring HA provides network redundancy and reliability.

SteelConnect Gateway Model Physical Appliances

This table lists the common use cases for SteelConnect gateways.

| Gateway Model | Use Case |
|---|---|
| SDI-130 | Small branch or retail |
| SDI-330 | Medium branch |
| SDI-1030 | Large branch, campus, or data center |
| SDI-5030 | Campus or data center |

Branch high availability overview

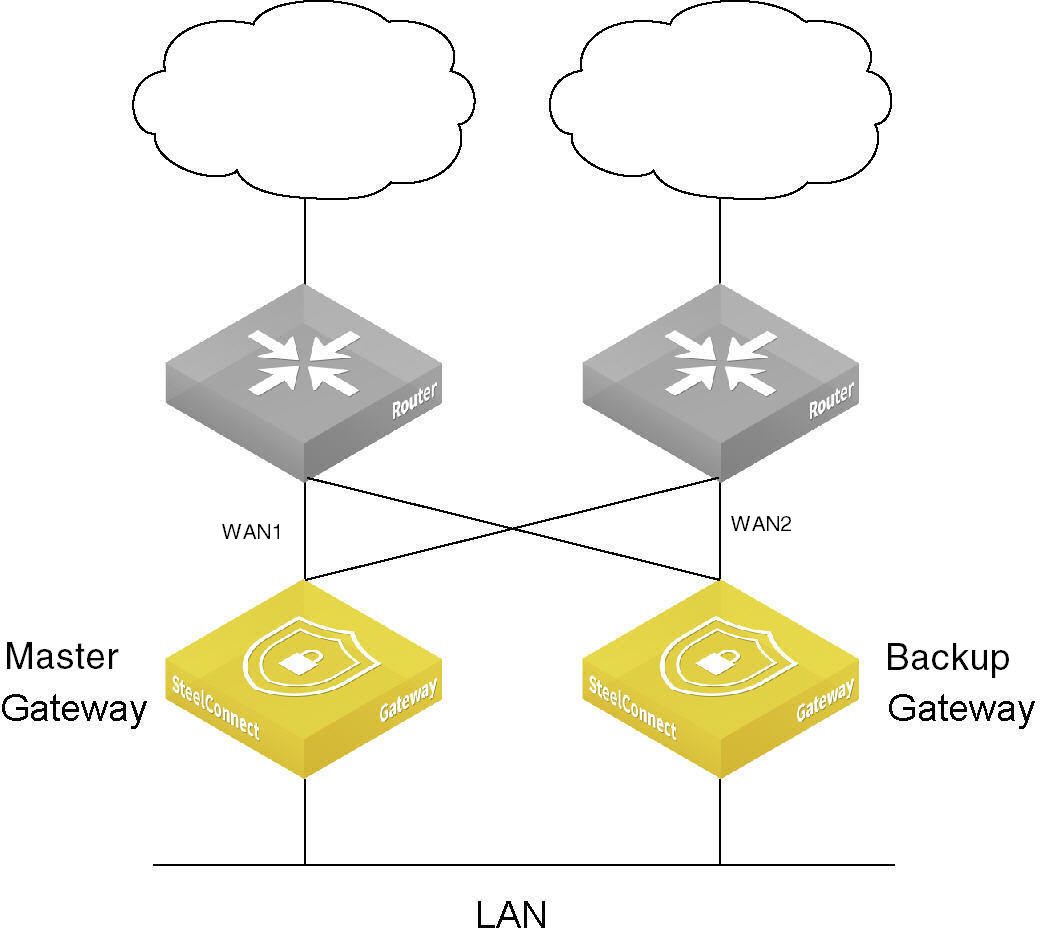

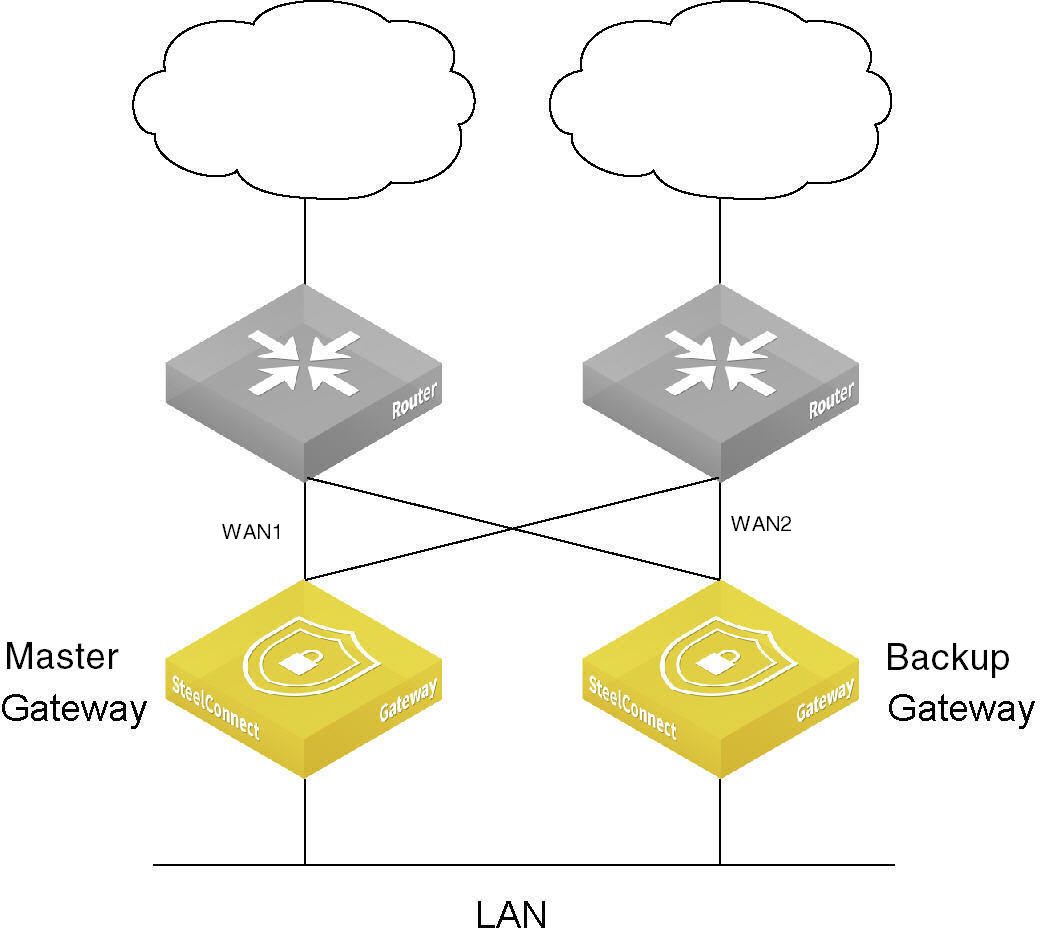

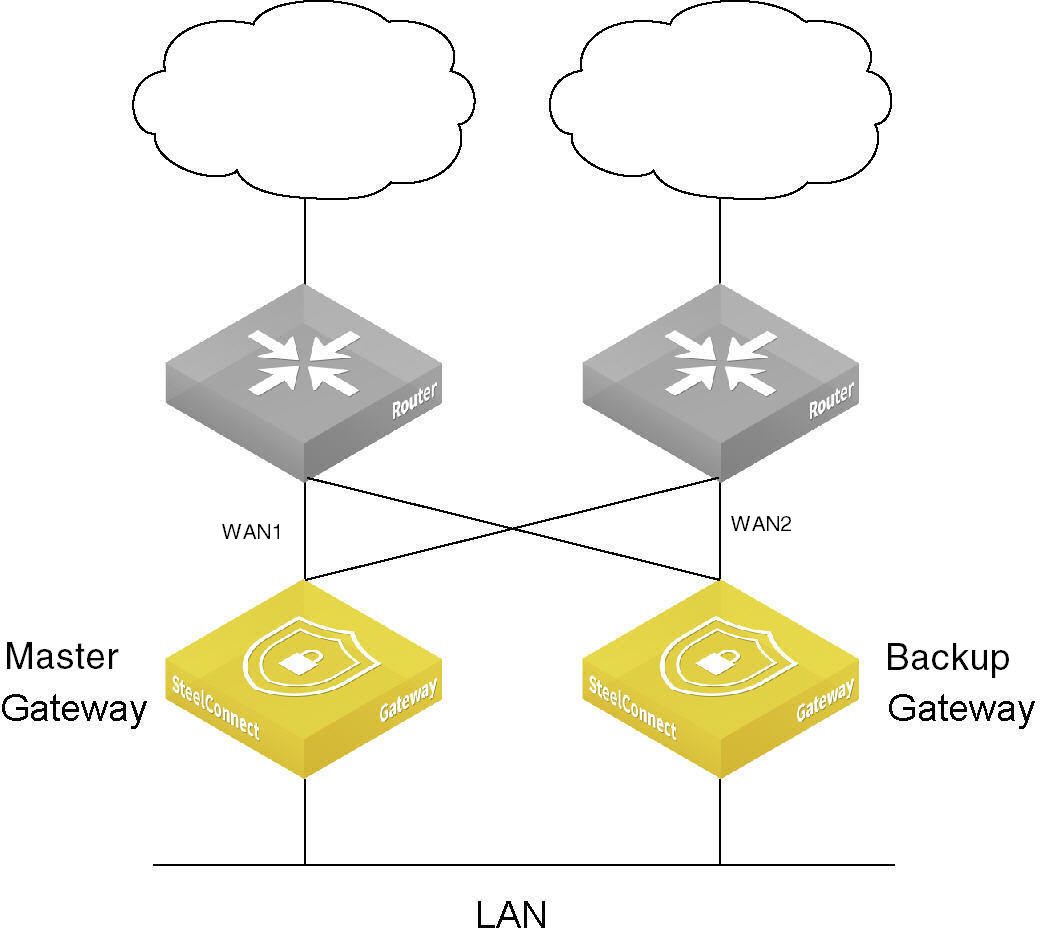

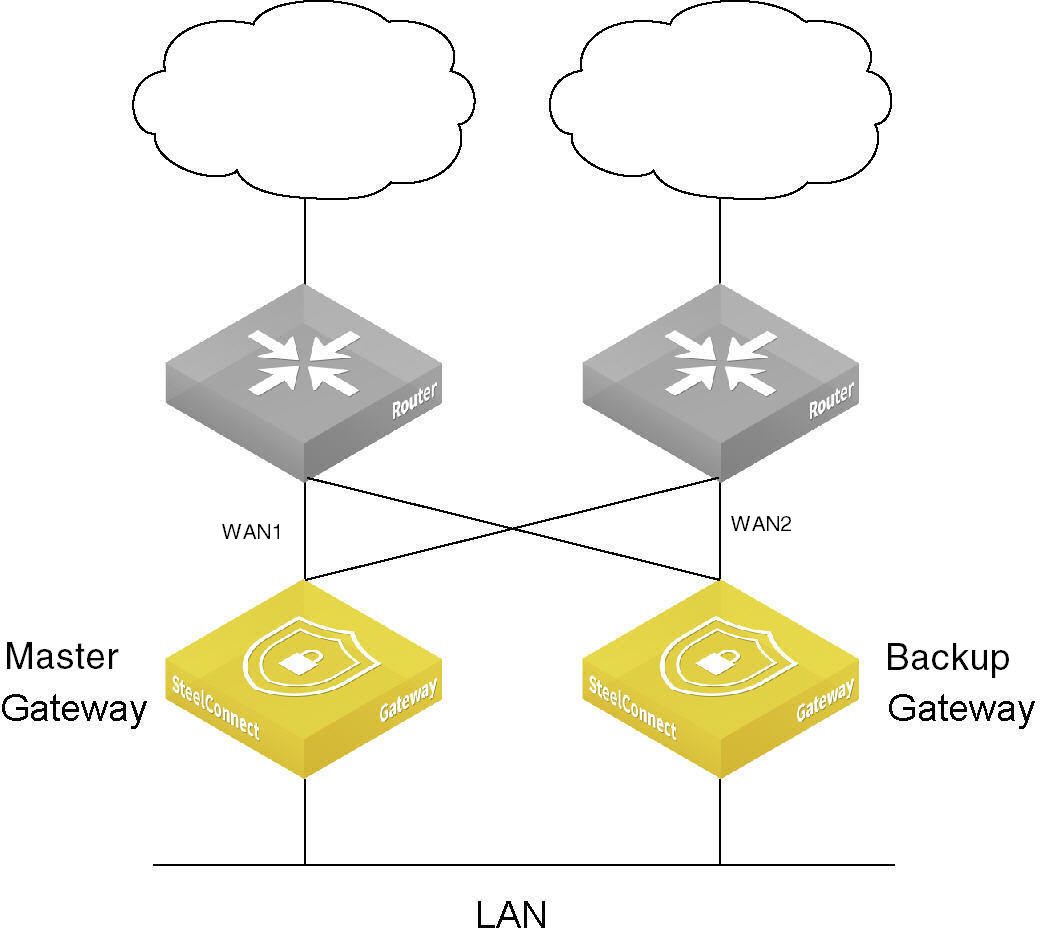

SteelConnect Manager (SCM) connects the branch gateway pair, which consists of a master gateway and a backup gateway, over the links in the management zone to monitor and route traffic.

The two gateways use active-passive mode. In active-passive mode, only the master gateway processes traffic while the backup gateway remains in standby mode, ready to take over if the master gateway fails.

HA active-passive deployment

How does branch high availability work?

SCM sends the master gateway configuration to both gateways. The first gateway to send Virtual Router Redundancy Protocol (VRRP) packets on the network becomes the master, and SCM applies the master configuration to it. No additional configuration is required on the backup gateway.
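As a simplified model of the election described above, this Python sketch makes the first gateway whose VRRP advertisement is observed the master. It is not SteelConnect code: the `Advertisement` type, gateway names, and timestamps are hypothetical, and real VRRP (RFC 5798) also weighs priorities and preemption, which the sketch ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advertisement:
    """Hypothetical record of a VRRP advertisement observed on the LAN."""
    gateway: str
    sent_at: float  # seconds since the pair powered up


def elect_roles(adverts: list[Advertisement]) -> dict[str, str]:
    """Earliest advertiser becomes master; every other gateway becomes backup.

    Models only the behavior described in the text; VRRP priority and
    preemption are deliberately left out.
    """
    if not adverts:
        raise ValueError("no VRRP advertisements observed")
    ordered = sorted(adverts, key=lambda a: a.sent_at)
    roles = {ordered[0].gateway: "master"}
    for advert in ordered[1:]:
        # setdefault keeps the master's role if it advertises again.
        roles.setdefault(advert.gateway, "backup")
    return roles


print(elect_roles([Advertisement("gw-b", 2.4), Advertisement("gw-a", 1.9)]))
# {'gw-a': 'master', 'gw-b': 'backup'}
```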

Gateways in an HA pair establish an encrypted communication channel between each other. After the channel is established, it replicates all DHCP lease releases and renewals between the master and backup gateways in both directions, so that after a failover the new master gateway doesn’t assign duplicate leases.

The gateway pair also synchronizes firewall state and connection tracking information between the master and backup gateways, which provides a stateful transition if a failover occurs.
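The replication described above can be illustrated with a minimal in-memory sketch, assuming each lease event and tracked flow is simply forwarded to the partner. The `Gateway` class, its methods, and the addresses are hypothetical; the real channel is encrypted and its protocol isn't documented here.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Gateway:
    """Hypothetical model of the state one HA partner replicates to the other."""
    name: str
    leases: dict = field(default_factory=dict)    # leased IP -> client MAC
    conntrack: set = field(default_factory=set)   # tracked flow 5-tuples
    peer: Optional["Gateway"] = field(default=None, repr=False, compare=False)

    def record_lease(self, ip: str, mac: str, replicate: bool = True) -> None:
        """Record a lease grant or renewal and mirror it to the partner."""
        self.leases[ip] = mac
        if replicate and self.peer is not None:
            self.peer.record_lease(ip, mac, replicate=False)

    def release_lease(self, ip: str, replicate: bool = True) -> None:
        """Drop a released lease locally and on the partner."""
        self.leases.pop(ip, None)
        if replicate and self.peer is not None:
            self.peer.release_lease(ip, replicate=False)

    def track_flow(self, flow: tuple, replicate: bool = True) -> None:
        """Add a connection to the firewall state table on both partners."""
        self.conntrack.add(flow)
        if replicate and self.peer is not None:
            self.peer.track_flow(flow, replicate=False)

    def next_free_ip(self, pool: str) -> str:
        """Return the first host address in `pool` that has no active lease."""
        for host in ipaddress.ip_network(pool).hosts():
            if str(host) not in self.leases:
                return str(host)
        raise RuntimeError(f"DHCP pool {pool} is exhausted")


master, backup = Gateway("master"), Gateway("backup")
master.peer, backup.peer = backup, master

master.record_lease("10.0.0.1", "aa:bb:cc:00:00:01")
backup.record_lease("10.0.0.2", "aa:bb:cc:00:00:02")  # replication works both ways
master.track_flow(("10.0.0.1", 51000, "203.0.113.10", 443, "tcp"))

# After a failover, the new master skips addresses already leased by either side.
print(backup.next_free_ip("10.0.0.0/29"))  # 10.0.0.3
```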

Gateway failover performance

A failover due to failure of the master gateway triggers within 3 to 4 seconds of the master gateway going offline. After the backup gateway assumes the master role, it can pass internet traffic in approximately 9 to 10 seconds. The AutoVPN tunnels are typically reestablished after an additional 4 to 5 seconds.
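Assuming the three phases run back to back (the text doesn't say whether they overlap), the end-to-end recovery window is simple range arithmetic. The constant names are hypothetical.

```python
# Documented timing ranges for a master-gateway failure, in seconds.
DETECTION = (3, 4)        # master goes offline until failover triggers
INTERNET_READY = (9, 10)  # new master can pass internet traffic
AUTOVPN_READY = (4, 5)    # AutoVPN tunnels reestablished


def cumulative(*phases):
    """Sum (low, high) ranges into a best-case/worst-case total."""
    return (sum(p[0] for p in phases), sum(p[1] for p in phases))


print(cumulative(DETECTION, INTERNET_READY))                 # (12, 14)
print(cumulative(DETECTION, INTERNET_READY, AUTOVPN_READY))  # (16, 19)
```

Under that assumption, internet traffic resumes about 12 to 14 seconds after the master goes offline, and AutoVPN about 16 to 19 seconds after.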

Which gateway models support high availability?

SCM supports box-to-box redundancy for these gateway models:

•SDI-130 paired with another SDI-130

•SDI-330 paired with another SDI-330

•SDI-1030 paired with another SDI-1030 (requires the use of a dedicated HA port)

You can pair two physical gateways of the same model, two shadow appliances of the same model, or one physical gateway and one shadow appliance of the same model for high availability. You can also use virtual gateways.
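The pairing rules above can be expressed as a small validation sketch. The `Appliance` type and the "physical"/"shadow" labels are hypothetical; virtual gateways and the SDI-5030 (which uses cluster-based HA) aren't modeled.

```python
from dataclasses import dataclass

# Models listed in the text as supporting box-to-box HA, mapped to whether
# the pair needs a dedicated HA port.
HA_MODELS = {"SDI-130": False, "SDI-330": False, "SDI-1030": True}
APPLIANCE_KINDS = {"physical", "shadow"}  # hypothetical labels


@dataclass(frozen=True)
class Appliance:
    model: str
    kind: str


def check_pair(a: Appliance, b: Appliance) -> list[str]:
    """Raise ValueError for an invalid pair; otherwise return extra requirements."""
    for appliance in (a, b):
        if appliance.kind not in APPLIANCE_KINDS:
            raise ValueError(f"unknown appliance kind: {appliance.kind}")
    if a.model != b.model:
        raise ValueError(f"models must match: {a.model} vs {b.model}")
    if a.model not in HA_MODELS:
        raise ValueError(f"{a.model} isn't listed as supporting box-to-box HA")
    # Any mix of physical and shadow appliances is allowed once the models match.
    return ["dedicated HA port"] if HA_MODELS[a.model] else []


print(check_pair(Appliance("SDI-1030", "physical"), Appliance("SDI-1030", "shadow")))
# ['dedicated HA port']
```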

HA features

Smart update

To minimize service interruption during a firmware upgrade for an HA pair, SCM uses the following smart update process to install firmware updates gracefully:

1. SCM notifies appliances about the availability of a new firmware image.

2. The master appliance immediately starts downloading the image. The backup appliance downloads the image over a connection proxied through the master appliance.

3. After the download of the firmware image is complete on the backup gateway, SCM instructs it to install the new firmware and reboot.

At this point, the master gateway has received the new firmware file; however, it’s still handling client traffic for the HA pair and a failover has not yet occurred.

4. After SCM receives a notification from the backup gateway that it has rebooted and is running the new firmware, SCM instructs the master gateway to install the firmware and reboot.

5. The reboot triggers a failover and the backup gateway assumes the active master role.

6. After the previous master gateway comes back online, it remains in backup mode until the active gateway triggers a failover and relinquishes the active role.
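The ordering in the steps above, and in particular the rule that the master installs only after the backup confirms the new firmware, can be sketched as follows. The dict-based gateways, the `boot_ok` failure switch, and the version strings are hypothetical; this models the sequence, not SCM's implementation.

```python
def install_and_reboot(gw: dict, boot_ok: bool = True) -> str:
    """Install the staged image and return the version reported after reboot.

    boot_ok=False simulates an install that comes back on the old image.
    """
    staged = gw.pop("staged")
    if boot_ok:
        gw["firmware"] = staged
    return gw["firmware"]


def smart_update(master: dict, backup: dict, image: str,
                 backup_boot_ok: bool = True) -> None:
    # Steps 1-2: both appliances stage the image (the backup via the master).
    master["staged"] = image
    backup["staged"] = image

    # Step 3: the backup installs and reboots; the master keeps forwarding.
    reported = install_and_reboot(backup, backup_boot_ok)

    # Step 4: the master installs only after the backup reports the new image.
    if reported != image:
        raise RuntimeError(f"{backup['name']} still reports {reported}")
    install_and_reboot(master)

    # Step 5: the master's reboot triggers a failover, so the roles swap.
    master["role"], backup["role"] = "backup", "master"
    # Step 6: nothing swaps them back; the old master stays backup.


a = {"name": "gw-a", "role": "master", "firmware": "2.11"}
b = {"name": "gw-b", "role": "backup", "firmware": "2.11"}
smart_update(a, b, "2.12")
print(a["role"], b["role"], a["firmware"], b["firmware"])  # backup master 2.12 2.12
```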

WAN uplink failover

HA protects against local WAN uplink issues such as:

•an unplugged network cable between the upstream switch port and one of the gateways, but not the other.

•an Ethernet port failure on the WAN port or corresponding upstream switch port.

Failover triggers after Internet Control Message Protocol (ICMP) pings detect that all uplinks are down. The gateway dynamically determines an appropriate upstream IP address to ping. The ICMP uplink monitoring disregards short uplink drop-outs so that a brief interruption isn’t reported as an uplink failure.
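A minimal sketch of the monitoring rule above, assuming an uplink counts as down only after several consecutive missed ICMP replies, and failover requires every uplink to be down. The `UplinkMonitor` class and the threshold of three is hypothetical; the actual probe interval, threshold, and ping-target selection aren't documented here.

```python
from collections import deque


class UplinkMonitor:
    """Report failover only when every uplink has missed N consecutive pings."""

    def __init__(self, uplinks: list, required_failures: int = 3):
        self.required = required_failures
        # Keep only the last N probe results per uplink.
        self.history = {u: deque(maxlen=required_failures) for u in uplinks}

    def record(self, uplink: str, reply_received: bool) -> None:
        self.history[uplink].append(reply_received)

    def uplink_down(self, uplink: str) -> bool:
        results = self.history[uplink]
        # A single dropped reply isn't enough; all recent probes must fail.
        return len(results) == self.required and not any(results)

    def should_fail_over(self) -> bool:
        return all(self.uplink_down(u) for u in self.history)


monitor = UplinkMonitor(["uplink1", "uplink2"])
for _ in range(3):
    monitor.record("uplink1", False)  # uplink 1 is fully down
monitor.record("uplink2", True)
monitor.record("uplink2", False)      # brief drop-out on uplink 2
print(monitor.should_fail_over())     # False
```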

WAN uplink failover performance

A WAN uplink failover triggers within 13 to 16 seconds after the uplinks are detected as down. After the backup gateway assumes the master role, it can pass internet traffic in approximately 9 to 10 seconds. The AutoVPN tunnels are typically reestablished after an additional 4 to 5 seconds.

For network stability, a failover can’t occur within 60 seconds of a previous failover. WAN uplink failover uses a 60-second dampening factor to limit the advertisements of up and down link transition states. For 60 seconds after a failover, the system suppresses subsequent failovers until it has enough time to verify the uplink state and analyze the gateway heuristics.
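The 60-second rule can be sketched as a hold-down timer: a failover request within 60 seconds of the last accepted failover is suppressed. The `FailoverDampener` class is hypothetical and models only that rule, not the uplink-state verification SCM performs during the window. (If the WAN timing phases run back to back, which is an assumption, AutoVPN would be back roughly 13 + 9 + 4 = 26 to 16 + 10 + 5 = 31 seconds after the uplinks are detected as down.)

```python
from typing import Optional


class FailoverDampener:
    """Suppress any failover requested within `hold_down` seconds of the last one."""

    def __init__(self, hold_down: float = 60.0):
        self.hold_down = hold_down
        self.last_failover: Optional[float] = None

    def request(self, now: float) -> bool:
        """Return True if a failover may proceed at time `now` (seconds)."""
        if self.last_failover is not None and now - self.last_failover < self.hold_down:
            return False  # still inside the dampening window
        self.last_failover = now
        return True


dampener = FailoverDampener()
print([dampener.request(t) for t in (0.0, 45.0, 61.0)])  # [True, False, True]
```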

Uplinks are shared between the master and backup gateways. For example, uplink 1 and (optionally) uplink 2 are physically connected to both the master and backup gateways, so if an upstream outage occurs, both gateways are affected. To provide continued connectivity after an upstream outage, you can create a traffic path rule that selects a secondary path. For details, see “To create a traffic path rule.”

Prerequisites

Before configuring high availability, check these requirements and recommendations.

Gateway configuration

Both gateways must be:

•cabled on the LAN side.

•cabled directly to each other through the dedicated port (1030 gateways, and virtual gateways that use a dedicated port).

We recommend cabling both gateways identically for redundancy.

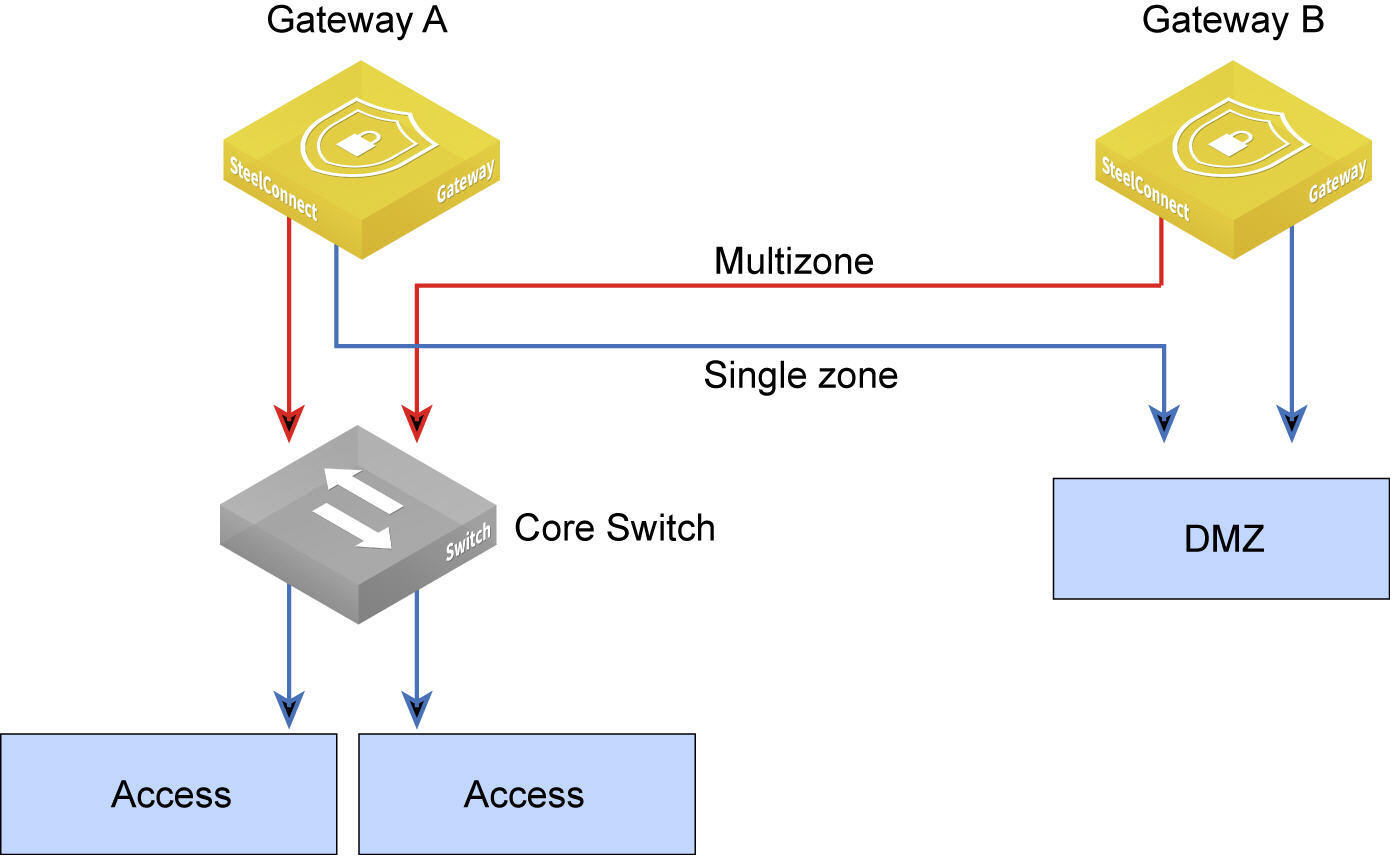

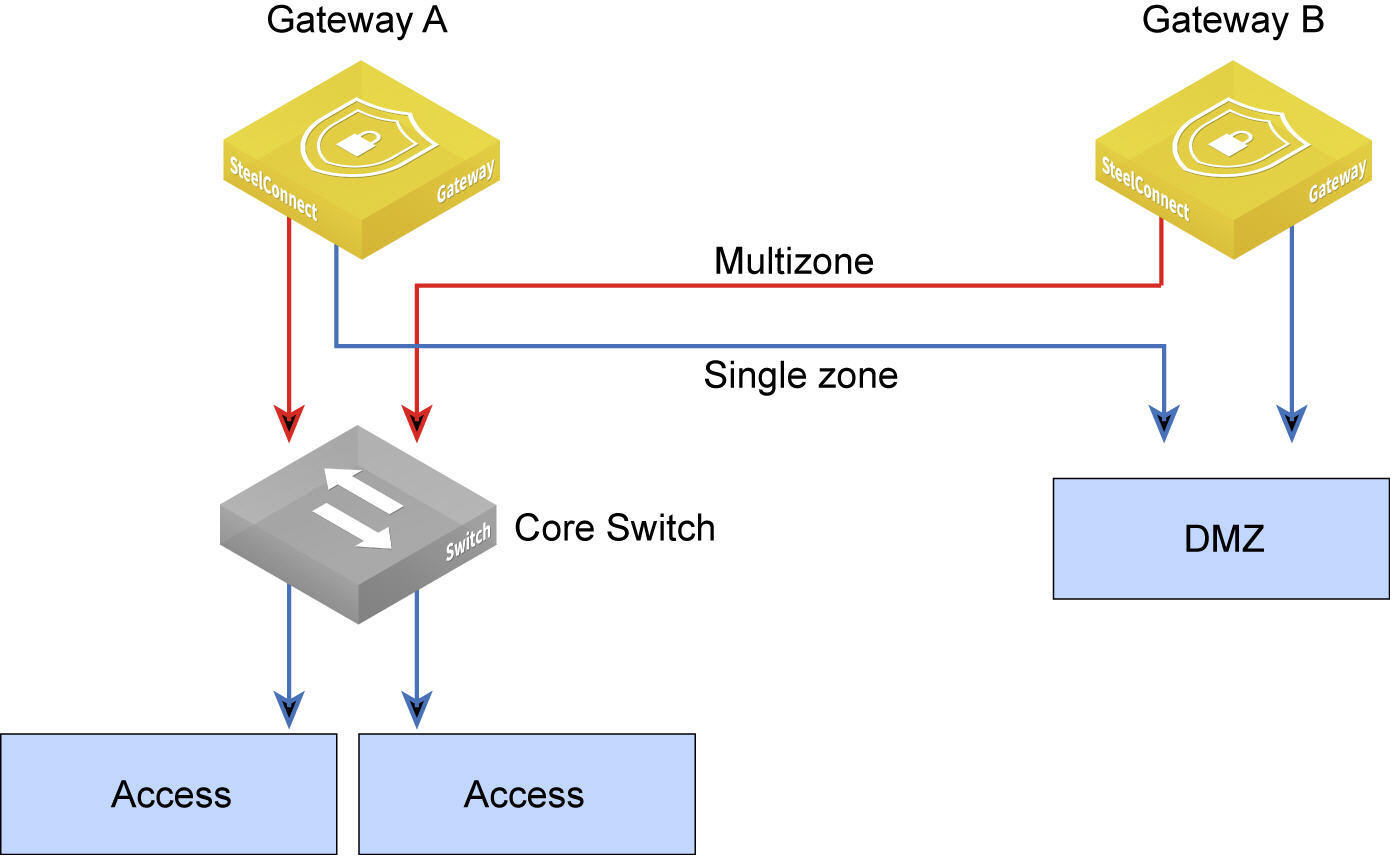

The backup gateway communicates with SCM through a default route using the site’s management zone. Each site has only one management zone. HA requires management zone connectivity between the two gateways: loss of management zone connectivity causes a “split brain” state in which both HA gateways become the master. You can provide management zone connectivity either explicitly, through single-zone ports with the management zone selected, or implicitly, through a multizone port. See “Port configuration.”
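To show why losing management zone connectivity leads to split brain, here is a hypothetical keepalive model: the backup promotes itself when it stops hearing the partner, and nothing demotes the existing master. The `HaNode` class and the 3-second timeout are illustrative assumptions, not SteelConnect internals.

```python
class HaNode:
    """Hypothetical keepalive logic: the backup promotes itself if the partner goes silent."""

    def __init__(self, name: str, role: str, timeout: float = 3.0):
        self.name, self.role, self.timeout = name, role, timeout
        self.last_heard = 0.0

    def receive_keepalive(self, now: float) -> None:
        self.last_heard = now

    def tick(self, now: float) -> None:
        if self.role == "backup" and now - self.last_heard > self.timeout:
            self.role = "master"


def simulate(link_up: bool, seconds: int = 10) -> tuple:
    a, b = HaNode("gw-a", "master"), HaNode("gw-b", "backup")
    for t in range(seconds):
        if link_up:  # keepalives cross the management zone only when it's up
            a.receive_keepalive(t)
            b.receive_keepalive(t)
        a.tick(t)
        b.tick(t)
    return a.role, b.role


print(simulate(link_up=True), simulate(link_up=False))
# ('master', 'backup') ('master', 'master')
```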

Switch configuration

When HA is configured, never plug a device other than a switch directly into a gateway. For HA failover to work properly, place a switch between the devices and the HA pair so that the devices can reach whichever gateway is currently the master.

You can connect one or more switches directly to the HA pair; however, keep in mind that the HA pair will not forward Layer 2 traffic among the connected switches. To forward Layer 2 traffic, you must configure a core switch as a Layer 2 aggregation layer.

Make sure that the gateway ports connected to the switches are configured as either single-zone or multizone ports, based on your requirements.

HA switch configuration

Individual and mirrored uplinks

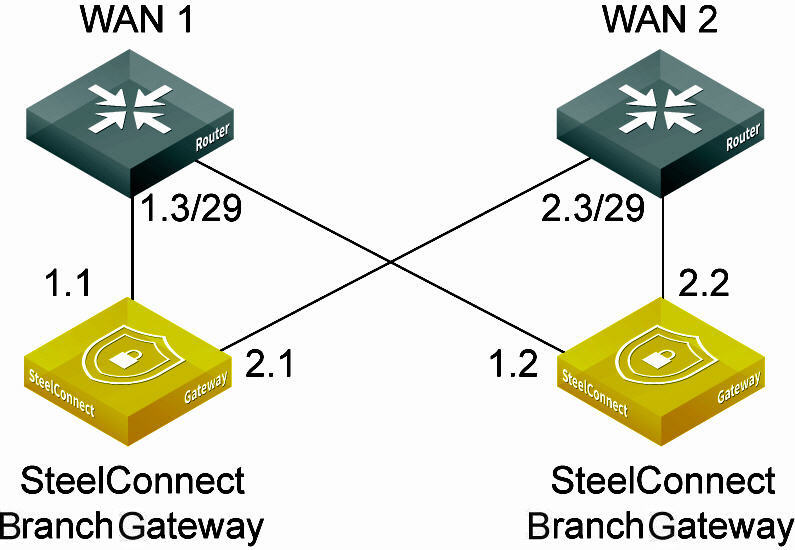

Mirrored uplinks configure identical ports for the HA pair. Alternatively, you can assign an individual uplink to each gateway, with the upstream router providing a separate port for each member of the HA pair.

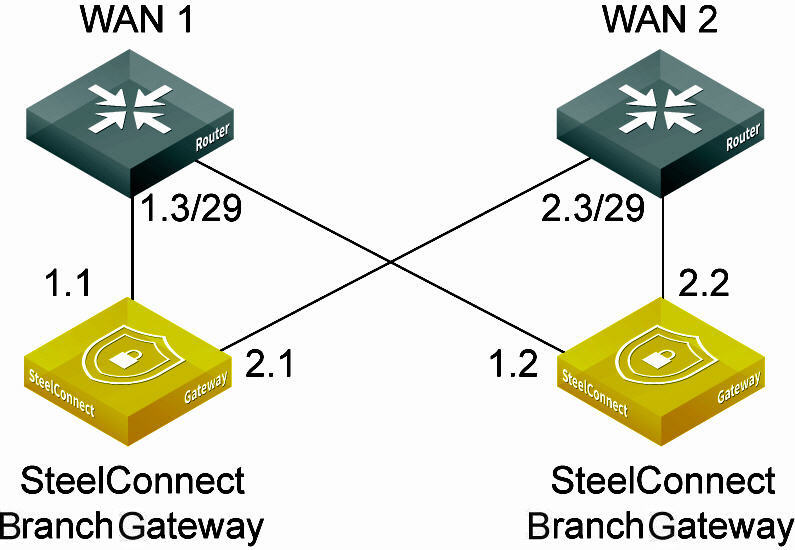

Individual uplink IP addresses

Individual uplinks don’t require a WAN-side switch, as each uplink has its own Layer 3 configuration.

When should I use nonmirrored, individual uplinks?

We recommend using mirrored uplinks. In an active-passive HA configuration, the backup gateway is passive, with all uplinks and LAN ports down, so its uplinks aren’t actively routing traffic. However, for deployments where the WAN edge equipment can provide Layer 3 ports for greater flexibility, you can associate individual uplinks with a WAN.

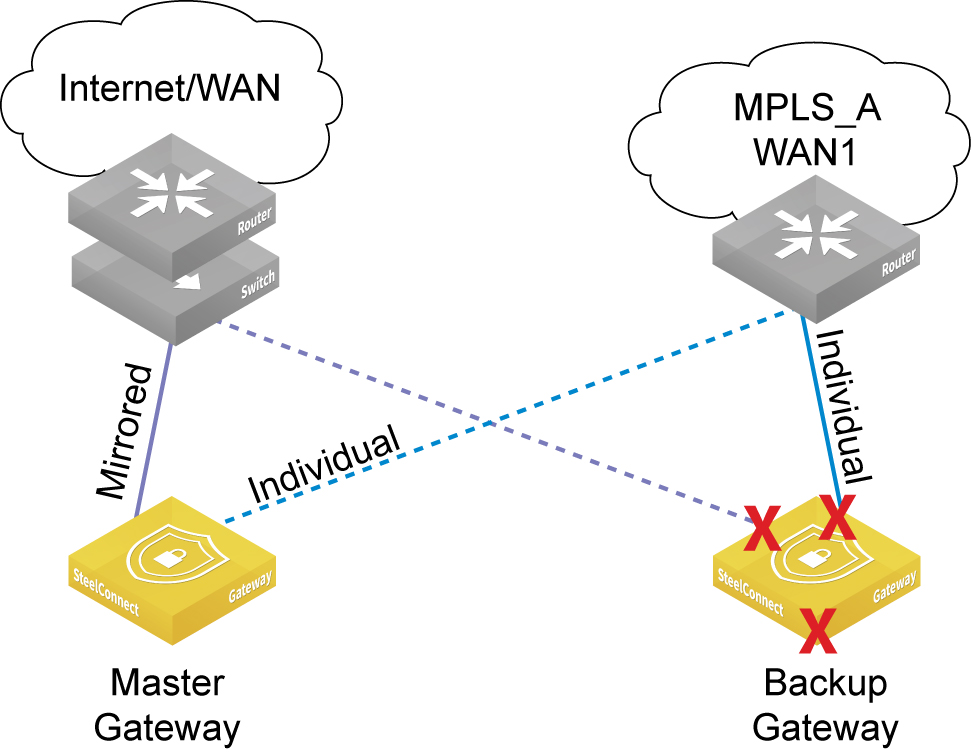

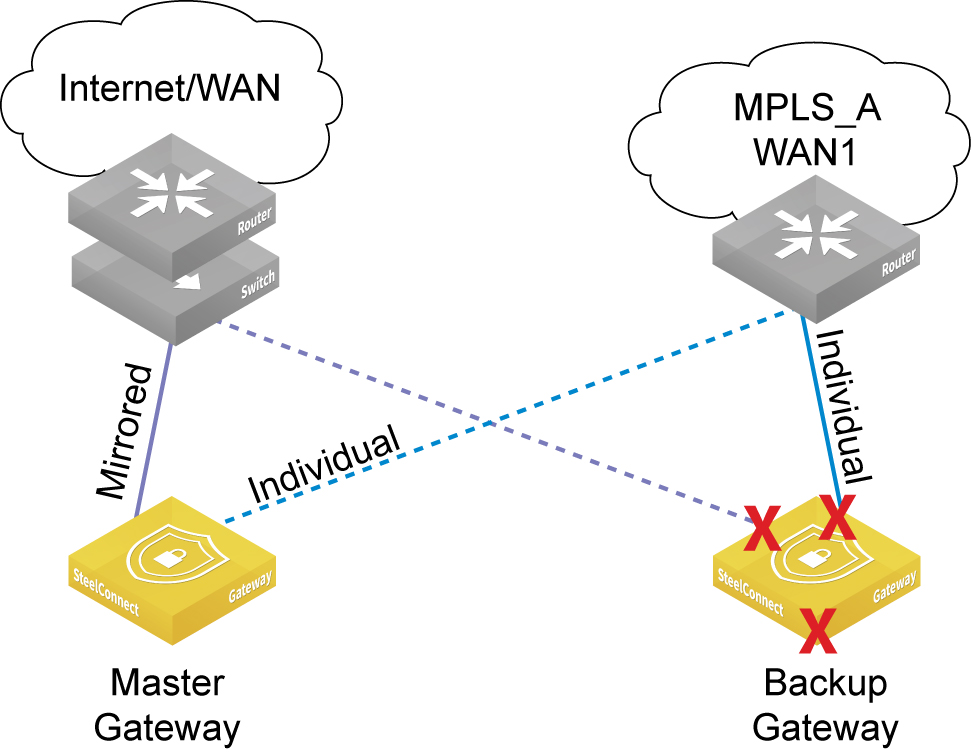

The figure “Mirrored and individual uplinks” shows a deployment example that uses one mirrored uplink and two individual uplinks.

Mirrored and individual uplinks

In “Mirrored and individual uplinks,” the HA pair has connectivity to two WANs: the internet and an MPLS network. Three uplinks are configured for the HA pair. The first is a single internet uplink in mirrored mode, because the ISP provides only a single port on its router. A WAN-side switch is necessary to connect the mirrored uplink ports on both appliances to the single port on the internet router. The MPLS provider provides two Layer 3 ports on its PE router. For each port on the MPLS router, an individual, nonmirrored uplink is created with a Layer 3 configuration for that port, and each of these uplinks is assigned to a different partner in the HA pair. No switch is necessary on the MPLS WAN when using individual uplinks, because each uplink has its own unique Layer 3 configuration.
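The example deployment can be captured as a small data model. This sketch assumes, as in the example, that each mirrored uplink lands on a single upstream router port, so a WAN needs a WAN-side switch exactly when it carries a mirrored uplink. The `Uplink` type, uplink names, and gateway names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Uplink:
    name: str
    wan: str
    mirrored: bool
    gateway: Optional[str] = None  # set only for individual uplinks

    def __post_init__(self):
        # Individual uplinks belong to exactly one HA partner; mirrored ones to both.
        if self.mirrored == (self.gateway is not None):
            raise ValueError(f"{self.name}: set gateway only for individual uplinks")


def wan_side_switch_needed(uplinks: list) -> dict:
    """Per WAN: True if any uplink on it is mirrored onto a single router port."""
    needed: dict = {}
    for u in uplinks:
        needed[u.wan] = needed.get(u.wan, False) or u.mirrored
    return needed


example = [
    Uplink("internet-1", "Internet", mirrored=True),
    Uplink("mpls-a", "MPLS", mirrored=False, gateway="gw-a"),
    Uplink("mpls-b", "MPLS", mirrored=False, gateway="gw-b"),
]
print(wan_side_switch_needed(example))  # {'Internet': True, 'MPLS': False}
```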

The SteelHead SD 570-SD, 770-SD, and 3070-SD gateway models don’t support dedicated ports or mirrored uplinks.

Port configuration

We recommend that you configure the LAN ports so that each gateway mirrors the other; however, you can configure individual, nonmirrored LAN ports per gateway if required. Make sure that the physical cabling matches the port configuration on each gateway.

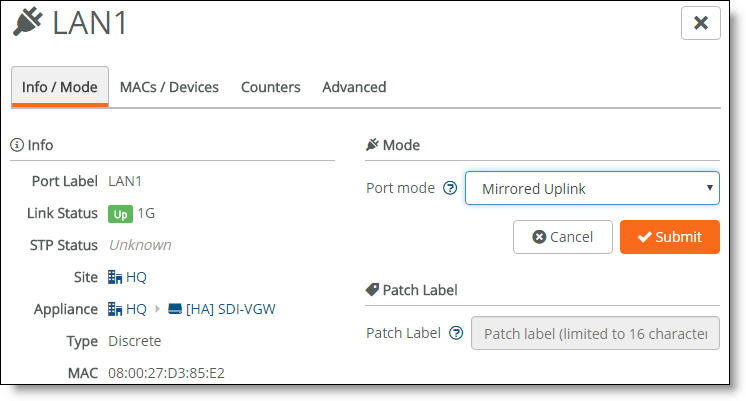

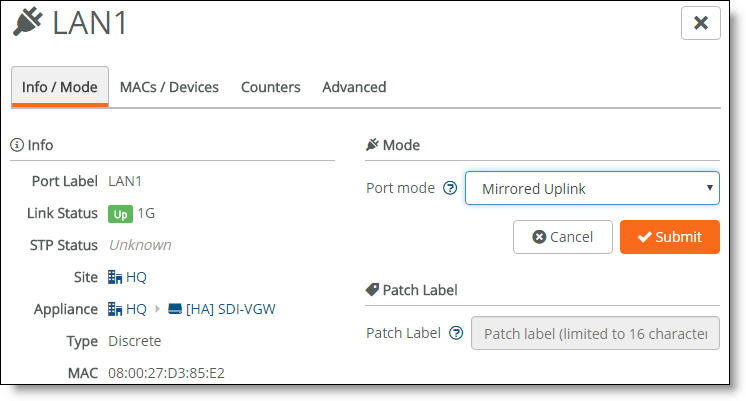

To set the LAN port operation for HA

1. Choose Ports and select a LAN port.

LAN port mode

2. Under Mode, select a port mode from the drop-down list.

•Singlezone - Enables a single zone. After selecting Singlezone, select the zone for the port to carry.

•Uplink - Enables an individual uplink on each partner in an HA pair. After selecting Uplink, select the uplink to use. See “Individual uplink IP addresses.”

•Mirrored Uplink - Configures identical ports for the HA pair. On one node in the HA pair, configure the port by selecting Uplink under Mode and then selecting the uplink to mirror. On the HA partner, select the corresponding port and select Mirrored Uplink. The Mirrored Uplink option is available on the HA partner only when the port number matches the partner node’s port number. Select the uplink used on the partner node. SCM configures the port identically to the corresponding port on its partner HA gateway.

When MAC cloning is enabled, mirrored uplinks inherit a virtual MAC address from one of the HA partners. SCM overrides and disables any virtual MAC address on all mirrored uplinks, and then sets the virtual MAC address on one of the HA nodes (SCM determines which one) to the MAC address of the corresponding port on the other node.

Port modes aren’t available on ports configured as a dedicated port for HA.

3. Click Submit.
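The Mirrored Uplink rules in the procedure above (matching port numbers, a source port in Uplink mode, and MAC cloning copying one node's MAC to the other) can be sketched like this. The `Port` type, `mirror_port` helper, port numbers, and MAC addresses are hypothetical; SCM chooses which node receives the cloned MAC, whereas this sketch always picks the partner.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Port:
    number: int
    mode: str = "singlezone"  # "singlezone", "uplink", or "mirrored"
    uplink: Optional[str] = None
    mac: str = ""
    virtual_mac: Optional[str] = None


def mirror_port(source: Port, partner_ports: Dict[int, Port], mac_cloning: bool) -> Port:
    """Make the partner's port with the same number a Mirrored Uplink of `source`."""
    if source.mode != "uplink" or source.uplink is None:
        raise ValueError("source port must be in Uplink mode with an uplink selected")
    target = partner_ports.get(source.number)
    if target is None:
        # The option is only offered on the port whose number matches.
        raise ValueError(f"partner has no port {source.number} to mirror onto")
    target.mode, target.uplink = "mirrored", source.uplink
    if mac_cloning:
        # Clear virtual MACs on the mirrored pair, then give one node's port
        # the other node's MAC (always the partner in this sketch).
        source.virtual_mac = target.virtual_mac = None
        target.virtual_mac = source.mac
    return target


gw_a = {3: Port(3, mode="uplink", uplink="internet-1", mac="00:11:22:33:44:03")}
gw_b = {3: Port(3, mac="00:11:22:33:44:83")}
port = mirror_port(gw_a[3], gw_b, mac_cloning=True)
print(port.mode, port.uplink, port.virtual_mac)  # mirrored internet-1 00:11:22:33:44:03
```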

The Spanning Tree Protocol (STP) prevents network malfunction by blocking ports that can cause loops in redundant network paths. SteelConnect implements the 802.1w Rapid Spanning Tree Protocol (RSTP) defined in the IEEE 802.1D-2004 specification. STP is not supported on branch gateways configured for high availability. Because STP isn’t available, we recommend one of two deployment options: use only one multizone or single-zone LAN port per gateway to the LAN switch, to avoid Layer 2 loops and MAC address flapping, or disable STP on all LAN-side switch ports associated with the gateways.

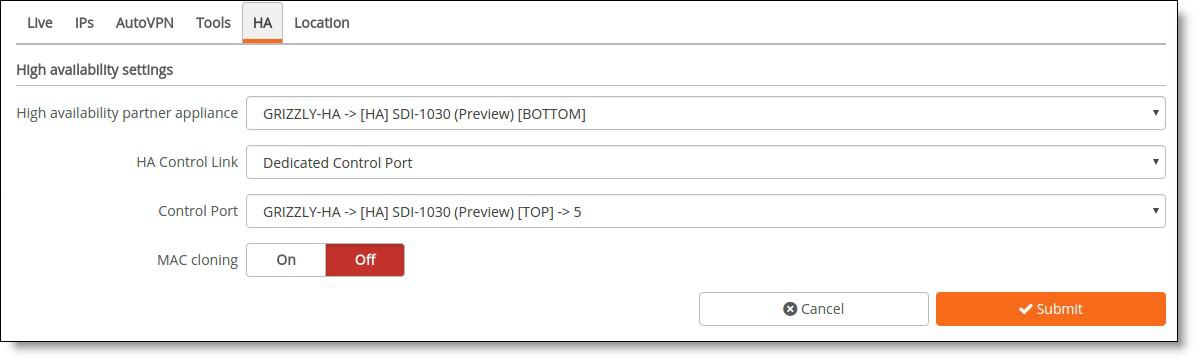

Dedicated port configuration for 1030 and virtual gateways

Dedicated port mode designates a single LAN port as the HA control port. This port is used for routing SCM traffic for backup gateways.

Configure a dedicated port when you are setting up high availability between a 1030 gateway pair. A dedicated port is required for 1030 gateway high availability to avoid loops and spanning tree issues.

You can also configure a dedicated port for a virtual gateway, but it isn’t required. For a virtual gateway, you can use either the management zone or a dedicated port for HA control.

You must cable the gateways to each other directly using dedicated ports. Don’t add a switch in between the gateways.

You can’t configure a dedicated port for 130 and 330 gateways.
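The dedicated-port rules in this section can be summarized as a validation sketch. The policy table, the "virtual" label, and the `validate_control_link` function are hypothetical; they encode only what the text states.

```python
# Dedicated HA control port rules as stated in the text.
DEDICATED_PORT_POLICY = {
    "SDI-1030": "required",
    "virtual": "optional",  # hypothetical label for virtual gateways
    "SDI-130": "unsupported",
    "SDI-330": "unsupported",
}


def validate_control_link(model: str, use_dedicated: bool, via_switch: bool = False) -> None:
    """Raise ValueError if the HA control link choice breaks a documented rule."""
    policy = DEDICATED_PORT_POLICY.get(model)
    if policy is None:
        raise ValueError(f"no dedicated-port rule listed for {model}")
    if policy == "required" and not use_dedicated:
        raise ValueError(f"{model} HA requires a dedicated control port")
    if policy == "unsupported" and use_dedicated:
        raise ValueError(f"{model} can't use a dedicated control port")
    if use_dedicated and via_switch:
        raise ValueError("dedicated ports must be cabled directly, without a switch")


validate_control_link("SDI-1030", use_dedicated=True)  # passes silently
```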

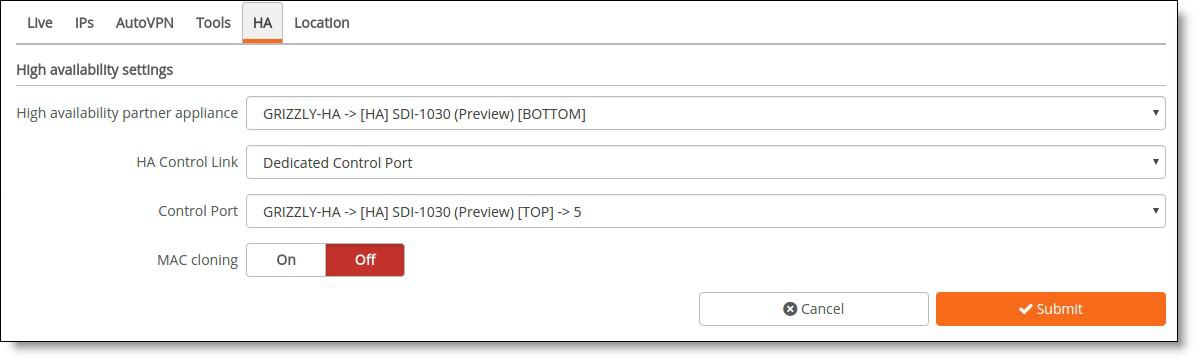

To configure a dedicated port

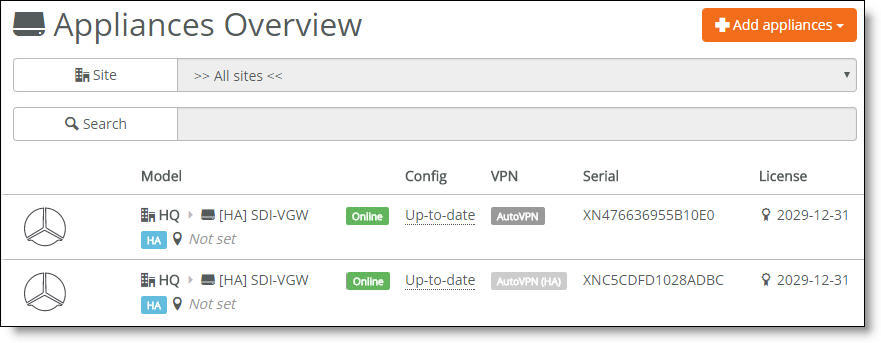

1. Choose Appliances > Overview.

2. Select the fully configured master gateway.

3. Select the HA tab.

4. Next to HA Control Link, select Dedicated Control Port from the drop-down list. The dedicated control port isn’t available on 130 and 330 gateways.

5. Select the control port from the drop-down list. This port must be cabled directly to the other 1030. When you dedicate a port to a gateway, it’s no longer available for use with other gateways.

Dedicated port for a 1030 gateway

6. Click Submit.

How do I configure an HA pair?

Because SCM mirrors all uplink and gateway assignments for HA partners, you only need to configure the master gateway. You simply add a second gateway, cable it, and plug it in. After registering the backup gateway with SCM, you select it as the master’s backup and specify its IP address.

To form a gateway pair for high availability

1. Add two gateways of the same model to a site.

2. Configure the master gateway with zones and uplinks. For details, see “Creating sites” and “Creating uplinks.” Adding a second uplink to an HA pair is optional, because the HA partners can share one uplink.

If you use mirrored uplinks, you don’t have to configure the backup gateway, because it will inherit the configuration from the master gateway.

The backup gateway is configured with the management zone IP address only.

Unless you configure a dedicated control port (1030 and virtual gateways only), there is no dedicated connection between the two gateways. The backup gateway has a default route pointing to the master gateway through the site’s management zone. The default route provides access to SCM to receive firmware updates, configuration changes, and so on.

3. Choose Appliances > Overview.

4. Select the fully configured master gateway.

5. Select the HA tab.

6. Select the gateway to use as the backup.

7. Under HA management IP, select the IP address for the master gateway.

8. Under HA partner management IP, select the IP address for the backup gateway.

These become the two interface IP addresses the management zone uses for the keepalive daemon between the HA pair.

The default IP address for the management zone (typically the .1 address) becomes the virtual IP address. Whichever gateway becomes the master owns the virtual IP address. The virtual IP address only applies to the management zone.

9. Click Submit. You don’t need to reboot SCM.
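The management addressing described in steps 7 and 8 can be checked with Python's standard `ipaddress` module. This sketch assumes, as the text says is typical, that the zone's .1 address is the default and becomes the virtual IP; the function name and the addresses are hypothetical.

```python
import ipaddress


def management_addresses(zone: str, master_ip: str, partner_ip: str) -> dict:
    """Derive HA addressing for a management zone whose first host is the virtual IP."""
    net = ipaddress.ip_network(zone)
    vip = next(net.hosts())  # typically the .1 address
    ips = {ipaddress.ip_address(master_ip), ipaddress.ip_address(partner_ip)}
    if len(ips) != 2:
        raise ValueError("master and partner need distinct management IPs")
    for ip in ips:
        if ip not in net:
            raise ValueError(f"{ip} is outside {net}")
        if ip == vip:
            raise ValueError(f"{ip} is reserved as the virtual IP")
    return {"virtual_ip": str(vip), "master": master_ip, "partner": partner_ip}


print(management_addresses("192.168.50.0/24", "192.168.50.2", "192.168.50.3"))
# {'virtual_ip': '192.168.50.1', 'master': '192.168.50.2', 'partner': '192.168.50.3'}
```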

What impact does a failover from a backup to a master gateway have on the uplinks?

High availability supports all uplink types. When an HA pair switches a backup gateway over to a master gateway, the uplinks are impacted differently, depending on the uplink type:

•PPPoE - All connections are reestablished. The public IP address might change.

•Static IP Address - No impact.

•DHCP client - An optional virtual media access control (MAC) cloning feature is available to support addressing on the WAN interfaces. This feature clones the MAC address on the WAN uplinks for both interfaces in the HA pair. The backup gateway then uses the cloned MAC from the master gateway. This feature is useful when using a cable modem/router as an uplink and an ISP expects a consistent MAC address. The ISP can block access if it receives traffic from an unknown MAC address. This feature is disabled by default.

The cloned MAC address is also used during failover to update the backup gateway with a new virtual MAC address. Without MAC cloning enabled, AutoVPN tunnels can take longer to reestablish after a failover. For details on failover performance, see “Gateway failover performance.”

MAC cloning applies only to mirrored uplinks. If you require specific MAC addresses on nonmirrored, individual uplinks, use the virtual MAC address feature directly. For details, see “To override a port’s default MAC address.”
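The per-uplink-type impact described above fits in a small lookup. The type strings and the `failover_impact` function are hypothetical; the returned messages paraphrase the text.

```python
def failover_impact(uplink_type: str, mac_cloning: bool = False, mirrored: bool = True) -> str:
    """Summarize how a backup-to-master switchover affects one uplink."""
    if uplink_type == "pppoe":
        return "all connections reestablished; public IP address might change"
    if uplink_type == "static":
        return "no impact"
    if uplink_type == "dhcp":
        # MAC cloning applies only to mirrored uplinks.
        if mac_cloning and mirrored:
            return "new master uses the cloned MAC; ISP sees a consistent MAC"
        return "ISP sees a new MAC and may block it; AutoVPN can take longer"
    raise ValueError(f"unknown uplink type: {uplink_type}")


print(failover_impact("dhcp", mac_cloning=True))
# new master uses the cloned MAC; ISP sees a consistent MAC
```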

To enable MAC cloning

1. Choose Appliances > Overview.

2. Select the fully configured master gateway.

3. Select the HA tab.

4. Next to MAC cloning, click On.

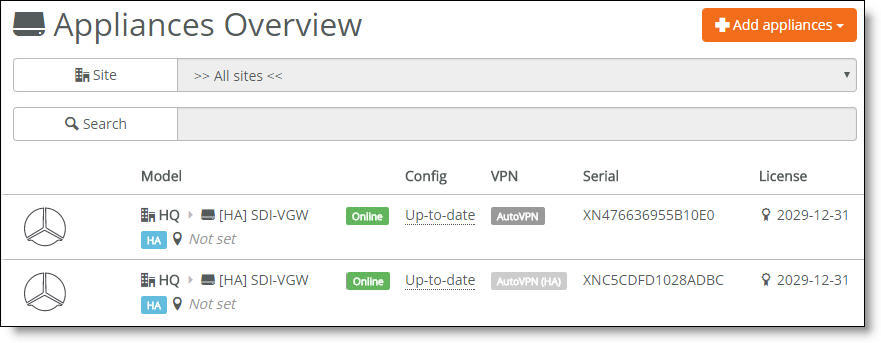

Monitoring a high availability pair

SCM displays all gateways belonging to a high availability pair with a blue HA icon in all views. After the gateway reports its HA state to SCM, the icon indicates whether it is the master or the backup.

The pair stays together in appliance lists to make it clear that the gateway is a partner that belongs in an HA pair. SCM manages both gateways in a pair as one.

Gateways in an HA pair appear together in all views

When an HA pair is separated, the gateways continue running with the same port settings, AutoVPN settings, and so on, that they used in the HA pair. SCM unmirrors the uplinks, so one gateway typically no longer has an uplink associated with it.

Data center high availability overview

High availability (HA) maintains uninterrupted service for a data center 5030 gateway cluster in the event of a power, hardware, software, or link failure. The SteelConnect Manager (SCM) connects three or more 5030 gateways (nodes) to monitor and route traffic. Configuring high availability between 5030 nodes provides network redundancy and reliability.

The SteelConnect data center solution uses the notation n + k to describe the engineered capacity (n) and resiliency (k) of nodes in a high-availability solution.

Because redundancy is critical, the minimum 5030 high-availability cluster is deployed in an n + k arrangement of 2 + 1.
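The n + k notation reduces to simple arithmetic: n + k nodes in total, k node failures tolerated, and n nodes' worth of engineered capacity. In this sketch `per_node_capacity` is a unit-agnostic placeholder, because the text gives no per-node throughput figure; the function name is hypothetical.

```python
def cluster_plan(n: int, k: int, per_node_capacity: float) -> dict:
    """Size an n + k cluster; the text states the minimum is 2 + 1."""
    if n < 2 or k < 1:
        raise ValueError("the minimum 5030 HA cluster is 2 + 1")
    return {
        "nodes": n + k,
        "failures_tolerated": k,
        "engineered_capacity": n * per_node_capacity,
    }


print(cluster_plan(2, 1, per_node_capacity=1.0))
# {'nodes': 3, 'failures_tolerated': 1, 'engineered_capacity': 2.0}
```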

To increase throughput, you can scale out the deployment by adding more active and spare 5030 nodes.

Data center redundancy

A cluster is made up of multiple 5030 nodes in a single data center. In an out-of-path deployment, the data center cluster is deployed on the server side. You can achieve resiliency by deploying at least three data center 5030 nodes out-of-path at one site. In a cluster of three 5030 nodes, all three of the nodes actively handle traffic. A 2 + 1 cluster is a three-appliance active quorum that tolerates one complete 5030 gateway failure and remains operational.

High availability ensures no single component failure can bring down an entire cluster. Failure handling is tied to reliable semantics to detect a downed cluster node or service node. Failure recovery is initiated based on the failure notification.

A healthy cluster automatically enables data center high availability. There is no need to enable high availability after creating a cluster. For details, see “Creating clusters.”

How does data center high availability work?

Each 5030 node in the cluster is individually connected to SCM. SCM sends the configuration to every 5030 node in the cluster.

Node failure

Removing or upgrading a cluster node causes connections on that node to fail over and tunnels from affected data centers to reconnect.

After a node failure, a cluster rebalances the active traffic load to resume traffic flow through the nodes in under a minute.

When an active node fails, traffic flow rebalancing occurs automatically. Branch gateways handled by the failed node reconnect to the newly assigned active node. The cluster health is degraded but remains operational.
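The text doesn't specify how the cluster chooses new owners for the failed node's branch gateways. As a stand-in, this sketch assigns each orphaned branch to the currently least-loaded surviving node. The algorithm, node names, and branch names are all hypothetical.

```python
def rebalance(assignments: dict, failed: str) -> dict:
    """Move the failed node's branch gateways onto the surviving nodes."""
    survivors = {node: list(branches) for node, branches in assignments.items()
                 if node != failed}
    if not survivors:
        raise RuntimeError("no surviving nodes to take over")
    for branch in assignments.get(failed, []):
        # Least-loaded survivor wins; ties go to the first node listed.
        target = min(survivors, key=lambda node: len(survivors[node]))
        survivors[target].append(branch)
    return survivors


before = {"dc-1": ["br-1", "br-2"], "dc-2": ["br-3"], "dc-3": ["br-4", "br-5"]}
print(rebalance(before, "dc-3"))
# {'dc-1': ['br-1', 'br-2', 'br-5'], 'dc-2': ['br-3', 'br-4']}
```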

VM failure

The control virtual machine (CVM) manages appliance startup, licenses, initial configuration, and interface addressing. CVMs are interconnected through data center Layer 3 connectivity and represent an entire data center cluster as a combined manageable entity.

A CVM failure triggers node high-availability failover. A CVM fails if it crashes, panics, hangs, stops execution, or shuts down. The recovery attempted depends on the type of CVM failure. For panics, hangs, and stops, the CVM is restarted. For crashes and shutdowns, the CVM is reinstantiated.

CVM recovery is attempted three times with a five-second wait period between each recovery attempt. If the CVM doesn’t recover after three attempts, the 5030 node is rebooted. The 5030 node also reboots after the CVM encounters any errors during the recovery process.
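The recovery policy above (restart versus reinstantiate by failure type, three attempts with a five-second wait between them, then a node reboot, and an immediate reboot on a recovery error) can be sketched with injected callbacks. The `recover_cvm` function and callback names are hypothetical; `sleep` is injectable so the example runs instantly.

```python
import time
from typing import Callable

RECOVERY_ACTION = {
    "panic": "restart", "hang": "restart", "stop": "restart",
    "crash": "reinstantiate", "shutdown": "reinstantiate",
}


def recover_cvm(failure: str, attempt: Callable[[str], bool],
                reboot_node: Callable[[], None], attempts: int = 3,
                wait_s: float = 5.0,
                sleep: Callable[[float], None] = time.sleep) -> bool:
    """Return True if the CVM recovered; otherwise reboot the node and return False."""
    action = RECOVERY_ACTION[failure]
    for i in range(attempts):
        try:
            if attempt(action):
                return True
        except Exception:
            # Any error during the recovery process reboots the node immediately.
            reboot_node()
            return False
        if i < attempts - 1:
            sleep(wait_s)  # wait only between attempts
    reboot_node()
    return False


calls = []
ok = recover_cvm("hang", attempt=lambda a: calls.append(a) or False,
                 reboot_node=lambda: calls.append("reboot"),
                 sleep=lambda s: calls.append(f"wait {s}"))
print(ok, calls)
# False ['restart', 'wait 5.0', 'restart', 'wait 5.0', 'restart', 'reboot']
```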

eBGP and high availability

The external Border Gateway Protocol (eBGP) is used when a tunnel endpoint (TEP) moves from one 5030 node to another during a node failover. When a 5030 node owning a TEP fails, the cluster transfers the TEP from the previous active node to a spare node. The spare node becomes active and now owns the TEP. It advertises the TEP into eBGP so it can attract traffic to itself.

All data center 5030 nodes must use a private autonomous system number (ASN) to determine the best path between two points and also to prevent looping. But the ASN also comes into play during a failover. The 5030 node uses the AS number as follows:

•In a steady, functioning state, a 5030 node prepends the ASN three times in its TEP advertisement. This creates an AS path length of three. Because it’s the only path for the TEP, it becomes the best route.

•After a failover, a 5030 node becomes the new owner of a tunnel endpoint. It advertises its ASN once, which results in a route with the shortest AS path. This causes its route advertisement to win over any preexisting, longer path advertisements because it has an AS length of one. This route advertisement method improves network convergence time, speeding up the failover.
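The AS-path behavior above can be illustrated by comparing the two advertisements on AS-path length alone. Real BGP best-path selection (RFC 4271) evaluates other attributes first, so this sketch models only the tie-breaker the text relies on. The `Route` type, TEP prefix, node names, and ASN are hypothetical; 64512 is taken from the 16-bit private ASN range (64512 to 65534).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    as_path: tuple


def advertise(prefix: str, node: str, asn: int, after_failover: bool) -> Route:
    """Steady state prepends the ASN three times; a new TEP owner advertises it once."""
    return Route(prefix, node, (asn,) if after_failover else (asn,) * 3)


def best_route(routes: list) -> Route:
    """Pick the shortest AS path (the only selection step modeled here)."""
    return min(routes, key=lambda r: len(r.as_path))


ASN = 64512  # hypothetical private ASN
stale = advertise("10.200.0.10/32", "node-1", ASN, after_failover=False)
fresh = advertise("10.200.0.10/32", "node-3", ASN, after_failover=True)
print(best_route([stale, fresh]).next_hop)  # node-3
```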

Which models support data center high availability?

SCM supports box-to-box redundancy for a 5030 node paired with two other 5030 nodes.

Switch and port configuration

Upgrading a data center cluster

This topic describes the firmware upgrade prerequisites for a data center cluster.

Prerequisites

Before starting the rolling upgrade, make sure each 5030 node is:

•in a healthy cluster of three or more nodes. To check the cluster health, choose Datacenters > Clusters. The cluster health status must be “Healthy.” If the cluster health status is “Unhealthy,” see “Secure overlay tunnels.”

•showing the firmware version status “Firmware upgrade,” indicating that a new firmware version has been downloaded to the appliance but the firmware version hasn’t been updated yet. To view the status, choose Appliances > Overview.

•using the option to apply firmware upgrades immediately. To verify, choose Organization and select the Maintenance tab. Next to Apply firmware upgrades immediately, check that the On button is green.

To upgrade a 5030 node cluster

1. Choose Datacenters > Clusters.

2. Select the cluster and select the Settings tab.

3. Select a 5030 cluster member, click the trash can icon, and click Submit to remove the node from the cluster.

After the node is separated from the cluster, it reboots with the new firmware version.

After a successful upgrade, the appliance status indicates that the appliance is online with an up-to-date configuration.

If the appliance is online, but the status indicates that the upgrade has failed, select the 5030 node. Click Actions, select Retry Upgrade, and click Confirm.

After the node is successfully upgraded, you need to add it back into the cluster.

4. Choose Datacenters > Clusters.

5. Select the cluster and select the Settings tab.

6. Select the upgraded 5030 node from the drop-down list to add it back into the cluster.

All 5030 nodes in the cluster return to online status.

7. Upgrade the other 5030 nodes in the cluster, starting with step 1.
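The rolling procedure above amounts to a loop: confirm health, remove one node, let it upgrade (retrying on failure), add it back, and repeat. In this sketch `upgrade` and `cluster_health` are hypothetical callbacks standing in for the SCM UI actions, checking health before each removal and allowing a single Retry Upgrade are assumptions, and the firmware-staging and "apply immediately" prerequisites aren't modeled.

```python
from typing import Callable, List


def rolling_upgrade(cluster: List[str], upgrade: Callable[[str], bool],
                    cluster_health: Callable[[], str]) -> List[str]:
    """Upgrade cluster nodes one at a time; return the nodes in upgrade order."""
    if len(cluster) < 3:
        raise RuntimeError("a rolling upgrade needs a cluster of three or more nodes")
    members = list(cluster)
    upgraded = []
    for node in cluster:
        if cluster_health() != "Healthy":
            raise RuntimeError(f"cluster unhealthy; stopping before {node}")
        members.remove(node)  # step 3: remove the node; it reboots on new firmware
        # A failed upgrade gets one "Retry Upgrade" (retry count is an assumption).
        if not upgrade(node) and not upgrade(node):
            raise RuntimeError(f"{node} failed to upgrade; remaining members: {members}")
        members.append(node)  # steps 4-6: add the upgraded node back
        upgraded.append(node)
    return upgraded


print(rolling_upgrade(["n1", "n2", "n3"], lambda n: True, lambda: "Healthy"))
# ['n1', 'n2', 'n3']
```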