QoS Concepts

This section describes the concepts of Riverbed QoS. It includes the following topics:

Overview of QoS Concepts

QoS Rules

QoS Classes

QoS Profiles

Overview of QoS Concepts

In RiOS v9.0, Riverbed introduces a new process to configure QoS on SteelHeads. This new process greatly simplifies the configuration and administration efforts, compared to earlier RiOS versions.

A QoS configuration generally involves many SteelHeads. To avoid repeating the same configuration steps on each SteelHead, Riverbed strongly recommends that you use SCC v9.0 or later to configure QoS on your SteelHeads. With SCC, you configure QoS once and send the configuration to multiple SteelHeads. You do not need to connect to each SteelHead in turn and repeat the same configuration across the network.

For more information about using the SCC to configure SteelHeads, see the SteelCentral Controller for SteelHead Deployment Guide v9.0 or later.

QoS configuration consists of three building blocks. Some of these blocks are shared with the RiOS path selection and secure transport features. The building blocks are as follows:

Topology - The topology combines a set of parameters that enable a SteelHead to build its view of the network. The topology consists of network and site definitions, including information about how a site connects to a network. With the concept of a topology, a SteelHead can automatically build paths to remote sites and calculate the bandwidth that is available on these paths. For more information about topology, see Topology.

Application - Applications are defined separately from QoS rules. Application configuration and detection enable traffic classification. For more information, see Application Definitions.

Profile - A profile combines QoS classes and QoS rules. QoS rules classify an application into a QoS class. A rule can be based on a single application or on an application group. You can assign a profile to one or multiple sites.

The information contained in the topology, application, and profile building blocks enables the SteelHead to apply QoS efficiently.

For more information on secure transport, see Overview of Secure Transport and the SteelCentral Controller for SteelHead Deployment Guide.

Application configuration and detection enable easy traffic classification. In the topology, the configuration of networks, sites, and uplinks enables RiOS to do two things: build the QoS class tree at the site level, and calculate the available bandwidth to each configured site. This calculation also accounts for possible oversubscription of the local links. As a result, you can add or delete sites without manually recalculating the bandwidth to each site. The profiles apply site-specific QoS classes and rules to the sites to allocate bandwidth and priority to applications.

QoS Rules

In RiOS v9.0 and later, QoS rules assign traffic to a particular QoS class. Prior to RiOS v9.0, QoS rules defined an application; applications are now defined separately. For more information, see Application Definitions.

You must define an application before you use it in a QoS rule.

A QoS rule is part of a profile and assigns an application or application group to a QoS class. A QoS rule based on an application group counts as a single rule. Grouping applications intelligently can significantly reduce the number of rules per site.

You can assign a QoS profile to multiple sites. Including QoS rules in the profile therefore avoids configuring the same rules repeatedly.

The order of the rules in the QoS rules table is important. Rules are evaluated from top to bottom. As soon as traffic matches a rule, the remaining rules are skipped. Place the more granular QoS rules at the top of the QoS rules list.
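The following Python sketch models first-match evaluation. It is not RiOS code: the `QosRule` type, the `classify` function, and the application and class names are hypothetical. The sketch also assumes that traffic matching no rule falls through to a default rule pointing at a default class.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QosRule:
    """One row of a hypothetical QoS rules table."""
    apps: frozenset   # application names matched (a group lists several)
    qos_class: str    # leaf class the matched traffic is assigned to


def classify(app: str, rules: list, default_class: str) -> str:
    """Return the class of the first rule, top to bottom, that matches app."""
    for rule in rules:
        if app in rule.apps:
            return rule.qos_class  # first match wins; later rules are skipped
    return default_class  # nothing matched: fall through to the default rule


# The granular SSH rule sits above the broader application-group rule.
rules = [
    QosRule(frozenset({"SSH"}), "Interactive"),
    QosRule(frozenset({"SSH", "HTTP", "FTP"}), "Normal"),
]

print(classify("SSH", rules, "Default"))  # Interactive
print(classify("FTP", rules, "Default"))  # Normal
print(classify("SMB", rules, "Default"))  # Default
```

Suppose the two rules were swapped. SSH traffic would then match the group rule first and land in the Normal class. For this reason, the more granular rules belong at the top.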

For more information about QoS profiles, see QoS Profiles.

Riverbed QoS supports shaping and marking of multicast and broadcast traffic. The AFE does not support multicast and broadcast traffic. To classify this type of traffic, you must therefore configure a custom application based on the IP header.

To configure a QoS rule

Choose Networking > Network Services: Quality of Service.

Click Edit for the QoS profile for which you want to configure a rule.

Select Add a Rule.

Type the first three or four letters of the application or application group you want to create a rule for. Then select it from the drop-down list of available applications and application groups (Figure 6‑1).

Figure 6‑1. New QoS Rule

Optionally, mark traffic for this application with a DSCP value or preserve the existing DSCP value. Click Save.

The newly created QoS rule displays in the QoS rules table of the QoS profile.

Figure 6‑2. New QoS Rule Priority and Outbound DSCP Setting

QoS Classes

This section describes QoS classes and includes the following topics:

Class Hierarchy

Per-Class Parameters

QoS Class Latency Priorities

QoS Queue Types

QoS Queue Depth

MX-TCP

A QoS class represents an aggregation of traffic that the QoS scheduler treats the same way. The QoS class specifies constraints and parameters, such as the minimum bandwidth guarantee and latency priority, to ensure optimal application performance. Additionally, the queue type specifies whether packets belonging to the same TCP/UDP session are treated as a flow or as individual packets.

In RiOS v9.0 and later, QoS classes are part of a QoS profile.

For more information about QoS profiles, see QoS Profiles.

To configure a QoS class

Choose Networking > Network Services: Quality of Service.

Create a new profile or edit the QoS profile for which you want to configure QoS classes.

In the QoS classes section, click Edit.

RiOS v9.0 does not create a default class in the profiles. Make sure you create a default class and point the default rule to it.

In RiOS versions prior to version v9.0, you were required to choose between different QoS modes. RiOS v9.0 automatically sets up the QoS class hierarchy based on the information contained in the topology and profile configuration.

Class Hierarchy

A class hierarchy lets you create QoS classes as children of QoS classes other than the root class. You can then set overall parameters for a certain traffic type and specify separate parameters for subtypes of that traffic.

In a class hierarchy, the following relationships exist between QoS classes:

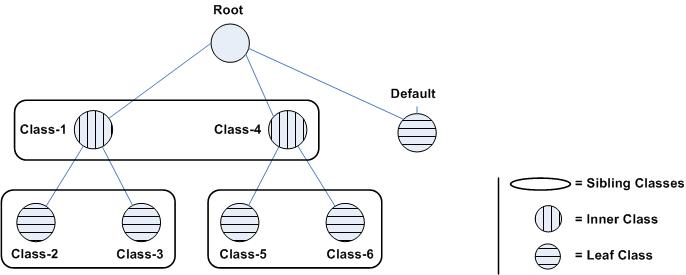

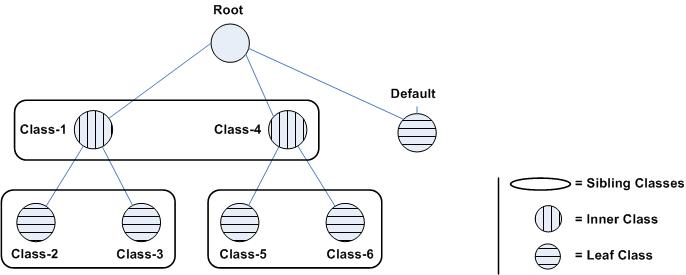

Sibling classes - Classes that share the same parent class.

Leaf classes - Classes at the bottom of the class hierarchy.

Inner classes - Classes that are neither the root class nor leaf classes.

QoS rules can only specify leaf classes as targets for traffic.

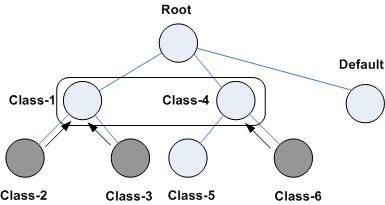

Figure 6‑3 shows a class hierarchy's structure and the relationships between the QoS classes.

Figure 6‑3. Hierarchical Mode Class Structure

QoS controls the traffic of hierarchical QoS classes in the following manner:

QoS rules assign active traffic to leaf classes. The QoS scheduler:

applies active leaf class parameters to the traffic.

applies parameters to inner classes that have active leaf class children.

continues this process up the class hierarchy.

constrains the total output bandwidth to the WAN rate specified on the root class.

The following examples show how the class hierarchy controls traffic.

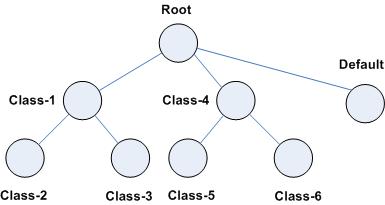

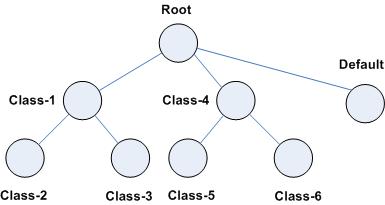

Figure 6‑4 shows six QoS classes. The root and default QoS classes are built-in and are always present. This example shows the QoS class hierarchy structure.

Figure 6‑4. Example of QoS Class Hierarchy

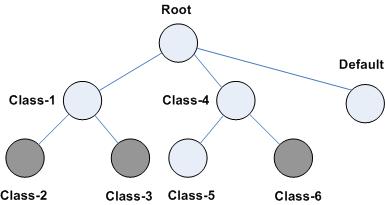

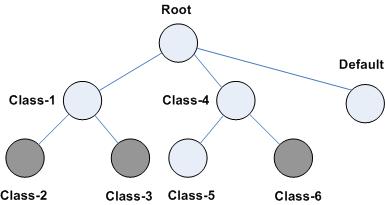

Figure 6‑5 shows a scenario in which active traffic exceeds the overall WAN bandwidth rate. The QoS rules place active traffic into three QoS classes: Classes 2, 3, and 6.

Figure 6‑5. QoS Classes 2, 3, and 6 Have Active Traffic

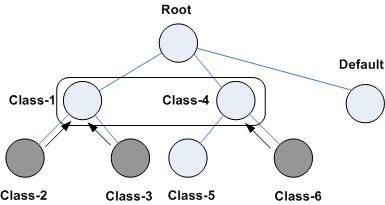

After the QoS rules place active traffic into QoS classes, the QoS scheduler performs the following steps in order:

applies the constraints for the lower leaf classes.

applies bandwidth constraints to all leaf classes. The QoS scheduler first awards the minimum guarantee percentages among siblings. It then awards excess bandwidth, and finally applies the upper limits to the leaf class traffic.

applies latency priority to the leaf classes. For example, if class 2 is configured with a higher latency priority than class 3, the QoS scheduler gives traffic in class 2 the chance to be transmitted before class 3. Bandwidth guarantees still apply for the classes.

applies the constraints of the parent classes. The QoS scheduler treats the traffic of the children as one traffic class. The QoS scheduler uses class 1 and class 4 parameters to determine how to treat the traffic.
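The bandwidth steps above can be illustrated with a simplified fluid model in Python. This is a sketch under stated assumptions, not the RiOS scheduler:

- it ignores latency priority and packet timing;
- it takes the link-share weight to equal the minimum guarantee;
- it expresses the upper limit as a percentage of the parent's allocation.

Aggregate demand is computed bottom-up, because a parent treats its children's traffic as one class. Bandwidth is then split top-down. The class names and rates are hypothetical.

```python
from dataclasses import dataclass, field

EPS = 1e-6  # tolerance for floating-point comparisons


@dataclass
class QosClass:
    name: str
    min_pct: float            # guaranteed minimum, % of the parent's allocation
    max_pct: float = 100.0    # upper limit, % of the parent's allocation (simplified)
    demand_kbps: float = 0.0  # offered load; only meaningful on leaf classes
    children: list = field(default_factory=list)

    def demand(self) -> float:
        """A leaf's own load, or the total load of an inner class's subtree."""
        if not self.children:
            return self.demand_kbps
        return sum(child.demand() for child in self.children)


def allocate(cls: QosClass, capacity: float, out: dict) -> dict:
    """Split capacity among active children, then repeat one level down."""
    out[cls.name] = capacity
    active = [c for c in cls.children if c.demand() > 0]
    # Upper limits are enforced as per-child ceilings (also capped by demand).
    ceiling = {c.name: min(c.max_pct / 100 * capacity, c.demand()) for c in active}
    # Step 1: award the minimum guarantees among siblings.
    share = {c.name: min(c.min_pct / 100 * capacity, ceiling[c.name]) for c in active}
    # Step 2: award excess bandwidth in proportion to the link-share weight.
    remaining = capacity - sum(share.values())
    hungry = [c for c in active if ceiling[c.name] - share[c.name] > EPS]
    while remaining > EPS and hungry:
        weights = {c.name: c.min_pct for c in hungry}
        total = sum(weights.values())
        if total == 0:  # no weights configured: split the excess evenly
            weights = {c.name: 1.0 for c in hungry}
            total = float(len(hungry))
        given = 0.0
        for c in hungry:
            extra = min(remaining * weights[c.name] / total,
                        ceiling[c.name] - share[c.name])
            share[c.name] += extra
            given += extra
        remaining -= given
        hungry = [c for c in hungry if ceiling[c.name] - share[c.name] > EPS]
    # Each child's share becomes the capacity its own children divide.
    for c in active:
        allocate(c, share[c.name], out)
    return out


a = QosClass("A", min_pct=40, children=[
    QosClass("A1", min_pct=50, demand_kbps=600.0),
    QosClass("A2", min_pct=50, demand_kbps=100.0),
])
b = QosClass("B", min_pct=60, children=[QosClass("B1", min_pct=100, demand_kbps=800.0)])
root = QosClass("root", min_pct=100, children=[a, b])
print(allocate(root, 1000.0, {}))
# {'root': 1000.0, 'A': 400.0, 'A1': 300.0, 'A2': 100.0, 'B': 600.0, 'B1': 600.0}
```

In this example, A2 uses only 100 kbps of its 200 kbps guarantee inside A. The unused 100 kbps goes to its sibling A1 as excess bandwidth. Meanwhile, the parent classes A and B still cap their subtrees at 400 and 600 kbps.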

Figure 6‑6 shows how the QoS scheduler applies the constraints of the parent classes to the child classes.

Figure 6‑6. How the QoS Scheduler Applies Constraints of Parent Class to Child Classes

In RiOS v9.0 and later, you configure sites in the topology and traffic classes in profiles. With this information, RiOS v9.0 and later automatically sets up the QoS class hierarchy.

The bandwidth of the local site's uplink is assigned to the root class. The sites form the next layer of the class hierarchy, and the bandwidth of each site's uplinks is assigned to that site's class. The profile assigned to a site provides the next layer of the hierarchy below the site class.
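The layering described above can be sketched in Python as a tree build. This sketch makes the following assumptions:

- The `Node` type, the `build_hierarchy` function, and the site, profile, and class names are all hypothetical.
- Each profile class carries only a minimum guarantee percentage.
- Each site class is capped at the local uplink rate. This cap is a stand-in for the oversubscription handling, which this section does not describe in detail.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    kbps: float
    children: list = field(default_factory=list)


def build_hierarchy(local_uplink_kbps, sites, profiles):
    """sites maps site name -> (uplink kbps, profile name);
    profiles maps profile name -> {class name: minimum guarantee %}."""
    root = Node("root", local_uplink_kbps)  # root class: local uplink bandwidth
    for site_name, (uplink_kbps, profile_name) in sites.items():
        # Assumption: a site class never exceeds what the local uplink carries.
        site = Node(site_name, min(uplink_kbps, local_uplink_kbps))
        for class_name, min_pct in profiles[profile_name].items():
            # Profile classes form the layer below the site; kbps is the guarantee.
            site.children.append(
                Node(f"{site_name}/{class_name}", site.kbps * min_pct / 100))
        root.children.append(site)
    return root


def show(node, depth=0):
    """Print the tree, indenting each layer."""
    print("  " * depth + f"{node.name}: {node.kbps:g} kbps")
    for child in node.children:
        show(child, depth + 1)


profiles = {
    "Branch": {"Realtime": 30, "Business": 50, "Default": 20},
    "DC": {"Replication": 80, "Default": 20},
}
sites = {"Branch-A": (2000, "Branch"), "DataCenter": (100000, "DC")}
tree = build_hierarchy(20000, sites, profiles)
show(tree)
```

Because the profile is looked up by name, assigning one profile to several sites produces the same class layer under each of those site classes.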

Figure 6‑7 shows how the configuration in the topology and the profile is translated into a class hierarchy.

Figure 6‑7. Example of Topology and Profile Translating into Class Hierarchy

For more information about Topology, see Topology. For more information about profiles, see QoS Profiles.

Per-Class Parameters

The QoS scheduler uses the per-class configured parameters to determine how to treat traffic belonging to the QoS class. The per-class parameters are as follows:

Latency priority - There are six QoS class latency priorities. For details, see QoS Class Latency Priorities.

Queue types - For details, see QoS Queue Types.

Guaranteed minimum bandwidth - When there is bandwidth contention, specifies the minimum amount of bandwidth as a percentage of the parent class bandwidth. The QoS class might receive more bandwidth if unused bandwidth remains. The link-share weight determines how much of the unused bandwidth is allocated to a class relative to other classes. The total minimum guaranteed bandwidth of all QoS classes must be less than or equal to 100% of the parent class. You can set the value as low as 0%.

Link share weight - Allocates excess bandwidth based on the minimum bandwidth guarantee for each class. This parameter is not exposed in the SteelHead Management Console; it is implicitly configured from the minimum bandwidth guarantee of the class.

Maximum bandwidth - Specifies the maximum bandwidth a QoS class can receive, as a percentage of the parent class guaranteed bandwidth. The upper bandwidth limit is applied even if excess bandwidth is available. The upper bandwidth limit must be greater than or equal to the minimum bandwidth guarantee for the class. The smallest value you can assign is 0.01%.

Connection limit - Specifies the maximum number of optimized connections for the QoS class. When the limit is reached, all new connections are passed through unoptimized. In hierarchical mode, a parent class connection limit does not affect its children. Each child class is limited only by the connection limit specified for that class. For example, suppose B is a child of A, the connection limit for A is 5, and the connection limit for B is 10. Class B can still have up to 10 optimized connections. Connection limit is supported only in in-path configurations. It is not supported in out-of-path or virtual-in-path configurations.

RiOS does not support a connection limit assigned to any QoS class that is associated with a QoS rule with an AFE component. An AFE component consists of a Layer-7 protocol specification. RiOS cannot honor the class connection limit because the QoS scheduler might subsequently reclassify the traffic flow after applying a more precise match using AFE identification.

In RiOS v9.0 and later, this parameter is available through the CLI only. For information about how to configure the connection limit, see the Riverbed Command-Line Interface Reference Manual.
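The following Python sketch encodes the per-class constraints listed above. It is a pre-deployment check, not an interface that RiOS provides: `ClassParams`, `check_siblings`, and `admits_optimized` are hypothetical names. The checks cover the limits stated in the text:

- sibling minimum guarantees total at most 100%;
- each minimum guarantee is at least 0%;
- each upper limit is at least 0.01% and at least the class's own minimum guarantee.

The connection-limit function also mirrors the rule that a parent class's limit does not constrain its children.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClassParams:
    name: str
    min_pct: float                    # guaranteed minimum, % of parent bandwidth
    max_pct: float                    # upper limit, % of parent bandwidth
    conn_limit: Optional[int] = None  # optimized-connection limit (CLI only)


def check_siblings(siblings):
    """Return the constraint violations for one set of sibling classes."""
    errors = []
    if sum(c.min_pct for c in siblings) > 100:
        errors.append("minimum guarantees of siblings exceed 100% of the parent")
    for c in siblings:
        if c.min_pct < 0:
            errors.append(f"{c.name}: minimum guarantee below 0%")
        if c.max_pct < 0.01:
            errors.append(f"{c.name}: upper limit below 0.01%")
        if c.max_pct < c.min_pct:
            errors.append(f"{c.name}: upper limit below minimum guarantee")
    return errors


def admits_optimized(cls: ClassParams, open_optimized: int) -> bool:
    """Only the class's own limit counts; a parent's limit is not consulted."""
    return cls.conn_limit is None or open_optimized < cls.conn_limit


parent = ClassParams("A", 40, 100, conn_limit=5)
child = ClassParams("B", 50, 100, conn_limit=10)
print(check_siblings([child, ClassParams("C", 50, 40)]))
# ['C: upper limit below minimum guarantee']
print(admits_optimized(child, 7))   # True, even though A's limit is 5
print(admits_optimized(parent, 7))  # False
```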

QoS Class Latency Priorities

Latency priorities indicate how delay-sensitive a traffic class is. A latency priority does not control how bandwidth is used or shared among different QoS classes. You can assign a QoS class latency priority when you create a QoS class or modify it later.

Riverbed QoS has six QoS class latency priorities. The following table summarizes the QoS class latency priorities in descending order.

| Latency Priority | Example |
|---|---|
| Real time | VoIP, video conferencing |
| Interactive | Citrix, RDP, telnet, and ssh |
| Business critical | Thick client applications, ERPs, CRMs |
| Normal priority | Internet browsing, file sharing, email |
| Low priority | FTP, backup, replication, and other high-throughput data transfers; recreational applications such as audio file sharing |
| Best effort | lowest priority |

Typically, applications such as VoIP and video conferencing are given real-time latency priority. Especially delay-insensitive applications, such as backup and replication, are given low latency priority.

The latency priority describes only the delay sensitivity of a class. It does not describe how much bandwidth the class is allocated, nor how important its traffic is compared to other classes. Therefore, it is common to configure low latency priority for high-throughput, delay-insensitive applications such as FTP, backup, and replication.
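The following Python sketch separates the two concerns: latency priority decides which class goes first, and bandwidth is decided elsewhere. It assumes the caller passes in only classes that have packets queued and are still within their bandwidth allowance. The `LatencyPriority` enum values and the `next_to_send` function are hypothetical; only the order of the six priorities comes from the table above.

```python
from enum import IntEnum


class LatencyPriority(IntEnum):
    """The six latency priorities; a larger value means more delay-sensitive."""
    BEST_EFFORT = 1
    LOW = 2
    NORMAL = 3
    BUSINESS_CRITICAL = 4
    INTERACTIVE = 5
    REAL_TIME = 6


def next_to_send(eligible):
    """eligible: (class name, LatencyPriority) pairs for classes that have packets
    queued and remaining bandwidth allowance (computed elsewhere).
    Return the most delay-sensitive class, or None if nothing is eligible."""
    if not eligible:
        return None
    return max(eligible, key=lambda pair: pair[1])[0]


eligible = [
    ("Backup", LatencyPriority.LOW),
    ("VoIP", LatencyPriority.REAL_TIME),
    ("Web", LatencyPriority.NORMAL),
]
print(next_to_send(eligible))  # VoIP
```

A real-time class that has used up its allowance drops out of the eligible list. Lower-priority classes such as Backup therefore still receive their guaranteed bandwidth.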

QoS Queue Types

Each QoS class has a configured queue type parameter. The following queue types are available:

Stochastic Fairness Queueing (SFQ) - Determines SteelHead behavior when the number of packets in a QoS class outbound queue exceeds the configured queue length. When SFQ is used, packets are dropped from within the queue, among the present traffic flows. SFQ ensures that each flow within the QoS class receives a fair share of output bandwidth relative to the other flows. This prevents bursty flows from starving other flows within the QoS class. SFQ is the default queue type.

First-in, First-Out (FIFO) - Determines SteelHead behavior when the number of packets in a QoS class outbound queue exceeds the configured queue length. When FIFO is used, packets received after this limit is reached are dropped, and the first packets received are the first packets transmitted.

MX-TCP - Not a queueing algorithm but rather a TCP transport type. For details, see MX-TCP.

QoS Queue Depth

RiOS assigns each configured QoS class a queue with a size of 100 packets. Because the queue size is counted in packets, the queue size in bytes depends on the packet size. You can modify the queue size using the CLI.
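The dependence on packet size can be worked through in Python. The 100-packet figure comes from the text. The sketch assumes that all queued packets have the same size and that the class drains at a fixed rate; the 10 Mbps rate and the helper names are hypothetical.

```python
DEFAULT_QUEUE_PACKETS = 100  # per-class queue size stated in the text


def queue_bytes(packet_size_bytes: int, packets: int = DEFAULT_QUEUE_PACKETS) -> int:
    """Queue capacity in bytes when every queued packet has the same size."""
    return packets * packet_size_bytes


def drain_time_ms(size_bytes: int, rate_kbps: float) -> float:
    """Time to transmit a full queue; 1 kbps is exactly 1 bit per millisecond."""
    return size_bytes * 8 / rate_kbps


for size in (64, 1500):
    q = queue_bytes(size)
    print(f"{size}-byte packets: {q} bytes, {drain_time_ms(q, 10_000):.2f} ms at 10 Mbps")
```

At 10 Mbps, a full queue of 64-byte packets (6,400 bytes) drains in about 5 ms. The same 100-packet queue filled with 1500-byte packets (150,000 bytes) holds about 120 ms of traffic.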

For more information about how to configure the queue size of a QoS class, see the Riverbed Command-Line Interface Reference Manual.
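The FIFO and SFQ behavior described under QoS Queue Types applies when a queue reaches its configured depth. The following Python sketch shows both. Several simplifications are assumptions rather than documented RiOS internals:

- The SFQ class keys sub-queues by exact flow ID instead of hashing flows into a fixed set of buckets.
- On overflow, it drops the tail packet of the longest flow.
- The queue limit is 4 packets for brevity.

`FifoQueue` and `SfqQueue` are hypothetical names.

```python
from collections import deque


class FifoQueue:
    """FIFO: once the queue is full, newly arriving packets are dropped."""

    def __init__(self, limit: int):
        self.limit = limit
        self.packets = deque()

    def enqueue(self, flow: str, pkt) -> bool:
        if len(self.packets) >= self.limit:
            return False  # tail drop of the arriving packet
        self.packets.append((flow, pkt))
        return True

    def dequeue(self):
        return self.packets.popleft() if self.packets else None


class SfqQueue:
    """Simplified SFQ: one sub-queue per flow, served round-robin; when the
    class queue is full, the tail packet of the longest flow is dropped."""

    def __init__(self, limit: int):
        self.limit = limit
        self.flows = {}       # flow id -> deque of that flow's packets
        self.order = deque()  # round-robin order of flows that have packets
        self.count = 0

    def enqueue(self, flow: str, pkt) -> None:
        queue = self.flows.setdefault(flow, deque())
        if not queue:
            self.order.append(flow)  # flow becomes active
        queue.append(pkt)
        self.count += 1
        if self.count > self.limit:
            longest = max(self.order, key=lambda f: len(self.flows[f]))
            self.flows[longest].pop()  # drop from the heaviest flow
            self.count -= 1
            if not self.flows[longest]:
                self.order.remove(longest)

    def dequeue(self):
        if not self.order:
            return None
        flow = self.order.popleft()
        pkt = self.flows[flow].popleft()
        self.count -= 1
        if self.flows[flow]:
            self.order.append(flow)  # flow still has packets: back of the line
        return flow, pkt


fifo, sfq = FifoQueue(4), SfqQueue(4)
for i in range(4):  # a bursty bulk flow fills both queues
    fifo.enqueue("bulk", i)
    sfq.enqueue("bulk", i)
print(fifo.enqueue("voip", 0))  # False: the VoIP packet is dropped
sfq.enqueue("voip", 0)          # a bulk packet is dropped instead
print([sfq.dequeue()[0] for _ in range(4)])  # ['bulk', 'voip', 'bulk', 'bulk']
```

With FIFO, the bursty flow locks the small VoIP flow out until the queue drains. With SFQ, the VoIP packet is kept and sent second.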

MX-TCP

MX-TCP is a QoS class queue parameter, but with very different use cases than the other queue parameters. MX-TCP also has secondary effects that you must understand before you configure it.

When optimized traffic is mapped into a QoS class with the MX-TCP queueing parameter, the TCP congestion control mechanism for that traffic is altered on the SteelHead. The normal TCP behavior of reducing the outbound sending rate when detecting congestion or packet loss is disabled, and the outbound rate is made to match the minimum guaranteed bandwidth configured on the QoS class.

You can use MX-TCP to achieve high throughput rates even when the physical medium carrying the traffic has high loss rates. For example, a common use of MX-TCP is ensuring high throughput on satellite connections where no lower-layer loss recovery technique is in use.

Another use of MX-TCP is to achieve high throughput over high-bandwidth, high-latency links, especially when intermediate routers do not have properly tuned interface buffers. Improperly tuned router buffers cause TCP to perceive congestion in the network, resulting in unnecessarily dropped packets, even when the network can support high throughput rates.

MX-TCP is incompatible with the AFE. With AFE identification, a flow can be reclassified into a different queue when a more exact match of the traffic is found. However, a flow classified as MX-TCP cannot subsequently be moved to a different queue.

You must ensure the following when you enable MX-TCP:

The QoS rule for MX-TCP is at the top of the QoS rules list.

The rule does not use AFE identification.

MX-TCP is used for optimized traffic only.

As with any other QoS class, you can configure MX-TCP to scale from the specified minimum bandwidth up to a specific maximum. This allows MX-TCP to use available bandwidth during off-peak hours. During traffic congestion, MX-TCP scales back to the configured minimum bandwidth.
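The following Python sketch turns the three MX-TCP requirements into a check over an ordered rule list. It also models the min-to-max scaling in a simplified way. The sketch rests on these assumptions:

- The `Rule` fields, including the `optimized_only` flag, are hypothetical stand-ins for how a rule is configured.
- "At the top" is interpreted as "no non-MX-TCP rule appears above it".
- The rate function returns the minimum guarantee under congestion and the configured maximum otherwise. It ignores whatever other traffic is present.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    app: str
    qos_class: str
    uses_afe: bool        # rule relies on AFE (Layer-7) identification
    optimized_only: bool  # hypothetical flag: rule matches optimized traffic only


def mxtcp_problems(rules, mxtcp_classes):
    """Check the MX-TCP guidelines against an ordered (top-first) rule list."""
    problems = []
    seen_other = False
    for rule in rules:
        if rule.qos_class not in mxtcp_classes:
            seen_other = True
            continue
        if seen_other:
            problems.append(f"{rule.app}: MX-TCP rule is below a non-MX-TCP rule")
        if rule.uses_afe:
            problems.append(f"{rule.app}: MX-TCP rule uses AFE identification")
        if not rule.optimized_only:
            problems.append(f"{rule.app}: MX-TCP rule may match unoptimized traffic")
    return problems


def mxtcp_rate_kbps(parent_kbps, min_pct, max_pct, congested):
    """Simplified sending rate: the minimum guarantee under congestion,
    otherwise up to the configured maximum."""
    floor = parent_kbps * min_pct / 100
    ceiling = parent_kbps * max_pct / 100
    return floor if congested else max(floor, ceiling)


rules = [
    Rule("Web", "Normal", uses_afe=True, optimized_only=False),
    Rule("Replication", "Bulk-MX", uses_afe=False, optimized_only=True),
]
print(mxtcp_problems(rules, {"Bulk-MX"}))
# ['Replication: MX-TCP rule is below a non-MX-TCP rule']
print(mxtcp_rate_kbps(10_000, 20, 50, congested=True))   # 2000.0
print(mxtcp_rate_kbps(10_000, 20, 50, congested=False))  # 5000.0
```

Moving the Replication rule above the Web rule clears the reported problem.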

For information about configuring MX-TCP before RiOS v8.5, see earlier versions of the SteelHead Deployment Guide on the Riverbed Support site at https://support.riverbed.com.

You can configure a specific rule for an MX-TCP class for packet-mode UDP traffic. An MX-TCP rule for packet-mode UDP traffic is useful for UDP bulk transfer and data-replication applications (for example, Aspera and Veritas Volume Replicator).

Packet-mode traffic matching any non-MX-TCP class is classified into the default class because QoS does not support packet-mode optimization.
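The packet-mode rule amounts to a small decision, sketched here in Python. The class names are hypothetical, and the sketch assumes the default class is named "Default".

```python
def effective_class(matched_class: str, packet_mode: bool, mxtcp_classes: set,
                    default_class: str = "Default") -> str:
    """Packet-mode traffic keeps its matched class only if that class uses MX-TCP."""
    if packet_mode and matched_class not in mxtcp_classes:
        return default_class
    return matched_class


mx = {"UDP-Replication-MX"}
print(effective_class("UDP-Replication-MX", True, mx))  # UDP-Replication-MX
print(effective_class("Bulk", True, mx))                # Default
print(effective_class("Bulk", False, mx))               # Bulk
```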

For more information about MX-TCP as a transport streamlining mode, see Transport Streamlining. For an example of how to configure QoS and MX-TCP, see Configuring QoS and MX-TCP.

QoS Profiles

The QoS profile combines QoS classes and rules into a building block for QoS.

You can create a hierarchical QoS class structure within a profile to segregate traffic based on flow source or destination. You can then apply different shaping rules and priorities to each leaf class.

The SteelHead Management Console supports configuring up to three levels of hierarchy. If you need more levels, configure them using the CLI.
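The following Python sketch checks whether a planned profile stays within the three levels the Management Console supports. It assumes the root class is not counted as a level; the text does not say whether it is. The nested-dictionary layout, the function names, and the class names are hypothetical.

```python
GUI_MAX_LEVELS = 3  # from the text; assumes the root class is not counted


def levels(node: dict) -> int:
    """Number of class levels below node; node is {'name': ..., 'children': [...]}."""
    children = node.get("children", [])
    if not children:
        return 0
    return 1 + max(levels(child) for child in children)


def needs_cli(profile_root: dict) -> bool:
    """True if the hierarchy is deeper than the Management Console supports."""
    return levels(profile_root) > GUI_MAX_LEVELS


# Hypothetical profile: root -> Business -> ERP -> ERP-Reports -> ERP-Batch
deep = {"name": "root", "children": [
    {"name": "Business", "children": [
        {"name": "ERP", "children": [
            {"name": "ERP-Reports", "children": [
                {"name": "ERP-Batch"}]}]}]}]}
print(levels(deep), needs_cli(deep))  # 4 True
```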

A profile can be used for inbound and outbound QoS.

To configure a QoS profile

Choose Networking > Network Services: Quality of Service.

Figure 6‑8. Add a New QoS Profile

You can either create a new QoS profile based on a blank template or create a new QoS profile based on an existing one. Creating a new QoS profile based on an existing one simplifies the configuration because you can adjust parameters of the profile instead of starting with nothing.

Specify a name for the QoS profile and click Save.

The newly created QoS profile is displayed in the list.

Click Edit to configure the parameters (Figure 6‑9).

Figure 6‑9. QoS Profile Details

A blank QoS profile comes with a root class and the default rule. To configure QoS classes, see QoS Classes. To configure QoS rules, see QoS Rules.

After you create the QoS profile, you can use the configured set of QoS classes and rules for multiple sites.

To assign a QoS profile to a site

Choose Networking > Topology: Sites & Networks.

Click Edit Site in the Sites section.

In the QoS Profiles section of the configuration page, open the Outbound QoS Profile drop-down menu and select the profile for this site.

You can assign the same QoS profile for inbound and outbound QoS. However, inbound QoS and outbound QoS usually serve different functions, so you will likely need a separate QoS profile for inbound QoS. For more information about inbound QoS, see Inbound QoS.
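The following Python sketch shows the relationship between sites and profiles. Each site references an outbound profile and, optionally, an inbound profile by name. A change to one profile therefore reaches every site that references it. The `Site` type, the `sites_using` helper, and all site and profile names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Site:
    name: str
    outbound_profile: Optional[str] = None
    inbound_profile: Optional[str] = None


def sites_using(profile: str, sites: list) -> list:
    """Sites affected when the given profile is edited."""
    return [s.name for s in sites
            if profile in (s.outbound_profile, s.inbound_profile)]


sites = [
    Site("Branch-A", outbound_profile="Branch-Out", inbound_profile="Branch-In"),
    Site("Branch-B", outbound_profile="Branch-Out", inbound_profile="Branch-In"),
    Site("DataCenter", outbound_profile="DC-Out"),
]
print(sites_using("Branch-Out", sites))  # ['Branch-A', 'Branch-B']
```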

For more information about configuration profiles, see Creating QoS Profiles and MX-TCP.