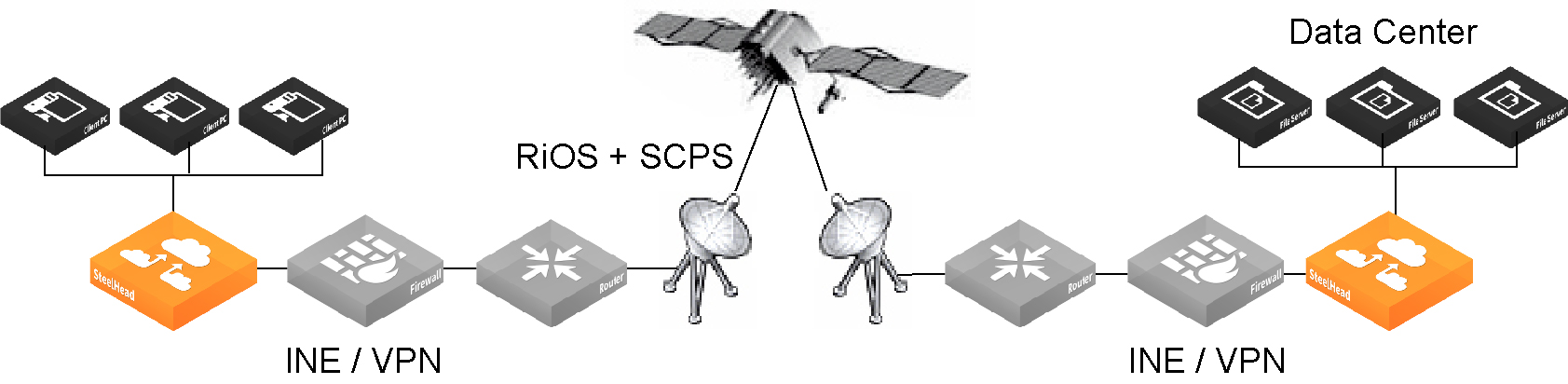

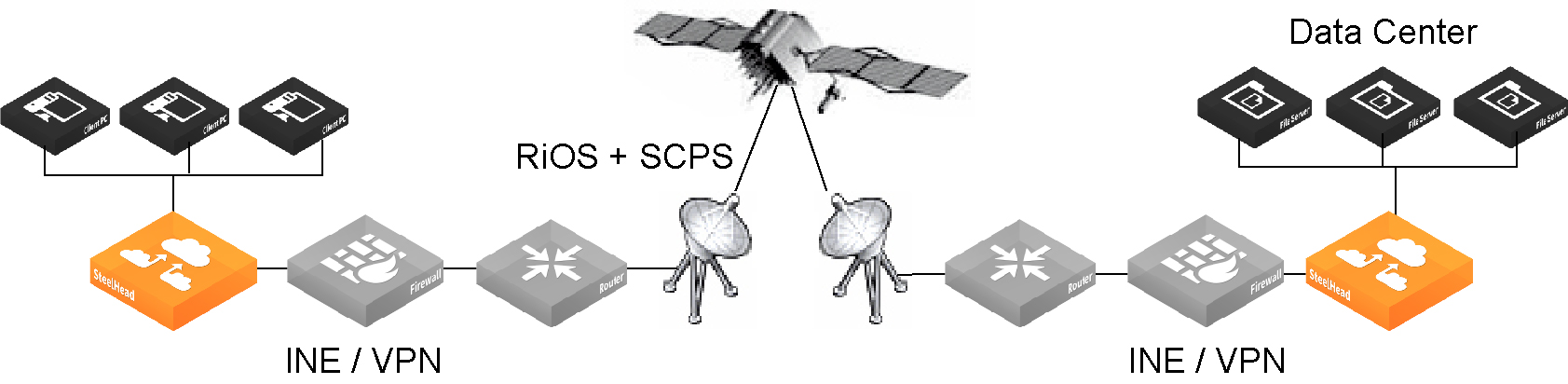

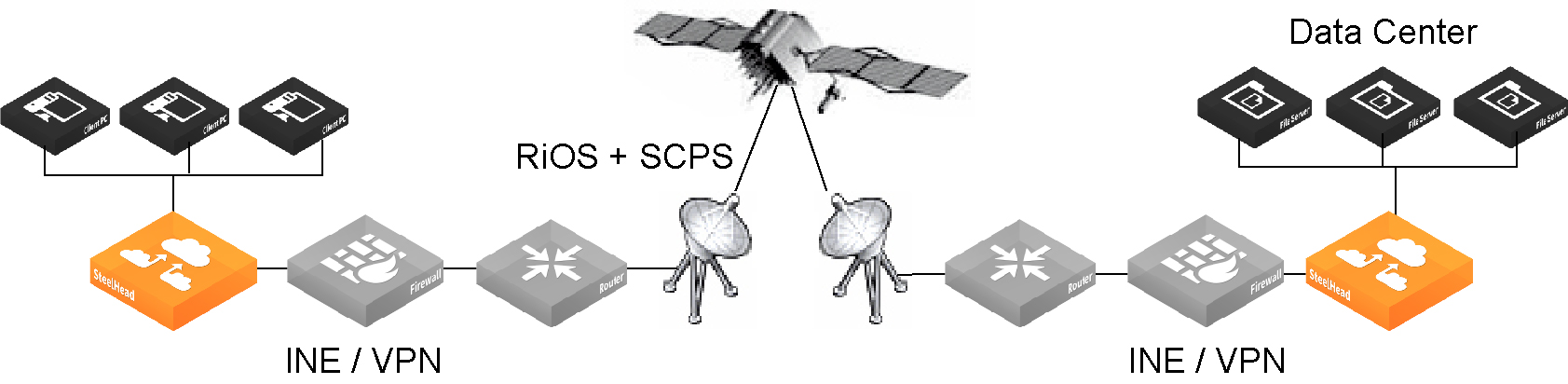

RiOS and SCPS Connection

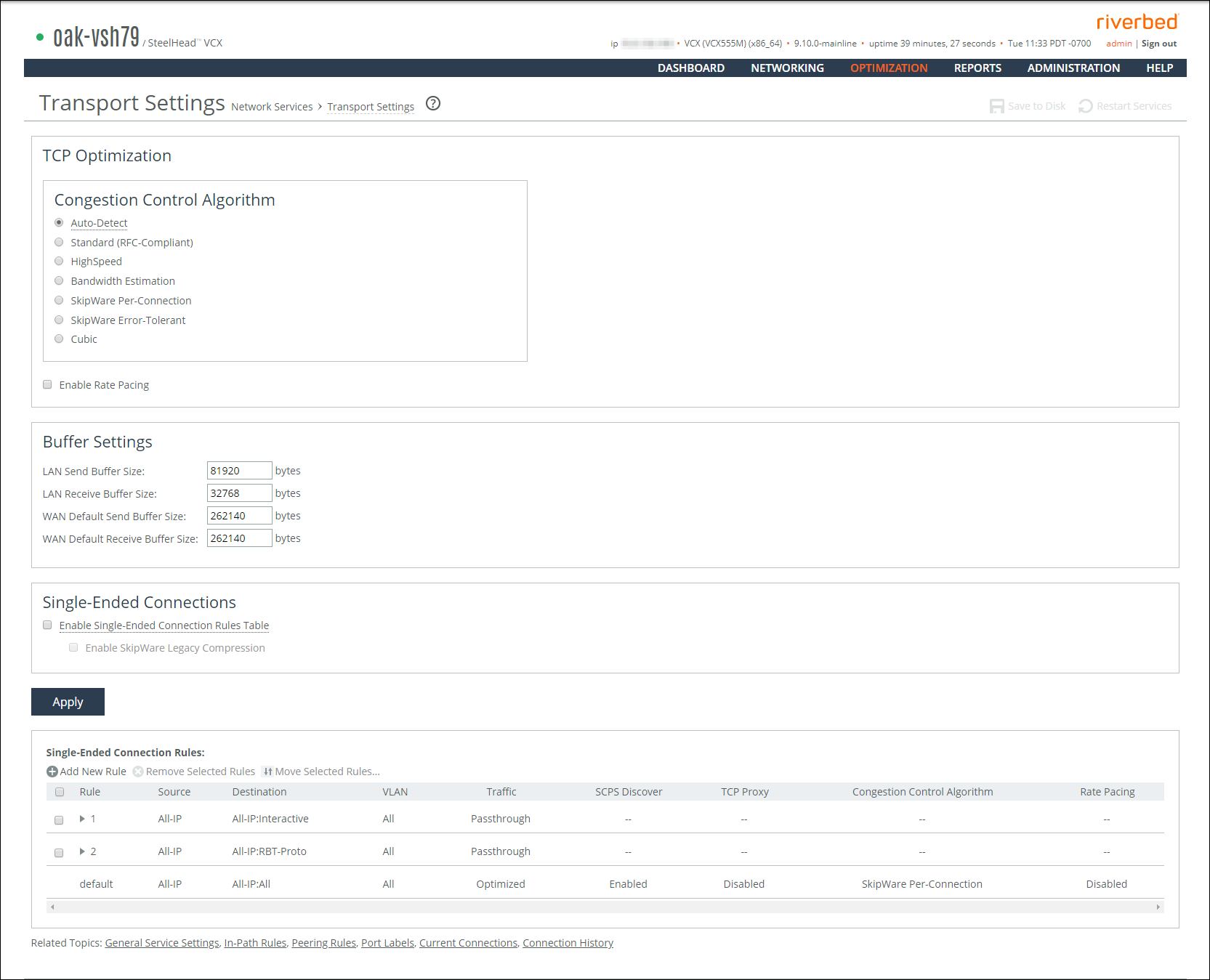

Control | Description |

Auto-Detect | Automatically detects the optimal TCP configuration. It uses the same mode as the peer SteelHead for inner connections, SkipWare when SkipWare is negotiated, and standard TCP in all other cases. This is the default setting. If you have a mixed environment in which several different types of networks terminate at a hub or server-side SteelHead, enable this setting on the hub SteelHead so that it can reflect the various transport optimization mechanisms of your remote-site SteelHeads. Otherwise, you can hard-code the hub SteelHead to the desired setting. RiOS advertises automatic detection of TCP optimization to a peer SteelHead through the out-of-band (OOB) connection between the appliances. For single-ended interception connections, Auto-Detect uses SkipWare per-connection TCP optimization when possible and standard TCP otherwise. |

Standard (RFC-Compliant) | Optimizes non-SCPS TCP connections by applying data and transport streamlining for TCP traffic over the WAN. This control forces peers to use standard TCP as well. For details on data and transport streamlining, see the SteelHead Deployment Guide. This option clears any advanced bandwidth congestion control that was previously set. |

HighSpeed | Enables high-speed TCP optimization for more complete use of long fat pipes (high-bandwidth, high-delay networks). Don't enable it for satellite networks. We recommend that you enable high-speed TCP optimization only after you have carefully evaluated whether it will benefit your network environment. For details about the trade-offs of enabling high-speed TCP, see the tcp highspeed enable command in the Riverbed Command-Line Interface Reference Manual. |

Bandwidth Estimation | Uses an intelligent bandwidth estimation algorithm along with a modified slow-start algorithm to optimize performance in long lossy networks. These networks typically include satellite and other wireless environments, such as cellular networks, long-haul microwave links, or WiMAX networks. Bandwidth estimation is a sender-side modification of TCP and is compatible with the other TCP stacks in the RiOS system. The intelligent bandwidth estimation is based on analysis of both ACKs and latency measurements. The modified slow-start mechanism lets a flow ramp up faster in high-latency environments than traditional TCP does. The intelligent bandwidth estimation algorithm learns effective rates for use during modified slow start, and it distinguishes bit-error-rate (BER) loss from congestion-derived loss and manages each accordingly. Bandwidth estimation has good fairness and friendliness qualities toward other traffic along the path. The default setting is off. |

SkipWare Per-Connection | Applies TCP congestion control to each SCPS-capable connection. The congestion control uses: • a pipe algorithm that gates when a packet should be sent after receipt of an ACK. • the NewReno algorithm, which includes the sender's congestion window, slow start, and congestion avoidance. • time stamps, window scaling, appropriate byte counting, and loss detection. This transport setting uses a modified slow-start algorithm and a modified congestion-avoidance approach. This method enables SCPS per-connection to ramp up flows faster in high-latency environments and handle lossy scenarios, while remaining reasonably fair and friendly to other traffic. SCPS per-connection efficiently fills satellite links of all sizes and is a high-performance option for satellite networks. We recommend enabling per-connection if the error rate in the link is less than approximately 1 percent. The Management Console dims this setting until you install a SkipWare license. |

SkipWare Error-Tolerant | Enables SkipWare optimization with the error-rate detection and recovery mechanism on the SteelHead. This setting allows the per-connection congestion control to tolerate some loss due to corrupted packets (bit errors) without reducing throughput, using a modified slow-start algorithm and a modified congestion-avoidance approach. It requires significantly more retransmitted packets to trigger its congestion-avoidance algorithm than the SkipWare per-connection setting does. Error-tolerant TCP optimization assumes that the environment has a high BER and that most retransmissions are due to poor signal quality rather than congestion. This method maximizes performance in high-loss environments without incurring the additional per-packet overhead of a forward error correction (FEC) algorithm at the transport layer. SCPS error tolerance is a high-performance option for lossy satellite networks. Use caution when enabling error-tolerant TCP optimization, particularly in channels with coexisting TCP traffic, because it can be aggressive and can increase congestion for competing TCP flows. We recommend enabling error tolerance if the error rate in the link is more than approximately 1 percent. The Management Console dims this setting until you install a SkipWare license. |

Cubic | Enables the Cubic congestion control algorithm. Cubic is the local default congestion control algorithm when two peer SteelHeads are both configured to auto-detect. Cubic offers better performance and faster recovery after congestion events than NewReno, the previous local default. |

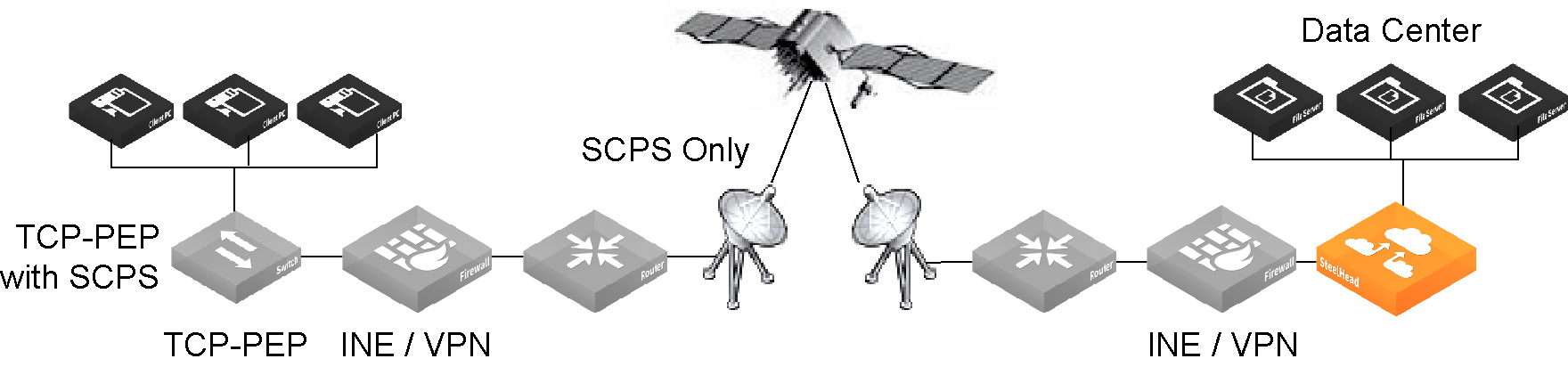

Enable Rate Pacing | Imposes a global data-transmit limit on the link rate for all SCPS connections between peer SteelHeads, or on the link rate for a SteelHead paired with a third-party device running TCP-PEP (Performance Enhancing Proxy). Rate pacing combines MX-TCP and a congestion-control method of your choice for connections between peer SteelHeads and for SEI connections (on a per-rule basis). The congestion-control method runs as an overlay on top of MX-TCP, probes for the actual link rate, and communicates the available bandwidth to MX-TCP. Enable rate pacing to prevent these problems: • Congestion loss while exiting the slow-start phase. Slow start is the part of TCP congestion control in which the sender begins with a small window and increases it as it gains confidence about the network throughput. • Congestion collapse. • Packet bursts. Rate pacing is disabled by default. With no congestion, the slow-start phase ramps up to the MX-TCP rate and settles there. When RiOS detects congestion (because of other sources of traffic, a bottleneck other than the satellite modem, or a variable modem rate), the congestion-control method kicks in to avoid congestion loss and exit the slow-start phase faster. Enable rate pacing on the client-side SteelHead along with a congestion-control method. The client-side SteelHead communicates to the server-side SteelHead that rate pacing is in effect. You must also: • Enable Auto-Detect TCP Optimization on the server-side SteelHead to negotiate the configuration with the client-side SteelHead. • Configure an MX-TCP QoS rule to set the appropriate rate cap. If an MX-TCP QoS rule is not in place, the system doesn't apply rate pacing and the congestion-control method takes effect. You can't delete the MX-TCP QoS rule while rate pacing is enabled. The Management Console dims this feature until you install a SkipWare license. Rate pacing doesn't support IPv6. You can also enable rate pacing for SEI connections by defining an SEI rule for each connection. |

Enable Single-Ended Connection Rules Table | Enables transport optimization for single-ended interception (SEI) connections that have no SteelHead peer. These connections appear in the rules table. In RiOS 8.5 or later, you can impose rate pacing on SEI connections with no peer SteelHead: by defining an SEI connection rule, you can enforce rate pacing even when the SteelHead is not peered with a SCPS device and SCPS is not negotiated. To enforce rate pacing for an SEI connection, create an SEI connection rule for use as a transport-optimization proxy, select a congestion-control method for the rule, and then configure a QoS rule (with the same client and server subnets) to use MX-TCP. RiOS 8.5 and later accelerate the WAN-originated or LAN-originated proxied connection using MX-TCP. By default, the SEI connection rules table is disabled. When you enable it, two default rules appear in the table. The first default rule matches all traffic whose destination port matches the interactive port label and bypasses SCPS optimization for the connection. The second default rule matches all traffic whose destination port matches the RBT-Proto port label and also bypasses SCPS optimization. This option doesn't affect the optimization of SCPS connections between SteelHeads. While the table is disabled, you can still add, move, or remove rules, but the changes don't take effect until you reenable the table. The Management Console dims the SEI rules table until you install a SkipWare license. |

Enable SkipWare Legacy Compression | Enables negotiation of SCPS-TP TCP header and data compression with a remote SCPS-TP device. This feature enables interoperation with RSP SkipWare packages and TurboIP devices that have also been configured to negotiate TCP header and data compression. Legacy compression is disabled by default. After enabling or disabling legacy compression, you must restart the optimization service. The Management Console dims legacy compression until you install a SkipWare license and enable the SEI rules table. Legacy compression also works with non-SCPS TCP algorithms. These limits apply to legacy compression: • This feature is not compatible with IPv6. • Packets with a compressed TCP header use IP protocol 105 in the encapsulating IP header; you might need to change intervening firewalls to permit protocol 105 packets to pass. • This feature supports a maximum of 255 connections between any pair of end-host IP addresses. The connection limit for legacy SkipWare connections is the same as the appliance connection limit. • QoS limits for the SteelHead apply to the legacy SkipWare connections. To view SCPS connections, see Viewing Connection History reports. |
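To illustrate how the Auto-Detect row and the SkipWare error-rate guidance fit together, the following Python sketch encodes them as two small decision functions. It is a hypothetical model, not RiOS code: the `Mode` enum and both function names are invented for the example, "SkipWare when negotiated" is assumed to mean SkipWare per-connection, and the approximate 1 percent threshold is treated as a hard cutoff (a rate of exactly 1 percent is assigned to error-tolerant arbitrarily).

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    """Transport settings from the table above (identifiers are hypothetical)."""
    AUTO_DETECT = "auto-detect"
    STANDARD = "standard"
    HIGHSPEED = "highspeed"
    BANDWIDTH_ESTIMATION = "bandwidth-estimation"
    SKIPWARE_PER_CONNECTION = "skipware-per-connection"
    SKIPWARE_ERROR_TOLERANT = "skipware-error-tolerant"
    CUBIC = "cubic"


def resolve_auto_detect(peer_mode: Optional[Mode], skipware_negotiated: bool) -> Mode:
    """Sketch of the mode a SteelHead set to Auto-Detect ends up using.

    peer_mode is the peer SteelHead's setting for an inner connection,
    or None for a single-ended interception connection with no peer.
    """
    if peer_mode is not None:
        if peer_mode is Mode.AUTO_DETECT:
            # Both peers on Auto-Detect: Cubic is the local default.
            return Mode.CUBIC
        # Otherwise reflect the remote SteelHead's configured mode.
        return peer_mode
    if skipware_negotiated:
        # Assumption: "SkipWare when negotiated" means SkipWare per-connection.
        return Mode.SKIPWARE_PER_CONNECTION
    return Mode.STANDARD


def recommend_skipware_mode(link_error_rate: float) -> Mode:
    """Approximate guidance: per-connection below ~1% errors, error-tolerant above."""
    if not 0.0 <= link_error_rate <= 1.0:
        raise ValueError("error rate must be a fraction between 0 and 1")
    if link_error_rate < 0.01:
        return Mode.SKIPWARE_PER_CONNECTION
    return Mode.SKIPWARE_ERROR_TOLERANT


print(resolve_auto_detect(Mode.HIGHSPEED, skipware_negotiated=False))  # Mode.HIGHSPEED
print(resolve_auto_detect(None, skipware_negotiated=True))  # Mode.SKIPWARE_PER_CONNECTION
print(recommend_skipware_mode(0.03))  # Mode.SKIPWARE_ERROR_TOLERANT
```

A model like this is only a reasoning aid for predicting which mode a hub reflects for a given remote site; the appliance itself makes the decision during negotiation.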

Control | Description |

LAN Send Buffer Size | Specify the send buffer size used to send data out of the LAN. The default value is 81920 bytes. |

LAN Receive Buffer Size | Specify the receive buffer size used to receive data from the LAN. The default value is 32768 bytes. |

WAN Default Send Buffer Size | Specify the send buffer size used to send data out of the WAN. The default value is 262140 bytes. |

WAN Default Receive Buffer Size | Specify the receive buffer size used to receive data from the WAN. The default value is 262140 bytes. |

Control | Description |

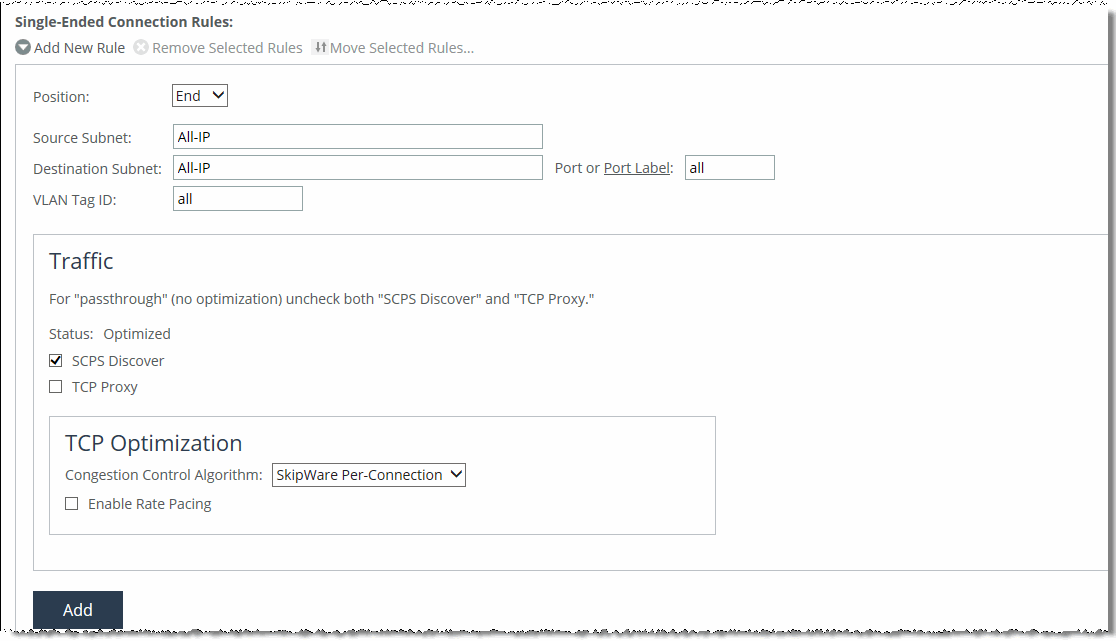

Add New Rule | Displays the controls for adding a new rule. |

Position | Select Start, End, or a rule number from the drop-down list. SteelHeads evaluate rules in numerical order, starting with rule 1. If the conditions set in a rule match, the rule is applied and the system moves on to the next packet. If the conditions don't match, the system consults the next rule. For example, if the conditions of rule 1 don't match, rule 2 is consulted; if rule 2 matches, it's applied and no further rules are consulted. |

Source Subnet | Specify an IPv4 or IPv6 address and mask for the traffic source. You can also specify wildcards: • All-IPv4 is the wildcard for single-stack IPv4 networks. • All-IPv6 is the wildcard for single-stack IPv6 networks. • All-IP is the wildcard for all IPv4 and IPv6 networks. Use these formats: xxx.xxx.xxx.xxx/xx (IPv4) or x:x:x::x/xxx (IPv6). |

Destination Subnet | Specify an IPv4 or IPv6 address and mask for the traffic destination. You can also specify wildcards: • All-IPv4 is the wildcard for single-stack IPv4 networks. • All-IPv6 is the wildcard for single-stack IPv6 networks. • All-IP is the wildcard for all IPv4 and IPv6 networks. Use these formats: xxx.xxx.xxx.xxx/xx (IPv4) or x:x:x::x/xxx (IPv6). |

Port or Port Label | Specify the destination port number, port label, or all. Click Port Label to go to the Networking > App Definitions: Port Labels page for reference. |

VLAN Tag ID | Specify one of the following: a VLAN identification number from 1 to 4094; all, to specify that the rule applies to all VLANs; or untagged, to specify that the rule applies to untagged connections. RiOS supports IEEE 802.1Q VLAN tagging. To configure VLAN tagging, configure SCPS rules to apply to all VLANs or to a specific VLAN. By default, rules apply to all VLAN values unless you specify a particular VLAN ID. Pass-through traffic maintains any preexisting VLAN tagging between the LAN and WAN interfaces. |

Traffic | Specifies the action that the rule takes on a SCPS connection. To allow single-ended interception SCPS connections to pass through the SteelHead unoptimized, disable SCPS Discover and TCP Proxy. Select one of these options: – SCPS Discover—Enables SCPS and disables TCP proxy. – TCP Proxy—Disables SCPS and enables TCP proxy. |

Congestion Control Algorithm | Select a method for congestion control from the drop-down list. • Standard (RFC-Compliant): Optimizes non-SCPS TCP connections by applying data and transport streamlining for TCP traffic over the WAN. This control forces peers to use standard TCP as well. For details on data and transport streamlining, see the SteelHead Deployment Guide. This option clears any advanced bandwidth congestion control that was previously set. • HighSpeed: Enables high-speed TCP optimization for more complete use of long fat pipes (high-bandwidth, high-delay networks). Don't enable it for satellite networks. We recommend that you enable high-speed TCP optimization only after you have carefully evaluated whether it will benefit your network environment. For details about the trade-offs of enabling high-speed TCP, see the tcp highspeed enable command in the Riverbed Command-Line Interface Reference Manual. • Bandwidth Estimation: Uses an intelligent bandwidth estimation algorithm along with a modified slow-start algorithm to optimize performance in long lossy networks. These networks typically include satellite and other wireless environments, such as cellular networks, long-haul microwave links, or WiMAX networks. Bandwidth estimation is a sender-side modification of TCP and is compatible with the other TCP stacks in the RiOS system. The intelligent bandwidth estimation is based on analysis of both ACKs and latency measurements. The modified slow-start mechanism lets a flow ramp up faster in high-latency environments than traditional TCP does. The intelligent bandwidth estimation algorithm learns effective rates for use during modified slow start, and it distinguishes BER loss from congestion-derived loss and handles each accordingly. Bandwidth estimation has good fairness and friendliness qualities toward other traffic along the path. • SkipWare Per-Connection: Applies TCP congestion control to each SCPS-capable connection. This method is compatible with IPv6. The congestion control uses: – a pipe algorithm that gates when a packet should be sent after receipt of an ACK. – the NewReno algorithm, which includes the sender's congestion window, slow start, and congestion avoidance. – time stamps, window scaling, appropriate byte counting, and loss detection. This transport setting uses a modified slow-start algorithm and a modified congestion-avoidance approach. This method enables SkipWare per-connection to ramp up flows faster in high-latency environments and handle lossy scenarios, while remaining reasonably fair and friendly to other traffic. SkipWare per-connection efficiently fills satellite links of all sizes and is a high-performance option for satellite networks. The Management Console dims this setting until you install a SkipWare license. • SkipWare Error-Tolerant: Enables SkipWare optimization with the error-rate detection and recovery mechanism on the SteelHead. This method is compatible with IPv6. It tolerates some loss due to corrupted packets (bit errors) without reducing throughput, using a modified slow-start algorithm and a modified congestion-avoidance approach. It requires significantly more retransmitted packets to trigger its congestion-avoidance algorithm than the SkipWare per-connection setting does. Error-tolerant TCP optimization assumes that the environment has a high BER and that most retransmissions are due to poor signal quality rather than congestion. This method maximizes performance in high-loss environments without incurring the additional per-packet overhead of a forward error correction (FEC) algorithm at the transport layer. Use caution when enabling error-tolerant TCP optimization, particularly in channels with coexisting TCP traffic, because it can be aggressive and can increase congestion for competing TCP flows. The Management Console dims this setting until you install a SkipWare license. |

Enable Rate Pacing | Imposes a global data-transmit limit on the link rate for all SCPS connections between peer SteelHeads, or on the link rate for a SteelHead paired with a third-party device running TCP-PEP (Performance Enhancing Proxy). Rate pacing combines MX-TCP and a congestion-control method of your choice for connections between peer SteelHeads and for SEI connections (on a per-rule basis). The congestion-control method runs as an overlay on top of MX-TCP, probes for the actual link rate, and communicates the available bandwidth to MX-TCP. Enable rate pacing to prevent these problems: • Congestion loss while exiting the slow-start phase. Slow start is the part of TCP congestion control in which the sender begins with a small window and increases it as it gains confidence about the network throughput. • Congestion collapse. • Packet bursts. Rate pacing is disabled by default. With no congestion, the slow-start phase ramps up to the MX-TCP rate and settles there. When RiOS detects congestion (because of other sources of traffic, a bottleneck other than the satellite modem, or a variable modem rate), the congestion-control method kicks in to avoid congestion loss and exit the slow-start phase faster. Enable rate pacing on the client-side SteelHead along with a congestion-control method. The client-side SteelHead communicates to the server-side SteelHead that rate pacing is in effect. You must also: • Enable Auto-Detect TCP Optimization on the server-side SteelHead to negotiate the configuration with the client-side SteelHead. • Configure an MX-TCP QoS rule to set the appropriate rate cap. If an MX-TCP QoS rule is not in place, rate pacing is not applied and the congestion-control method takes effect. You can't delete the MX-TCP QoS rule while rate pacing is enabled. The Management Console dims this setting until you install a SkipWare license. Rate pacing doesn't support IPv6. You can also enable rate pacing for SEI connections by defining an SEI rule for each connection. |

Add | Adds the rule to the list. The Management Console redisplays the SCPS Rules table and applies your modifications to the running configuration, which is stored in memory. |

Remove Selected Rules | Select the check box next to the name and click Remove Selected. |

Move Selected Rules | Moves the selected rules. Click the arrow next to the desired rule position; the rule moves to the new position. |
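The Position, Source Subnet, Destination Subnet, Port, and VLAN Tag ID controls together describe a first-match rule table. The following Python sketch models that evaluation under stated assumptions: the `ScpsRule` class and the helper functions are hypothetical, port labels are not modeled (a rule's port is a number, or `None` for all), and the rule's Traffic setting is carried as a plain string. Subnet matching uses the standard `ipaddress` module, including the All-IPv4, All-IPv6, and All-IP wildcards.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional, Union

IPAddress = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]


@dataclass
class ScpsRule:
    """Hypothetical model of one row in the SCPS rules table."""
    src: str               # subnet such as "10.1.0.0/16", or All-IPv4 / All-IPv6 / All-IP
    dst: str
    port: Optional[int]    # None stands for "all"; port labels are not modeled
    vlan: Union[int, str]  # 1-4094, "all", or "untagged"
    traffic: str           # the rule's Traffic setting, e.g. "SCPS Discover"


def subnet_matches(spec: str, addr: IPAddress) -> bool:
    """Match an address against a subnet or one of the documented wildcards."""
    if spec == "All-IP":
        return True
    if spec == "All-IPv4":
        return addr.version == 4
    if spec == "All-IPv6":
        return addr.version == 6
    # ipaddress returns False (not an error) when the IP versions differ.
    return addr in ipaddress.ip_network(spec, strict=False)


def vlan_matches(rule_vlan: Union[int, str], vlan_id: Optional[int]) -> bool:
    """vlan_id is None for an untagged connection."""
    if rule_vlan == "all":
        return True
    if rule_vlan == "untagged":
        return vlan_id is None
    return vlan_id == rule_vlan


def first_match(rules: List[ScpsRule], src: str, dst: str, dst_port: int,
                vlan_id: Optional[int] = None) -> Optional[ScpsRule]:
    """Return the first rule, in table order, whose conditions all match."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for rule in rules:  # rule 1 is consulted first
        if (subnet_matches(rule.src, s) and subnet_matches(rule.dst, d)
                and (rule.port is None or rule.port == dst_port)
                and vlan_matches(rule.vlan, vlan_id)):
            return rule  # a match ends the search; later rules are not consulted
    return None


rules = [
    ScpsRule("10.1.0.0/16", "All-IPv4", None, "all", "TCP Proxy"),
    ScpsRule("All-IP", "All-IP", None, "all", "SCPS Discover"),
]
print(first_match(rules, "10.1.2.3", "192.0.2.10", 443).traffic)      # TCP Proxy
print(first_match(rules, "2001:db8::1", "2001:db8::2", 443).traffic)  # SCPS Discover
```

Because evaluation stops at the first match, a broad All-IP rule placed at Start would shadow every rule below it, so narrower rules generally belong nearer the top of the table.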

Task | Reference |

1. Enable high-speed TCP support. | |

2. Increase the WAN buffers to 2 * bandwidth-delay product (BDP). Calculate the WAN buffer size as: Buffer size in bytes = 2 * bandwidth (bits per second) * round-trip delay (seconds) / 8 (bits per byte). Example, for a link of 155 Mbps with a 100-ms round-trip delay: Bandwidth = 155 Mbps = 155,000,000 bps; Delay = 100 ms = 0.1 s; BDP = 155,000,000 * 0.1 / 8 = 1,937,500 bytes; Buffer size = 2 * BDP = 2 * 1,937,500 = 3,875,000 bytes. If this number is greater than the default (256 KB), enable HS-TCP with the correct buffer size. | |

3. Increase the LAN buffers to 1 MB. | |

4. Enable in-path support. | |
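The buffer arithmetic in step 2 can be checked with a short Python sketch. The function names are hypothetical. The sketch takes the round-trip delay in whole milliseconds and uses integer arithmetic (rounding up) so that the 155 Mbps, 100 ms example reproduces exactly, and it compares the result against the 262140-byte WAN default from the buffer table, which appears to correspond to the 256 KB default mentioned in step 2.

```python
# Default WAN send/receive buffer size in bytes, from the buffer table above.
DEFAULT_WAN_BUFFER_BYTES = 262140


def bdp_bytes(bandwidth_bps: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes: bandwidth (bit/s) * delay (s) / 8, rounded up."""
    # Dividing by 1000 converts ms to s and dividing by 8 converts bits to bytes.
    return -(-(bandwidth_bps * rtt_ms) // 8000)


def wan_buffer_bytes(bandwidth_bps: int, rtt_ms: int) -> int:
    """Recommended WAN buffer size from step 2: 2 * BDP."""
    return 2 * bdp_bytes(bandwidth_bps, rtt_ms)


def needs_larger_wan_buffer(bandwidth_bps: int, rtt_ms: int,
                            default: int = DEFAULT_WAN_BUFFER_BYTES) -> bool:
    """True when 2 * BDP exceeds the default WAN buffer size."""
    return wan_buffer_bytes(bandwidth_bps, rtt_ms) > default


# Worked example from step 2: 155 Mbps link, 100 ms round-trip delay.
print(bdp_bytes(155_000_000, 100))                # 1937500
print(wan_buffer_bytes(155_000_000, 100))         # 3875000
print(needs_larger_wan_buffer(155_000_000, 100))  # True
```

Working in integer milliseconds avoids floating-point rounding in the `0.1 s` conversion; for links where the 2 * BDP result stays below the default, step 2 implies the default buffers are already large enough.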