QoS Overview

QoS is a reservation system for network traffic. In its most basic form, QoS allows organizations to allocate scarce network resources across multiple traffic types of varying importance. QoS implementations let organizations control each application according to how much bandwidth it can use and how sensitive it is to delay.

QoS Enhancements by Version

This section lists and describes new QoS features and enhancements by RiOS version.

RiOS 9.1 provides these enhancements:

• Differentiated Services Code Point (DSCP) Marking to Prioritize Out-of-Band (OOB) Control Channel Traffic - An OOB connection is a TCP connection that SteelHeads establish with each other when they begin optimizing traffic. They use it to exchange capabilities and feature information such as licensing information, hostname, and RiOS version, and they also use this control channel information to detect failures. You can now mark OOB connections with a DSCP or ToS IP value to prioritize or classify the Riverbed control channel traffic. This helps keep control packets from being dropped in a lossy or congested network, so that the control channel isn't torn down unexpectedly.

• Increase in Applications Recognized by the AFE - The Application Flow Engine (AFE) recognizes over 1,300 application signatures, providing an efficient and accurate way to identify applications for advanced classification and shaping of network traffic. To view the predefined global application list, see List of Recognized Applications.
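A DSCP value occupies the six most significant bits of the IPv4 ToS byte (the IPv6 Traffic Class byte), as defined in RFC 2474, so the DSCP and ToS numbers for the same marking differ by a factor of four. The following Python sketch shows that conversion. It uses only the standard bit layout and isn't a RiOS command or API; the function names are illustrative, and CS6 is shown only as an example of a marking a site might choose for control traffic, not as a Riverbed default.

```python
def dscp_to_tos(dscp: int) -> int:
    """Return the ToS byte that carries this DSCP value (ECN bits left at 0)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0-63")
    return dscp << 2  # DSCP sits in the six most significant bits


def tos_to_dscp(tos: int) -> int:
    """Extract the DSCP value from a ToS byte, ignoring the two ECN bits."""
    if not 0 <= tos <= 255:
        raise ValueError("ToS must be in the range 0-255")
    return tos >> 2


# Example markings: CS6 (commonly used for network control), EF, and AF41.
for name, dscp in [("CS6", 48), ("EF", 46), ("AF41", 34)]:
    print(f"{name}: DSCP {dscp} -> ToS {dscp_to_tos(dscp)}")
```

When a configuration page asks for a ToS value rather than a DSCP value, entering the DSCP number unconverted produces a different marking than intended.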

RiOS 9.0 provides these enhancements:

• Easy QoS Configuration - Simplifies configuration of application definitions and QoS classes. Now you can start with a basic QoS model and create per-site exceptions only as needed. Additionally, you no longer need to build individual rules to identify and classify traffic for QoS marking and shaping, because you can use application groups and business criticality definitions for faster and easier configuration. For details on application groups, see Defining Applications.

• QoS Profiles - Create a fully customizable class shaping hierarchy containing a set of rules and classes. View the class layout and details at a glance and reuse the profiles with multiple sites in both inbound and outbound QoS. Profiles in RiOS 9.0 and later replace service policies in previous versions.

• Inbound QoS and Outbound QoS Feature Parity - Removes inbound QoS restrictions to achieve full feature parity with outbound QoS.

RiOS 8.6 provides these enhancements:

• SSL Common Name Matching - Classify SSL pass-through traffic using a common name in a QoS rule.

• Substantial Increase in Applications Recognized by the AFE - The AFE recognizes over 1,000 application signatures, providing an efficient and accurate way to identify applications for advanced classification and shaping of network traffic. To view the predefined global application list, see List of Recognized Applications.

RiOS 8.5 provides these enhancements:

• SnapMirror Support - Use outbound QoS to prioritize SnapMirror replication jobs or shape optimized SnapMirror traffic that is sharing a WAN link with other enterprise protocols. QoS recognizes SnapMirror optimized flows and provisions five different service levels for each packet, based on priorities. You can also distinguish a job priority by filer and volume. You can create a QoS rule for the appropriate site and optionally specify a service class and DSCP marking per priority.

• Export QoS Configuration Statistics to a CascadeFlow Collector - CascadeFlow collectors can aggregate information about QoS configuration and other application statistics to send to a SteelCentral NetProfiler. The Enterprise NetProfiler summarizes and displays the QoS configuration statistics. For details, see Configuring Flow Statistics.

• LAN Bypass - Virtual in-path network topologies in which the LAN-bound traffic traverses the WAN interface might require that you configure the SteelHead to bypass LAN-bound traffic so it's not subject to the maximum root bandwidth limit. RiOS 7.0.3 introduced LAN bypass for outbound QoS shaping; RiOS 8.5 and later also support it for inbound QoS shaping.

• Host Label Handling - Specify a range of hostnames and subnets within a single QoS rule.

• Global DSCP Marking - By default, RiOS doesn't mark the setup of optimized connections or the out-of-band control connections with a DSCP value. Existing traffic marked with a DSCP value is classified into the default class. If your existing network provides multiple classes of service based on DSCP values, and you are integrating a SteelHead into your environment, you can use the Global DSCP feature to prevent dropped packets and other undesired effects.

• QoS with IPv6 - RiOS 8.5 and later don't support IPv6 traffic for QoS shaping or AFE-based classification. If you enable QoS shaping for a specific interface, all IPv6 packets for that interface are classified to the default class. You can mark IPv6 traffic with an IP ToS value. You can also configure the SteelHead to reflect an existing traffic class from the LAN side to the WAN side of the SteelHead.

QoS classes are based on traffic importance, bandwidth needs, and delay-sensitivity. You allocate network resources to each of the classes. Traffic flows according to the network resources allocated to its class.

You configure QoS on client-side and server-side SteelHeads to control the prioritization of different types of network traffic and to ensure that SteelHeads give certain network traffic (for example, Voice over IP (VoIP)) higher priority than other network traffic.

Traffic Classification

QoS allows you to specify priorities for particular classes of traffic and properly distribute excess bandwidth among classes. The QoS classification algorithm provides mechanisms for link sharing and priority services while decoupling delay and bandwidth allocation.

Many QoS implementations use some form of Packet Fair Queueing (PFQ), such as Weighted Fair Queueing or Class-Based Weighted Fair Queueing. As long as high-bandwidth traffic requires a high priority (or vice-versa), PFQ systems perform adequately. However, problems arise for PFQ systems when the traffic mix includes high-priority, low-bandwidth traffic, or high-bandwidth traffic that doesn’t require a high priority, particularly when both of these traffic types occur together. Features such as low-latency queueing (LLQ) attempt to address these concerns by introducing a separate system of strict priority queueing that is used for high-priority traffic. However, LLQ isn’t an effective way of handling bandwidth and latency trade-offs. LLQ is a separate queueing mechanism meant as a workaround for PFQ limitations.

The Riverbed QoS system isn’t based on PFQ, but rather on Hierarchical Fair Service Curve (HFSC). HFSC delivers low latency to traffic without wasting bandwidth and delivers high bandwidth to delay-insensitive traffic without disrupting delay-sensitive traffic. The Riverbed QoS system achieves the benefits of LLQ without the complexity and potential configuration errors of a separate queueing mechanism.

The SteelHead HFSC-based QoS enforcement system provides the flexibility needed to simultaneously support varying degrees of delay requirements and bandwidth usage. For example, you can enforce a mix of high-priority, low-bandwidth traffic patterns (for example, SSH, Telnet, Citrix, RDP, and CRM systems) alongside lower-priority, high-bandwidth traffic (for example, FTP, backup, and replication). RiOS QoS allows you to protect delay-sensitive traffic such as VoIP, RDP, and Citrix without having to reserve large amounts of bandwidth for their traffic classes.
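In the HFSC literature, each class's guarantee is a service curve, commonly a two-piece linear curve: slope m1 for an initial interval d, then slope m2. Choosing m1 larger than m2 gives a class a short delay bound for small bursts without raising its long-term rate m2, which is how HFSC decouples delay from bandwidth. The Python sketch below computes the delay bound such a curve implies for one packet. It illustrates the general HFSC concept and isn't Riverbed's implementation; the packet size and rates are hypothetical.

```python
def service_curve(t: float, m1: float, d: float, m2: float) -> float:
    """Bits guaranteed by time t (s): slope m1 (bps) until d (s), then m2 (bps)."""
    if t <= d:
        return m1 * t
    return m1 * d + m2 * (t - d)


def delay_bound(bits: float, m1: float, d: float, m2: float) -> float:
    """Earliest time t (s) at which service_curve(t) reaches `bits`."""
    if bits <= m1 * d:
        return bits / m1
    return d + (bits - m1 * d) / m2


VOIP_PACKET_BITS = 200 * 8  # hypothetical 200-byte voice packet

# Rate-only guarantee: 64 kbps from the start (no initial burst segment).
linear = delay_bound(VOIP_PACKET_BITS, m1=64_000, d=0.0, m2=64_000)
# Same 64 kbps long-term rate, but 640 kbps during the first 5 ms.
concave = delay_bound(VOIP_PACKET_BITS, m1=640_000, d=0.005, m2=64_000)

print(f"linear:  {linear * 1000:.1f} ms")
print(f"concave: {concave * 1000:.1f} ms")
```

With these numbers the linear curve bounds the packet's delay at 25 ms, while the concave curve bounds it at 2.5 ms, even though both classes are limited to the same 64 kbps over the long term.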

QoS classification occurs during connection setup for optimized traffic, before optimization and compression. QoS shaping and enforcement occur after optimization and compression.

By design, QoS is applied to both pass-through and optimized traffic; however, you can choose to classify either pass-through or optimized traffic. QoS is implemented in the operating system; it’s not a part of the optimization service. When the optimization service is disabled, all the traffic is pass-through and is still shaped by QoS.

Note: Flows can be incorrectly classified if there are asymmetric routes in the network when any of the QoS features are enabled.

QoS EX xx60 Series Limits

Riverbed limits the maximum configurable root bandwidth on the SteelHead EX xx60 series models, as shown in this table. Riverbed recommends the maximum classes, rules, and sites shown in this table for optimal performance and to avoid delays while changing the QoS configuration.

The QoS bandwidth limits are global across all WAN interfaces and the primary interface.

Traffic that passes through a SteelHead EX but is not destined to the WAN is not subject to the QoS bandwidth limit. Examples of traffic that is not subject to the bandwidth limits include routing updates, DHCP requests, and default gateways on the WAN-side of the SteelHead EX that redirect traffic back to other LAN-side subnets.

| SteelHead Appliance EX Model | Maximum Configurable Root Bandwidth (Mbps) | Recommended Maximum Classes | Recommended Maximum Rules | Recommended Maximum Sites |
|---|---|---|---|---|
| EX560 | 12 (G, L, and M configurations); 20 (H configuration) | 250 | 250 | 25 |
| EX760 | 45 | 500 | 500 | 50 |
| EX1160 | 100 | 500 | 500 | 50 |
| EX1260 | 100 | 500 | 500 | 50 |
| EX1360 | 100 | 500 | 500 | 100 |
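To check a planned QoS configuration against the table, the values can be encoded as data. The following Python sketch does that and lists any value that exceeds a limit. The dictionary keys (such as EX560-H), the ExLimits type, and the check_qos_plan function are hypothetical and aren't a RiOS feature; root bandwidth is reported as exceeding a hard maximum, and the other columns as exceeding recommendations, matching how the text describes them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExLimits:
    root_mbps: int  # maximum configurable root bandwidth (hard limit)
    classes: int    # recommended maximum classes
    rules: int      # recommended maximum rules
    sites: int      # recommended maximum sites


# Transcribed from the EX xx60 table; EX560 varies by configuration.
EX_LIMITS = {
    "EX560-G": ExLimits(12, 250, 250, 25),
    "EX560-L": ExLimits(12, 250, 250, 25),
    "EX560-M": ExLimits(12, 250, 250, 25),
    "EX560-H": ExLimits(20, 250, 250, 25),
    "EX760": ExLimits(45, 500, 500, 50),
    "EX1160": ExLimits(100, 500, 500, 50),
    "EX1260": ExLimits(100, 500, 500, 50),
    "EX1360": ExLimits(100, 500, 500, 100),
}


def check_qos_plan(model: str, root_mbps: int, classes: int, rules: int, sites: int) -> list[str]:
    """Return one message per planned value that exceeds the table limits."""
    limits = EX_LIMITS[model]
    problems = []
    if root_mbps > limits.root_mbps:
        problems.append(f"root bandwidth {root_mbps} Mbps exceeds the {limits.root_mbps} Mbps maximum")
    for label, value, limit in [
        ("classes", classes, limits.classes),
        ("rules", rules, limits.rules),
        ("sites", sites, limits.sites),
    ]:
        if value > limit:
            problems.append(f"{value} {label} exceeds the recommended {limit}")
    return problems


print(check_qos_plan("EX760", root_mbps=50, classes=120, rules=300, sites=60))
```

For the EX760 example, the sketch reports both the root bandwidth (above 45 Mbps) and the site count (above 50).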


Bypassing LAN Traffic

We recommend a maximum limit on the configurable root bandwidth for the WAN interface. The hardware platform determines the recommended limit.

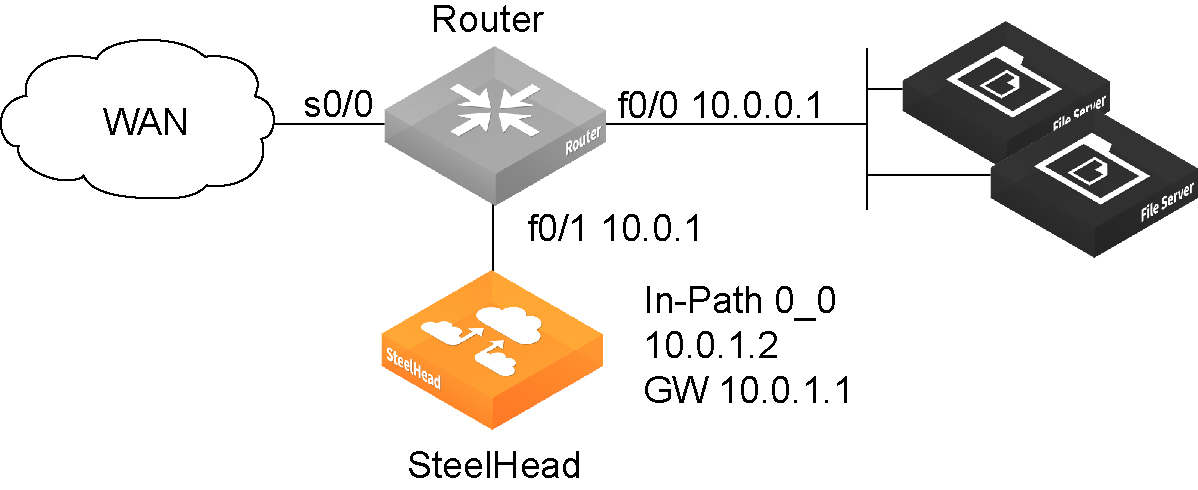

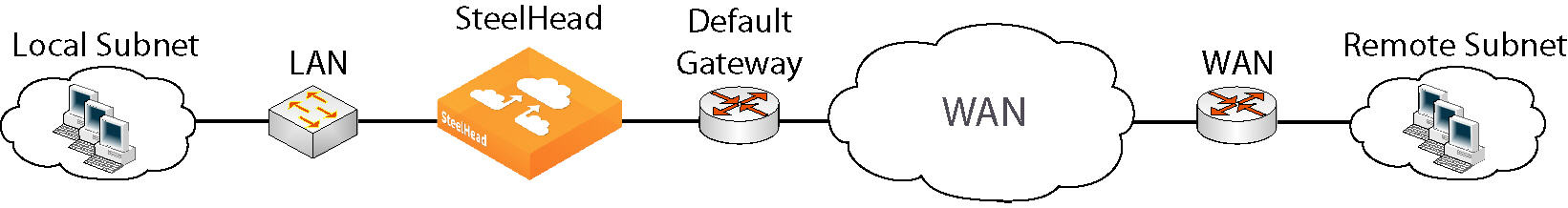

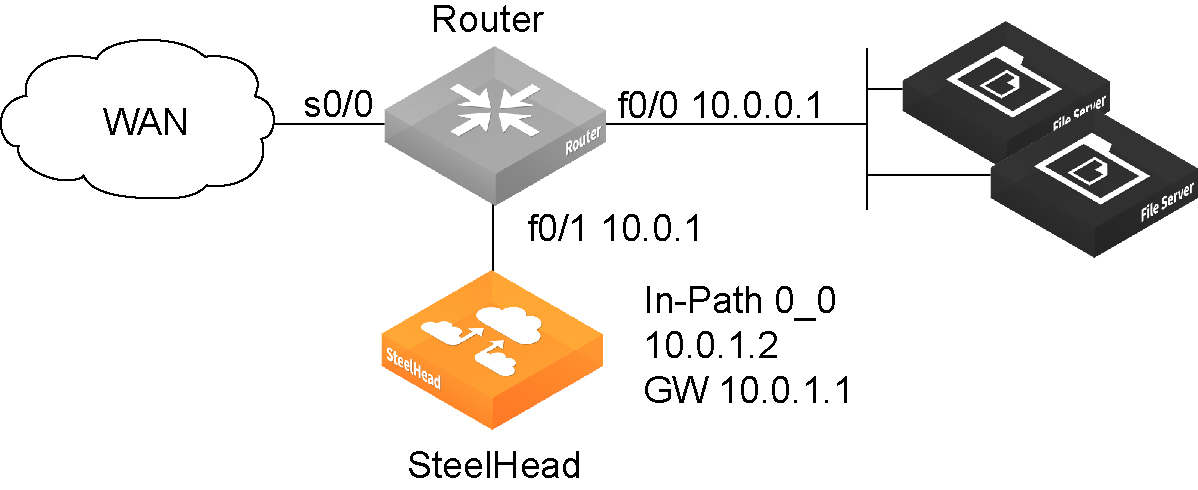

Certain virtual in-path network topologies where the LAN-bound traffic traverses the WAN interface might require that the SteelHead bypass LAN-bound traffic so that it's not included in the rate limit determined by the recommended maximum root bandwidth. Examples include WCCP deployments and deployments with a WAN-side default gateway.

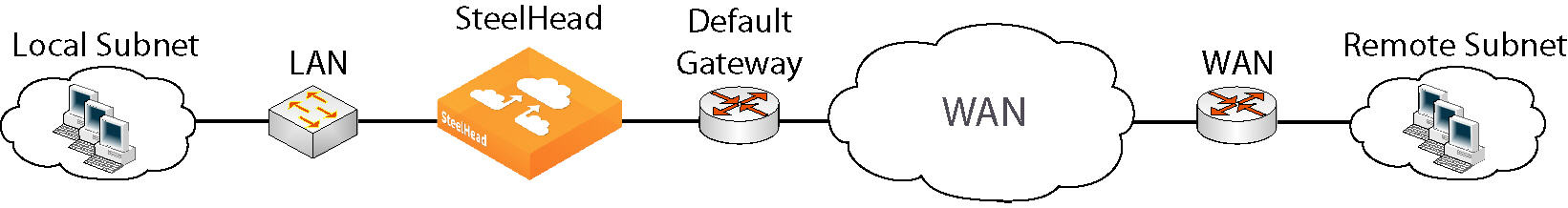

Figure: In-path Configuration Where Default LAN Gateway is Accessible Over the SteelHead WAN Interface and Figure: WCCP Configuration Where Default LAN Gateway is Accessible Over the SteelHead WAN Interface illustrate topologies where the default LAN gateway or router is accessible over the WAN interface of the SteelHead. If there are two clients in the local subnet, traffic between the two clients is routed only after it reaches the LAN gateway. As a result, this traffic traverses the WAN interface of the SteelHead.

Figure: In-path Configuration Where Default LAN Gateway is Accessible Over the SteelHead WAN Interface

Figure: WCCP Configuration Where Default LAN Gateway is Accessible Over the SteelHead WAN Interface

In a QoS configuration for these topologies, suppose you have created several QoS classes and configured the root class with the WAN interface rate. Each of the other classes is allocated a percentage of the root class. In this scenario, the LAN traffic is rate limited because RiOS classifies it into one of the classes under the root class.
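A short calculation with hypothetical numbers shows the effect. The Python sketch below assumes a 20 Mbps root class and three child classes defined as percentages of it, and treats each class rate as a hard limit with no borrowing of unused bandwidth between classes. That is a deliberate simplification of the scheduler, and the class names and traffic rates are illustrative only.

```python
ROOT_MBPS = 20  # hypothetical WAN rate configured on the root class

# Hypothetical child classes, each allocated a percentage of the root class.
class_percent = {"Realtime": 20, "Business": 50, "Default": 30}
class_rate = {name: ROOT_MBPS * pct / 100 for name, pct in class_percent.items()}

# WAN-bound traffic that belongs in the Default class.
wan_default_mbps = 4.0
# Client-to-client traffic that hairpins through the WAN interface and is
# also classified into Default because nothing exempts it from QoS.
lan_default_mbps = 5.0

cap = class_rate["Default"]
offered = wan_default_mbps + lan_default_mbps
excess = max(0.0, offered - cap)

print(f"Default cap {cap} Mbps, offered {offered} Mbps, excess {excess} Mbps")
print("WAN traffic alone fits:", wan_default_mbps <= cap)
```

The WAN traffic alone (4 Mbps) fits within the 6 Mbps class rate, but the hairpinned LAN traffic pushes the class 3 Mbps over its limit, so both LAN and WAN flows in that class are slowed.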

You can use the LAN bypass feature to exempt certain subnets from QoS enforcement, bypassing the rate limit. The LAN bypass feature is enabled by default and comes into effect when subnet side rules are configured.

To filter the LAN traffic from the WAN traffic

1. If QoS isn’t running, choose Networking > Network Services: Quality of Service and enable inbound or outbound QoS Shaping.

2. Choose Networking > Network Services: Subnet Side Rules.

3. Click Add a Subnet Side Rule.

4. Select Start, End, or a rule number from the drop-down list.

5. Specify the client-side SteelHead subnet using the format <IP address>/<subnet mask>.

6. Select Subnet address is on the LAN side of the appliance.

7. Click Add.

To verify the traffic classification, choose Reports > Networking: Inbound QoS or Outbound QoS.

Note: The SteelHead processes the subnet side LAN rules before the QoS outbound rules.

Note: In a virtual in-path deployment, subnet side rules apply the same way to QoS and NetFlow. In an in-path deployment, NetFlow discards the subnet side rules.
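The subnet side rules form an ordered list, and choosing Start, End, or a rule number sets each rule's position. The Python sketch below models that list with the standard ipaddress module. It rests on two assumptions that this guide doesn't state: rules are evaluated top to bottom with the first match winning, and a flow is exempt from the root bandwidth limit only when both its source and destination match LAN-side rules. The SubnetSideRule type, the function names, and the subnets are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class SubnetSideRule:
    subnet: ipaddress.IPv4Network  # entered as <IP address>/<subnet mask>
    lan_side: bool                 # True: "Subnet address is on the LAN side"


def is_lan_side(addr: str, rules: list[SubnetSideRule]) -> bool:
    """Assumed behavior: first matching rule decides; no match means WAN side."""
    ip = ipaddress.ip_address(addr)
    for rule in rules:
        if ip in rule.subnet:
            return rule.lan_side
    return False


def bypasses_qos(src: str, dst: str, rules: list[SubnetSideRule]) -> bool:
    """Assumed LAN bypass test: both endpoints must resolve to the LAN side."""
    return is_lan_side(src, rules) and is_lan_side(dst, rules)


rules = [
    # Hypothetical exception placed first: this /24 is treated as WAN side.
    SubnetSideRule(ipaddress.ip_network("10.1.20.0/24"), lan_side=False),
    SubnetSideRule(ipaddress.ip_network("10.1.0.0/16"), lan_side=True),
]

print(bypasses_qos("10.1.5.10", "10.1.6.20", rules))   # both LAN side
print(bypasses_qos("10.1.5.10", "10.1.20.7", rules))   # first rule marks 10.1.20.0/24 WAN side
print(bypasses_qos("10.1.5.10", "192.0.2.50", rules))  # no rule matches the destination
```

Under these assumptions, moving the 10.1.20.0/24 rule below the /16 rule would change the second result, which is why rule position matters.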

QoS Classification for the FTP Data Channel

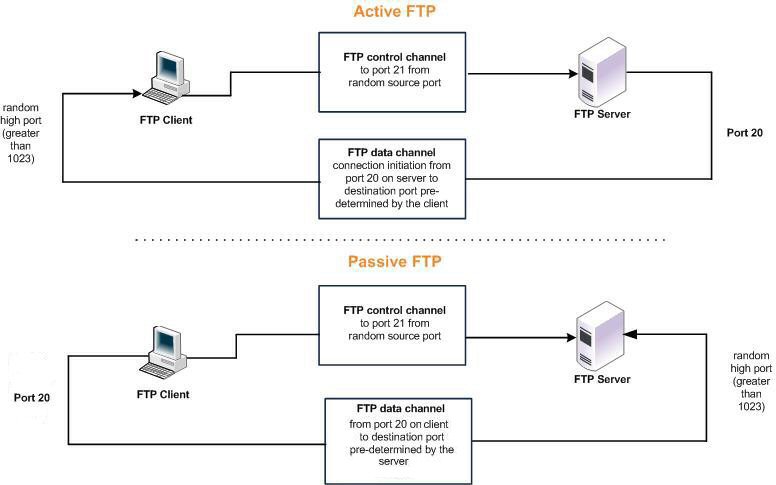

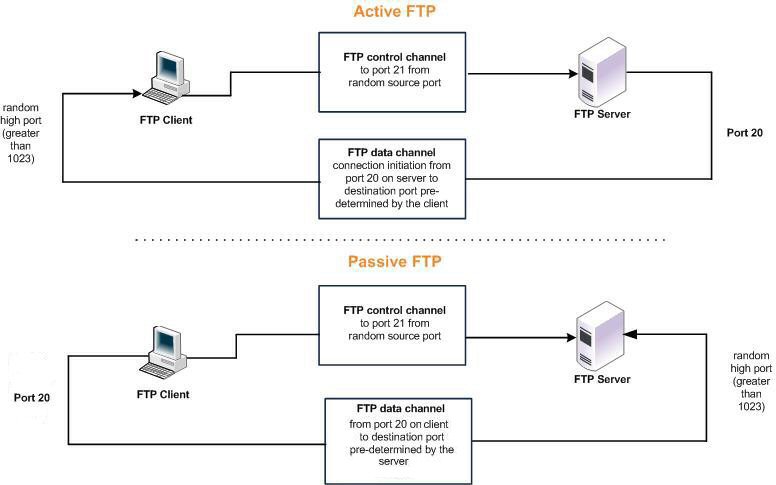

When configuring QoS classification for FTP, the QoS rules differ depending on whether the FTP data channel is using active or passive FTP. Active versus passive FTP determines whether the FTP client or the FTP server selects the port used for the data channel, which has implications for QoS classification.

The Application Flow Engine (AFE) doesn't support passive FTP. Because passive FTP uses random high TCP port numbers to set up its data channel, the FTP data traffic can't be classified on the TCP port numbers. To classify passive FTP traffic, you can add an application rule where the application is FTP and that matches the FTP server's IP address.

Active FTP Classification

With active FTP, the FTP client logs in and enters the PORT command, informing the server which port it must use to connect to the client for the FTP data channel. Next, the FTP server initiates the connection toward the client. From a TCP perspective, the server and the client swap roles. The FTP server becomes the client because it sends the SYN packet, and the FTP client becomes the server because it receives the SYN packet.

Although not defined in the RFC, most FTP servers use source port 20 for the active FTP data channel.

For active FTP, configure a QoS rule on the server-side SteelHead to match source port 20. On the client-side SteelHead, configure a QoS rule to match destination port 20.
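The two port 20 rules mirror each other because the FTP server, not the client, sends the data-channel SYN. The Python sketch below models the port match each SteelHead applies to a data-channel packet heading toward the WAN. The Flow type and function names are hypothetical, the client port 5001 is an arbitrary example, and real QoS rules can match on more fields than ports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src_port: int
    dst_port: int


FTP_ACTIVE_DATA_PORT = 20  # conventional server-side data port (not mandatory)


def server_side_match(flow: Flow) -> bool:
    """Server-side SteelHead: packets leaving the FTP server have source port 20."""
    return flow.src_port == FTP_ACTIVE_DATA_PORT


def client_side_match(flow: Flow) -> bool:
    """Client-side SteelHead: packets leaving the FTP client have destination port 20."""
    return flow.dst_port == FTP_ACTIVE_DATA_PORT


# The client announced port 5001 with PORT; the server connects from port 20.
server_to_client = Flow(src_port=20, dst_port=5001)
client_to_server = Flow(src_port=5001, dst_port=20)

print(server_side_match(server_to_client))
print(client_side_match(client_to_server))
print(client_side_match(server_to_client))
```

A single rule on destination port 20 would therefore miss downloads leaving the server-side SteelHead, which is why each side gets its own rule.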

You can also use the AFE to classify active FTP traffic.

Passive FTP Classification

With passive FTP, the FTP client initiates both connections to the server. First, it requests passive mode by entering the PASV command after logging in. Next, it requests a port number for use with the data channel from the FTP server. The server agrees to this mode, selects a random port number, and returns it to the client. Once the client has this information, it initiates a new TCP connection for the data channel to the server-assigned port. Unlike active FTP, there's no role swapping: the FTP client sends the SYN packet for the data channel.

The FTP client receives a random port number from the FTP server. Because the FTP server can’t return a consistent port number to use with the FTP data channel, RiOS doesn’t support QoS Classification for passive FTP in versions earlier than RiOS 4.1.8, 5.0.6, or 5.5.1. Later RiOS releases support passive FTP and the QoS Classification configuration for passive FTP is the same as active FTP.

When configuring QoS Classification for passive FTP, port 20 on both the server and client-side SteelHeads means the port number used by the data channel for passive FTP, as opposed to the literal meaning of source or destination port 20.

Note: The SteelHead must intercept the FTP control channel (port 21), regardless of whether the FTP data channel is using active or passive FTP.
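In both modes the data-channel endpoint appears only in the control channel: the active PORT command and the server's 227 reply to PASV each carry it as six comma-separated decimal numbers, h1,h2,h3,h4,p1,p2, where the port is p1 * 256 + p2 (RFC 959). This is consistent with the note above that the SteelHead must intercept port 21. The Python sketch below extracts the endpoint from either message; the regular expression and function name are illustrative, and it doesn't handle the extended EPRT and EPSV commands.

```python
import re

# Six comma-separated numbers, as in "PORT 192,168,1,20,19,137" or
# "227 Entering Passive Mode (192,168,1,10,195,80)."
_HOST_PORT = re.compile(r"(\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3}),(\d{1,3})")


def parse_data_endpoint(line: str) -> tuple[str, int]:
    """Return (ip, port) announced by a PORT command or a 227 PASV reply."""
    match = _HOST_PORT.search(line)
    if match is None:
        raise ValueError(f"no h1,h2,h3,h4,p1,p2 field in {line!r}")
    nums = [int(group) for group in match.groups()]
    if any(n > 255 for n in nums):
        raise ValueError(f"field out of range in {line!r}")
    ip = ".".join(str(n) for n in nums[:4])
    port = nums[4] * 256 + nums[5]  # high byte, low byte
    return ip, port


print(parse_data_endpoint("PORT 192,168,1,20,19,137"))
print(parse_data_endpoint("227 Entering Passive Mode (192,168,1,10,195,80)."))
```

The PORT example announces 192.168.1.20 port 5001 (the client listens), and the PASV reply announces 192.168.1.10 port 50000 (the server listens).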


Figure: Active and Passive FTP

QoS Classification for Citrix Traffic

RiOS 9.x doesn't support packet-order queueing or latency priorities with Citrix traffic. We recommend using either the Autonegotiation of Multi-Stream ICA feature or the Multi-Port feature to classify Citrix traffic types for QoS. For details, see Configuring Citrix Optimization.

RiOS 8.6.x and earlier provide a way to classify Citrix traffic using QoS to differentiate between different traffic types within a Citrix session. QoS classification for Citrix traffic is beneficial in mixed-use environments where Citrix users perform printing and use drive-mapping features. Using QoS to classify Citrix traffic in a mixed-use environment provides optimal network performance for end users.

Citrix QoS classification provides support for Presentation Server 4.5, XenApp 5.0 and 6.0, and 10.x, 11.x, and 12.x clients.

The essential RiOS capabilities that ensure optimal delivery of Citrix traffic over the network are:

• Latency priority - The Citrix traffic application priority affects traffic latency, which allows you to assign interactive traffic a higher priority than print or drive-mapping traffic. A typical application priority for interactive Citrix sessions, such as screen updates, is real-time or interactive. Keep in mind that priority is relative to other classes in your QoS configuration.

• Bandwidth allocation (also known as traffic shaping) - When configuring QoS for Citrix traffic, it’s important to allocate the correct amount of bandwidth for each QoS traffic class. The amount you specify reserves a predetermined amount of bandwidth for each traffic class. Bandwidth allocation is important for ensuring that a given class of traffic can’t consume more bandwidth than it is allowed. It’s also important to ensure that a given class of traffic has a minimum amount of bandwidth available for delivery of data through the network.
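The two goals in the bandwidth allocation item, a guaranteed minimum and an upper limit per class, can be illustrated with a simple allocator. The Python sketch below grants each class its minimum and then shares the spare capacity equally among classes that still want more, never exceeding a class's maximum. This equal-share model is a swapped-in simplification for illustration, not how RiOS's HFSC scheduler divides excess bandwidth; the class names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClassSpec:
    minimum: float  # guaranteed bandwidth (Mbps)
    maximum: float  # upper limit (Mbps)
    demand: float   # bandwidth the class currently wants (Mbps)


def allocate(capacity: float, classes: dict[str, ClassSpec]) -> dict[str, float]:
    """Grant each class its minimum, then share spare capacity equally up to each cap."""
    alloc = {name: min(c.minimum, c.demand) for name, c in classes.items()}
    spare = capacity - sum(alloc.values())
    if spare < 0:
        raise ValueError("minimum guarantees exceed link capacity")
    # A class keeps competing for spare bandwidth until it reaches min(maximum, demand).
    active = [n for n, c in classes.items() if alloc[n] < min(c.maximum, c.demand)]
    while spare > 1e-9 and active:
        share = spare / len(active)
        for name in list(active):
            c = classes[name]
            ceiling = min(c.maximum, c.demand)
            grant = min(share, ceiling - alloc[name])
            alloc[name] += grant
            spare -= grant
            if alloc[name] >= ceiling - 1e-9:
                active.remove(name)
    return alloc


citrix = {
    "ica-interactive": ClassSpec(minimum=2, maximum=10, demand=3),
    "ica-printing": ClassSpec(minimum=1, maximum=2, demand=8),
    "bulk": ClassSpec(minimum=1, maximum=10, demand=10),
}
print(allocate(10, citrix))
```

On a 10 Mbps link, interactive ICA receives its full 3 Mbps demand, printing is held to its 2 Mbps upper limit even though it wants 8 Mbps, and bulk traffic receives the remaining 5 Mbps.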

The default ports for the Citrix service are 1494 (native ICA traffic) and 2598 (session reliability). To use session reliability, you must enable Citrix optimization on the SteelHead in order to classify the traffic correctly. You can enable and modify Citrix optimization settings in the Optimization > Protocols: Citrix page. For details, see Configuring Citrix Optimization.

You can use session reliability with optimized traffic only. Session reliability with RiOS QoS doesn't support pass-through traffic. For details about disabling session reliability, go to http://support.citrix.com/proddocs/index.jsp?topic=/xenapp5fp-w2k8/ps-sessions-sess-rel.html.

Note: If you upgrade from a previous RiOS version with an existing Citrix QoS configuration, the upgrade automatically combines the five preexisting Citrix rules into one.

Note: For QoS configuration examples, see the SteelHead Deployment Guide.