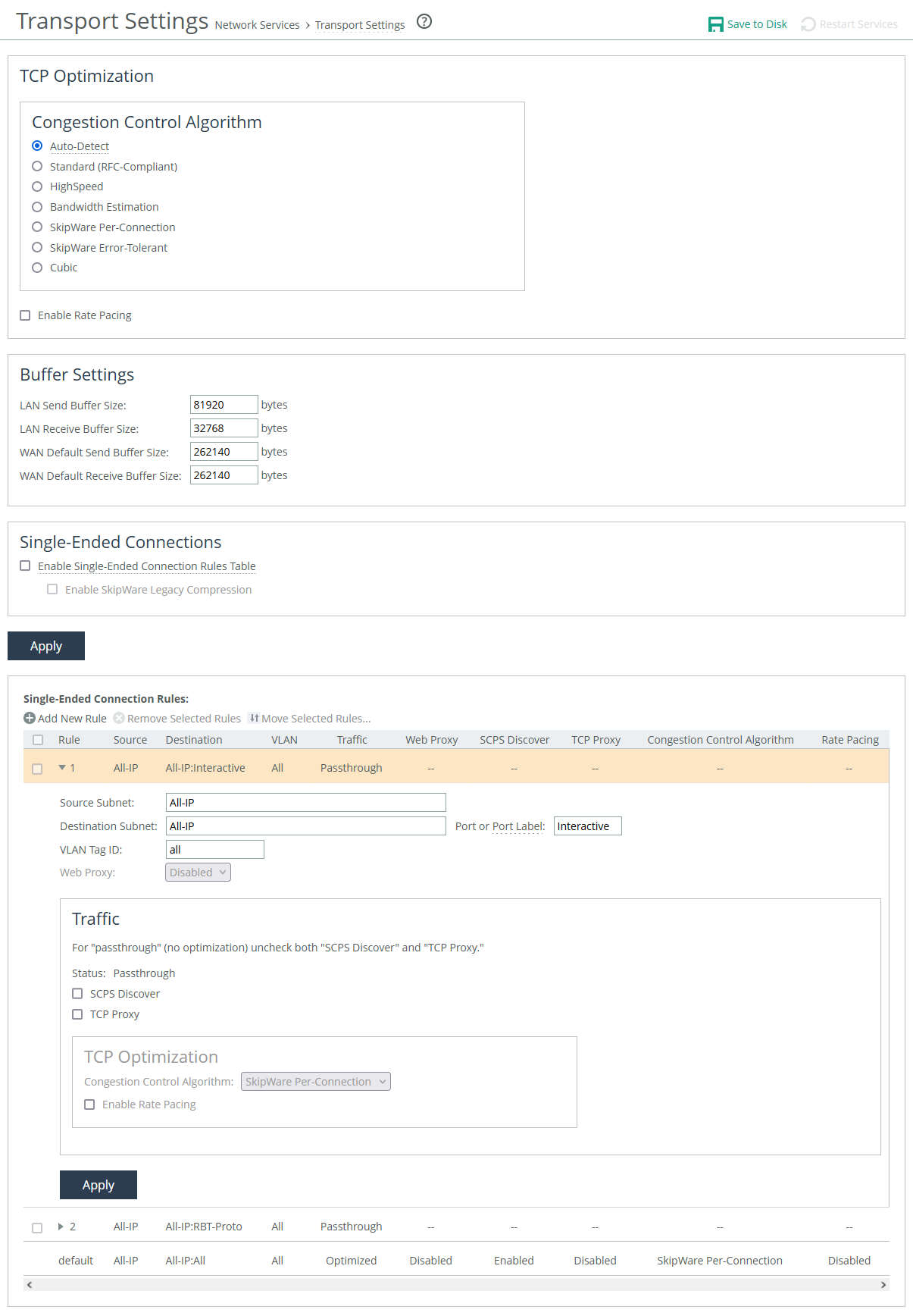

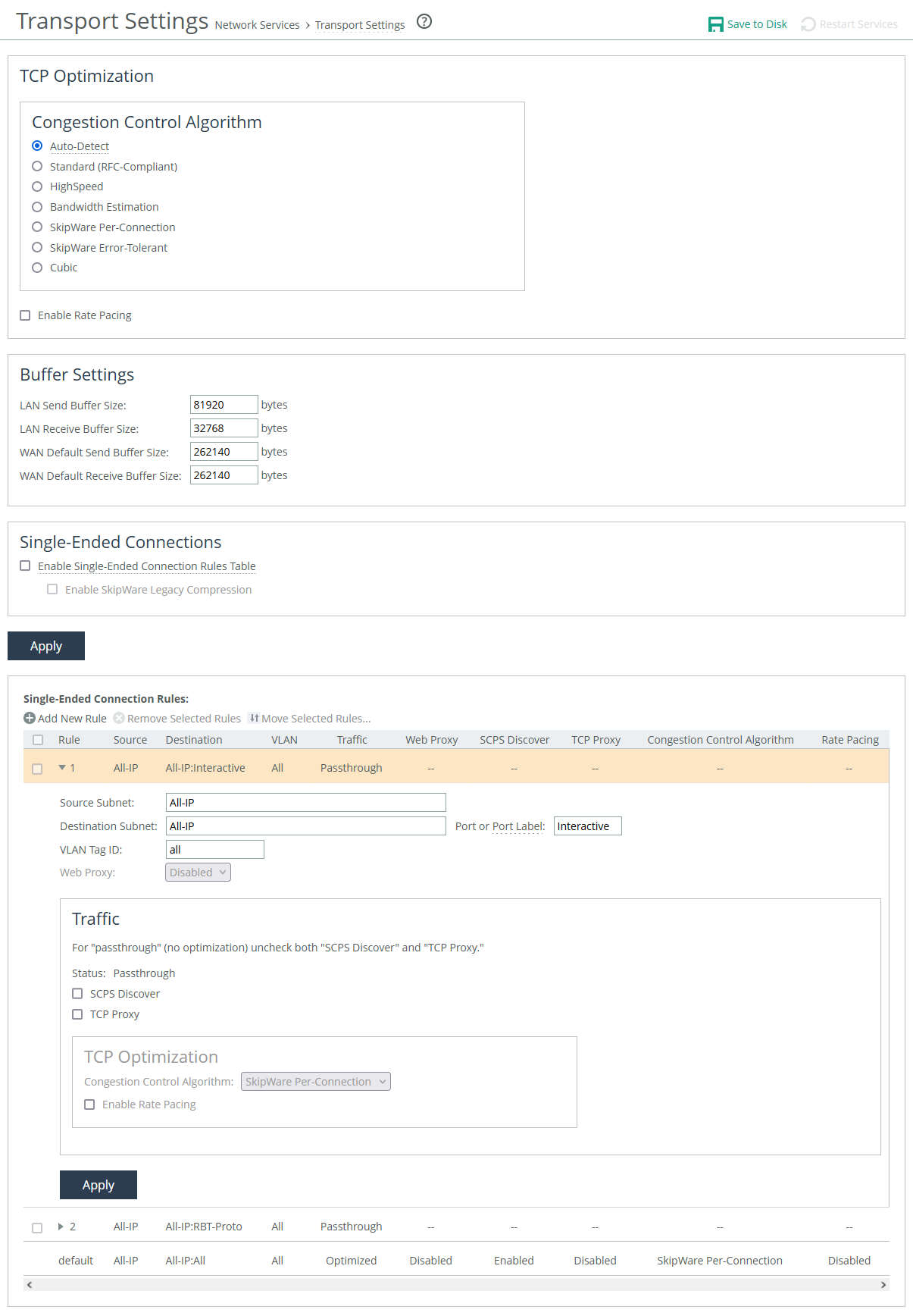

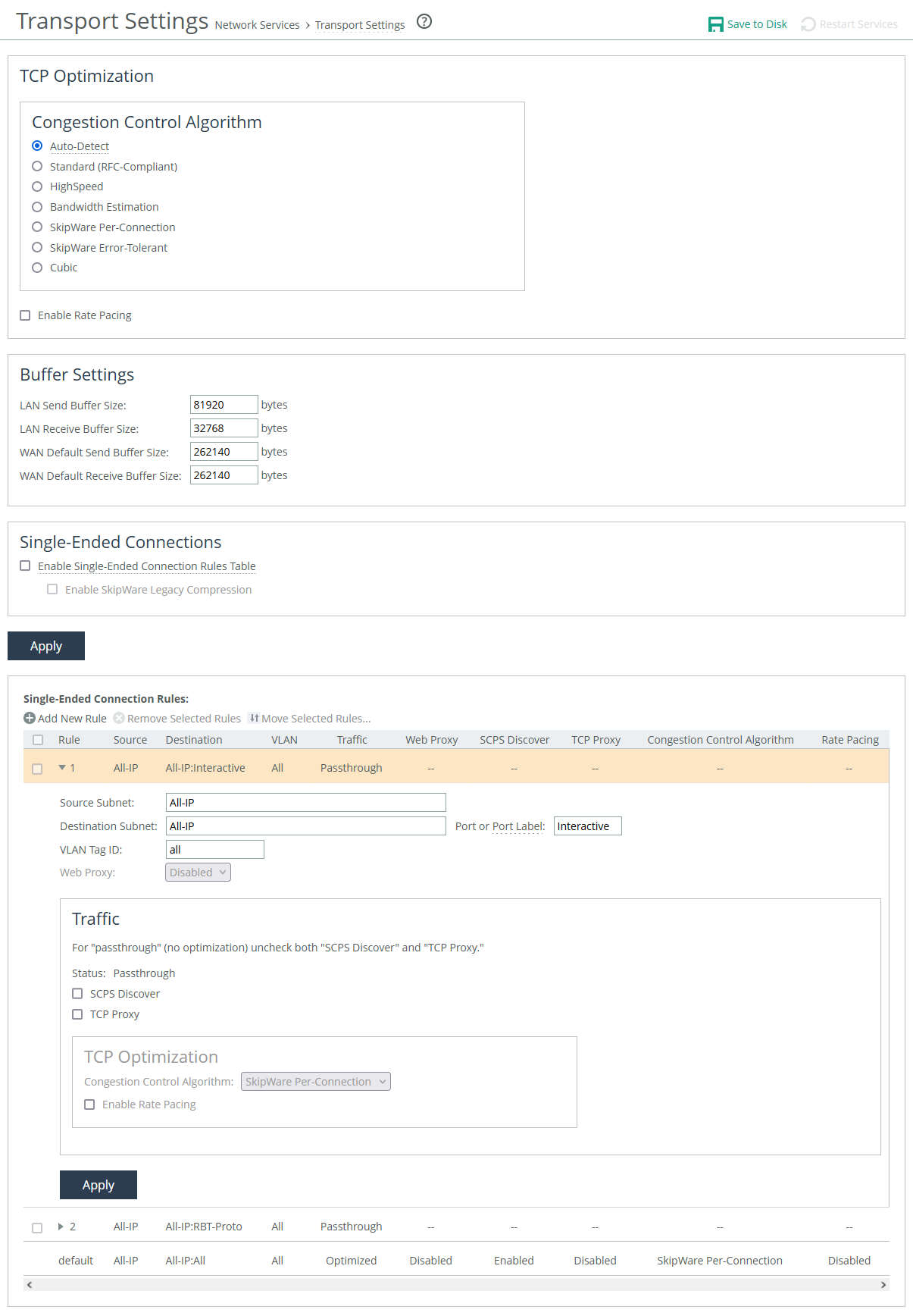

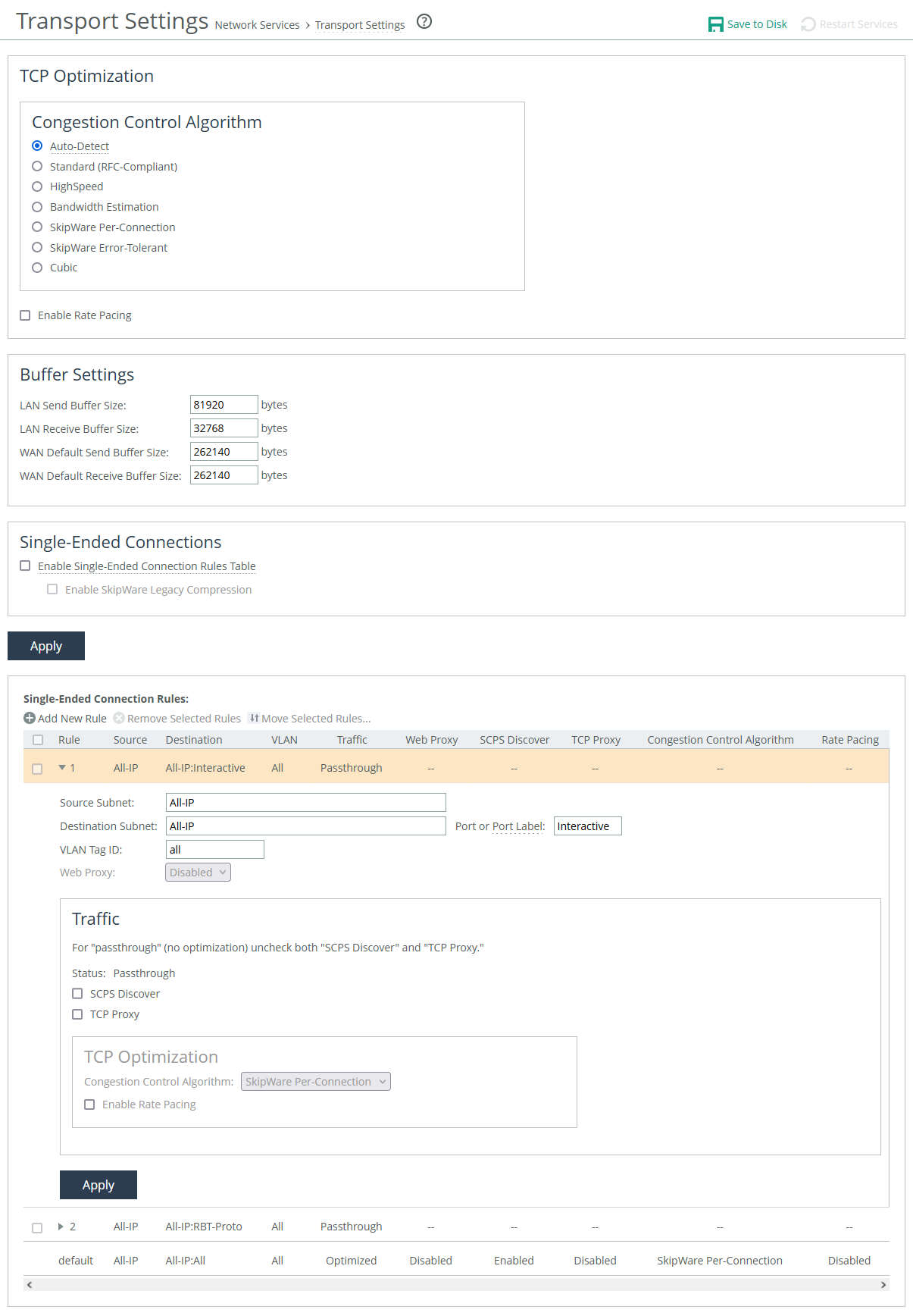

About transport settings

Transport settings are under Optimization > Network Services: Transport Settings.

Transport settings

After you apply your settings, you can verify whether the changes have had the desired effect by viewing the Current Connections report. The report summarizes the established, optimized connections for SCPS. SCPS connections appear either as typical established, optimized connections or as established, single-ended optimized connections. Click a connection to view its details. SCPS connection detail reports display SCPS Initiate or SCPS Terminate under Connection Information. Under Congestion Control, the report displays the congestion control method that the connection is using.

TCP optimization

Auto-Detect detects the optimal TCP configuration by using the same mode as the peer appliance for inner connections, SkipWare when negotiated, or standard TCP for all other cases. If you have a mixed environment where several different types of networks terminate into a hub or server-side SteelHead, optionally enable this setting on your hub appliance so it can reflect the various transport optimization mechanisms of your remote site appliances. Appliances advertise automatic detection of TCP to peers through their OOB connections.
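The selection order that Auto-Detect follows can be sketched as a small decision function. This is only an illustration of the order described above; the function name, its parameters, and the mode labels are hypothetical and don't correspond to an appliance API.

```python
from typing import Optional


def auto_detect_mode(is_inner_connection: bool,
                     peer_mode: Optional[str],
                     skipware_negotiated: bool) -> str:
    """Pick a transport mode in the order described for Auto-Detect.

    All names and mode labels here are hypothetical, for illustration only.
    """
    # Inner connections mirror the mode that the peer appliance uses.
    if is_inner_connection and peer_mode is not None:
        return peer_mode
    # Otherwise use SkipWare when it was negotiated for the connection.
    if skipware_negotiated:
        return "skipware"
    # Everything else falls back to standard TCP.
    return "standard"


print(auto_detect_mode(True, "bandwidth-estimation", False))
print(auto_detect_mode(False, None, True))
print(auto_detect_mode(False, None, False))
```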

Standard (RFC-Compliant) applies data and transport streamlining to non-SCPS TCP connections. This control forces peers to use standard TCP as well, and it clears any previously set advanced bandwidth congestion control.

HighSpeed enables high-speed TCP optimization for more complete use of long fat pipes (high-bandwidth, high-delay networks). Do not enable for satellite networks. We recommend that you enable high-speed TCP optimization only after you have carefully evaluated whether it will benefit your network environment. Optionally, you can enable this feature using the tcp highspeed enable command.

Bandwidth Estimation uses an intelligent bandwidth estimation algorithm along with a modified slow-start algorithm to optimize performance in long lossy networks, such as satellite, cellular, longer microwave, or Wi-Max networks. Bandwidth estimation is a sender-side modification of TCP and is compatible with other TCP stacks. Estimations are based on analysis of ACKs and latency measurements. Modified slow start enables a flow to ramp up faster in high-latency environments than traditional TCP. The bandwidth estimation algorithm lets the sender learn effective rates for use during modified slow start, and also lets it differentiate bit error rate (BER) loss from congestion-derived loss and manage each accordingly. Bandwidth estimation has good fairness and friendliness qualities toward other traffic along the path.
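To make the idea of estimating bandwidth from ACKs and latency concrete, here is a minimal sketch of a generic sender-side estimator. It is not the appliance's algorithm: the class name, the smoothing factor, and the RTT-inflation heuristic for telling bit-error loss from congestion loss are all assumptions chosen for illustration.

```python
from typing import Optional


class AckRateEstimator:
    """Generic ACK-based rate estimator (illustrative, not the product's)."""

    def __init__(self, alpha: float = 0.125):
        self.alpha = alpha                      # smoothing factor (assumed)
        self.rate_bps: Optional[float] = None   # smoothed delivery rate
        self._last_ack_time: Optional[float] = None

    def on_ack(self, bytes_acked: int, now: float) -> None:
        """Update the estimate from the bytes acknowledged since the last ACK."""
        if self._last_ack_time is not None and now > self._last_ack_time:
            sample = bytes_acked * 8 / (now - self._last_ack_time)
            if self.rate_bps is None:
                self.rate_bps = sample
            else:
                # Exponentially weighted moving average of the rate samples.
                self.rate_bps = ((1 - self.alpha) * self.rate_bps
                                 + self.alpha * sample)
        self._last_ack_time = now


def loss_looks_like_congestion(current_rtt: float, min_rtt: float,
                               inflation: float = 1.25) -> bool:
    """Assumed heuristic: a loss seen while the RTT is well above its minimum
    suggests queues are building (congestion); a loss at near-minimum RTT is
    more likely caused by bit errors."""
    return current_rtt > inflation * min_rtt
```

A loss that this heuristic attributes to bit errors would be retransmitted without shrinking the sending rate, whereas a congestion loss would trigger a reduction toward the estimated rate.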

SkipWare Per-Connection applies TCP congestion control to each SCPS-capable connection. The congestion control uses:

• a pipe algorithm that gates when a packet should be sent after receipt of an ACK.

• the NewReno algorithm, which includes the sender's congestion window, slow start, and congestion avoidance.

• time stamps, window scaling, appropriate byte counting, and loss detection.

This transport setting uses a modified slow-start algorithm and a modified congestion-avoidance approach. When enabled, SCPS per-connection ramps up flows faster in high-latency environments and handles lossy scenarios while remaining reasonably fair and friendly to other traffic. SCPS per-connection efficiently fills satellite links of all sizes and is a high-performance option for satellite networks.
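For readers unfamiliar with the building blocks named above, the following sketch shows textbook NewReno-style window growth with appropriate byte counting, following the general rules in RFC 5681 and RFC 3465. It does not include the SkipWare modifications to slow start or congestion avoidance, and the class name, segment size, and initial values are assumptions.

```python
SMSS = 1460  # sender maximum segment size in bytes (assumed value)


class NewRenoWindow:
    """Textbook congestion window with appropriate byte counting (ABC)."""

    def __init__(self, initial_cwnd: int = 10 * SMSS,
                 ssthresh: int = 64 * 1024):
        self.cwnd = initial_cwnd    # congestion window, in bytes
        self.ssthresh = ssthresh    # slow-start threshold, in bytes
        self._bytes_acked = 0       # ABC counter for congestion avoidance

    def on_ack(self, bytes_acked: int) -> None:
        if self.cwnd < self.ssthresh:
            # Slow start: grow by the bytes acknowledged, capped per ACK.
            self.cwnd += min(bytes_acked, 2 * SMSS)
        else:
            # Congestion avoidance: one segment per window's worth of ACKs.
            self._bytes_acked += bytes_acked
            if self._bytes_acked >= self.cwnd:
                self._bytes_acked -= self.cwnd
                self.cwnd += SMSS

    def on_loss(self, flight_size: int) -> None:
        # Halve the window on loss, never dropping below two segments.
        self.ssthresh = max(flight_size // 2, 2 * SMSS)
        self.cwnd = self.ssthresh
```

On a high-latency link, each of these steps takes at least one round trip, which is why a modified slow start that ramps up faster matters for satellite paths.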

We recommend enabling per-connection if the error rate in the link is less than approximately 1 percent.

SkipWare Error-Tolerant accelerates SCPS and provides error-rate detection and recovery. This setting allows per-connection congestion control to tolerate some loss due to corrupted packets (bit errors), without reducing throughput, using a modified slow-start algorithm and a modified congestion-avoidance approach. It requires significantly more retransmitted packets to trigger this congestion-avoidance algorithm than the SkipWare per-connection setting. Error-tolerant TCP acceleration assumes that the environment has a high BER and that most retransmissions are due to poor signal quality instead of congestion. This method maximizes performance in high-loss environments, without incurring the additional per-packet overhead of a forward error correction (FEC) algorithm at the transport layer.

SCPS error tolerance is a high-performance option for lossy satellite networks. Use caution when enabling this feature, particularly in channels with coexisting TCP traffic. It can be quite aggressive and adversely affect channel congestion with competing TCP flows. We recommend enabling this feature if the error rate in the link is more than approximately 1 percent.
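The guidance on choosing between the two SkipWare settings reduces to a single threshold on the link error rate. A minimal sketch, assuming the error rate is expressed as a fraction and treating "approximately 1 percent" as exactly 0.01; the function name is hypothetical:

```python
ERROR_RATE_THRESHOLD = 0.01  # "approximately 1 percent", treated as exact here


def recommend_skipware_mode(link_error_rate: float) -> str:
    """Return the SkipWare setting suggested for a given link error rate.

    link_error_rate is a fraction between 0.0 and 1.0 (assumed convention).
    """
    if not 0.0 <= link_error_rate <= 1.0:
        raise ValueError("link_error_rate must be between 0.0 and 1.0")
    if link_error_rate > ERROR_RATE_THRESHOLD:
        # High-loss links: tolerate bit errors without cutting throughput.
        return "SkipWare Error-Tolerant"
    return "SkipWare Per-Connection"


print(recommend_skipware_mode(0.002))
print(recommend_skipware_mode(0.03))
```

The threshold is only a starting point. On channels shared with other TCP traffic, the caution about error-tolerant mode being aggressive still applies even when the error rate is above the threshold.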

Cubic enables the Cubic congestion control algorithm, which offers better performance and faster recovery after congestion events than NewReno, the previous local default.
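The faster recovery comes from the shape of the Cubic window function. The sketch below evaluates the window growth curve as defined in RFC 8312, using that document's constants (C = 0.4, beta = 0.7); whether the appliance uses the same constants is an assumption. The window is measured in segments and time in seconds since the last congestion event.

```python
C = 0.4      # scaling constant from RFC 8312
BETA = 0.7   # multiplicative decrease factor from RFC 8312


def cubic_k(w_max: float) -> float:
    """Time (seconds) the curve takes to return to w_max after a loss."""
    return (w_max * (1 - BETA) / C) ** (1 / 3)


def cubic_window(t: float, w_max: float) -> float:
    """Congestion window (segments) t seconds after a congestion event."""
    return C * (t - cubic_k(w_max)) ** 3 + w_max


w_max = 100.0
for t in (0.0, 2.0, cubic_k(w_max), 6.0):
    print(f"t={t:.2f}s  cwnd={cubic_window(t, w_max):.1f} segments")
```

Right after the loss the window restarts at beta times its previous maximum, climbs quickly, flattens out near the old maximum, and then probes beyond it. Because growth depends on elapsed time rather than on the number of ACKs received, long round-trip times slow recovery less than they do with NewReno.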

Rate pacing

Enable Rate Pacing imposes a global data-transmit limit on the link rate for all SCPS connections between peers, or on the link rate for an appliance paired with a third-party device running TCP-PEP (Performance Enhancing Proxy). Rate pacing is disabled by default and doesn't support IPv6.

Rate pacing combines MX-TCP and your selected congestion-control method. The congestion-control method runs as an overlay on top of MX-TCP, probes for the actual link rate, and then communicates the available bandwidth to MX-TCP. Enable rate pacing to prevent congestion collapse, packet bursts, and congestion loss while exiting the slow-start phase. Slow start is the part of TCP congestion control in which the sender begins with a small window and increases it as it gains confidence about the network throughput.

With no congestion, the slow-start phase ramps up to the MX-TCP rate and settles there. When the appliance detects congestion (due to other sources of traffic, a bottleneck other than the satellite modem, or a variable modem rate), the congestion-control method activates to avoid congestion problems and exit the slow-start phase faster.

The client-side appliance communicates to the server-side appliance that rate pacing is in effect. Set TCP optimization on the server-side appliance to Auto-Detect so that it automatically negotiates the configuration with the client-side appliance. Also, configure an MX-TCP QoS rule to set the appropriate rate cap. If an MX-TCP QoS rule is not in place, the system doesn’t apply rate pacing and only the congestion-control method takes effect. You can’t delete the MX-TCP QoS rule while rate pacing is enabled.
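The interaction between the MX-TCP rate cap and the congestion-control overlay can be summarized in a few lines. This is a simplified model of the behavior described above, not the appliance's implementation; the function names, parameters, and the 1500-byte packet size are assumptions.

```python
from typing import Optional


def pacing_rate_bps(mx_tcp_cap_bps: Optional[float],
                    probed_rate_bps: float,
                    congested: bool) -> Optional[float]:
    """Return the paced send rate, or None when rate pacing isn't applied."""
    if mx_tcp_cap_bps is None:
        # No MX-TCP QoS rule: only the congestion-control method is active.
        return None
    if not congested:
        # Without congestion the flow settles at the MX-TCP rate.
        return mx_tcp_cap_bps
    # Under congestion the probed rate takes over, never exceeding the cap.
    return min(mx_tcp_cap_bps, probed_rate_bps)


def packet_interval_s(rate_bps: float, packet_bytes: int = 1500) -> float:
    """Gap between packet departures that spreads traffic evenly at rate_bps."""
    return packet_bytes * 8 / rate_bps


rate = pacing_rate_bps(2_000_000, 1_200_000, congested=False)
print(rate, packet_interval_s(rate))
```

Spacing packets at a fixed interval, rather than sending a full window at once, is what avoids the bursts that overflow modem queues at the end of slow start.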

Buffer

The buffer settings support high-speed TCP and are also used in data protection scenarios to improve performance.

LAN send buffer size specifies the buffer size used to send data out of the LAN. The default is 81920.

LAN receive buffer size specifies the buffer size used to receive data from the LAN. The default is 32768.

WAN default send buffer size specifies the buffer size used to send data out of the WAN. The default is 262140.

WAN default receive buffer size specifies the buffer size used to receive data from the WAN. The default is 262140.
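Buffer sizes matter for high-speed TCP because a single connection can't have more data in flight than its buffer holds, so its throughput is bounded by the buffer size divided by the round-trip time. The sketch below works through that arithmetic. It assumes the buffer values are in bytes, and the 600 ms round-trip time and 45 Mbps link are example figures, not recommendations.

```python
def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to fill the link."""
    return bandwidth_bps * rtt_s / 8


def max_throughput_bps(buffer_bytes: int, rtt_s: float) -> float:
    """Upper bound on one connection's throughput for a given buffer size."""
    return buffer_bytes * 8 / rtt_s


WAN_DEFAULT_BUFFER = 262140  # default WAN buffer size, assumed to be bytes
RTT = 0.6                    # example satellite round-trip time in seconds

# Ceiling for one connection with the default WAN buffers: about 3.5 Mbps.
print(f"{max_throughput_bps(WAN_DEFAULT_BUFFER, RTT) / 1e6:.2f} Mbps")

# Buffer needed to fill an example 45 Mbps link at the same RTT.
print(f"{bdp_bytes(45e6, RTT):,.0f} bytes")
```

When the bandwidth-delay product of a link is much larger than the configured buffers, the buffers rather than the congestion-control method become the limit on per-connection throughput.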