Understanding crash consistency and application consistency

In the context of snapshots, backups, and data protection in general, two states of data consistency are distinguished:

• Crash consistency - A backup or snapshot is crash consistent if all of the interrelated data components are as they were (write-order consistent) at the instant of the crash. This type of consistency is similar to the status of the data on your PC’s hard drive after a power outage or similar event. A crash-consistent backup is usually sufficient for nondatabase operating systems and applications like file servers, DHCP servers, print servers, and so on.

• Application consistency - A backup or snapshot is application consistent if, in addition to being write-order consistent, running applications have completed all their operations and flushed their buffers to disk (application quiescing). Application-consistent backups are recommended for database operating systems and applications such as SQL, Oracle, and Exchange.

The SteelFusion product family ensures continuous crash consistency at the branch and at the data center by using journaling and by preserving the order of WRITEs across all the exposed LUNs. For application-consistent backups, administrators can directly configure and assign hourly, daily, or weekly snapshot policies on the Core. Edge interacts directly with both VMware ESXi and Microsoft Windows servers, through VMware Tools and Volume Shadow Copy Service (VSS), to quiesce the applications and generate application-consistent snapshots of both Virtual Machine File System (VMFS) and New Technology File System (NTFS) data drives.
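The following minimal sketch illustrates why preserving write order across LUNs yields a crash-consistent state. It is a conceptual model only, not the SteelFusion implementation: the `Journal` class, the LUN names, and the block contents are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Journal:
    """Append-only log of writes across several LUNs (hypothetical model)."""
    entries: list = field(default_factory=list)

    def write(self, lun: str, block: int, data: bytes) -> None:
        # Record every write in arrival order, regardless of the target LUN.
        self.entries.append((lun, block, data))

    def replay(self, upto: int) -> dict:
        """Rebuild LUN contents from the first `upto` journal entries."""
        state: dict = {}
        for lun, block, data in self.entries[:upto]:
            state.setdefault(lun, {})[block] = data
        return state


journal = Journal()
journal.write("lun0", 7, b"data-page")      # 1. application writes a data page
journal.write("lun1", 0, b"commit-record")  # 2. then a commit record on another LUN

# A crash after the first write leaves the data page but no commit record.
crashed = journal.replay(upto=1)
```

Because the journal is replayed strictly in order, a recovered state can contain the data page without the commit record, but never the commit record without the data page it depends on. Whether the application's in-memory buffers had been flushed at that instant is a separate question, which is what application consistency addresses.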

Managing vSphere datastores presented by Core

Through the vSphere client, you can view inside the LUN to see the VMs previously loaded in the data center storage array. You can add a server that contains vSphere VMs as a datastore to the ESXi server in the branch. This server can be either a regular hardware platform hosting ESXi or the hypervisor node in the Edge appliance.

Single-appliance versus high-availability deployments

This section describes the types of SteelFusion appliance deployments: single-appliance deployment and high-availability deployment.

This section assumes that you understand the basics of how the SteelFusion product family works together, and you are ready to deploy your appliances.

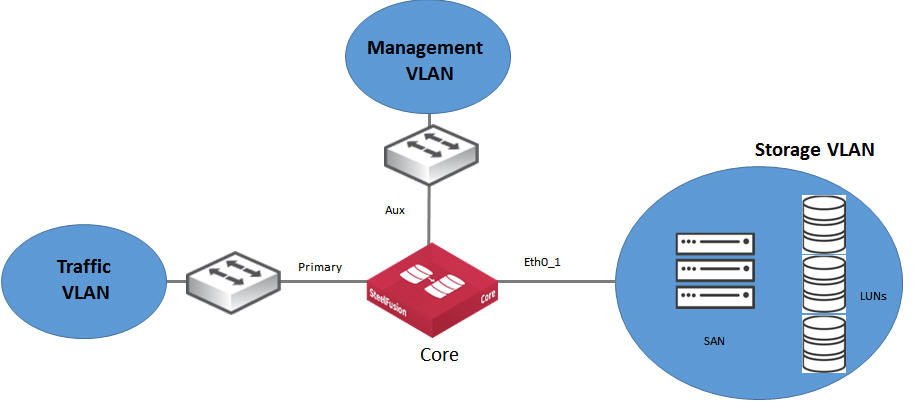

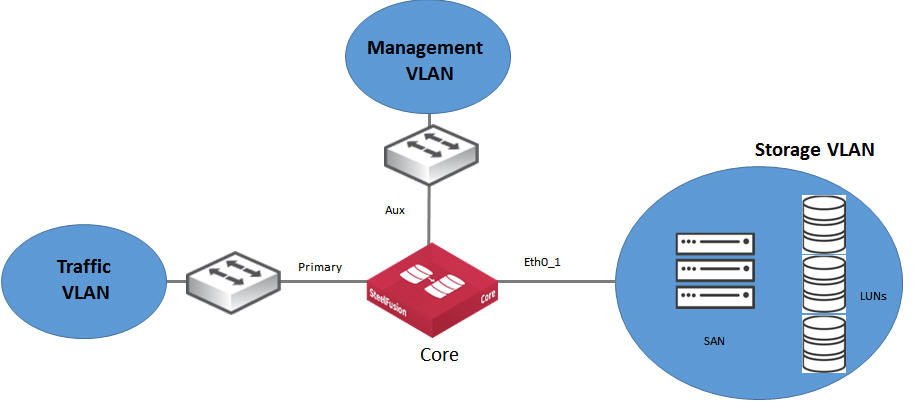

Single-appliance deployment

In a single-appliance deployment (basic deployment), SteelFusion Core connects to the storage array through a data interface. Depending on the model of the Core, the data interface is named ethX_Y, where X and Y are numbers: for example, eth0_0, eth0_1, and so on. The primary (PRI) interface is dedicated to the traffic VLAN, and the auxiliary (AUX) interface is dedicated to the management VLAN. More complex designs generally use the additional network interfaces. For more information about Core interface names and their possible uses, see Core interface and port configuration.
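As an illustration of the ethX_Y naming pattern, the sketch below parses a data interface name into its two numbers. The function name is hypothetical, and the code assumes nothing about what X and Y represent on a given Core model.

```python
import re
from typing import Tuple

# Matches names such as eth0_0 or eth1_3 (the ethX_Y pattern described above).
DATA_INTERFACE = re.compile(r"^eth(\d+)_(\d+)$")


def parse_data_interface(name: str) -> Tuple[int, int]:
    """Return (X, Y) for a name of the form ethX_Y, or raise ValueError."""
    match = DATA_INTERFACE.match(name)
    if match is None:
        raise ValueError(f"not a data interface name: {name!r}")
    return int(match.group(1)), int(match.group(2))
```

A script that inventories interfaces could use such a check to tell data interfaces apart from names that do not follow this pattern.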

Figure: Single-appliance deployment

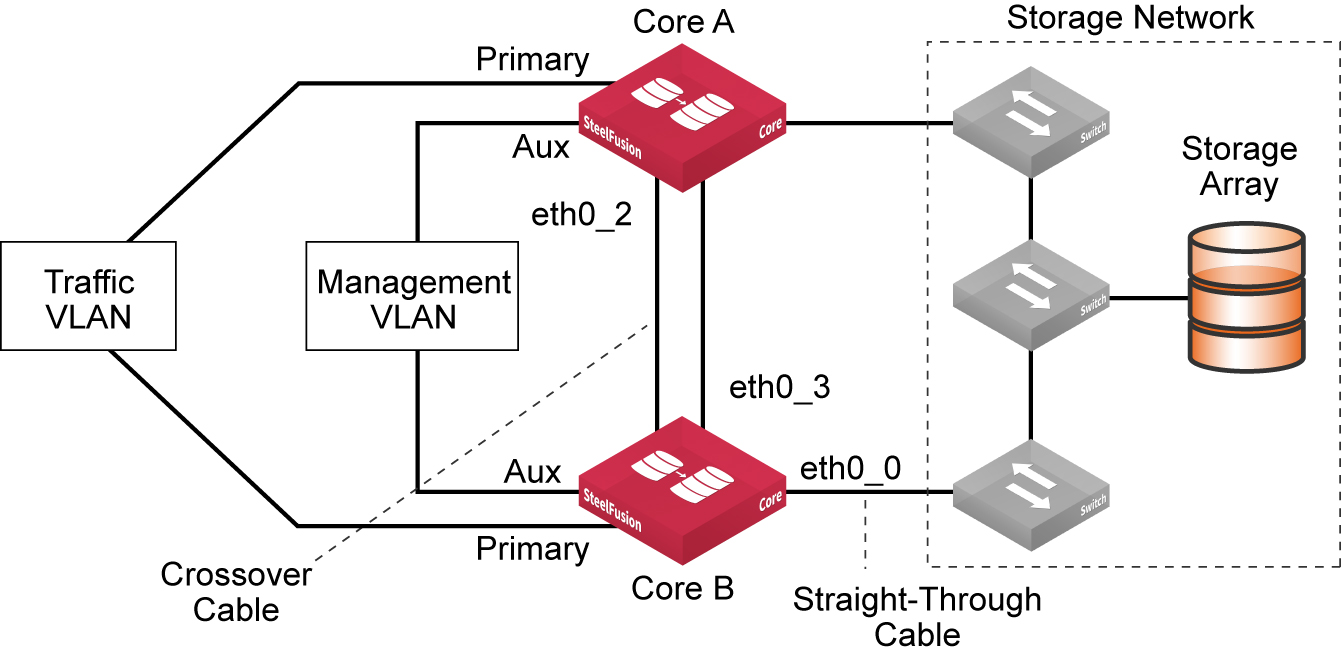

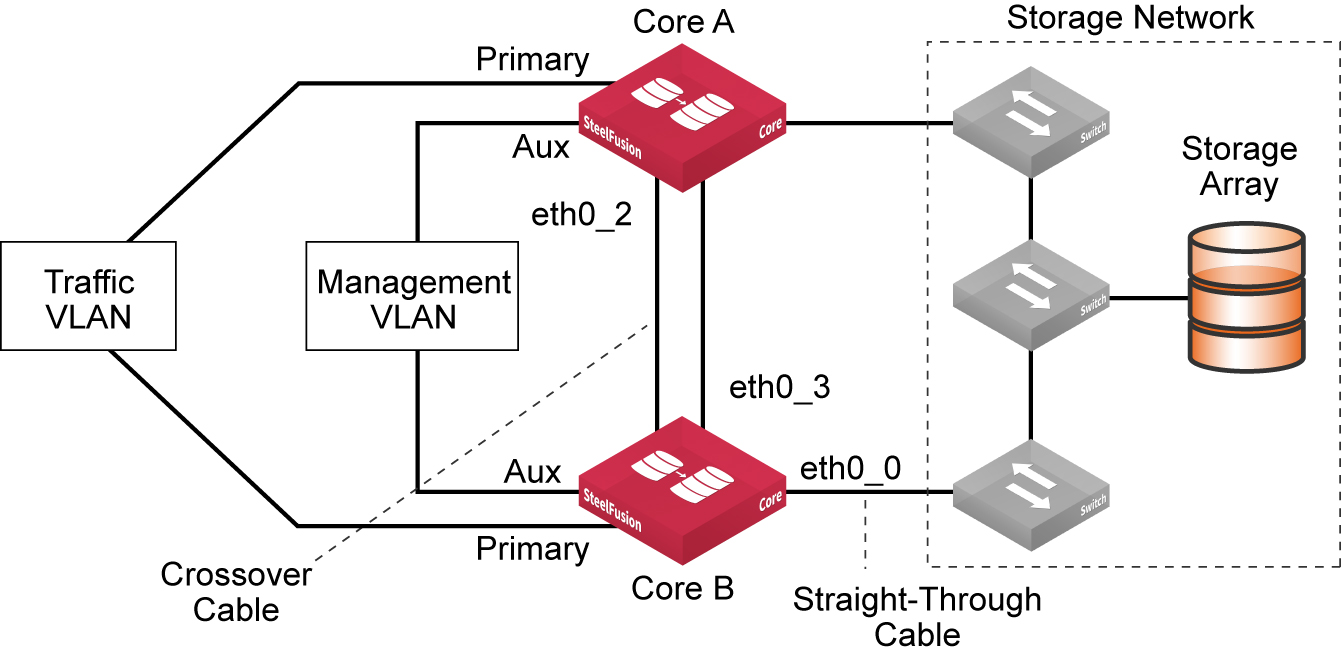

High-availability deployment

In a high-availability (HA) deployment, two Cores operate as failover peers. Both appliances operate independently with their respective and distinct Edges until one fails; then the remaining operational Core handles the traffic for both appliances.
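The failover behavior described above can be modeled with a short sketch. This is a simplified illustration under stated assumptions, not how the appliances detect or handle failures: the `Core` class, the `healthy` flag, and the appliance and Edge names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Core:
    name: str
    edges: list          # Edges this Core serves during normal operation
    healthy: bool = True


def serving_map(core_a: Core, core_b: Core) -> dict:
    """Return which Core currently handles traffic for each Edge."""
    result = {}
    for owner, peer in ((core_a, core_b), (core_b, core_a)):
        # Each Core serves its own Edges until it fails; then its peer takes over.
        server = owner if owner.healthy else peer
        for edge in owner.edges:
            # If both peers are down, no Core serves the Edge.
            result[edge] = server.name if server.healthy else None
    return result


core_a = Core("core-a", ["edge-1", "edge-2"])
core_b = Core("core-b", ["edge-3"])
normal = serving_map(core_a, core_b)

core_a.healthy = False
after_failure = serving_map(core_a, core_b)
```

In normal operation each Edge maps to its own Core. After `core-a` is marked as failed, all three Edges map to `core-b`.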

Figure: HA deployment

Connecting Core with Edge

This section describes the prerequisites for configuring the data center (Core) and branch office (Edge) components of the SteelFusion product family, and it provides an overview of the procedures required.

Prerequisites

Before you configure Core with Edge, ensure that the following tasks have been completed:

• Assign an IP address or hostname to the Core.

• Determine the iSCSI Qualified Name (IQN) to be used for Core.

When you configure Core, you set this value in the initiator configuration.

• Set up your storage array:

– Register the Core IQN.

– Configure iSCSI portal, targets, and LUNs, with the LUNs assigned to the Core IQN.

• Assign an IP address or hostname to the Edge.
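Before registering the Core IQN on the storage array, it can help to check that the chosen name follows the IQN format defined in RFC 3720 (`iqn.`, a year-month date, a reversed domain name, and an optional colon-separated suffix). The sketch below is a simplified check, not a complete RFC validator; the function name and the example names are hypothetical.

```python
import re

# Simplified IQN pattern: iqn.YYYY-MM.reversed.domain[:optional-suffix]
IQN_PATTERN = re.compile(r"iqn\.\d{4}-(0[1-9]|1[0-2])\.[a-z0-9][a-z0-9.-]*(:\S+)?")

# RFC 3720 limits iSCSI names to 223 bytes.
MAX_IQN_BYTES = 223


def looks_like_iqn(name: str) -> bool:
    """Return True if `name` has the basic shape of an iSCSI Qualified Name."""
    if len(name.encode("utf-8")) > MAX_IQN_BYTES:
        return False
    return IQN_PATTERN.fullmatch(name) is not None
```

For example, `looks_like_iqn("iqn.2003-10.com.example:core-01")` passes this check, while a name with an invalid month such as `iqn.2003-13.com.example` does not. The check is deliberately strict about lowercase, because iSCSI names are normally written in lowercase.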

Connecting the SteelFusion product family components

This table summarizes the process for connecting and configuring Core and Edge as a system. You can perform some of the steps in the table in a different order, or even in parallel with each other in some cases. The sequence shown is intended to illustrate a method that enables you to complete one task so that the resources and settings are ready for the next task in the sequence.

Component | Procedure | Description |

Core | Determine the network settings for Core. | Prior to deployment: • Assign an IP address or hostname to Core. • Determine the IQN to be used for Core. When you configure Core, you set this value in the initiator configuration. |

iSCSI-compliant storage array | Register the Core IQN. | Core uses the IQN format for iSCSI initiator names. For details about IQN, see http://tools.ietf.org/html/rfc3720. |

iSCSI-compliant storage array | Prepare the iSCSI portals, targets, and LUNs, with the LUNs assigned to the Core IQN. | Prior to deploying Core, you must prepare these components. |

Fibre Channel-compliant storage array | Enable Fibre Channel connections. | For details, see SteelFusion and Fibre Channel. |

Core | Install Core. | For details, see the SteelFusion Core Installation and Configuration Guide. |

Edge | Install the Edge. | For details, see the SteelFusion Edge Installation and Configuration Guide. |

Edge | Configure disk management. | You can configure the disk layout mode to allow space for the SteelFusion blockstore in the Disk Management page. Free disk space is divided between the Virtual Services Platform (VSP) and the SteelFusion blockstore. |

Edge | Configure SteelFusion storage settings. | The SteelFusion storage settings are used by the Core to recognize and connect to the Edge. |

Core | Run the Setup Wizard to perform initial configuration. | The Setup Wizard performs the initial, minimal configuration of the Core, including: • Network settings • iSCSI initiator configuration • Mapping LUNs to the Edges For details, see the SteelFusion Edge Installation and Configuration Guide. |

Core | Configure iSCSI initiators and LUNs. | Configure the iSCSI initiator and specify an iSCSI portal. This portal discovers all the targets within that portal. Add and configure the discovered targets to the iSCSI initiator configuration. |

Core | Configure targets. | After a target is added, all the LUNs on that target can be discovered, and you can add them to the running configuration. |

Core | Map LUNs to the Edges. | Using the previously defined Edge self-identifier, connect LUNs to the appropriate Edges. For details about these procedures, see the SteelFusion Core User Guide. |

Core | Configure CHAP users and storage array snapshots. | Optionally, you can configure CHAP users and storage array snapshots. For details, see the SteelFusion Core User Guide. |

Edge | Confirm the connection with Core. | After completing the Core configuration, confirm that the Edge is connected to and communicating with the Core. |
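The Core-side steps in the table (specify a portal, discover its targets, discover the LUNs on each target, then map LUNs to an Edge by its identifier) follow a simple dependency order. The sketch below models that order with in-memory data. It does not call any SteelFusion API or CLI; the portal address, target names, LUN names, and Edge identifier are hypothetical examples.

```python
# Hypothetical inventory of one storage array: portal -> target -> LUN names.
ARRAY = {
    "10.0.0.50:3260": {
        "iqn.2003-10.com.example:array.t1": ["lun-a", "lun-b"],
        "iqn.2003-10.com.example:array.t2": ["lun-c"],
    }
}


def discover_targets(portal: str) -> list:
    """Step 1: a configured portal exposes all the targets behind it."""
    return sorted(ARRAY[portal])


def discover_luns(portal: str, target: str) -> list:
    """Step 2: once a target is added, its LUNs can be discovered."""
    return list(ARRAY[portal][target])


def map_luns(mapping: dict, edge_id: str, luns: list) -> None:
    """Step 3: connect each LUN to an Edge, keyed by the Edge identifier."""
    for lun in luns:
        mapping[lun] = edge_id


portal = "10.0.0.50:3260"
targets = discover_targets(portal)
mapping: dict = {}
map_luns(mapping, "branch-edge-01", discover_luns(portal, targets[0]))
```

Each step consumes the output of the previous one, which is why the table lists portal configuration before targets and targets before LUN mapping.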

Adding Edges to the Core configuration

You can add and modify connectivity with Edges in the Configure > Manage: SteelFusion Edges page in the Core Management Console.

This procedure requires you to provide the Edge Identifier for the Edge. Choose this value in the SteelFusion Edge Management Console Storage > Storage Edge page.

For more information, see the SteelFusion Core User Guide, the SteelFusion Command-Line Interface Reference Manual, and the Riverbed Command-Line Interface Reference Manual.

Configuring Edge

For information about Edge configuration for deployment, see Configuring the Edge.

Mapping LUNs to Edges

This section describes how to configure LUNs and map them to Edges.

Configuring iSCSI settings

You can view and configure the iSCSI initiator, portals, and targets in the iSCSI Configuration page.

The iSCSI Initiator settings configure how the Core communicates with one or more storage arrays through the specified portal configuration.

After configuring the iSCSI portal, you can open the portal configuration to configure targets.

For more information and procedures, see the SteelFusion Core User Guide, the SteelFusion Command-Line Interface Reference Manual, and the Riverbed Command-Line Interface Reference Manual.

Configuring LUNs

You configure block disk (Fibre Channel), Edge local, and iSCSI LUNs in the LUNs page.

Typically, block disk and iSCSI LUNs are used to store production data. They share the space in the blockstore cache of the associated Edges, and the data is continuously replicated and kept synchronized with the associated LUN in the data center. The Edge blockstore caches only the working set of data blocks for these LUNs; additional data is retrieved from the data center when needed.
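The idea of caching only the working set and retrieving other blocks on demand can be sketched as a small read cache. This is an illustration only: the least-recently-used eviction policy, the class name, and the `fetch_from_datacenter` callback are assumptions made for the example, and the sketch does not describe how the blockstore actually chooses which blocks to keep.

```python
from collections import OrderedDict


class WorkingSetCache:
    """Toy read cache that keeps at most `capacity` blocks (assumed LRU policy)."""

    def __init__(self, capacity: int, fetch_from_datacenter):
        self.capacity = capacity
        self.fetch = fetch_from_datacenter  # hypothetical callback: block_id -> bytes
        self.blocks = OrderedDict()
        self.misses = 0

    def read(self, block_id: int) -> bytes:
        if block_id in self.blocks:
            # Cache hit: mark the block as most recently used.
            self.blocks.move_to_end(block_id)
            return self.blocks[block_id]
        # Cache miss: retrieve the block from the data center LUN.
        self.misses += 1
        data = self.fetch(block_id)
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            # Evict the least recently used block to stay within capacity.
            self.blocks.popitem(last=False)
        return data


cache = WorkingSetCache(capacity=2, fetch_from_datacenter=lambda b: f"block-{b}".encode())
for block_id in (1, 2, 1, 3):
    cache.read(block_id)
```

In this run, blocks 1 and 2 are fetched once, the second read of block 1 is served locally, and reading block 3 evicts block 2, which was used least recently.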

Block-disk LUN configuration pertains to Fibre Channel support. Fibre Channel is supported only in Core-v deployments. For more information, see Configuring Fibre Channel LUNs.

Edge local LUNs are used to store transient and temporary data or local copies of software distribution repositories. Local LUNs also use dedicated space in the blockstore cache of the associated Edges, but the data is not replicated back to the data center LUNs.

Configuring Edges for specific LUNs

After you configure the LUNs and Edges for the Core, you can map the LUNs to the Edges.

You complete this mapping through the Edge configuration in the Core Management Console Configure > Manage: SteelFusion Edges page.

When you select a specific Edge, the following controls for additional configuration are displayed.

Control | Description |

Status | This panel displays the following information about the selected Edge: • IP Address - The IP address of the selected Edge. • Connection Status - Connection status to the selected Edge. • Connection Duration - Duration of the current connection. • Total LUN Capacity - Total storage capacity of the LUN dedicated to the selected Edge. • Blockstore Encryption - Type of encryption selected, if any. The panel also displays a small-scale version of the Edge Data I/O report. |

Target Settings | This panel displays the following controls for configuring the target settings: • Target Name - Displays the system name of the selected Edge. • Require Secured Initiator Authentication - Requires CHAP authentication when the selected Edge is connecting to initiators. If the Require Secured Initiator Authentication setting is selected, you must set authentication to CHAP in the adjacent Initiator tab. • Enable Header Digest - Includes the header digest data from the iSCSI protocol data unit (PDU). • Enable Data Digest - Includes the data digest data from the iSCSI PDU. • Update Target - Applies any changes you make to the settings in this panel. |

Initiators | This panel displays controls for adding and managing initiator configurations: • Initiator Name - Specify the name of the initiator you are configuring. • Add to Initiator Group - Select an initiator group from the drop-down list. • Authentication - Select the authentication method from the drop-down list: – None - No authentication required. – CHAP - Only the target authenticates the initiator. The secret is set just for the target; all initiators that want to access that target must use the same secret to begin a session with the target. – Mutual CHAP - The target and the initiator authenticate each other. A separate secret is set for each target and for each initiator in the storage array. If the Require Secured Initiator Authentication setting is selected for the Edge in the Target Settings tab, authentication must be configured for a CHAP option. • Add Initiator - Adds the new initiator to the running configuration. |

Initiator Groups | This panel displays controls for adding and managing initiator group configurations: • Group Name - Specifies a name for the group. • Add Group - Adds the new group. The group name displays in the Initiator Group list. After this initial configuration, click the new group name in the list to display additional controls: • Click Add or Remove to control the initiators included in the group. |

Servers | (Version 4.6 and later) This panel displays information about ESXi and/or Windows servers connected to the Edge, including alias hostname/IP address, type (VMware or Windows), connection status, backup policy name, and last status (Triggered, Edge Processing, Core Processing, Proxy Mounting, Proxy Mounted, Not Protected, Protect Failed). Note: If you have configured server-level backups, a manual backup for any server that is added to a backup policy must be triggered from this tab (as opposed to the LUNs tab for LUN-level snapshots). |

LUNs | This panel displays controls for mapping available LUNs to the selected Edge. After mapping, the LUN displays in the list in this panel. To manage group and initiator access, click the name of the LUN to access additional controls. |

Prepopulation | This panel displays controls for configuring prepopulation tasks: • Schedule Name - Specify a task name. • Start Time - Select the start day and time from the respective drop-down list. • Stop Time - Select the stop day and time from the respective drop-down list. • Add Prepopulation Schedule - Adds the task to the Task list. This prepopulation schedule is applied to all virtual LUNs mapped to this appliance if you do not configure any LUN-specific schedules. To delete an existing task, click the trash icon in the Task list. The LUN must be pinned to enable prepopulation. For more information, see LUN pinning and prepopulation in the Core. |
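For readers unfamiliar with the CHAP and Mutual CHAP options listed for the Initiators panel, the sketch below shows the challenge-response calculation defined in RFC 1994, in which the response is the MD5 hash of the identifier, the shared secret, and the challenge. It illustrates the protocol concept only and is not taken from the SteelFusion software; the function names and the secret are hypothetical.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """Compute the CHAP response: MD5(identifier || secret || challenge)."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def verify(identifier: int, secret: bytes, challenge: bytes, response: bytes) -> bool:
    """Authenticator side: recompute the response and compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


# One-way CHAP: the target challenges the initiator.
target_secret = b"example-target-secret"  # hypothetical shared secret
challenge = os.urandom(16)
response = chap_response(1, target_secret, challenge)
accepted = verify(1, target_secret, challenge, response)
```

The secret itself never crosses the network; only the challenge and the hash do. With Mutual CHAP, the same exchange is repeated in the opposite direction with a separate secret, so that the initiator also authenticates the target.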

Riverbed Turbo Boot

Riverbed Turbo Boot is a prefetch technique. Turbo Boot uses the Windows Performance Toolkit to generate information that enables faster boot times for Windows VMs in the branch office on either external ESXi hosts or VSP. Turbo Boot can improve boot times by a factor of two to ten, depending on the customer scenario. Turbo Boot is a plugin that records the disk I/O during boot of the host operating system on which it is installed. The disk I/O activity is logged to a file. During any subsequent boots of the host system, the Core uses the Turbo Boot log file to prefetch data more accurately.

At the end of each boot made by the host, the log file is updated with changes and new information. This update ensures an enhanced prefetch on each successive boot.
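The record-then-prefetch cycle described above can be illustrated with a short conceptual sketch. It does not reflect the plugin's log format or its use of the Windows Performance Toolkit; the function names and block numbers are hypothetical.

```python
def record_boot(io_trace: list) -> list:
    """Build a boot log: the blocks read during boot, in first-access order."""
    return list(dict.fromkeys(io_trace))


def prefetch_hits(boot_log: list, io_trace: list) -> int:
    """Count the reads in a later boot that the logged prefetch already covers."""
    prefetched = set(boot_log)
    return sum(1 for block in io_trace if block in prefetched)


first_boot = [10, 11, 10, 42]
boot_log = record_boot(first_boot)         # logged after the first boot

second_boot = [10, 11, 42, 99]
hits = prefetch_hits(boot_log, second_boot)

# At the end of the second boot, the log is refreshed with what was observed.
boot_log = record_boot(second_boot)
```

Three of the four reads in the second boot are covered by the log from the first boot. After the log is refreshed, block 99 is also included, so a third boot with the same access pattern would be fully covered.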

Note: Turbo Boot applies only to Windows VMs using NTFS.

Note: For Virtual Edge on Hyper-V deployments, prefetch optimization is supported only for Windows Server 2016 and Windows Server 2012 R2 guest VMs with NTFS volumes.

If you are booting a Windows server or client VM from an unpinned LUN, we recommend that you install the Riverbed Turbo Boot software on the Windows VM.

These operating systems support Riverbed Turbo Boot software:

• Windows Vista

• Windows 7

• Windows Server 2008

• Windows Server 2008 R2

• Windows Server 2012

• Windows Server 2012 R2

• Windows Server 2016 (as of Core version 5.0)

For installation information, see the SteelFusion Core Installation and Configuration Guide.

Note: You can download the SteelFusion Turbo Boot plugin from the Riverbed Support site as part of the Unified Installer for Riverbed Plugins Version 5.1. As of version 5.1, Turbo Boot can coexist with the Branch Recovery Agent. When both services are installed on the Windows Server, they are automatically started at different intervals. This behavior is by design, but it may result in the following message on the Windows Server until both services have started: “The NT Kernel Logger session is already in use. Failed to start ETW Controller: sleeping for 60 seconds”. You can ignore the message. For more information about the Branch Recovery Agent, see the SteelFusion Core User Guide.

Related information

• SteelFusion Core Installation and Configuration Guide

• SteelFusion Edge Installation and Configuration Guide

• SteelFusion Core User Guide

• SteelFusion Edge User Guide