SteelFusion and NFS

SteelFusion version 5.0 includes support for Network File System (NFS) storage. This capability is designed to be deployed separately from a SteelFusion block storage (iSCSI or Fibre Channel) installation: the two deployment modes (block storage and NFS) cannot be combined within the same SteelFusion appliance (SteelFusion Core or SteelFusion Edge).

However, many of the features included with a SteelFusion block storage implementation are also available with a SteelFusion NFS/file deployment. For more detailed guidance about these features, see the other chapters in this guide. This chapter explains the differences between an NFS/file deployment and a block storage deployment.

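This chapter includes these sections:

• Introduction to SteelFusion with NFS

• Existing SteelFusion features available with NFS/file deployments

• Unsupported SteelFusion features with NFS/file deployments

• Basic design scenarios

• Basic configuration deployment principles

• Overview of high availability with NFS

• Snapshots and backup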

Introduction to SteelFusion with NFS

The Network File System (NFS) protocol is an open standard originally developed in 1984. NFSv2 was released in 1989 and NFSv3 in 1995. NFSv4 was released in 2000 and has since had a number of revisions, the most recent in 2015.

Details of the individual versions and their capabilities and enhancements are beyond the scope of this guide, but they are documented externally if required. NFSv3 remains the most frequently deployed version, with TCP preferred over UDP as the transport protocol.

Prior to version 5.0, SteelFusion specialized in providing block-level access to LUNs served via iSCSI from SteelFusion Edge. In simple terms, this is a Storage Area Network (SAN) for the branch office that is actually an extension of the SAN located in a data center at the far end of a WAN link. One of the many scenarios for this capability is support for VMFS LUNs mapped to VMware vSphere (ESXi host) servers that use them as their datastores, including the hypervisor resident inside the Edge itself.

vSphere can also mount fileshares served from Network Attached Storage (NAS). These fileshares are exported from a file server across the network to the ESXi host using the NFS protocol. Once mounted by the ESXi host, a fileshare can also be used as a datastore.

SteelFusion version 5.0 supports this alternative method to access storage used for datastores by ESXi hosts.

The SteelFusion implementation uses NFSv3 over TCP. In the branch office, SteelFusion Edge supports the export of NFS fileshares to external ESXi servers where they can be used as datastores. SteelFusion Edge also supports the export of fileshares to the ESXi server that is hosted inside the hypervisor node of the SteelFusion Edge.

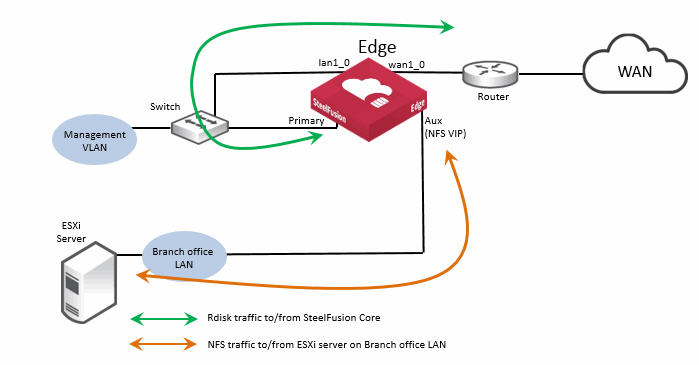

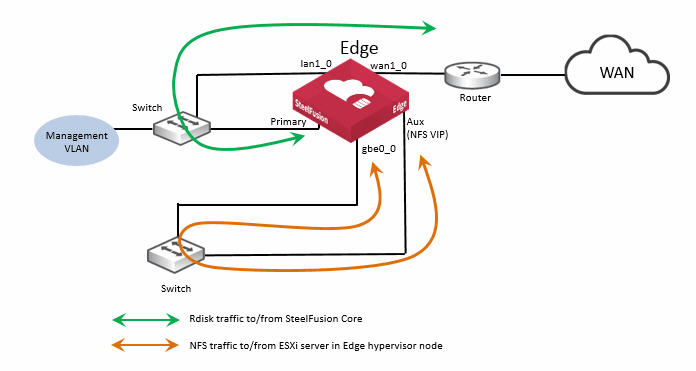

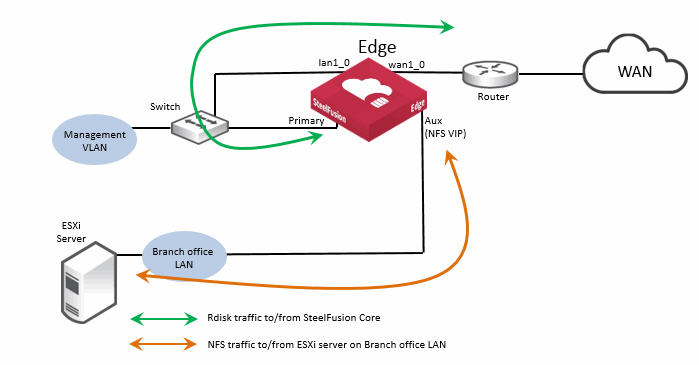

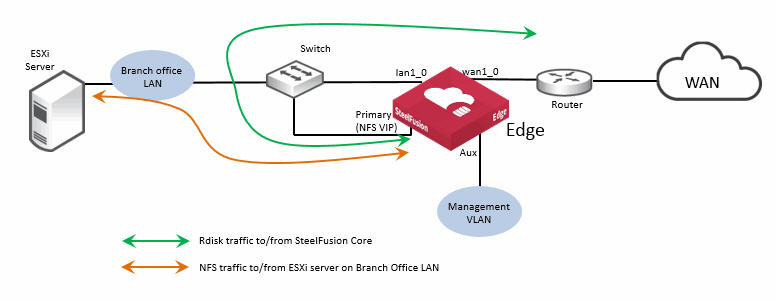

This figure shows a basic deployment diagram, including an external ESXi server in the branch, indicating the protocols in use.

Figure: Use of NFS protocol

The general internal architecture and operation of SteelFusion are preserved in NFS/file mode, despite the different way of working with storage. Many of the features and benefits of a block storage deployment are also available when using NFS.

To accommodate the NFS capability, some network interfaces on the Core and Edge may be used differently than in a block storage deployment, so make sure that interface assignments and configuration settings are correct. For details, see SteelFusion Core interface and port configuration and SteelFusion Edge interface and port configuration.

Although most SteelFusion features available in a block storage deployment are also available in an NFS/file deployment, a few are not currently supported. For details, see Existing SteelFusion features available with NFS/file deployments and Unsupported SteelFusion features with NFS/file deployments.

Existing SteelFusion features available with NFS/file deployments

With SteelFusion version 5.0 in an NFS/file deployment, the following features from previous releases continue to be supported:

• Edge high availability

• Core high availability

• Prefetch

• Prepopulation

• Boot over WAN

• Pinning storage in the branch

• Local storage in the branch

• Snapshot

• Server-level backups

In addition to the above features, SteelFusion version 5.0 with NFS has been qualified with the following storage systems for both the data path and snapshot integration:

• NetApp C mode

• Isilon

The following backup software is qualified:

• Commvault

• NetBackup

• Backup Exec

• Veeam

• Avamar

Note: Although not qualified, other vendors' storage systems and backup software products may work. For the most up-to-date information, see the Riverbed Support website or contact your local Riverbed representative.

The following models of SteelFusion Core support NFS/file deployments:

• VGC-1500

• SFCR-3500

Unsupported SteelFusion features with NFS/file deployments

With SteelFusion version 5.0 in an NFS/file deployment, the following features from previous versions are not currently supported or available:

• FusionSync between SteelFusion Cores

• Coreless SteelFusion Edge

• Virtual SteelFusion Edge

SteelHead EX version 5.0 does not support NFS/file deployments.

The following models of SteelFusion Core do not support NFS/file deployments:

• VGC-1000

• SFCR-2000

• SFCR-3000

Note: An individual Edge or Core can only be deployed in one of the two modes: block storage or NFS/file. The same Edge or Core cannot support both storage modes simultaneously.

Note: The SteelFusion NFS/file implementation is not designed as a generic file server with NFS access and cannot be used as a global fileshare.

Basic design scenarios

When deploying SteelFusion with NFS, all of the basic design principles that would be used for a SteelFusion deployment in a block storage (iSCSI or Fibre Channel) scenario can generally be applied. Some of the key principles are listed here:

• A single SteelFusion Core can service one or more SteelFusion Edge appliances, but a SteelFusion Edge appliance is only ever assigned to a single SteelFusion Core (and to that Core's peer in a SteelFusion Core high-availability (HA) design).

• As in a block storage deployment, where each LUN is dedicated to a server in a branch and can only be projected to a specific Edge appliance, each individual NFS export is specific to a branch ESXi server (external or internal to the SteelFusion Edge) and is projected across the WAN link to only one specific Edge.

• In a block storage deployment, the LUNs in the backend storage array that are assigned for SteelFusion use cannot be simultaneously accessed by other appliances for reading and writing. Backups are performed in the data center by first taking a snapshot of the LUN and then using a proxy host to back up the snapshot. The same rules and guidance apply to NFS exports: on the NFS file server, protect them from read/write access by other devices. If necessary, other NFS clients can mount an export with a read-only option. Perform backups on a snapshot of the exported fileshare.

• There are some differences to be aware of when planning and implementing a design that incorporates NFS. These differences relate primarily to the allocation and use of Ethernet network interfaces on the Edge. In addition, an Edge virtual IP (VIP) address must be configured on the Edge to provide the option of HA at the Edge. These differences compared to a block storage deployment are discussed in more detail in Basic configuration deployment principles.
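The sharing rules above (one Edge per export, one Core or Core HA pair per Edge, no other read/write clients on a projected export) can be expressed as a simple pre-deployment check. The following is a minimal sketch under stated assumptions, not a SteelFusion tool: the function name `check_design`, its inputs, and the assumption that only Core addresses should hold read/write access on the backend file server are all hypothetical choices made for illustration.

```python
from collections import defaultdict


def check_design(mappings, edge_cores, ha_pairs, rw_clients, core_addresses):
    """Return human-readable violations of the NFS/file design rules.

    Hypothetical inputs, invented for this sketch:
    mappings:       iterable of (export_path, edge_id) pairs
    edge_cores:     dict edge_id -> frozenset of Core names the Edge connects to
    ha_pairs:       set of frozensets, each holding two Cores in an HA pair
    rw_clients:     dict export_path -> set of hosts with read/write access
                    on the backend NFS file server
    core_addresses: set of Core addresses assumed to be the only permitted writers
    """
    problems = []

    # Rule 1: each export is projected to exactly one Edge.
    edges_per_export = defaultdict(set)
    for export, edge in mappings:
        edges_per_export[export].add(edge)
    for export, edges in sorted(edges_per_export.items()):
        if len(edges) > 1:
            problems.append(f"{export}: projected to several Edges {sorted(edges)}")

    # Rule 2: an Edge uses a single Core, or a single Core HA pair.
    for edge, cores in sorted(edge_cores.items()):
        if len(cores) != 1 and cores not in ha_pairs:
            problems.append(f"{edge}: assigned to {sorted(cores)}")

    # Rule 3: only the Core may write to a projected export;
    # any other NFS client should mount it read-only.
    for export in sorted(edges_per_export):
        others = rw_clients.get(export, set()) - core_addresses
        if others:
            problems.append(f"{export}: read/write access for {sorted(others)}")

    return problems
```

An empty result means no rule in this sketch is violated; it does not replace the validation performed by the Core Management Console.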

Basic configuration deployment principles

Configuration and deployment of SteelFusion with NFS can be separated into two categories: the SteelFusion Core and the SteelFusion Edge.

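This section includes the following topics:

• SteelFusion Core with NFS/file deployment process overview

• SteelFusion Core interface and port configuration

• SteelFusion Edge with NFS - appliance architecture

• Virtual Services Platform hypervisor installation with NFS/file mode

• SteelFusion Edge interface and port configuration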

SteelFusion Core with NFS/file deployment process overview

Deploying the SteelFusion Core with NFS closely parallels the procedures and settings required for a Core in a block storage scenario. We recommend that you review the details of this section and also the contents of Deploying the Core. At a high level, deploying the SteelFusion Core requires completing the following tasks in order:

1. Install and connect the Core in the data center network.

If you are deploying a high-availability solution, install and connect both Cores. For more information on installation, see the SteelFusion Core Installation and Configuration Guide.

2. In the SteelFusion Core Management Console, choose Configure > Manage: SteelFusion Edges. Define the SteelFusion Edge Identifiers so that you can later establish connections between the SteelFusion Core and the corresponding SteelFusion Edges.

3. In the SteelFusion Edge Management Console, choose Storage > Storage Edge Configuration. On this page, specify:

• the hostname or IP address of the SteelFusion Core

• the SteelFusion Edge Identifier (as defined in step 2)

4. Using the Mount and Map Exports wizard in the SteelFusion Core Management Console, add a storage array and discover the NFS exports from the backend file server. If Edge appliances have already been connected to the Core, then the exports can be mapped to their respective Edges. At the same time, you can configure the export access permissions at the Edge, as well as pinning and prepopulation settings. For more details, see the SteelFusion Core Management Console User’s Guide (NFS).
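Steps 2 and 3 must agree before step 4 can map exports: an Edge can connect only if the identifier it is configured with was defined on the Core and it points at that Core. The sketch below is a hypothetical pre-flight check of that agreement, not part of either Management Console. The function name and inputs are invented for illustration, and the Core address is compared as a literal string, so a hostname and its IP address would be reported as a mismatch.

```python
def check_edge_pairing(core_host, core_edge_ids, edge_settings):
    """Compare the Edge Identifiers defined on the Core (step 2) with the
    settings entered on each Edge (step 3). Hypothetical helper.

    core_host:     hostname or IP address of the Core
    core_edge_ids: iterable of Edge Identifiers defined on the Core
    edge_settings: dict Edge Identifier -> Core hostname/IP entered on that Edge
    Returns (ready, problems, unused).
    """
    defined = set(core_edge_ids)
    ready, problems = [], []
    for edge_id, configured_core in sorted(edge_settings.items()):
        if edge_id not in defined:
            problems.append(f"{edge_id}: identifier not defined on the Core")
        elif configured_core != core_host:
            problems.append(f"{edge_id}: points at {configured_core}, not {core_host}")
        else:
            ready.append(edge_id)  # candidate for export mapping in step 4
    # Identifiers defined on the Core that no Edge has been configured with yet.
    unused = sorted(defined - set(edge_settings))
    return ready, problems, unused
```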

For examples of how to configure SteelFusion for NFS with Dell EMC Isilon or NetApp Cluster Mode, see the relevant solution guide on the Riverbed Splash site at https://splash.riverbed.com/community/product-lines/steelfusion.

SteelFusion Core interface and port configuration

This section describes a typical network port configuration. Depending on your deployment scenario, you might require additional routing configuration.

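This section includes the following topics:

• SteelFusion Core ports with NFS/file deployment

• Configuring SteelFusion Core interface routing with NFS/file deployment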

SteelFusion Core ports with NFS/file deployment

The following table summarizes the ports that connect the SteelFusion Core appliance to your network. Unless noted, the ports and descriptions apply to all SteelFusion Core models that support NFS.

| Port | Description |
| --- | --- |
| Console | Connects the serial cable to a terminal device. You establish a serial connection to a terminal emulation program for console access to the Setup Wizard and the Core CLI. |
| Primary (PRI) | Connects the Core to a VLAN switch through which you can connect to the Management Console and the Core CLI. You typically use this port for communication with Edge appliances. |
| Auxiliary (AUX) | Connects the Core to the management VLAN. You can connect a computer directly to the appliance with a crossover cable, enabling you to access the CLI or Management Console. |
| eth1_0 to eth1_3 (applies to SFCR-3500) | Connect the eth1_0, eth1_1, eth1_2, and eth1_3 ports of the Core to a LAN switch using straight-through cables. You can use the ports either for NFS connectivity or as failover interfaces when you configure the Core for high availability (HA) with another Core. In an HA deployment, failover interfaces are usually connected directly between Core peers using crossover cables. If you deploy the Core between two switches, all ports must be connected with straight-through cables. |

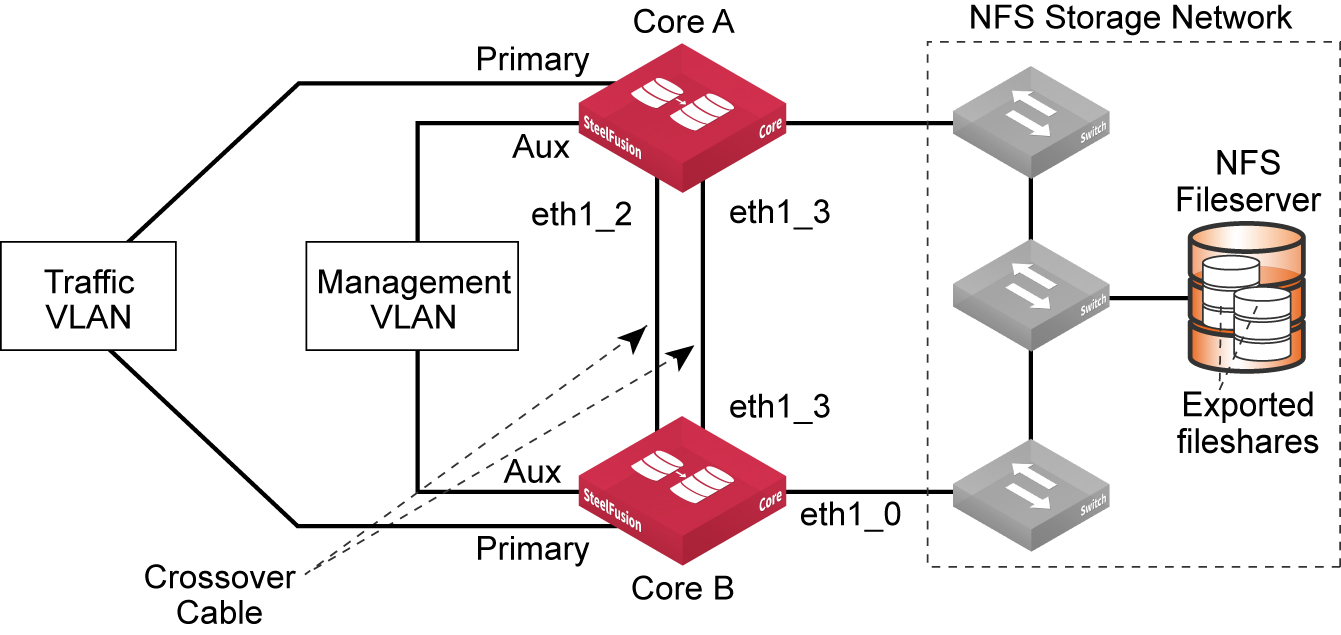

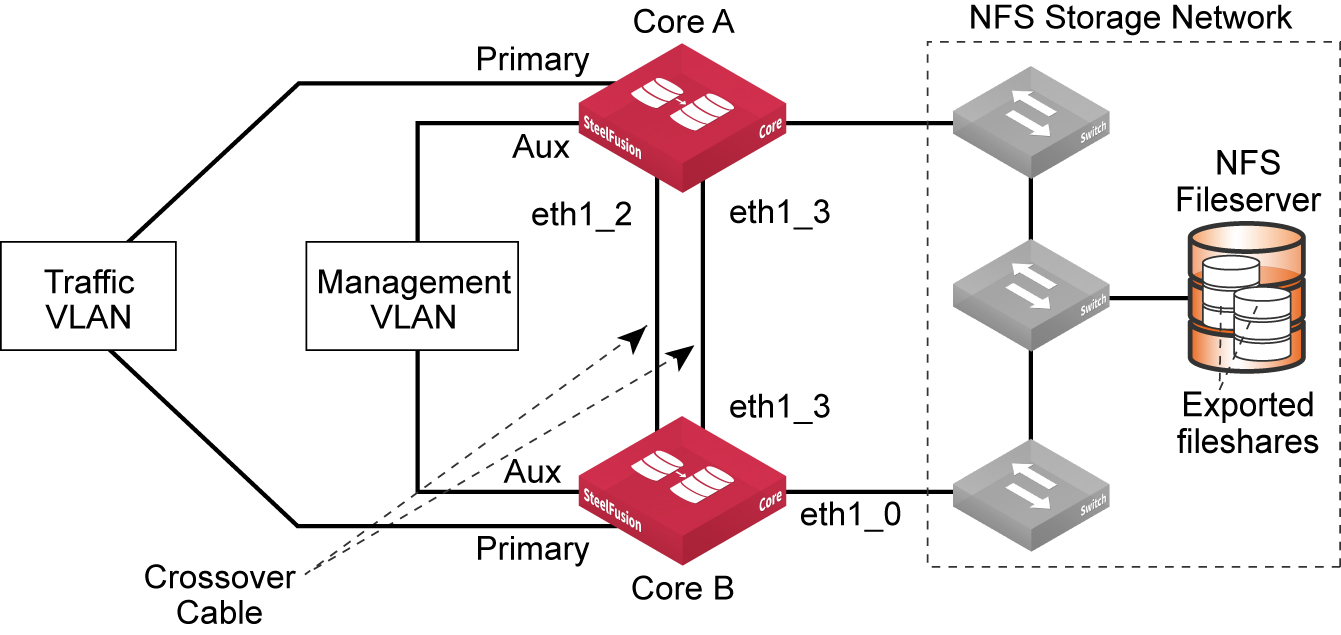

This figure shows a basic HA deployment indicating some of the SteelFusion Core ports and use of straight-through or crossover cables.

Figure: Ports for Core model 3500

Configuring SteelFusion Core interface routing with NFS/file deployment

Interface routing is configured in the same way as for a block storage deployment. For details, see Configuring interface routing.

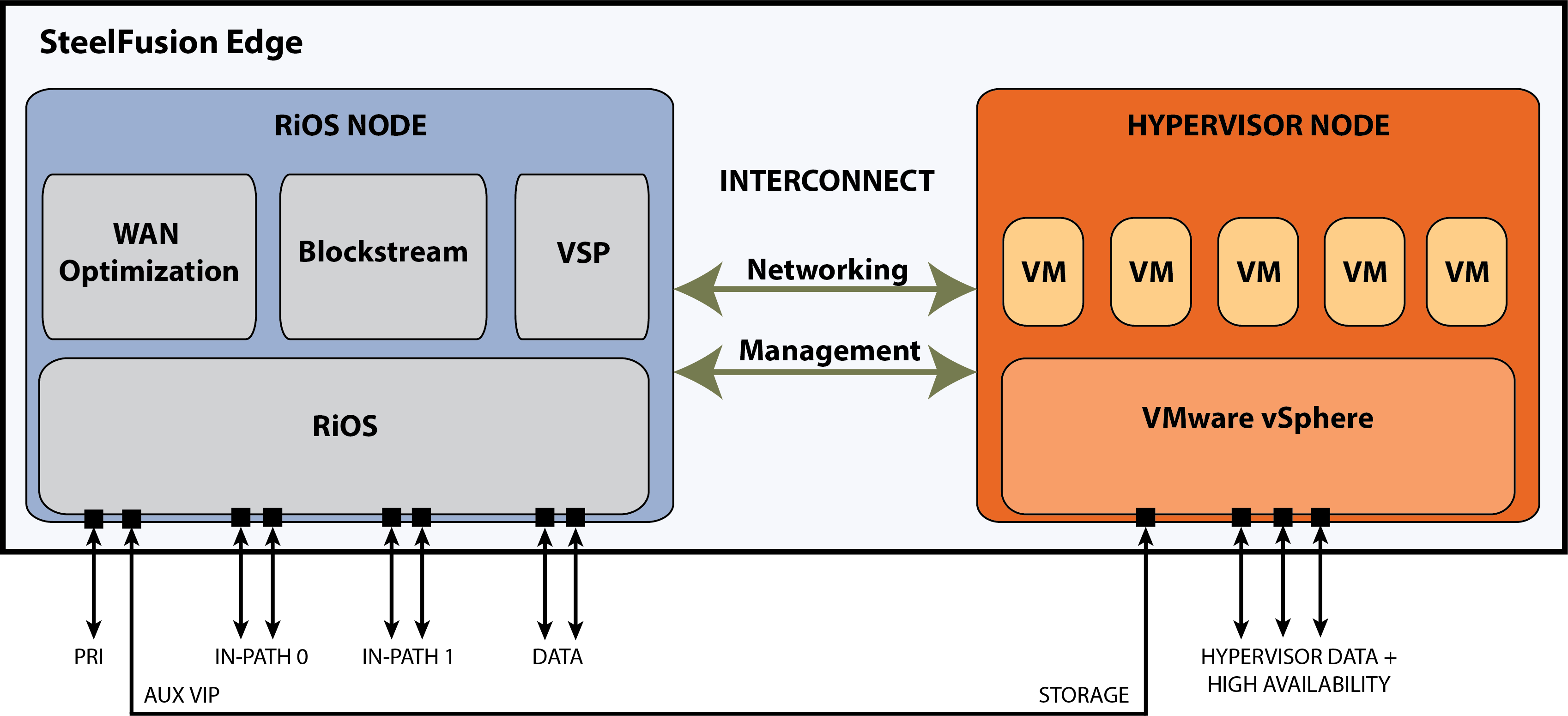

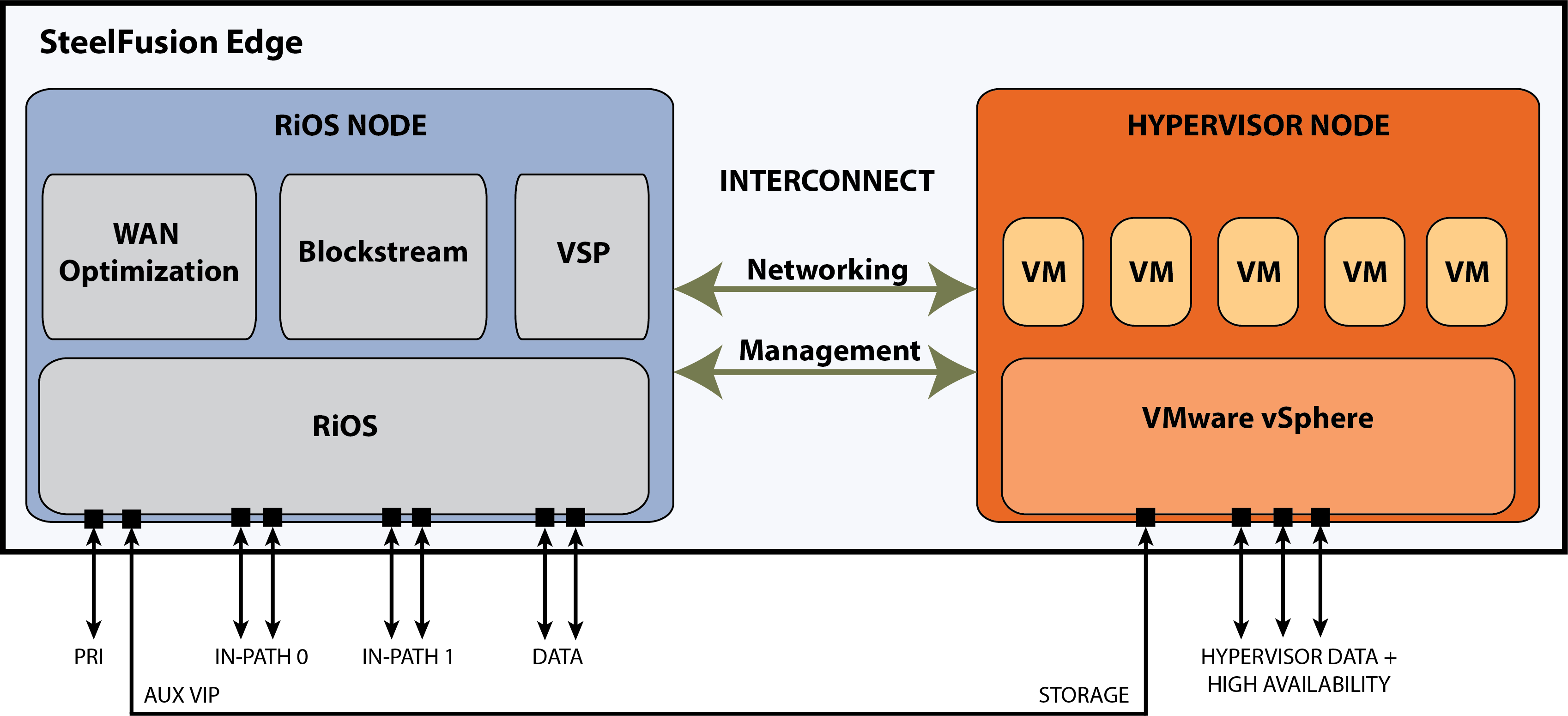

SteelFusion Edge with NFS - appliance architecture

A SteelFusion Edge appliance that is configured for an NFS/file deployment has the same basic internal architecture as an Edge that is configured for block storage deployment. This enables the majority of the existing SteelFusion features and services such as HA, prepopulation, local storage, pinned storage, snapshots, and so on to continue being supported.

This figure shows an internal diagram similar to Figure: SteelFusion Edge appliance architecture - block storage mode, but for an NFS/file deployment.

Figure: Edge architecture in NFS/file mode

Although not shown in the architecture diagram, SteelFusion version 5.0 and later include additional functionality within the RiOS node to support an NFS file server. At a high level, the NFS file server in the RiOS node interacts with the existing BlockStream and VSP components to provide branch ESXi servers, including the Edge hypervisor node, with exported fileshares. These fileshares are projected across the WAN by SteelFusion Core from centralized NFS storage. With SteelFusion Edge deployed in NFS/file mode, the internal interconnect between the RiOS node and the hypervisor node is used only for management traffic related to the health and status of the architecture. By design, the storage interconnect is not available to the NFS file server. To enable the hypervisor node to mount exported fileshares from the NFS file server in RiOS, you must create external network connections. In Figure: Edge architecture in NFS/file mode, the external network connection is shown between the RiOS node auxiliary (AUX) network interface port and one of the hypervisor node data network ports. The AUX port is configured with the virtual IP (VIP) address that is used to mount the exports on the hypervisor. Depending on your requirements, it may be possible to use other network interfaces for this external connectivity. For more details, see SteelFusion Edge ports with NFS/file deployment.

Virtual Services Platform hypervisor installation with NFS/file mode

Before performing the initial installation of the Virtual Services Platform (VSP) hypervisor in the SteelFusion Edge hypervisor node, the SteelFusion Core must already be configured and connected. See SteelFusion Core with NFS/file deployment process overview for an outline of the required tasks, and see the SteelFusion Core Management Console User’s Guide (NFS mode) for more detailed information.

Once the Core is correctly configured, you can install the VSP hypervisor. The SteelFusion Edge Management Console provides an installer wizard that guides you through the installation process. For a successful installation, be aware that exported fileshares projected from the Core and mapped to the Edge are only detected by the hypervisor if they are configured with “VSP service” or “Everyone” access permissions. Once the VSP is installed, you can add further mapped fileshares by mounting them manually with ESXi server configuration tools, such as the vSphere Client, and specifying the Edge VIP address. For more details on the VSP hypervisor installation process, see the SteelFusion Edge Management Console User’s Guide.
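The two rules in the previous paragraph can be sketched as follows: which mapped exports the installer is expected to detect, and what a manual mount against the Edge VIP looks like from the ESXi shell. This is a hypothetical illustration. The permission labels come from this guide, while the export paths, VIP, and volume names are invented. `esxcli storage nfs add` is the standard ESXi command for mounting an NFSv3 datastore and is shown here as one alternative to the vSphere Client.

```python
import shlex

# Access permissions that, per this guide, make an export visible to the VSP installer.
VSP_VISIBLE = {"VSP service", "Everyone"}


def detected_by_vsp_installer(exports):
    """Return the export paths the VSP installer wizard is expected to detect.

    exports: dict export path -> list of access permission labels (hypothetical data).
    """
    return sorted(path for path, perms in exports.items() if VSP_VISIBLE & set(perms))


def esxcli_mount_command(edge_vip, share, volume_name):
    """Build an ESXi shell command that mounts an Edge export as an NFSv3 datastore."""
    return shlex.join([
        "esxcli", "storage", "nfs", "add",
        f"--host={edge_vip}",         # always the Edge VIP, never an interface IP
        f"--share={share}",
        f"--volume-name={volume_name}",
    ])
```

For example, `esxcli_mount_command("10.1.1.50", "/ifs/branch01/ds1", "branch01_ds1")` yields `esxcli storage nfs add --host=10.1.1.50 --share=/ifs/branch01/ds1 --volume-name=branch01_ds1`.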

SteelFusion Edge interface and port configuration

This section describes a typical network port configuration. Depending on your deployment scenario, you might require additional network configuration.

This section includes the following topics:

• SteelFusion Edge ports with NFS/file deployment

• Edge Virtual IP address

SteelFusion Edge ports with NFS/file deployment

This table summarizes the ports that connect the SteelFusion Edge appliance to your network.

| Port | Description |
| --- | --- |
| Primary (PRI) | Connects the Edge to a VLAN switch through which you can connect to the Management Console and the Edge CLI. This interface is also used to connect to the Core through the Edge RiOS node in-path interface. |
| Auxiliary (AUX) | Connects the Edge to the management VLAN. The IP address for the auxiliary interface must be on a subnet different from the primary interface subnet. You can connect a computer directly to the appliance with a crossover cable, enabling you to access the CLI or Management Console. |
| lan1_0 | The Edge RiOS node uses one or more in-path interfaces to provide Ethernet network connectivity for optimized traffic. Each in-path interface comprises two physical ports: the LAN port and the WAN port. Use the LAN port to connect the Edge RiOS node to the internal network of the branch office. You can also use this port for a connection to the Primary port. A connection to the Primary port enables the blockstore traffic sent between Edge and Core to be transmitted across the WAN link. |
| wan1_0 | The WAN port is the second of the two ports that make up the Edge RiOS node in-path interface. Use the WAN port to connect the Edge RiOS node toward WAN-facing devices such as a router, firewall, or other equipment located at the WAN boundary. If you need additional in-path interfaces, or different connectivity for in-path (for example, 10 GigE or fiber), install a bypass NIC in an Edge RiOS node expansion slot. |
| eth0_0 to eth0_1 | These ports are available as standard on the Edge appliance. When configured for use by the Edge RiOS node, the ports can provide additional NFS interfaces for storage traffic to external servers. These ports can also provide redundancy for Edge high availability (HA). In such an HA design, we recommend that you use the ports for the heartbeat and BlockStream synchronization between the Edge HA peers. If additional NFS connectivity is required in an HA design, install a nonbypass data NIC in the Edge RiOS node expansion slot. |
| gbe0_0 to gbe0_3 | These ports are available as standard on the Edge appliance. When configured for use by the Edge hypervisor node, the ports provide LAN connectivity to external clients and also for management. The ports are connected to a LAN switch using a straight-through cable. If additional connectivity is required for the hypervisor node, install a nonbypass data NIC in a hypervisor node expansion slot. There are no expansion slots available for the hypervisor node on the SFED 2100 and 2200 models. There are two expansion slots on the SFED 3100, 3200, and 5100 models. Note: Your hypervisor must have access to the Edge VIP via one of these interfaces. |

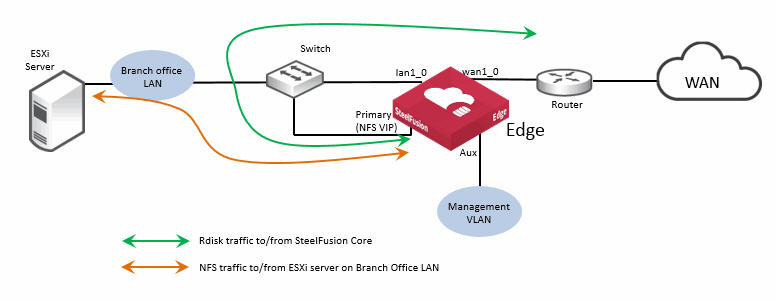

This figure shows a basic branch NFS/file deployment indicating some of the Edge network ports. In this scenario, the ESXi server is installed on the branch LAN, external to the Edge.

Figure: Edge ports used in branch NFS/file deployment - external ESXi server

Note that in this figure, the Edge auxiliary interface (Aux) is labeled NFS VIP. For details on the Edge VIP, see Edge Virtual IP address.

Edge Virtual IP address

Unlike block storage, NFSv3 does not support the concept of multipath. To provide high availability (HA) without a single point of failure, the standard industry solution for NFS file servers is to use a virtual IP (VIP) address. The VIP floats between the network interfaces of the file servers that are part of an HA deployment, and NFS clients in that deployment are configured to use the VIP address. Only the active file server in the HA configuration responds to client requests. If the active file server fails, the standby file server starts responding to client requests via the same VIP address.
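The VIP behavior described above can be illustrated with a toy model of an active/standby pair. It is a minimal sketch, assuming a two-server pair and ignoring how the VIP is actually moved on the network (for example, gratuitous ARP). The class and method names are hypothetical and do not describe SteelFusion internals.

```python
class VipPair:
    """Toy model of two file servers sharing one client-facing virtual IP."""

    def __init__(self, vip, active, standby):
        self.vip = vip
        self.active = active
        self.standby = standby

    def responder(self, dst_ip):
        """Return the server that answers a request sent to dst_ip, if any."""
        # Clients only ever address the VIP; only the active server answers it.
        return self.active if dst_ip == self.vip else None

    def fail_active(self):
        """Simulate failure of the active server: the standby takes over the VIP."""
        if self.standby is None:
            raise RuntimeError("no standby server available")
        self.active, self.standby = self.standby, None

    def rejoin(self, server):
        """A repaired server returns as the new standby."""
        self.standby = server
```

Because clients address only `vip` before and after `fail_active()`, their mount target never changes; only the server behind it does.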

Similarly, if the NFS file servers in the data center are configured for HA, it is their VIP address that is added to the Core configuration.

At the branch location, because Edge is the NFS file server, it is configured with a VIP address for the ESXi server(s) to access as NFS clients.

Note: This VIP address must be configured even if Edge is not expected to be part of an Edge HA configuration.

The Edge VIP address requires an underlying network interface with a configured IP address on the same IP subnet. The underlying network interface must be reachable by the NFS clients that require access to the fileshares exported by the SteelFusion Edge. In this case, the NFS clients are one or more ESXi servers that mount fileshares exported from the Edge and use them as datastores. The ESXi server in the Edge hypervisor node must also use an external network interface to connect to the configured VIP address. Unlike Edge deployments in block storage mode, using the internal interconnect between the RiOS node and the hypervisor node for this traffic is not supported.
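The subnet requirement can be checked before configuration with Python's standard `ipaddress` module. This is a minimal sketch: the function name and addresses are hypothetical, and the extra conditions that the VIP must not equal the interface's own address or the subnet's network or broadcast address are general IP practice assumed here rather than rules stated in this guide.

```python
import ipaddress


def vip_fits_interface(vip, interface_cidr):
    """True if the VIP lies in the subnet of the underlying interface.

    interface_cidr: the interface address with prefix, e.g. "10.1.1.10/24".
    Also rejects the interface's own address and the network/broadcast
    addresses (general IP practice, assumed for this sketch).
    """
    iface = ipaddress.ip_interface(interface_cidr)
    addr = ipaddress.ip_address(vip)
    net = iface.network
    return (addr in net
            and addr != iface.ip
            and addr not in (net.network_address, net.broadcast_address))
```

In an Edge HA pair, the same VIP must fit the underlying interface on both Edges, for example `all(vip_fits_interface("10.1.1.50", c) for c in ("10.1.1.11/24", "10.1.1.12/24"))`.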

Depending on the Edge appliance model, you may have several options for the network interface used for NFS access with the VIP address. By default, these include the Primary, Auxiliary, eth0_0, and eth0_1 interfaces. Remember that any of these four interfaces may already be in use for management of the RiOS node, rdisk traffic to and from the Edge in-path interface, or heartbeat and synchronization traffic in a SteelFusion Edge HA deployment. Therefore, select a suitable interface based on NFS connectivity requirements and traffic workload.
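One simple way to reason about this choice is to prefer the candidate interface that already carries the fewest other roles. The sketch below encodes that heuristic; the heuristic itself, the function name, and the role labels are assumptions for illustration, not a Riverbed rule, and it ignores bandwidth and workload, which you should still weigh as described above.

```python
# Default NFS/VIP interface options listed in this guide.
DEFAULT_CANDIDATES = ("primary", "aux", "eth0_0", "eth0_1")


def pick_vip_interface(roles, extra_nics=()):
    """Return the candidate interface with the fewest existing roles.

    roles:      dict interface name -> set of roles it already carries
                (hypothetical labels, e.g. {"primary": {"management"}})
    extra_nics: ethX_Y interfaces from an optional nonbypass NIC in the RiOS node
    Ties go to the interface listed first.
    """
    candidates = [*DEFAULT_CANDIDATES, *extra_nics]
    return min(candidates, key=lambda name: len(roles.get(name, ())))
```

With Primary used for management and eth0_0/eth0_1 used for Edge HA heartbeat and synchronization, the sketch returns `"aux"`, which matches the interface used for the VIP in this chapter's figures.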

If an additional nonbypass NIC is installed in the RiOS node expansion slot, then you may have additional ethX_Y interfaces available for use.

The Edge VIP address is configured in the Edge Management Console on the Storage Edge Configuration page. This figure shows a sample configuration setting with the available network interface options.

Figure: Edge VIP address configuration

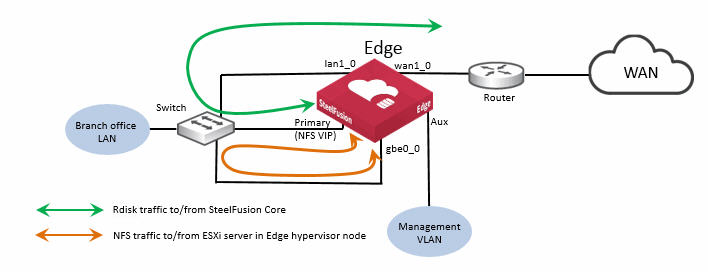

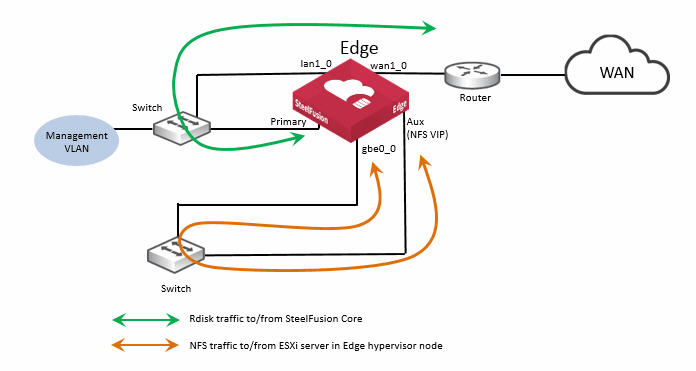

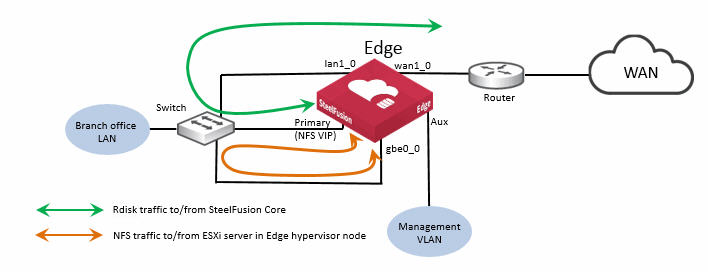

Figure: Edge ports used in branch NFS/file deployment - ESXi server in Edge hypervisor node shows a basic branch NFS/file deployment indicating some of the Edge network ports in use. In this scenario, the ESXi server is internal to the Edge, in the hypervisor node. Note that the NFS traffic for the ESXi server in the hypervisor node does not use the internal connections between the RiOS node and the hypervisor node, because that is not supported. Instead, in this example the NFS traffic is transported externally between the auxiliary port of the RiOS node and the gbe0_0 port of the hypervisor node. If there were no other connectivity requirements, these two ports could be connected with a standard Ethernet crossover cable. However, best practice is to use straight-through cables and connect via a suitable switch.

Figure: Edge ports used in branch NFS/file deployment - ESXi server in Edge hypervisor node


Overview of high availability with NFS

SteelFusion version 5.0 supports high availability (HA) for both Core and Edge in an NFS/file deployment. There are some important differences for Core and Edge HA in an NFS/file deployment compared to a block storage deployment, and they are discussed in this section. However, we strongly recommend that you also familiarize yourself with SteelFusion Appliance High-Availability Deployment, which contains information that applies to HA designs in general.

This section includes the following topics:

• SteelFusion Core high availability with NFS

• SteelFusion Edge high availability with NFS

SteelFusion Core high availability with NFS

This figure shows an example Core HA deployment for NFS.

Figure: Core ports used in HA - NFS/file deployment

In this example, the use of network interface ports is identical to what could be used for a Core HA deployment in block storage mode. Core A and Core B are interconnected with two crossover cables that provide heartbeat connectivity.

There are two NFS file servers, A and B. Although each could be configured with a virtual IP address for redundancy, this is not shown in the diagram for simplicity. File server A exports two fileshares, A1 and A2, which are mounted by Core A. File server B exports one fileshare, B1, which is mounted by Core B.

Again for simplicity, no Edge is shown in the diagram, but for the purposes of this example, assume that an Edge has been configured to connect to both Core A and Core B simultaneously in order to map all three projected fileshares: A1, A2, and B1.

Under normal conditions, when Core A and Core B are healthy, they are designed to operate in an active-active mode. Each Core controls its own fileshares but is also aware of its peer’s fileshares, and each independently services read and write operations to and from the Edge for its own fileshares.

As described in Failover states and sequences, the Cores check each other via their heartbeat interfaces to ensure that their peer is healthy. In a healthy state, both peers are reported as being ActiveSelf.

Failover is triggered in the same way as for a block storage deployment, following the loss of nine consecutive heartbeats that normally occur at one-second intervals. When a failover scenario occurs in a SteelFusion Core HA with NFS, the surviving Core transitions to the “ActiveSolo” state. However, this is where the situation differs from a Core HA deployment in block storage mode.
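The failover trigger reduces to simple counting: nine consecutive missed heartbeats at one-second intervals means failover is declared roughly 9 × 1 s = 9 s after the last heartbeat arrives. The sketch below models that counter from one Core's point of view. The class name is hypothetical, and treating a single healthy heartbeat as enough to return to ActiveSelf is a simplification; the actual recovery criteria are not described in this guide.

```python
MISSED_LIMIT = 9       # consecutive missed heartbeats that trigger failover
INTERVAL_S = 1.0       # normal heartbeat interval in seconds

# Failover is declared roughly MISSED_LIMIT x INTERVAL_S after the last heartbeat.
DETECTION_TIME_S = MISSED_LIMIT * INTERVAL_S


class HeartbeatMonitor:
    """Tracks a Core peer's heartbeats and reports this Core's HA state."""

    def __init__(self):
        self.missed = 0
        self.state = "ActiveSelf"

    def tick(self, heartbeat_received):
        """Call once per heartbeat interval with whether the peer's heartbeat arrived."""
        if heartbeat_received:
            self.missed = 0                # any heartbeat resets the count
            self.state = "ActiveSelf"      # simplification, see lead-in
        else:
            self.missed += 1
            if self.missed >= MISSED_LIMIT:
                self.state = "ActiveSolo"
        return self.state
```

Eight missed heartbeats followed by one that arrives resets the count, so intermittent loss shorter than nine intervals never triggers failover in this model.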

In this condition with an NFS/file deployment, the surviving Core transitions all exported fileshares that are part of the HA configuration and projected to Edges into read-only mode. In our example, this includes A1, A2, and B1.

It is important to understand that the “read-only mode” transition is from the perspective of the surviving Core and any Edges that are connected to the HA pair. There is no change made to the state of the exported fileshares on the backend NFS file servers. They remain in read-write mode.

With the surviving Core in ActiveSolo state and the NFS exports in read-only mode, the following scenarios apply:

• The ActiveSolo Core will defer all commits arriving from its connected Edges.

• The ActiveSolo Core will defer all snapshot requests coming in from its connected Edges.

• Edges connected to the ActiveSolo Core will absorb writes locally in the blockstore and acknowledge, but commits will be paused.

• Edges connected to the ActiveSolo Core will continue to service read requests locally if the data is resident in the blockstore, and will request nonresident data via the ActiveSolo Core as normal.

• Pinning and prepopulation of exported fileshares will continue to operate.

• Mounting new exported fileshares on the ActiveSolo Core from backend NFS file servers is permitted.

• Mapping exported fileshares from the ActiveSolo Core to Edge appliances will still be allowed.

• Any operation to offline an exported fileshare will be deferred.

• Any operation on backend NFS file servers to resize an exported fileshare will be deferred.
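The Core-side items in the list above amount to a lookup table of "allow" or "defer" per operation. The following sketch transcribes them as such; the operation keys and function name are hypothetical labels, not SteelFusion identifiers, and the Edge-side behavior (absorbing writes locally and serving resident reads) is not modeled.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    DEFER = "defer"


# Core-side handling while in ActiveSolo, transcribed from the list above.
# The operation keys are hypothetical labels, not SteelFusion identifiers.
ACTIVE_SOLO_POLICY = {
    "edge_commit": Action.DEFER,
    "edge_snapshot_request": Action.DEFER,
    "pin_or_prepopulate": Action.ALLOW,
    "mount_new_export": Action.ALLOW,
    "map_export_to_edge": Action.ALLOW,
    "offline_export": Action.DEFER,
    "resize_export": Action.DEFER,
}


def handle(operation, core_state, deferred):
    """Decide how the Core treats an operation; deferred ones are queued in order."""
    if core_state != "ActiveSolo":
        return Action.ALLOW  # both Cores healthy: normal read-write service
    action = ACTIVE_SOLO_POLICY[operation]
    if action is Action.DEFER:
        deferred.append(operation)
    return action
```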

Once the failed SteelFusion Core in an HA configuration comes back online and starts communicating healthy heartbeat messages to the ActiveSolo Core, recovery to normal service is automatic. Both Core appliances return to an ActiveSelf state and exported fileshares are transitioned back to read-write mode.

All pending commits for the connected Edge appliances will be completed and any other deferred operations will resume and complete.
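The recovery behavior described above can be pictured as a queue that fills while the Core is in ActiveSolo and drains once the peer returns. This toy model is a sketch under stated assumptions: the class and method names are hypothetical, only operations of a kind the guide lists as deferred should be passed to `submit`, and replaying them in arrival order is an assumption rather than documented behavior.

```python
from collections import deque


class SurvivingCore:
    """Toy model of the surviving Core's deferred-work queue across a failover."""

    def __init__(self):
        self.state = "ActiveSelf"
        self.export_mode = "read-write"   # as seen by this Core and its Edges
        self.pending = deque()

    def enter_active_solo(self):
        self.state = "ActiveSolo"
        # Read-only applies only to the Core/Edge view; backend exports stay read-write.
        self.export_mode = "read-only"

    def submit(self, operation, apply):
        """Submit an operation of a kind the guide lists as deferred in ActiveSolo."""
        if self.state == "ActiveSolo":
            self.pending.append((operation, apply))
            return "deferred"
        apply(operation)
        return "done"

    def peer_recovered(self):
        """Healthy heartbeats are back: resume service and drain deferred work."""
        self.state = "ActiveSelf"
        self.export_mode = "read-write"
        while self.pending:
            operation, apply = self.pending.popleft()
            apply(operation)  # assumption: replayed in arrival order
```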

Note: In circumstances where it is absolutely necessary, it is possible to “force” a transition back to read-write mode while in an ActiveSolo state. Contact Riverbed Support for assistance.

If a Core that is part of an HA deployment needs to be replaced, see Replacing a Core in an HA deployment for further guidance.

SteelFusion Edge high availability with NFS

Similar to Core HA deployments with NFS, Edge HA configuration with NFS also has some differences compared to Edge HA in a block storage scenario. However, we still recommend that you review SteelFusion Appliance High-Availability Deployment to become familiar with Edge HA designs in general.

This figure shows an example of a basic Edge HA design with NFS.

Figure: Ports used in Edge HA NFS/file deployment - external ESXi server

The basic example design shown in this figure illustrates the connectivity between the Edge peers (Edge A and Edge B). In this example, the ESXi server is located on the branch office LAN, external to the SteelFusion Edge appliances. A production HA design will most likely include additional routing and switching equipment, but for simplicity it is not shown in the diagram. See also Figure: Ports used in Edge HA NFS/file deployment - ESXi server in hypervisor node for an example of a basic design where the ESXi server is located within the Edge hypervisor node.

As with Edge HA in a block storage deployment, best practice is to connect both Edge appliances using the eth0_0 and eth0_1 network interfaces for heartbeat and blockstore synchronization.

When configured as an HA pair, the Edges operate in active-standby mode. Both Edge appliances are configured with the virtual IP (VIP) address. The underlying interface on each Edge (the auxiliary port in the example in Figure: Ports used in Edge HA NFS/file deployment - external ESXi server) must be configured with an IP address on the same subnet.

The ESXi server is configured with the VIP address for the NFS file server of the Edge HA pair. In their active-standby roles, it is the active Edge that responds via the VIP to NFS read/write requests from the ESXi server. Just as with a block storage HA deployment, it is also the active Edge that is communicating with the attached Core to send and receive data across the WAN link. The standby Edge takes no part in any communication other than to send/receive heartbeat messages and synchronous blockstore updates from the active Edge.

In the event that the active Edge fails, the standby Edge takes over and begins responding to the ESXi server via the same VIP on its interface.

For more details about the communication between Edge HA peers, see SteelFusion Edge HA peer communication.

This figure shows another basic Edge HA design, but in this case, the ESXi server is located in the hypervisor node of the Edge. Remember that in an NFS/file deployment with Edge, communication between the NFS file server in the SteelFusion Edge RiOS node and the ESXi server in the hypervisor node is performed externally via suitable network interfaces.

Figure: Ports used in Edge HA NFS/file deployment - ESXi server in hypervisor node

In this example, the NFS file server is accessible via the VIP address configured on the auxiliary (Aux) port and connects to the hypervisor node using the gbe0_0 network interface port.

Edge NFS/file deployments also support configuring the VSP on both Edge appliances to provide active-passive capability for any virtual machines (VMs) hosted on the hypervisor node.

For more details about how to configure Edge HA, see the SteelFusion Edge Management Console User’s Guide.

Snapshots and backup

With SteelFusion version 4.6, the features related to snapshot and backup were enhanced from a LUN-based approach to provide for server-level data protection. With NFS/file deployments using SteelFusion version 5.0 and later, this style of data protection supports branch ESXi servers including both the ESXi server in the hypervisor node of the Edge as well as external ESXi servers installed on the branch office LAN that use datastores mounted via NFS from the Edge.

The feature automatically detects the virtual machines (VMs) that reside only on vSphere datastores mounted remotely from the Edge via NFS. Any VM that resides on a local ESXi datastore, or on local storage provided by the Edge (not projected by the Core), is filtered out and automatically excluded from any Core backup policy.

Server-level backup policies are dynamically updated when VMs are added to, or removed from, ESXi servers that are included in the policy.
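The inclusion rule above can be sketched as a filter over the VM inventory: a VM qualifies only if every datastore it uses is an NFS datastore mounted from the Edge and backed by a Core-projected export. The data model, field names, and the use of the export path to tell projected exports from Edge local storage are hypothetical choices for this sketch; the Core performs the real detection.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Datastore:
    name: str
    kind: str               # "nfs" or "vmfs" (hypothetical labels)
    remote_host: str = ""   # NFS server address; empty for local VMFS
    remote_path: str = ""   # exported path on that server


def vms_in_policy(vm_datastores, datastores, edge_vip, projected_paths):
    """Return the VMs that a server-level policy would protect.

    vm_datastores:   dict VM name -> list of datastore names holding its files
    datastores:      dict datastore name -> Datastore
    edge_vip:        the Edge VIP that ESXi uses to mount Edge exports
    projected_paths: export paths that the Core projects to this Edge
    """
    def projected(ds_name):
        ds = datastores[ds_name]
        return (ds.kind == "nfs"
                and ds.remote_host == edge_vip
                and ds.remote_path in projected_paths)

    # A VM qualifies only if every datastore it uses is a Core-projected export.
    return sorted(vm for vm, names in vm_datastores.items()
                  if names and all(projected(n) for n in names))
```

Rerunning the filter whenever VMs are added to or removed from an ESXi server mirrors the dynamic policy updates described above.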

By default, the policies protect VMs in a nonquiesced state. To back up VMs in a quiesced state, edit the policy on the Core using the following CLI command:

storage backup group modify <group-id> quiesce-vm-list <VM1>,<VM2>

In this command, <group-id> is the identifier of the policy's protection group, and <VM1>,<VM2> is a comma-separated list of the VMs to be quiesced. For more details on this command, see the SteelFusion Command-Line Interface Reference Manual.
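When the list of VMs is long, it can help to generate the command line rather than type it. The following Python sketch only assembles a string in the form shown above; the helper name `quiesce_command` and the group identifier `branch-42` are hypothetical. Because this sketch does not know how the CLI expects VM names containing spaces or commas to be quoted, it rejects them; check the SteelFusion Command-Line Interface Reference Manual for the exact syntax before using the output on the Core.

```python
def quiesce_command(group_id, vm_names):
    """Build a 'storage backup group modify ... quiesce-vm-list ...' command line.

    Hypothetical helper: names containing commas or whitespace are rejected
    because their quoting rules are not covered by this sketch.
    """
    if not vm_names:
        raise ValueError("at least one VM name is required")
    for name in vm_names:
        if not name or "," in name or any(ch.isspace() for ch in name):
            raise ValueError(f"unsupported VM name for this sketch: {name!r}")
    return f"storage backup group modify {group_id} quiesce-vm-list {','.join(vm_names)}"


print(quiesce_command("branch-42", ["web01", "db01"]))
# storage backup group modify branch-42 quiesce-vm-list web01,db01
```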

Note: Although the server-level backup feature can also protect physical Windows servers, this capability is supported only with SteelFusion deployments in block storage mode.

As part of the overall SteelFusion data protection feature, snapshots taken under the control of a server-level backup policy configured on the Core are protected by mounting each snapshot on a proxy server and backing it up with a supported backup application. This action is also performed automatically, according to the schedule configured in the backup policy.

Remember that with a SteelFusion NFS/file deployment, backups are performed at the server level only: they protect the individual VMs that reside in the fileshare exported from the Edge. An exported fileshare may also contain directories and subdirectories within the ESXi datastore that hold data other than the VMs themselves. This data is not included in the server-level backup policy defined on the Core.

It is possible to manually trigger a snapshot of the entire fileshare export using the SteelFusion Edge Management Console. This will cause a crash-consistent snapshot of the relevant fileshare to be taken on the backend NFS file server located in the data center. To achieve this, the Core must already be configured with the relevant settings for the backend NFS file server so that it can complete the snapshot operation requested by the Edge.

To specify these settings, in the SteelFusion Core Management Console choose Configure > NFS: Storage Arrays and select the Snapshot Configuration tab. The figure “Snapshot configuration on the Core - NFS/file deployment” shows an example with the required details for the NFS file server.

A manually triggered snapshot of the entire exported fileshare, taken on the backend NFS file server in the data center, is not part of any SteelFusion server-level backup policy and therefore is not backed up automatically. Additional third-party configuration, data management applications, scripts, or tools external to the Core may be required to ensure that such a snapshot is backed up. However, these processes and applications are very likely already available in the data center.

Figure: Snapshot configuration on the Core - NFS/file deployment

For a list of storage arrays supported in Core version 5.0, see “Existing SteelFusion features available with NFS/file deployments.”

If you manually trigger a snapshot by clicking Take Snapshot in the SteelFusion Edge Management Console and the procedure fails to complete, check the log entries on the Edge. This is especially relevant if the backend NFS file server is a NetApp storage system.

If there are error messages that include the text “Missing aggr-list. The aggregate must be assigned to the VServer for snapshots to work,” see this Knowledge Base article on the Riverbed Support site:

This article describes the configuration settings required on the NFS file server. For more details, see the SteelFusion NetApp Solution Guide on the Riverbed Splash site.

See the SteelFusion Core Management Console User’s Guide for more details on snapshot and backup configuration.

Best practices

In general, you can apply the concepts in “Deployment Best Practices” to SteelFusion deployments with NFS. Some areas of guidance are specific to NFS, and these are covered in the following sections:

Core with NFS - best practices

This section describes best practices related to SteelFusion Core. It includes the following topics:

Network path redundancy

Core does not support multipath I/O (MPIO). For redundancy between the Core and the backend NFS file server, consider using a virtual IP (VIP) address on the NFS file server interfaces, if the file server vendor supports it.

Interface selection

In a deployment where multiple network interfaces are configured on the Core to connect to backend NFS file servers, you cannot select which interface carries the NFS traffic: any interface with a route to the NFS file server may be used. If you must use specific Core interfaces to connect to specific NFS file server ports, consider configuring static routes on the Core to the specific NFS file server IP addresses.
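Static routes work here because IP forwarding picks the most specific (longest-prefix) route that matches the destination address, so a host route to one NFS file server IP overrides a broader subnet or default route. The Python sketch below models that rule with a hypothetical routing table; the interface names, subnets, and addresses are illustrative only, and the sketch does not show the Core CLI syntax for adding a route (see the SteelFusion Command-Line Interface Reference Manual for that).

```python
import ipaddress

# Hypothetical Core routing table: (destination prefix, outgoing interface).
ROUTES = [
    ("0.0.0.0/0", "primary"),       # default route
    ("10.20.0.0/16", "eth0_0"),     # route to the storage subnet
    ("10.20.30.41/32", "eth0_1"),   # static host route for one NFS file server IP
]


def select_interface(dest_ip, routes=ROUTES):
    """Return the interface of the longest-prefix route that matches dest_ip."""
    addr = ipaddress.ip_address(dest_ip)
    best = None
    for prefix, interface in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, interface)
    if best is None:
        raise LookupError(f"no route to {dest_ip}")
    return best[1]


print(select_interface("10.20.30.41"))  # eth0_1: the host route wins
print(select_interface("10.20.30.42"))  # eth0_0: the subnet route
print(select_interface("192.0.2.10"))   # primary: the default route
```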

Editing files on exported fileshares

Do not edit files on the exported fileshares by accessing them directly unless the fileshare has already been unmounted from the Core. Where possible, restrict direct access to exported files on the file server, and ensure that only the Core has write permission on the exported fileshares. You can achieve this with IP-based or hostname-based access control in the export settings on the NFS file server. If necessary, other hosts can mount the exported fileshares read-only.
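As one illustration of IP-based access control, the Python sketch below produces an export entry in the format used by the Linux kernel NFS server (/etc/exports), granting read-write access to the Core's address and read-only access to other clients. This assumes a Linux-based file server; arrays such as NetApp or Isilon define equivalent export rules through their own management tools. The path, addresses, and export options are hypothetical examples to adapt to your vendor's guidance, and this sketch accepts IP addresses and subnets only, not hostnames.

```python
import ipaddress


def exports_line(path, core_ip, readonly_clients=()):
    """Return an /etc/exports entry: read-write for the Core, read-only for others.

    Assumes a Linux kernel NFS server; the option sets are examples only.
    """
    ipaddress.ip_address(core_ip)  # raises ValueError if not a valid IP address
    clients = [f"{core_ip}(rw,sync,no_subtree_check)"]
    for client in readonly_clients:
        ipaddress.ip_network(client, strict=False)  # validate a host or subnet
        clients.append(f"{client}(ro,sync,no_subtree_check)")
    return f"{path} " + " ".join(clients)


print(exports_line("/exports/branch01", "10.20.1.5", ["10.30.0.0/24"]))
# /exports/branch01 10.20.1.5(rw,sync,no_subtree_check) 10.30.0.0/24(ro,sync,no_subtree_check)
```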

Size of exported fileshares

The Core supports exported fileshares of up to 16 TiB each. However, the number of mounted exports supported depends on the Core model.

Note (EMC Isilon only): If you are provisioning and exporting NFS storage on an Isilon storage system, we recommend that you set a storage quota on the directory so that the export does not exceed 16 TiB. For details, see “Deploying SteelFusion with Dell EMC Isilon for NFS” on the Riverbed Splash site.
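The limit is expressed in binary units (1 TiB = 2^40 bytes), so a quota or export size reported in decimal terabytes must be converted before it is compared. A minimal Python check, assuming the export size is known in bytes (the function name is hypothetical):

```python
TIB = 2 ** 40                # one tebibyte in bytes
MAX_EXPORT_BYTES = 16 * TIB  # per-export limit on the Core: 17,592,186,044,416 bytes


def export_size_ok(size_bytes):
    """Return True if an export of size_bytes is within the 16 TiB per-export limit."""
    return 0 < size_bytes <= MAX_EXPORT_BYTES


print(export_size_ok(16 * TIB))       # True: exactly at the limit
print(export_size_ok(17 * 10 ** 12))  # True: 17 TB (decimal) is about 15.46 TiB
print(export_size_ok(18 * 10 ** 12))  # False: 18 TB (decimal) is about 16.37 TiB
```

In other words, a quota of "17 TB" set in decimal units stays under the limit, while "18 TB" does not.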

Resizing exported fileshares

Exported fileshares can be expanded on the backend NFS file server without first unmounting them from the Core. The resized export will be detected by the Core automatically within approximately one minute. Reduction of exported fileshares is not supported.
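Because the Core detects an expansion by itself, the only thing worth checking before a change on the file server is that the new size is neither a reduction nor above the 16 TiB per-export limit described above. The Python sketch below states that rule as a pre-change check, for example in a script an administrator runs before editing a quota; the function name and return values are hypothetical.

```python
TIB = 2 ** 40
MAX_EXPORT_BYTES = 16 * TIB  # per-export limit on the Core


def classify_resize(old_bytes, new_bytes):
    """Classify a planned size change for an export mounted on the Core.

    Returns "expand" or "unchanged". Raises ValueError for a reduction
    (not supported) or for a new size above the per-export limit.
    """
    if new_bytes < old_bytes:
        raise ValueError("reducing an exported fileshare is not supported")
    if new_bytes > MAX_EXPORT_BYTES:
        raise ValueError("new size exceeds the 16 TiB per-export limit")
    return "expand" if new_bytes > old_bytes else "unchanged"


print(classify_resize(8 * TIB, 10 * TIB))  # expand
```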

Replacing a Core

Replacing a Core as part of an RMA operation requires an extra configuration task if the Core is part of an NFS/file deployment. By default, a replacement Core ships with a standard software image that normally operates in block storage mode. As part of the replacement procedure, if necessary, first update the Core software to a version that supports NFS (minimum version 5.0). When you upgrade, make sure that both image partitions are updated so that the Core cannot accidentally reboot into a version that does not support NFS/file mode. Once the required software version is installed, instruct the Core to operate in NFS/file mode by entering the following two commands in the Core command-line interface:

service reset set-mode-file

service reset set-mode-file confirm

The first command responds with a request for confirmation; you then enter the second command.

Note: This operation clears any storage configuration on the Core, so make sure that you run the commands on the correct Core appliance.

Once the Core is configured for NFS operations, it can be configured with the relevant storage settings.
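The precondition in this procedure, that both image partitions run version 5.0 or later before NFS/file mode is set, can be written as a small pre-check. How you read the two partition versions is outside this sketch, and the version strings shown are hypothetical. Versions are compared numerically (padding the shorter one with zeros), so a string such as "5" counts as 5.0 and "5.10" counts as newer than "5.9".

```python
import re

MIN_NFS_VERSION = (5, 0)  # NFS/file mode requires SteelFusion 5.0 or later


def parse_version(text):
    """Extract the leading numeric part of a version string, e.g. '5.0.1a' -> (5, 0, 1)."""
    match = re.match(r"\d+(?:\.\d+)*", text.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def at_least(version, minimum=MIN_NFS_VERSION):
    """Compare versions numerically, padding the shorter tuple with zeros."""
    v = parse_version(version)
    width = max(len(v), len(minimum))
    return v + (0,) * (width - len(v)) >= minimum + (0,) * (width - len(minimum))


def both_partitions_support_nfs(partition_versions):
    """True only if exactly two partition versions are given and both support NFS/file mode."""
    return len(partition_versions) == 2 and all(at_least(v) for v in partition_versions)


print(both_partitions_support_nfs(["5.0.1", "5.0.1"]))  # True
print(both_partitions_support_nfs(["5.0.1", "4.6.0"]))  # False: the other partition is too old
```

The same check applies when replacing an Edge, which has the same two-partition requirement.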

Replacing a Core in an HA deployment

If a Core that is part of an HA deployment needs to be replaced, use the following sequence of steps as a guideline to help with replacement of the Core:

• Consider opening a ticket with Riverbed Support. If you are not familiar with the replacement process, Riverbed Support can guide you safely through the required tasks.

• Stop the service on the Core to be replaced. This will cause the peer Core to transition to ActiveSolo state and take control of fileshares that had been serviced by the Core to be replaced. The ActiveSolo Core will change all the fileshares to read-only mode for the Edges.

• If the replacement Core will not be installed for an extended period (days), contact Riverbed Support for guidance on forcing the filesystems back to read-write mode.

• Once the replacement Core is installed and configured to operate in NFS/file mode, you can update it with the relevant storage configuration settings by sending them from the ActiveSolo Core. To do this, run the following command on the ActiveSolo Core:

device-failover peer set <new-peer-ip> local-if <interface> force

In this command, <new-peer-ip> is the IP address of the replacement Core appliance and <interface> is the local interface of the ActiveSolo Core used to connect to the replacement Core IP address. For more details on this command, see the SteelFusion Command-Line Interface Reference Manual.

Note: Ensure that you enter commands on the correct Core appliance.
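Because a mistyped address or interface in this command is entered on the surviving ActiveSolo Core, a script that prepares the command may want to validate its inputs first. The Python sketch below is hypothetical: it checks only that the peer address is a syntactically valid IPv4 or IPv6 address and that the interface name is non-empty and contains no whitespace, then assembles the command in the form shown above. It does not know which interfaces actually exist on your Core, and the address and interface name in the example are illustrative.

```python
import ipaddress


def failover_peer_command(new_peer_ip, local_if):
    """Assemble the 'device-failover peer set' command for the ActiveSolo Core.

    Hypothetical helper: validates the address format and interface name only.
    """
    addr = ipaddress.ip_address(new_peer_ip)  # ValueError if malformed
    if not local_if or any(ch.isspace() for ch in local_if):
        raise ValueError(f"invalid interface name: {local_if!r}")
    return f"device-failover peer set {addr} local-if {local_if} force"


print(failover_peer_command("10.1.1.22", "eth0_0"))
# device-failover peer set 10.1.1.22 local-if eth0_0 force
```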

Edge with NFS - best practices

This section describes best practices related to SteelFusion Edge. It includes the following topics:

General purpose file server

SteelFusion Edge with NFS is not designed to be a general purpose NFS file server and is not supported in this role.

Alternative ports for VIP address

If an optional network interface card (NIC) installed in the RiOS node provides additional nonbypass ports, you can assign the VIP address to those ports.

Unmounting ESXi datastores

Before unmapping or taking offline any NFS exports on the Edge, ensure that the ESXi datastore that corresponds to the exported fileshare has been unmounted.
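If you script export changes on the Edge, the ordering rule above can be enforced with a check like the following Python sketch. The mapping from ESXi host names to the NFS datastores they have mounted, and the identification of an export as a (server address, export path) pair, are assumptions for illustration; collecting that information from vSphere is not shown, and all names and addresses are hypothetical.

```python
def hosts_still_mounting(export, mounts):
    """Return the ESXi hosts that still have a datastore backed by `export`.

    export: (server, path) tuple, for example the Edge VIP address and export path.
    mounts: dict mapping ESXi host name -> set of (server, path) datastores mounted.
    """
    return sorted(host for host, mounted in mounts.items() if export in mounted)


def safe_to_offline(export, mounts):
    """True only when no ESXi host still mounts the export as a datastore."""
    return not hosts_still_mounting(export, mounts)


mounts = {
    "esxi-hyp": {("10.1.1.50", "/exports/ds1")},
    "esxi-02": {("10.1.1.50", "/exports/ds1"), ("10.1.1.50", "/exports/ds2")},
}
print(hosts_still_mounting(("10.1.1.50", "/exports/ds1"), mounts))  # ['esxi-02', 'esxi-hyp']
print(safe_to_offline(("10.1.1.50", "/exports/ds3"), mounts))       # True
```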

Sparse files

Exported fileshares that contain sparse files can be mounted by the Core and projected to the Edge. However, SteelFusion does not maintain the sparseness, so pinning the exported fileshare may not be possible. If a sparse file is created through the Edge, it is correctly synchronized back to the backend NFS file server. However, if the export that contains the sparse file is taken offline or unmounted, the sparseness is not maintained after the export is brought back online or remounted.
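To find out whether an export contains sparse files before you project or pin it, one common heuristic on a POSIX host that can read the export is to compare the space allocated to each file with its apparent size. The Python sketch below uses `os.stat` and its `st_blocks` field, which counts 512-byte units on Linux and is not available on Windows. Compressed file systems and some NFS clients can also report less allocation than the apparent size, so treat a positive result as an indication rather than proof. The file names in the demonstration are hypothetical.

```python
import os
import tempfile


def is_sparse(path):
    """Heuristic: True if fewer bytes are allocated than the file's apparent size."""
    st = os.stat(path)
    return st.st_blocks * 512 < st.st_size  # st_blocks counts 512-byte units


def find_sparse_files(root):
    """Walk a directory tree (for example, a mounted export) and list sparse files."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            if os.path.isfile(full) and not os.path.islink(full) and is_sparse(full):
                found.append(full)
    return sorted(found)


# Demonstration in a temporary directory: one sparse file and one fully written file.
with tempfile.TemporaryDirectory() as tmp:
    thin = os.path.join(tmp, "thin.vmdk")
    with open(thin, "wb") as f:
        f.truncate(64 * 1024 * 1024)  # 64 MiB apparent size, no data written
    dense = os.path.join(tmp, "dense.bin")
    with open(dense, "wb") as f:
        f.write(b"x" * 65536)  # data actually written, so blocks are allocated
        f.flush()
        os.fsync(f.fileno())
    print([os.path.basename(p) for p in find_sparse_files(tmp)])
```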

Changing Edge VIP address

If you need to change the VIP address on the Edge appliance, you may need to remount the datastores. A VIP address change also affects the datastore UUID generated by the ESXi server. For more details on the additional administration steps required, see this Knowledge Base article on the Riverbed Support site:

https://supportkb.riverbed.com/support/index?page=content&id=S30235

Replacing an Edge

Replacing an Edge as part of an RMA operation requires an extra configuration task if the Edge is part of an NFS/file deployment. By default, a replacement Edge ships with a standard software image that normally operates in block storage mode. As part of the replacement procedure, if necessary, first update the Edge software to a version that supports NFS (minimum version 5.0). When you upgrade, make sure that both image partitions are updated so that the Edge cannot accidentally reboot into a version that does not support NFS/file mode. Once the required software version is installed, the Edge must be configured to operate in NFS/file mode. This task requires guidance from Riverbed Support, so make sure that you open a case with Riverbed.

Once the Edge is configured for NFS operations, it can be configured with the relevant storage settings.

Related information

• SteelFusion Core Management Console User’s Guide

• SteelFusion Edge Management Console User’s Guide

• SteelFusion Core Installation and Configuration Guide

• SteelFusion Edge Installation and Configuration Guide

• SteelFusion Command-Line Interface Reference Manual