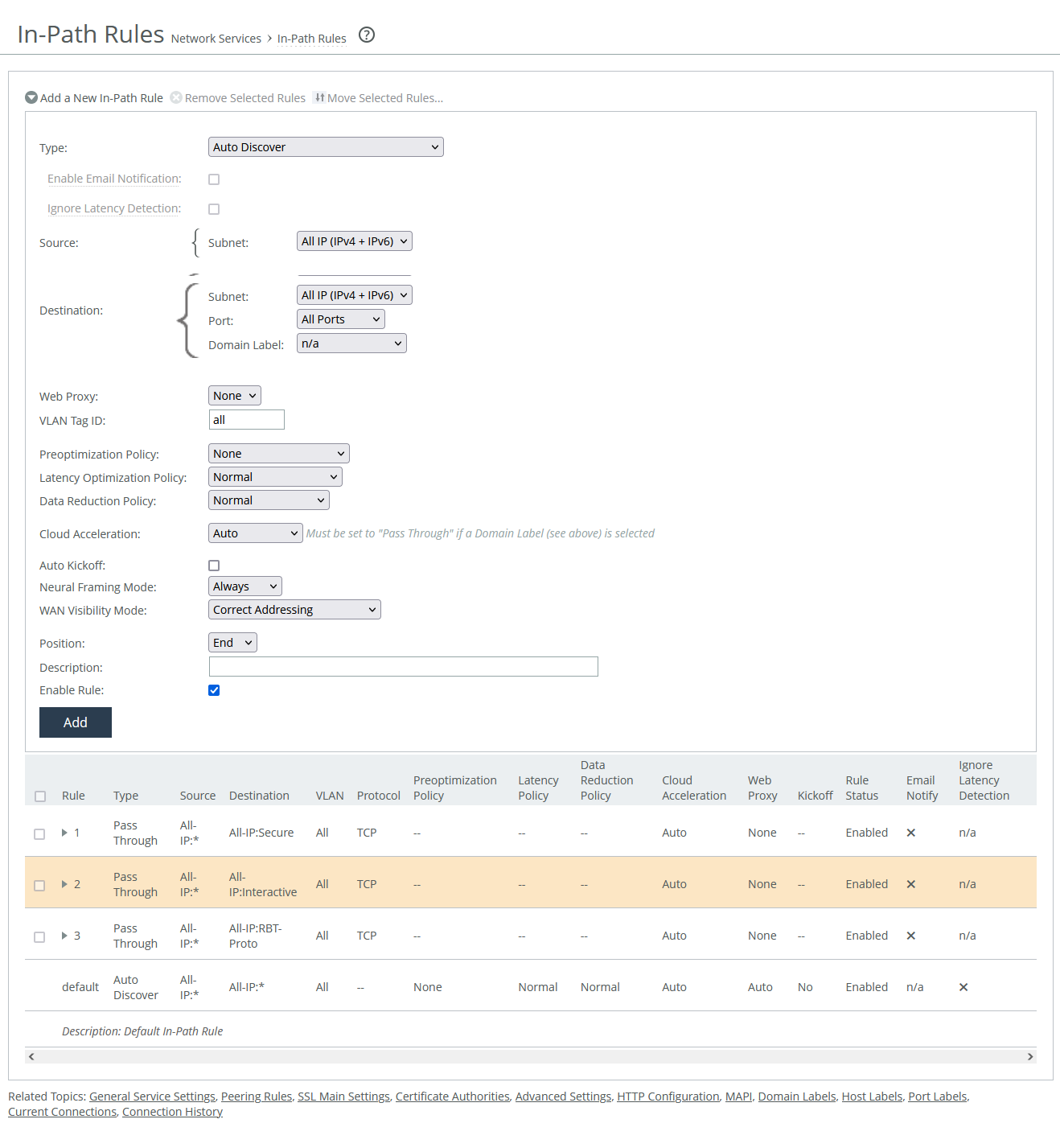

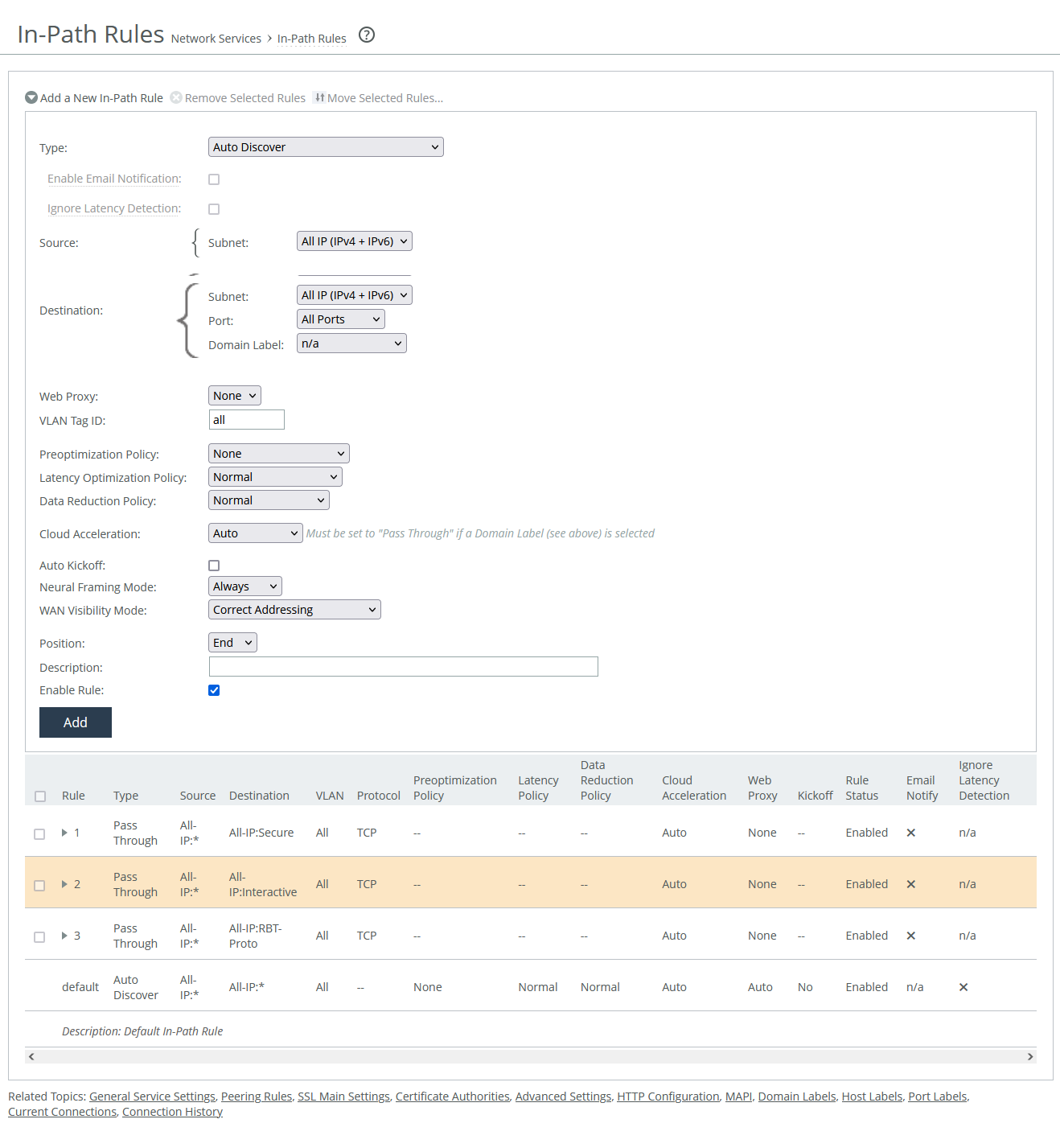

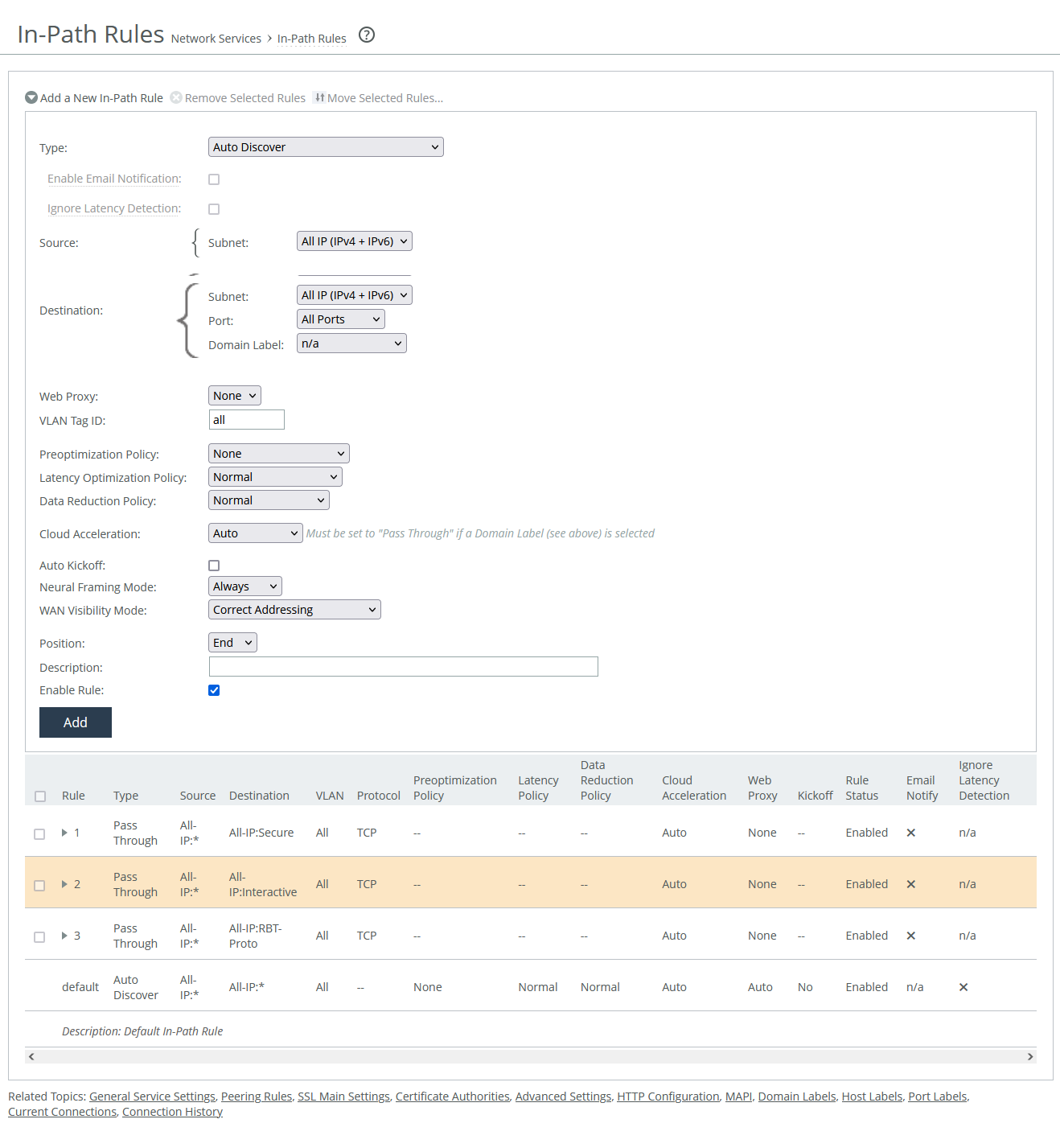

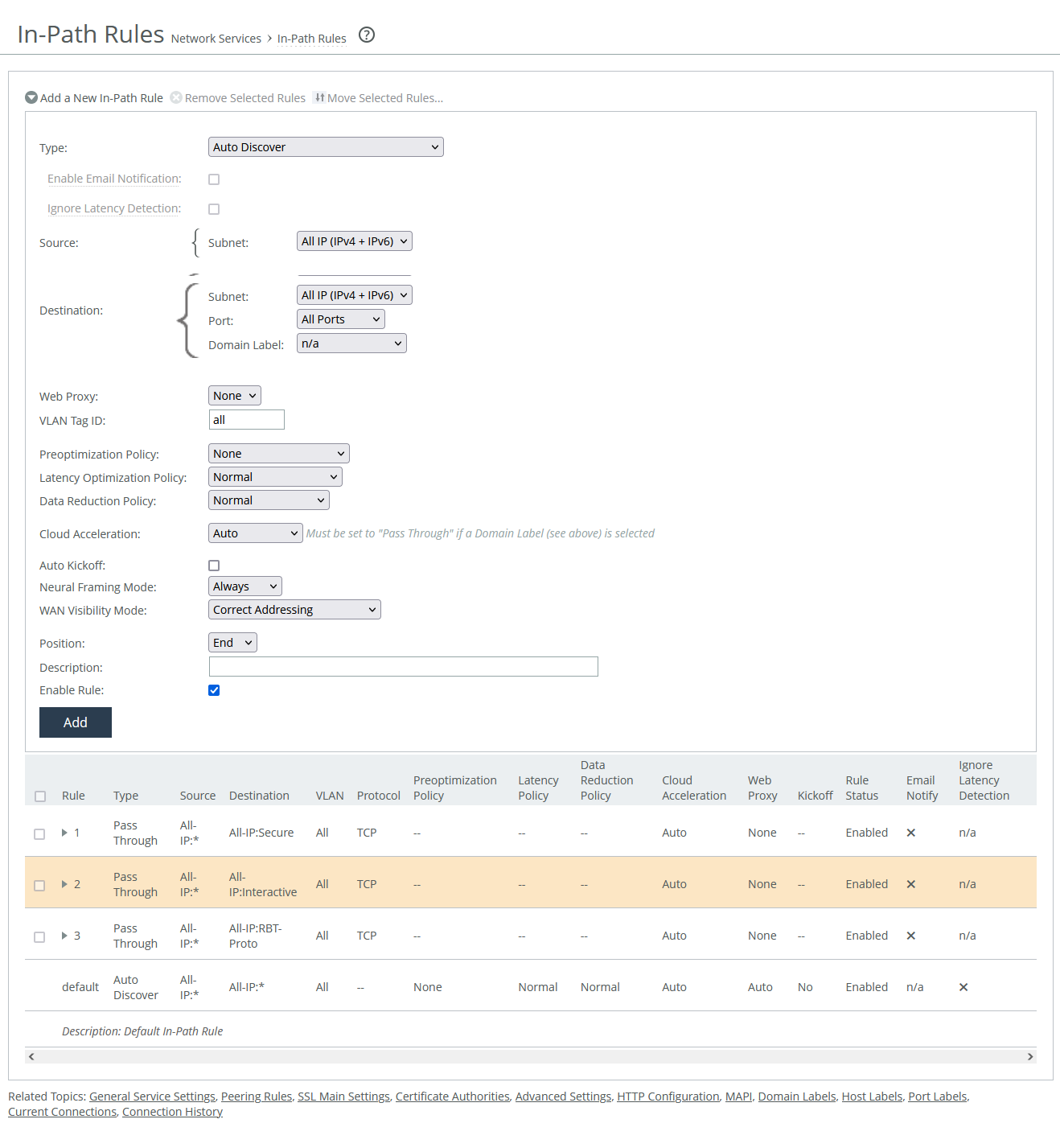

About in-path rule settings

In-path rule settings are under Optimization > Network Services: In-Path Rules.

In-path rules settings

Type

Specifies the general behavior of the appliance toward new connections.

Enable email notification

Enables the appliance to send notification and reminder emails to the specified users. Reminder emails, when enabled, are sent every 15 days. Use the email notify passthrough rule notify-timer <notification-time-in-days> command to change email frequency. Available only to pass-through rules. Requires that email notification is enabled under administration settings.

Ignore latency detection

Deactivates latency detection on matching connections.

Source and Destination

These settings match client requests based on their origin and destination. Available options differ slightly depending on the type of rule.

In a virtual in-path configuration using packet-mode optimization, don’t use the wildcard All IP option for source and destination. Doing so can create a loop between the server-side and client-side appliances. Instead, configure the rule using the local subnet on the LAN side of the appliance.

Subnet

Specifies the subnet IP address and netmask for the source and destination network:

• Host label is a logical group of hostnames and subnets that represent a collection of servers or services. You can use both host labels and domain labels within a single in-path rule. RiOS versions 9.16.0 and later support IPv6 for host labels. RiOS versions prior to 9.16.0 require that you change the source to All IPv4 or a specific IPv4 subnet. Labels are optionally configured.

• SaaS application defines an in-path rule for SaaS application acceleration through SaaS Accelerator Manager (SAM). Requires that the appliance is registered with a SAM.

• Port specifies all ports, a specific port, or a port label. Valid port numbers are from 1 to 65535. For port labels, a list of configured labels appears.

Domain label

Specifies a domain label for autodiscover, passthrough, or fixed-target rules. The connection must match both the destination and the domain label. When the entries in the domain label don’t match, the system looks to the next rule. Domain labels apply only to HTTP and HTTPS traffic. When the destination port is set to All, the rule defaults to ports 80 (HTTP) and 443 (HTTPS). Optionally, specify specific ports or port labels. RiOS versions 9.16.0 and later support IPv6 for domain labels.
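The matching behavior described above can be illustrated with a minimal sketch. It is not the appliance's implementation: the `Rule` class, the `first_match` function, and the use of wildcard patterns for domain label entries are assumptions made for illustration only.

```python
import ipaddress
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import List, Optional, Sequence

# When a rule uses a domain label and the destination port is All,
# the rule defaults to the HTTP and HTTPS ports.
DEFAULT_DOMAIN_LABEL_PORTS = (80, 443)


@dataclass
class Rule:
    """Hypothetical, simplified model of an in-path rule."""
    name: str
    destination: str                               # subnet in CIDR form
    domain_label: Optional[Sequence[str]] = None   # assumed: hostname patterns
    ports: Optional[Sequence[int]] = None          # None stands for "All"


def rule_matches(rule: Rule, dest_ip: str, dest_port: int, hostname: str) -> bool:
    """A connection must match both the destination and the domain label."""
    if ipaddress.ip_address(dest_ip) not in ipaddress.ip_network(rule.destination):
        return False
    ports = rule.ports
    if rule.domain_label is not None:
        if ports is None:
            ports = DEFAULT_DOMAIN_LABEL_PORTS
        host = hostname.lower()
        if not any(fnmatchcase(host, p.lower()) for p in rule.domain_label):
            return False
    return ports is None or dest_port in ports


def first_match(rules: List[Rule], dest_ip: str, dest_port: int,
                hostname: str) -> Optional[str]:
    """Walk the rules in list order; a non-matching rule falls through to the next."""
    for rule in rules:
        if rule_matches(rule, dest_ip, dest_port, hostname):
            return rule.name
    return None


rules = [
    Rule("web-label", "0.0.0.0/0", domain_label=["*.example.com"]),
    Rule("default", "0.0.0.0/0"),
]
```

With these two rules, a connection to `app.example.com` on port 443 matches `web-label`, while the same host on port 8080 falls through to `default` because the domain label rule covers only ports 80 and 443.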

Web proxy

Enables the appliance to use a single-ended web proxy to transparently intercept traffic bound to the internet. It is available to autodiscover and pass-through rules.

• None does not send traffic through the web proxy.

• Force sends traffic for any matching private or intranet IP address and port through the web proxy. When enabled, full and port transparency WAN visibility modes have no impact. Pass-through rules only. Port labels supported.

• Auto sends all internet-bound traffic (on port 80 or 443) destined to public IP addresses through the web proxy. When enabled on an Auto Discover rule, full and port transparency WAN visibility modes have no impact. When the traffic can’t be prioritized through the web proxy, autodiscovery occurs and the full or port transparency modes are used.
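As a rough illustration of how the three web proxy options differ, the sketch below decides whether a connection that already matched a rule would be sent through the web proxy. The function name and the mode strings are hypothetical, and "public IP address" is approximated here with Python's `is_global` property, which may not match the appliance's own classification.

```python
import ipaddress

WEB_PORTS = {80, 443}  # ports that the Auto option applies to


def uses_web_proxy(mode: str, dest_ip: str, dest_port: int) -> bool:
    """Return True if a rule-matched connection would go through the web proxy."""
    address = ipaddress.ip_address(dest_ip)
    if mode == "none":
        # None never sends traffic through the web proxy.
        return False
    if mode == "auto":
        # Auto covers internet-bound traffic on port 80 or 443 to public addresses.
        return address.is_global and dest_port in WEB_PORTS
    if mode == "force":
        # Force applies to whatever address and port the rule itself matched,
        # including private or intranet destinations.
        return True
    raise ValueError(f"unknown web proxy mode: {mode}")
```

For example, under Auto a connection to a public address on port 443 is proxied, but a connection to `10.0.0.5` is not; under Force the private destination is proxied once the rule matches.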

Target appliance IP address

Specifies the IP address for the remote peer appliance. When the protocol is TCP and you don’t specify an IP address, the rule defaults to all IPv6 addresses.

Port

Specifies the target port number.

Backup appliance IP address

Settings are similar to those for target appliances.

Protocol

Specifies a traffic protocol: TCP, UDP, or any.

Preoptimization policy

Specifies a preoptimization policy for Oracle Forms or SSL traffic:

• None disables preoptimization. On appliances where SSL acceleration is enabled, the preoptimization policy for SSL is always enabled for traffic on port 443, even when an in-path rule preoptimization policy is set to None. To disable the SSL preoptimization for traffic to port 443, disable SSL optimization (client-side or server-side appliance), or set SSL Capability in the peering rule on the server-side appliance to No Check.

• Oracle Forms enables preoptimization for Oracle Forms. IPv6 is not supported.

• Oracle Forms over SSL enables preoptimization for both Oracle Forms and SSL-encrypted traffic through SSL secure ports. Requires that the latency optimization policy is set to HTTP. IPv6 is not supported. If the server is running over a standard secure port (port 443), this in-path rule must be placed before the default secure port pass-through rule.

• SSL enables preoptimization for SSL encrypted traffic through SSL secure ports on the appliance.

Latency optimization policy

Activates latency optimization for the selected traffic type:

• Normal enables latency optimizations for all supported traffic. Use this option and enable the Auto-Detect Outlook Anywhere Connections option under MAPI settings to automatically detect Outlook Anywhere and HTTP connections. This is the default value.

• HTTP enables latency optimization for HTTP on connections matching this rule.

• Outlook Anywhere enables latency optimization for RPC over HTTP/HTTPS Outlook Anywhere connections matching this rule. Use this option for environments where an IIS server is used only as an RPC Proxy, or that use asymmetric routing, connection forwarding, or Interceptor. You’ll also need to disable the auto-detect option under MAPI settings.

• Citrix enables Citrix-over-SSL latency optimization. Requires that the client-side appliance has an in-path rule that specifies the Citrix Access Gateway IP address. Requires that this policy is selected for in-path rules on the client-side and server-side appliances, and that the preoptimization policy is set to SSL. SSL must be enabled on the Citrix Access Gateway. On the server-side SteelHead, enable SSL and install the SSL server certificate for the Citrix Access Gateway.

• Exchange autodetect enables latency optimization for MAPI (Outlook Anywhere, MAPI over HTTP), autodiscover, and HTTP traffic. Requires that SSL optimization is enabled under MAPI settings, and that the Exchange Server’s SSL certificate is installed on the server-side appliance.

• None disables latency optimization for all traffic types on matching connections.

Data reduction policy

Enables the selected optimization method:

• Normal enables SDR and LZ optimizations for all supported traffic. This is the default value.

• SDR-only enables SDR and disables LZ compression.

• SDR-M performs data reduction entirely in memory, which prevents the appliance from reading and writing to and from the disk. Enabling this option can yield high LAN-side throughput because it eliminates all disk latency. Useful for interactive traffic, or other small amounts of data, and point-to-point replication during off-peak hours when both the server-side and client-side appliances have similar capacities.

• Compression-only performs LZ compression; it doesn’t perform SDR.

• None disables SDR and LZ optimizations for all traffic on matching connections.

Cloud acceleration

Specifies whether the connection is accelerated by the cloud or passed through.

Auto kickoff

Resets preexisting matched connections to force reconnection.

Neural framing mode

Enables the system to select the optimal packet framing boundaries for SDR by creating a set of heuristics to intelligently determine the optimal moment to flush TCP buffers:

• Never does not use the Nagle algorithm. Neural heuristics are computed in this mode but aren’t used. In general, this setting works well with time-sensitive and chatty or real-time traffic.

• Always passes all data to the Nagle algorithm codec, which attempts to coalesce consume calls to achieve better fingerprinting, if needed. This is the default value. IPv6 is not supported.

• TCP Hints encodes data from partial frame packets, or packets with the TCP PUSH flag, instead of immediately coalescing it. Neural heuristics are computed but aren’t used. IPv6 is not supported.

• Dynamic adjusts the Nagle parameters to discern the optimum algorithm for a particular type of traffic and switches to the best algorithm based on traffic type. IPv6 is not supported.

WAN visibility mode

Enables visibility of how packets traversing the WAN are addressed:

• Correct addressing disables WAN visibility and uses SteelHead IP addresses and port numbers in the TCP/IP packet header fields for accelerated traffic in both directions across the WAN. This is the default value.

• Port transparency preserves server port numbers in the TCP/IP header fields for accelerated traffic in both directions across the WAN. IPv6 transparency requires that Enable Enhanced IPv6 Auto-Discovery is enabled under Peering Rules. Use this mode when you want to enforce QoS policies based on destination ports. If your WAN router is following traffic classification rules written in terms of client and network addresses, port transparency enables your routers to use existing rules to classify the traffic without any changes.

• Full transparency with reset enables full address and port transparency and resets the connection probe, which allows the fully transparent, secure inner channel to traverse the firewall unimpeded.

Position

Specifies the rule’s hierarchical position in the list. Place rules that use domain labels below others. The default rule can’t be removed.

Description

Specifies a description for the rule to facilitate administration.

Enable rule

Enables or disables the rule.

Web Proxy

This setting is available to autodiscover and pass-through rules. It enables client-side appliances to send all traffic bound to the internet through a single-ended web proxy. Enabling the web proxy improves performance through web object caching and SSL decryption.

Web object caching includes all objects delivered through HTTP or HTTPS that can be cached, including large video objects like static video on demand (VoD) objects and YouTube video. Cache space is determined by the appliance model. An object remains in the cache for the duration specified in the cache control header. When the time limit expires, the appliance removes the object from the cache. The proxy cache is separate from the data store. When objects for a given website are already present in the cache, the system terminates the connection locally and serves the content from the cache. This saves the connection setup time and also reduces the bytes to be fetched over the WAN. The proxy cache is persistent; its contents remain intact after service and appliance restarts.
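The expiry behavior described above can be sketched as a small cache keyed by URL. This is a simplified illustration, not the appliance's cache: the `ObjectCache` class is hypothetical, only the `max-age` and `no-store` directives are considered, and expired objects are removed lazily on the next lookup rather than at the moment they expire.

```python
import re
import time
from typing import Callable, Dict, Optional, Tuple


class ObjectCache:
    """Toy web object cache that honors the Cache-Control max-age directive."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: Dict[str, Tuple[float, bytes]] = {}

    def store(self, url: str, body: bytes, cache_control: str) -> bool:
        """Cache the object if the header allows it; return True when stored."""
        match = re.search(r"max-age=(\d+)", cache_control)
        if match is None or "no-store" in cache_control:
            return False
        expires_at = self._clock() + int(match.group(1))
        self._entries[url] = (expires_at, body)
        return True

    def fetch(self, url: str) -> Optional[bytes]:
        """Return the cached body, or None if it is absent or has expired."""
        entry = self._entries.get(url)
        if entry is None:
            return None
        expires_at, body = entry
        if self._clock() >= expires_at:
            del self._entries[url]  # time limit reached: drop the object
            return None
        return body
```

A lookup that returns a body corresponds to the case where the connection is terminated locally and the content is served from the cache; a `None` result means the object must be fetched over the WAN.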

Single-ended and asymmetric topologies are supported, and a server-side peer is not required. VCX-10 through VCX-90 virtual models support web proxy.

Web proxy connections appear in the Current Connections report.

Cloud acceleration

You don’t need to add an in-path rule unless you want acceleration for specific users, but not others. Select one of these choices from the drop-down list:

• Auto—If the in-path rule matches, the connection is optimized by the SaaS appliance.

• Pass Through—If the in-path rule matches, the connection is not optimized by the SaaS appliance, but it follows the other rule parameters so that the connection might be optimized by this appliance, with other appliances in the network, or it might be passed through.

Domain labels and cloud acceleration are mutually exclusive. When using a domain label, the Management Console dims this control and sets it to Pass Through. You can set cloud acceleration to Auto when the domain label is set to n/a.
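The interaction between the domain label and the cloud acceleration setting can be summarized in a few lines. This sketch only mirrors the behavior described above; the function name and the string values are hypothetical, and `None` stands in for a domain label of n/a.

```python
from typing import Optional


def effective_cloud_acceleration(requested: str, domain_label: Optional[str]) -> str:
    """Return the cloud acceleration value that takes effect for a rule."""
    if requested not in ("auto", "pass_through"):
        raise ValueError(f"unknown cloud acceleration value: {requested}")
    if domain_label is not None:
        # Domain labels and cloud acceleration are mutually exclusive,
        # so a rule with a domain label is always Pass Through.
        return "pass_through"
    return requested
```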

Neural Framing Mode

Optionally, if the rule type is Auto-Discover or Fixed Target, you can select a neural framing mode for the in-path rule. Neural framing enables the system to select the optimal packet framing boundaries for SDR. Neural framing creates a set of heuristics to intelligently determine the optimal moment to flush TCP buffers. The system continuously evaluates these heuristics and uses the optimal heuristic to maximize the amount of buffered data transmitted in each flush, while minimizing the amount of idle time that the data sits in the buffer.

• Never—Do not use the Nagle algorithm. The Nagle algorithm is a means of improving the efficiency of TCP/IP networks by reducing the number of packets that need to be sent over the network. It works by combining a number of small outgoing messages and sending them all at once. All the data is immediately encoded without waiting for timers to fire or application buffers to fill past a specified threshold. Neural heuristics are computed in this mode but aren’t used. In general, this setting works well with time-sensitive and chatty or real-time traffic.

• Always—Use the Nagle algorithm. This is the default setting. All data is passed to the codec, which attempts to coalesce consume calls (if needed) to achieve better fingerprinting. A timer (6 ms) backs up the codec and causes leftover data to be consumed. Neural heuristics are computed in this mode but aren’t used. This mode is not compatible with IPv6.

• TCP Hints—If data is received from a partial frame packet or a packet with the TCP PUSH flag set, the encoder encodes the data instead of immediately coalescing it. Neural heuristics are computed in this mode but aren’t used. This mode is not compatible with IPv6.

• Dynamic—Dynamically adjust the Nagle parameters. In this option, the system discerns the optimum algorithm for a particular type of traffic and switches to the best algorithm based on traffic characteristic changes. This mode is not compatible with IPv6.

For different types of traffic, one algorithm might be better than others. The considerations include: latency added to the connection, compression, and SDR performance.

To configure neural framing for an FTP data channel, define an in-path rule with the destination port 20 and set its data reduction policy. To configure neural framing for a MAPI data channel, define an in-path rule with the destination port 7830 and set its data reduction policy.
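To make the difference between the Never and Always modes more concrete, the following sketch simulates how a stream of small writes might be grouped before encoding. It is an illustration under stated assumptions, not the codec's actual logic: the `frame_writes` function and the byte `threshold` value are hypothetical, and only the 6 ms backup timer comes from the description above. The TCP Hints and Dynamic modes are not modeled.

```python
from typing import List, Tuple

TIMER_MS = 6  # backup timer that causes leftover data to be consumed


def frame_writes(writes: List[Tuple[int, bytes]], mode: str,
                 threshold: int = 16) -> List[bytes]:
    """Group (time_ms, data) writes into the chunks handed to the encoder.

    mode "never":  every write is encoded immediately.
    mode "always": writes are coalesced until the buffer reaches `threshold`
                   bytes (hypothetical value) or the backup timer expires.
    """
    if mode == "never":
        return [data for _, data in writes]
    if mode != "always":
        raise ValueError(f"mode not modeled in this sketch: {mode}")

    frames: List[bytes] = []
    buffer = b""
    started_at = 0
    for now, data in writes:
        # The timer fired before this write arrived: flush the leftovers.
        if buffer and now - started_at >= TIMER_MS:
            frames.append(buffer)
            buffer = b""
        if not buffer:
            started_at = now
        buffer += data
        # The buffer filled past the threshold: flush right away.
        if len(buffer) >= threshold:
            frames.append(buffer)
            buffer = b""
    if buffer:
        frames.append(buffer)  # the timer eventually flushes the remainder
    return frames
```

Given two small writes 1 ms apart followed by a third write 10 ms after the first, Never produces three chunks, while Always coalesces the first two into one chunk and sends the third separately. Fewer, larger chunks tend to help fingerprinting at the cost of added latency, which is the trade-off the modes let you choose between.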

WAN Visibility Mode

Use port transparency if you want to manage and enforce QoS policies that are based on destination ports. If your WAN router is following traffic classification rules written in terms of client and network addresses, port transparency enables your routers to use existing rules to classify the traffic without any changes.

Port transparency enables network analyzers deployed within the WAN (between the SteelHeads) to monitor network activity and to capture statistics for reporting by inspecting traffic according to its original TCP port number. Dedicated port configurations on your appliances are not required. Port transparency only provides server port visibility. It doesn’t provide client and server IP address visibility, nor does it provide client port visibility.

Full address transparency preserves your client and server IP addresses and port numbers in the TCP/IP header fields for optimized traffic in both directions across the WAN. It also preserves VLAN tags. Traffic is optimized while these TCP/IP header fields appear to be unchanged. Routers and network monitoring devices deployed in the WAN segment between the communicating appliances can view these preserved fields.

If both port transparency and full address transparency are acceptable solutions, port transparency is preferable. Port transparency avoids potential networking risks that are inherent to enabling full address transparency. However, if you must see your client or server IP addresses across the WAN, full transparency is your only configuration option.

Enabling full address transparency requires symmetrical traffic flows between the client and server. If any asymmetry exists on the network, enabling full address transparency might yield unexpected results, including loss of connectivity.
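The three addressing behaviors can be compared by looking at what a device in the WAN segment would see in the TCP/IP header for client-to-server traffic. The sketch below is illustrative only: the `Endpoint` and `WanHeader` types, the mode strings, and all addresses and port numbers are placeholders, not values taken from a real deployment.

```python
from typing import NamedTuple


class Endpoint(NamedTuple):
    ip: str
    port: int


class WanHeader(NamedTuple):
    src: Endpoint
    dst: Endpoint


def wan_header(mode: str, client: Endpoint, server: Endpoint,
               client_sh: Endpoint, server_sh: Endpoint) -> WanHeader:
    """Return the addressing visible on the WAN for client-to-server traffic."""
    if mode == "correct":
        # Correct addressing: SteelHead IP addresses and ports in both fields.
        return WanHeader(client_sh, server_sh)
    if mode == "port":
        # Port transparency: only the server port is preserved.
        return WanHeader(client_sh, Endpoint(server_sh.ip, server.port))
    if mode == "full":
        # Full transparency: client and server IP addresses and ports are preserved.
        return WanHeader(client, server)
    raise ValueError(f"unknown WAN visibility mode: {mode}")


# Placeholder endpoints for illustration.
client = Endpoint("10.1.1.20", 51000)
server = Endpoint("10.2.2.30", 443)
client_sh = Endpoint("10.1.1.5", 40000)
server_sh = Endpoint("10.2.2.5", 7800)
```

With port transparency, a WAN router sees destination port 443 but still sees the appliance addresses, which is why existing port-based QoS rules keep working while IP-based monitoring does not gain visibility.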

Full Transparency with a stateful firewall is supported. A stateful firewall examines packet headers, stores information, and then validates subsequent packets against this information. If your system uses a stateful firewall, use Full Transparency with Reset.

Full transparency with reset enables full address and port transparency and also sends a forward reset between receiving the probe response and sending the transparent inner channel SYN. This mode ensures the firewall doesn’t block inner transparent connections because of information stored in the probe connection. The forward reset is necessary because the probe connection and inner connection use the same IP addresses and ports and both map to the same firewall connection. The reset clears the probe connection created by the SteelHead and allows for the full transparent inner connection to traverse the firewall.
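The role of the forward reset can be illustrated with a toy model of a stateful firewall. Real firewalls differ in how they track and validate connections, so treat this purely as a sketch: the `ToyStatefulFirewall` class and its rule of rejecting a new SYN for a flow it already tracks are assumptions chosen to show why the probe and the inner connection conflict.

```python
from typing import Set, Tuple

Flow = Tuple[str, int, str, int]  # client IP, client port, server IP, server port


class ToyStatefulFirewall:
    """Tracks flows by their addresses and ports, as a stateful firewall might."""

    def __init__(self) -> None:
        self._flows: Set[Flow] = set()

    def packet(self, flow: Flow, flag: str) -> bool:
        """Return True if the packet is allowed through."""
        if flag == "SYN":
            if flow in self._flows:
                return False  # conflicts with state stored for an earlier connection
            self._flows.add(flow)
            return True
        if flag == "RST":
            self._flows.discard(flow)  # the reset clears the stored connection
            return True
        return flow in self._flows


def inner_channel_allowed(with_reset: bool) -> bool:
    """Replay probe, optional forward reset, then the transparent inner SYN."""
    firewall = ToyStatefulFirewall()
    flow: Flow = ("10.1.1.20", 51000, "10.2.2.30", 443)  # placeholder values
    firewall.packet(flow, "SYN")          # autodiscovery probe
    if with_reset:
        firewall.packet(flow, "RST")      # forward reset after the probe response
    return firewall.packet(flow, "SYN")   # inner channel uses the same addresses and ports
```

In this model the inner channel SYN is rejected without the reset and accepted with it, because both connections map to the same firewall entry.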

To enable full transparency globally by default, create an in-path autodiscover rule, selecting full transparency for the visibility mode, and then place it above the default in-path rule and after the Secure, Interactive, and RBT-Proto rules. You can configure an appliance for WAN visibility even if the server-side appliance doesn’t support it, but the connection is not transparent.

WAN visibility works with autodiscover in-path rules only. It doesn’t work with fixed-target rules or server-side out-of-path configurations.