Overview of the Interceptor

The Interceptor is an in-path clustering solution you can use to distribute optimized traffic to a local group of SteelHeads. The Interceptor doesn’t perform optimization itself. Therefore, you can use it in very demanding network environments with extremely high throughput requirements. The Interceptor works in conjunction with the SteelHead and offers several benefits over other clustering techniques, such as WCCP or Layer-4 switching, including native support for asymmetrically routed traffic flows.

This chapter includes the following sections:

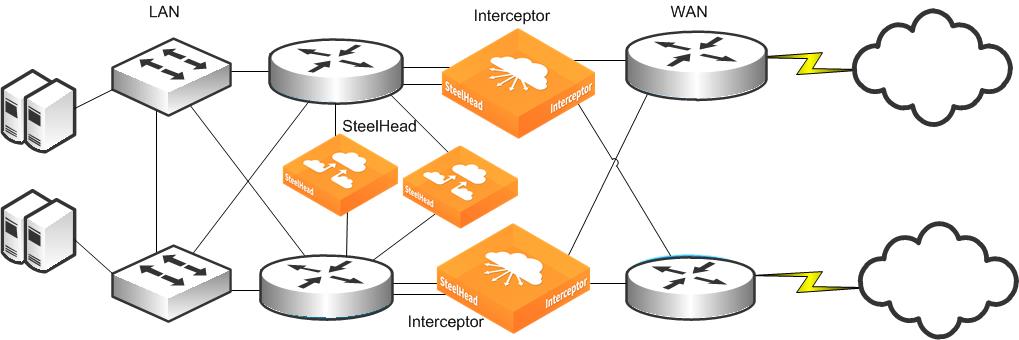

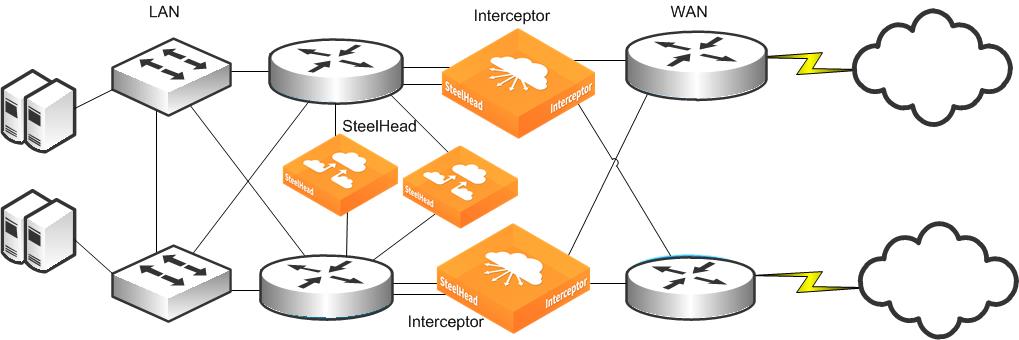

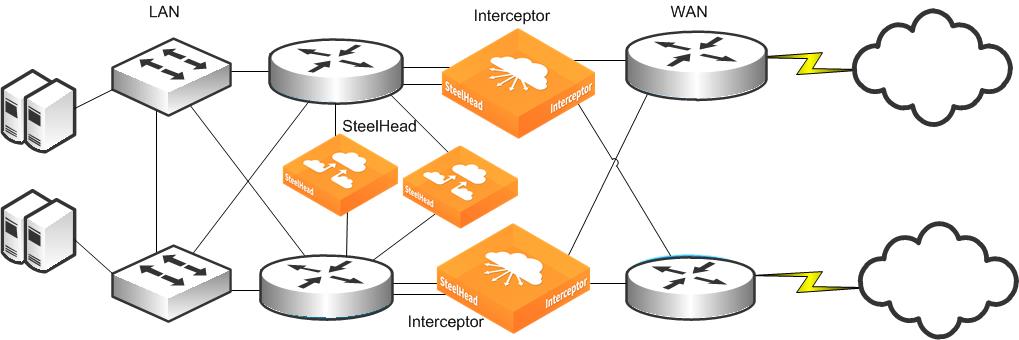

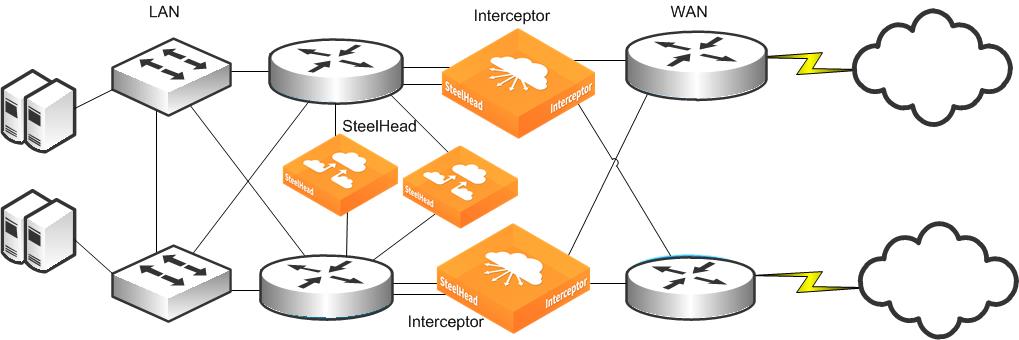

Interceptor deployment example

Figure: Basic Interceptor deployment example shows an example of an Interceptor deployment. Traffic between local and remote hosts can use one or both links to the WAN. The deployed Interceptors are configured to work together with the SteelHeads to ensure that optimization occurs, regardless of the path used. The SteelHeads have redundant physical connections to the LAN infrastructure to ensure that a single failure doesn’t prevent optimization.

Figure: Basic Interceptor deployment example

You can deploy Interceptors in an in-path or a virtual in-path configuration using the same network bypass interface cards as SteelHeads in the same highly available and redundant configurations: 802.1Q links, across multiple in-path links, in series, in parallel, or in a virtual in-path configuration with Web Cache Communication Protocol (WCCP) or policy-based routing (PBR). In addition, you can deploy Interceptors physically in-path on EtherChannel or other link aggregation trunks. Interceptors use connection forwarding between themselves and the SteelHead cluster to support detection, redirection, and optimization of asymmetrically routed traffic flows.

Methods for distributing TCP connections

The Interceptor provides several methods for distributing TCP connections to SteelHeads. You can send traffic to one or more SteelHeads based on source IP address, destination IP address, destination port, or VLAN tag. For example, you can configure the Interceptor to redirect traffic for large replication jobs to SteelHeads tuned to data protection workloads, while redirecting traffic for user application optimization to different SteelHeads.
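
The matching criteria above can be pictured as an ordered list of rules. The following Python sketch is illustrative only: the `Rule` class, the `select_targets` function, the first-match evaluation order, and the SteelHead names, subnet, port, and VLAN values are all assumptions made for this example, not the Interceptor's actual rule syntax or behavior.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional, Tuple


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule; a criterion left as None matches anything."""
    targets: Tuple[str, ...]          # SteelHeads that receive matching traffic
    src_net: Optional[str] = None     # source subnet in CIDR form
    dst_net: Optional[str] = None     # destination subnet in CIDR form
    dst_port: Optional[int] = None    # destination TCP port
    vlan: Optional[int] = None        # 802.1Q VLAN tag

    def matches(self, src: str, dst: str, port: int, vlan: int) -> bool:
        if self.src_net is not None and ip_address(src) not in ip_network(self.src_net):
            return False
        if self.dst_net is not None and ip_address(dst) not in ip_network(self.dst_net):
            return False
        if self.dst_port is not None and port != self.dst_port:
            return False
        if self.vlan is not None and vlan != self.vlan:
            return False
        return True


def select_targets(rules, src, dst, port, vlan, default=()):
    """Return the targets of the first matching rule (first match is an assumption)."""
    for rule in rules:
        if rule.matches(src, dst, port, vlan):
            return rule.targets
    return default


# Hypothetical rule set: replication traffic goes to one pair of SteelHeads,
# user traffic on VLAN 120 goes to another.
RULES = [
    Rule(targets=("sh-replication-1", "sh-replication-2"),
         dst_net="10.50.0.0/16", dst_port=3260),
    Rule(targets=("sh-users-1",), vlan=120),
]
```

With these example rules, `select_targets(RULES, "192.168.1.10", "10.50.3.4", 3260, 10)` returns the two replication SteelHeads, and a connection that matches no rule returns the empty default.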

Interceptor and optimized connections

Because the Interceptor works in conjunction with SteelHeads, it has several unique capabilities compared to other clustering solutions. Clustered SteelHeads determine which connections are optimized with autodiscovery, and they can share this information with the Interceptor so that redirection occurs only for optimized connections. The Interceptor can dynamically determine and direct traffic for optimization to the least-loaded SteelHeads based on their resource usage. To help drive efficiency of the clustered SteelHead data store, the Interceptor maintains peer affinity between the clustered SteelHeads and remote SteelHeads (or remote SteelCentral Controller for SteelHead Mobile). When the Interceptor chooses a SteelHead in the cluster to optimize a flow, it prefers a clustered SteelHead that has previously been paired with the remote SteelHead connection.
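
One way to picture how peer affinity and load-based selection might combine is the sketch below. It is a simplified model, not the Interceptor's algorithm: the `choose_steelhead` function, the single numeric load value per SteelHead, and the policy of trying previously paired SteelHeads first and falling back to the least-loaded one are all assumptions.

```python
def choose_steelhead(remote_peer, load, affinity):
    """Pick a clustered SteelHead for a new flow from a remote peer.

    load:     dict of SteelHead name -> resource usage (0.0 to 1.0), hypothetical metric
    affinity: dict of remote peer -> set of SteelHeads previously paired with it
    """
    # Prefer SteelHeads that have already been paired with this remote peer,
    # because their data store is more likely to be warm for that peer.
    candidates = [name for name in affinity.get(remote_peer, ()) if name in load]
    if not candidates:
        # No usable history for this peer: consider every clustered SteelHead.
        candidates = list(load)
    if not candidates:
        return None
    # Among the candidates, take the least-loaded one (name breaks ties).
    chosen = min(candidates, key=lambda name: (load[name], name))
    affinity.setdefault(remote_peer, set()).add(chosen)
    return chosen
```

In this model, a remote peer that was previously paired with a busy SteelHead still returns to it, while a new peer lands on the least-loaded SteelHead and is remembered for later flows.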

Support for real-time cluster administration

The Interceptor supports real-time cluster administration, enabling you to add, modify, or remove a clustered SteelHead with no disruption to operations in progress, except on the optimized connections of the target SteelHead. You can put a clustered SteelHead in paused mode, so that no new connections are redirected to it, while leaving configuration rules in place. You can place SteelHeads that are part of the cluster anywhere in the data center network, including in a separate subnet. Another example is to use Interceptors across two geographically close data centers connected through high-speed links, such as 1-Gbps or 10-Gbps MAN links, and use the connection-sharing abilities to treat the two locations as one logical site. This connection provides optimization even in the case of network asymmetry (traffic coming in one location and out the other), while leveraging the same cluster of SteelHeads.

Interceptors and network interface cards

The Interceptor uses the same network interface cards (NICs) as the SteelHeads, so the Interceptor can support several different physical connectivity options, including copper or fiber Ethernet ports and 10-Gbps fiber interface cards. The IC9350 has four 1-Gbps copper ports (two in-path interfaces, each with a LAN and WAN port) and expansion slots that allow up to four additional network interface cards. The IC9600 includes four 1-Gbps copper ports (two in-path interfaces, each with a LAN and WAN port) in one of the expansion slots. The IC9600 can use all six expansion slots, allowing any combination of 1G and 10G cards. For example, you can use all six expansion slots for 10G interfaces by replacing the NIC that ships with the appliance. Available network cards include four-port 1‑Gbps or two-port 10-Gbps cards of different media.

Note: We recommend that if you use the 10-Gbps cards on the IC9350, you use the slots in the following order: 2, 3, 5, and 1. If you use 1-Gbps cards, you can use slots 1 through 4 in any order.

With network interface cards that support hardware assist, designated pass-through traffic can be processed entirely in the hardware, allowing close to line-rate speeds for pass-through traffic.

For more information about NICs, see the Network and Storage Card Installation Guide.

Interceptor support for system failure recovery

The Interceptor supports the same concepts of fail-to-block and fail-to-wire as the SteelHead. If a serious failure occurs on the Interceptor (including loss of power), it either passes traffic through for fail-to-wire mode or prevents traffic from passing for fail-to-block mode.

Interceptor throughput

Prior to Interceptor 3.0, the IC9350 supported up to 12 Gbps of total system throughput. Interceptor 3.0 introduces a software-packet-processing enhancement feature called Xbridge. Xbridge, when using 10‑Gbps interfaces, provides up to 40 Gbps of total throughput: 20 Gbps inbound and 20 Gbps outbound (you can enable Xbridge with the xbridge enable command). Pass-through traffic that is hardware assisted (using the 10-Gbps interface network cards) doesn’t count toward this total.

The IC9600 supports up to 40 Gbps of effective LAN throughput. This throughput equates to 40 Gbps of traffic to the pool of SteelHeads for optimization. The IC9600 requires a minimum of five 10 Gbps interfaces to reach 40 Gbps effective LAN throughput, assuming the SteelHeads are on the LAN side for the optimized traffic between local and remote SteelHead.

Note: We recommend Xbridge in 10G environments and as necessary in hardware-assisted pass-through traffic.

The IC9350 and IC9600 each support clusters of up to 25 SteelHeads and can redirect 1,000,000 simultaneous TCP connections.

Support for network virtualization

Interceptor 4.0 and later provides VLAN segregation to support network environments in which you want to use network virtualization, also known as virtual routing and forwarding (VRF). The Interceptor supports virtualization by creating an instance that corresponds to a VRF and associating the virtual local area networks (VLANs) with that instance. In this way, the Interceptor can maintain the 802.1Q VLAN tag and support multitenancy, overlapping IP addresses between instances, or situations in which you need traffic separation.

For more information about VLAN segregation, see VLAN Segregation.

Comparing WCCP, PBR, and Layer-4 redirection without SteelHeads

The Interceptor redirects packets to a SteelHead so that you can place the SteelHead virtually in the data transmission path of TCP connections. Although there are other virtual in-path deployment methods for the SteelHead (including WCCP, PBR, and Layer-4 redirection), using the Interceptor combines and adds many advantages while avoiding many disadvantages of these methods.

For example, when planning WCCP deployments, you must consider whether all of the redirectors (routers or switches) are in the same subnet as the in-path interface IP address of the SteelHead, because any Layer‑3 hops between the redirectors and the SteelHead require GRE encapsulation on directed and returned traffic. This redirection has both a performance impact on the redirectors and an impact on the maximum data that can be included in a packet. For many networks, it is common for the WAN connecting devices—on which you can configure WCCP—to be on completely different subnets. For this reason, most WCCP deployments must use GRE as a redirection mechanism, especially to support asymmetric flows across the paths to the WAN.
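
The effect of GRE encapsulation on packet size can be worked through with a little arithmetic. The sketch below assumes IPv4 and TCP headers without options and the basic 4-byte GRE header; any additional WCCP-specific header is not modeled, so treat the results as an upper bound on the usable payload. The function names are made up for this example.

```python
IPV4_HEADER = 20  # bytes, IPv4 header without options
TCP_HEADER = 20   # bytes, TCP header without options
GRE_HEADER = 4    # bytes, basic GRE header without optional fields


def max_inner_packet(link_mtu: int, gre: bool = True) -> int:
    """Largest original IP packet that fits in one frame after encapsulation."""
    # GRE redirection wraps the original packet in a new IPv4 header plus a GRE header.
    overhead = IPV4_HEADER + GRE_HEADER if gre else 0
    return link_mtu - overhead


def max_tcp_mss(link_mtu: int, gre: bool = True) -> int:
    """Largest TCP payload per segment that avoids fragmentation."""
    return max_inner_packet(link_mtu, gre) - IPV4_HEADER - TCP_HEADER
```

On a 1500-byte MTU link, `max_tcp_mss(1500, gre=False)` gives 1460 bytes, while `max_tcp_mss(1500)` gives 1436 bytes. Under these assumptions, full-size 1500-byte packets no longer fit once they are GRE encapsulated.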

For Interceptor deployments, Layer-3 hops don’t cause a performance difference on the redirecting Interceptor. Additionally, the redirection protocol is designed so that full-size packets can be redirected to the SteelHead without requiring any MSS or MTU adjustments on any optimized or nonoptimized traffic. This redirection simplifies many of the considerations for large or complex networks.

For more information about changes to the MTU and optimized traffic TCP MSS settings, and MTU guidelines, see the Riverbed Knowledge Base article MTU guidelines for Interceptor in-path interfaces at https://supportkb.riverbed.com/support/index?page=content&id=S14463.

Behavior of pass-through traffic

Another important example is the behavior of pass-through traffic. For WCCP, PBR, or Layer-4 redirection methods, there is no way to signal the redirector that a particular flow should not be redirected to a SteelHead, despite the fact that a SteelHead, through the autodiscovery process, determines in the first few packets whether a flow can be optimized or not. Thus the redirectors might send large amounts of traffic unnecessarily and wastefully to a SteelHead. To avoid this unnecessary traffic, you must carefully use access lists or other filter methods to limit the redirected traffic to only those sites or applications that are optimized.

In Interceptor deployments, the appliances redirect the few packets at the beginning of a connection so that the SteelHead can perform autodiscovery. The SteelHead signals the Interceptor if future packets for the flow must be redirected. If the SteelHead doesn’t request future packets for the flow, no packets are redirected. This per-flow mechanism saves the overhead of pass-through traffic being redirected, and it saves you from manually mimicking the autodiscovery process through the ongoing maintenance of access lists.
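
The per-flow behavior described above can be modeled as a small flow table. This is a conceptual sketch rather than the actual redirection protocol: the `FlowTable` class, its method names, and the value of `PROBE_PACKETS` are assumptions, because the text says only that "the few packets at the beginning of a connection" are redirected.

```python
class FlowTable:
    """Toy model of per-flow redirection decisions on a redirecting appliance."""

    # Assumption: number of initial packets always redirected for autodiscovery.
    PROBE_PACKETS = 3

    def __init__(self):
        self._seen = {}         # flow -> number of packets seen so far
        self._redirect = set()  # flows the SteelHead asked to keep receiving

    def steelhead_requests(self, flow):
        """Record that the SteelHead wants future packets for this flow."""
        self._redirect.add(flow)

    def handle_packet(self, flow):
        """Return 'redirect' or 'pass-through' for the next packet of a flow."""
        count = self._seen.get(flow, 0) + 1
        self._seen[flow] = count
        if flow in self._redirect or count <= self.PROBE_PACKETS:
            return "redirect"
        return "pass-through"
```

In this model, a flow that the SteelHead never asks for stops being redirected after the initial probe packets, with no access list involved. A flow the SteelHead does ask for keeps being redirected.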

Redirector response to a SteelHead failure

The response of the redirector to a SteelHead failure is also a consideration. For example, in WCCP deployments, if a SteelHead fails, it can take up to 30 seconds for the switch or router to declare it has failed and to stop sending traffic. At that point, the buckets that WCCP uses to partition the traffic among the remaining SteelHeads are recomputed and changed. Thus the traffic being redirected to the remaining SteelHeads might change, without consideration for which SteelHead was optimizing each particular flow.

Because the SteelHeads protect against unexpected traffic redirection by using connection forwarding, after a SteelHead failure, WCCP can redirect a large amount of traffic to different SteelHeads than before. The SteelHeads must then redirect that traffic a second time within the cluster. For a new connection, this second redirection can result in cold performance for traffic that would have been warm if it had been sent to the original SteelHead. The same process occurs when a SteelHead is added to a WCCP cluster.

Interceptors detect a SteelHead failure in 3 seconds. Only the connections optimized by the failed SteelHead are impacted. All other connections are unaffected and are redirected to the appropriate SteelHeads. You can control the removal of a SteelHead by marking it as paused on the Interceptor, and new connections aren’t redirected to it. Existing optimized connections continue uninterrupted. You can add a SteelHead to an Interceptor cluster without impacting other optimized connections.
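
The difference between recomputing all buckets and touching only the failed SteelHead's connections can be illustrated with a toy model. The sketch below uses 256 buckets, as in WCCP hash assignment, but the round-robin `assign` function is a deliberate simplification and not the real WCCP assignment algorithm. The `reassign_failed_only` function and the SteelHead names are likewise hypothetical.

```python
BUCKETS = 256  # number of buckets used by WCCP hash assignment


def assign(devices):
    """Toy assignment: spread buckets round-robin over the live devices."""
    devices = sorted(devices)
    return [devices[b % len(devices)] for b in range(BUCKETS)]


def reassign_failed_only(before, failed, survivors):
    """Move only the failed device's buckets; leave every other bucket alone."""
    survivors = sorted(survivors)
    return [
        survivors[b % len(survivors)] if owner == failed else owner
        for b, owner in enumerate(before)
    ]


def moved_between_survivors(before, after, failed):
    """Count buckets that changed owner even though their owner did not fail."""
    return sum(1 for old, new in zip(before, after) if old != failed and old != new)


cluster = ["sh-a", "sh-b", "sh-c", "sh-d"]
survivors = ["sh-a", "sh-b", "sh-c"]
before = assign(cluster)

# Full recomputation after sh-d fails: many healthy buckets change owner.
recomputed = assign(survivors)

# Failure-scoped reassignment: only sh-d's buckets change owner.
scoped = reassign_failed_only(before, "sh-d", survivors)
```

In this toy model, `moved_between_survivors(before, recomputed, "sh-d")` is greater than zero, which corresponds to flows that land on a different SteelHead even though their original SteelHead is still healthy. For the failure-scoped reassignment, the same count is zero.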