Deployment Best Practices

Every deployment of the SteelFusion product family differs due to variations in specific customer needs and types and sizes of IT infrastructure.

The following recommendations and best practices are intended to guide you to achieving optimal performance while reducing configuration and maintenance requirements. However, these guidelines are general; for detailed worksheets for proper sizing, contact your Riverbed account team.


Core best practices

This section describes best practices for deploying the Core.

Deploy on gigabit Ethernet networks

The iSCSI protocol enables block-level traffic over IP networks. However, iSCSI is sensitive to both latency and bandwidth. To optimize performance and reliability, deploy Core and the storage array on Gigabit Ethernet networks.

Use CHAP

For additional security, use CHAP between Core and the storage array, and between Edge and the server. One-way CHAP is also supported.

Configure initiators and storage groups or LUN masking

To prevent unwanted hosts from accessing LUNs mapped to Core, configure initiator and storage groups between Core and the storage system. This practice is also known as LUN masking or Storage Access Control.

When mapping Fibre Channel LUNs to the Core-vs, ensure the ESXi servers in the cluster that are hosting the Core-v appliances have access to these LUNs. Configure the ESXi servers in the cluster that are not hosting the Core-v appliances to not have access to these LUNs.

Core hostname and IP address

If the branch DNS server runs on VSP and its DNS datastore is deployed on a LUN used with SteelFusion, use the Core IP address instead of the hostname when you specify the Core hostname and IP address.

If you must use the hostname, deploy the DNS server on the VSP internal storage, or configure host DNS entries for the Core hostname on the SteelHead.

Segregate storage traffic from management traffic

To increase overall security, minimize congestion, minimize latency, and simplify the overall configuration of your storage infrastructure, segregate storage traffic from regular LAN traffic using VLANs (Figure: Traffic segregation).

Figure: Traffic segregation

When to pin and prepopulate the LUN

SteelFusion technology has built-in file system awareness for NTFS and VMFS file systems. You will likely need to pin and prepopulate the LUN if it contains file systems other than NTFS and VMFS (or unstructured data), or if frequent, prolonged periods of WAN outages are expected.

LUNs containing file systems other than NTFS and VMFS and LUNs containing unstructured data

Pin and prepopulate the LUN for unoptimized file systems such as FAT, FAT32, ext3, and so on. You can also pin the LUN for applications like databases that use raw disk file format or proprietary file systems.

Data availability at the branch during a WAN link outage

When the WAN link between the remote branch office and the data center is down, data no longer travels through the WAN link. Hence, SteelFusion technology and its intelligent prefetch mechanisms no longer function. Pin and prepopulate the LUN if frequent, prolonged periods of WAN outages are expected.

By default, the Edge keeps a write reserve that is 10 percent of the blockstore size. If prolonged periods of WAN outages are expected, appropriately increase the write reserve space.
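The sizing arithmetic can be sketched as follows. This is a rough illustration only: the 10 percent default comes from the text above, but the 2000 GiB blockstore, the 5 GiB-per-hour write rate, and the assumption that the write reserve alone bounds how much new data the branch can write during an outage are hypothetical simplifications. Use the sizing worksheets from your Riverbed account team for real deployments.

```python
def write_reserve_gib(blockstore_gib: float, reserve_percent: float = 10) -> float:
    """Size of the write reserve; 10 percent of the blockstore is the default."""
    if not 0 < reserve_percent < 100:
        raise ValueError("reserve_percent must be between 0 and 100")
    return blockstore_gib * reserve_percent / 100


def outage_hours_covered(reserve_gib: float, write_rate_gib_per_hour: float) -> float:
    """Hours of branch writes the reserve could absorb (simplified assumption)."""
    if write_rate_gib_per_hour <= 0:
        raise ValueError("write rate must be positive")
    return reserve_gib / write_rate_gib_per_hour


reserve = write_reserve_gib(2000)        # hypothetical 2000 GiB blockstore
print(reserve)                           # 200.0
print(outage_hours_covered(reserve, 5))  # 40.0
```

If the estimated coverage is shorter than the outages you expect, that is a signal to discuss a larger write reserve with your Riverbed representative.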

Core configuration export

Store and back up the configuration on an external server in case of system failure. Enter the following CLI commands to export the configuration:

enable

configure terminal

configuration bulk export scp://username:password@server/path/to/config

Complete this export each time you perform a configuration operation or otherwise change the configuration.
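Because the export is repeated after every change, it can help to generate the command sequence with a dated file name so that earlier exports are not overwritten. The following is a minimal sketch: the three CLI commands are the ones shown above, while the helper name, the core-config-<date> naming scheme, and the example host and path are hypothetical.

```python
from datetime import date


def bulk_export_commands(user, password, server, directory, today=None):
    """Return the CLI lines for a configuration export to a dated file.

    The file-name pattern is an illustrative convention, not a product
    requirement.
    """
    today = today or date.today()
    target = (
        f"scp://{user}:{password}@{server}/"
        f"{directory.strip('/')}/core-config-{today.isoformat()}"
    )
    return [
        "enable",
        "configure terminal",
        f"configuration bulk export {target}",
    ]


for line in bulk_export_commands(
    "username", "password", "server", "/path/to", date(2024, 1, 15)
):
    print(line)
```

The printed lines can be pasted into a CLI session. The password appears in clear text in the command, so handle any generated output accordingly.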

Core in HA configuration replacement

If the configuration has been saved on an external server, the failed Core can be seamlessly replaced. Enter the following CLI commands to retrieve what was previously saved:

enable

configure terminal

no service enable

configuration bulk import scp://username:password@server/path/to/config

service enable

Hardware upgrade of Core appliances in HA configuration

Due to expansion of your SteelFusion deployment, it might be necessary to replace existing Core appliances with models that provide greater capacity. If you already have existing Cores deployed in an HA configuration, it is possible to perform the hardware replacement with minimal, or even zero, impact to normal data service operations. However, before replacing the hardware, we strongly recommend using Riverbed Professional Services to help plan and implement this process.

The following steps outline the required tasks:

1. Verify failover configuration of both the Core and Edge devices by reviewing the current settings in the Core management console pages for failover and storage.

2. Install both of the new Cores and apply a basic jumpstart configuration including a temporary IP address on the primary interface of each Core to allow for management access.

3. Check that the new Cores have the correct software version and licenses installed. Apply any updates if needed.

4. Trigger a failover of one of the existing production Core devices by stopping SteelFusion services.

5. Check that the surviving (active) production Core is continuing to provide storage services to all Edges as expected.

6. Using either the management console or CLI, export the current configuration of the passive failover Core to an external device: for example, the workstation you are performing these tasks with.

7. Shut down the passive failover Core.

8. Stop the SteelFusion services on the first of the new Core devices.

9. Swap over all of the network cables from the passive (old) Core to the first of the new Core devices, making sure that ports and cables match.

10. Check that the new Core is still accessible via its temporary IP address on the primary interface.

11. Connect a serial console cable to the new Core and perform a configuration jumpstart, applying the IP address and hostname of the old passive Core.

12. Connect to the management console of the new Core and import the configuration file of the old Core.

13. Once the configuration for the new Core is correctly imported and applied, start the SteelFusion services.

14. Verify that the new Core is peered with the remaining active production Core that has yet to be upgraded.

15. Using either the management console or CLI, export the current configuration of the active failover Core to an external device: for example, the workstation you are performing these tasks with.

16. Shut down the active failover Core that has yet to be upgraded.

17. Check that the surviving (newly upgraded) production Core is continuing to provide storage services to all Edges as expected.

18. Stop the SteelFusion services on the second new Core device.

19. Swap over all of the network cables from the remaining (old) Core to the second new Core device, making sure that ports and cables match.

20. Check that the second new Core is still accessible via its temporary IP address on the primary interface.

21. Connect a serial console cable to the second new Core and perform a configuration jumpstart, applying the IP address and hostname of the remaining (old) Core.

22. Connect to the management console of the second new Core and import the configuration file of the remaining (old) Core.

23. Once the configuration for the second new Core is correctly imported and applied, start the SteelFusion services.

24. Verify that the second new Core is peered with the first new Core that was upgraded and that all data services to the Edge device are operating as normal.

The upgrade procedure is now complete.

LUN-based data protection limits

When using LUN-based data protection, be aware that each snapshot/backup operation takes approximately 2 minutes to complete. This means that if the hourly option is configured for more than 30 LUNs, it is quite possible that there could be an increasing number of nonreplicated snapshots on Edges.
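The limit follows from simple arithmetic, sketched below. The 2-minute figure is the approximation given above; the assumption that operations run one after another is implied by the 30-LUN threshold but is not stated explicitly, and the function names are illustrative.

```python
SNAPSHOT_MINUTES = 2  # approximate duration of one snapshot/backup operation


def protection_cycle_minutes(lun_count, minutes_per_snapshot=SNAPSHOT_MINUTES):
    """Total time to protect every LUN once, assuming serial operations."""
    return lun_count * minutes_per_snapshot


def max_luns_per_interval(interval_minutes=60, minutes_per_snapshot=SNAPSHOT_MINUTES):
    """Largest LUN count whose full cycle still fits in the schedule interval."""
    return interval_minutes // minutes_per_snapshot


def fits_in_interval(lun_count, interval_minutes=60):
    """True if all LUNs can be protected before the next scheduled run starts."""
    return protection_cycle_minutes(lun_count) <= interval_minutes


print(max_luns_per_interval())  # 30 LUNs for an hourly schedule
print(fits_in_interval(31))     # False: the backlog grows every hour
```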

WAN usage consumption for a Core to Edge VMDK data migration

When provisioning VMs as part of a data migration, it is possible to see high traffic usage across the WAN link. This can be due to the type of VMDK that is being migrated. This table gives an example of WAN usage consumption for a Core to Edge VMDK data migration containing a 100 GiB VMDK with 20 GiB used.

VMDK type | WAN traffic usage | Space used on array thick LUNs | Space used on array thin LUNs | VMDK fragmentation |

Thin | 20 GB | 20 GiB | 20 GiB | High |

Thick eager zero | 100 GB + 20 GB = 120 GB | 100 GiB | 100 GiB | None (flat) |

Thick lazy zero (default) | 20 GB + 20 GB = 40 GB | 100 GiB | 100 GiB | None (flat) |
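The pattern in the table can be expressed as a small estimator. This is a sketch that generalizes from the single 100 GiB / 20 GiB example above; treating the table as a formula (provisioned size plus used size for eager zero, twice the used size for lazy zero) is an assumption, and actual traffic depends on the data and on optimization.

```python
def wan_traffic_estimate(vmdk_type, provisioned, used):
    """Estimate WAN traffic for a Core to Edge VMDK migration.

    Sizes are in the same unit (for example, GB). The formulas mirror the
    example table and are not a guaranteed model.
    """
    if vmdk_type == "thin":
        return used
    if vmdk_type == "thick_eager_zero":
        return provisioned + used
    if vmdk_type == "thick_lazy_zero":
        return used + used
    raise ValueError(f"unknown VMDK type: {vmdk_type}")


for kind in ("thin", "thick_eager_zero", "thick_lazy_zero"):
    print(kind, wan_traffic_estimate(kind, provisioned=100, used=20))
```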

For more details, see this Knowledge Base article on the Riverbed Support site:

Reserve memory and CPU resources when deploying Core-v

When deploying Core-v, see the SteelFusion Core Installation and Configuration Guide to understand what hardware requirements (memory, CPU, and disk) are required to support your Core-v model. We strongly recommend that you allocate and reserve the correct amount of resources as specified in the SteelFusion Core Installation and Configuration Guide. Reserving these resources ensures that the memory and recommended CPU are dedicated for use by the Core-v instance, enabling it to perform as expected.

Edge best practices

This section describes best practices for deploying the Edge.

Segregate traffic

At the remote branch office, separate storage traffic and WAN/Rdisk traffic from LAN traffic. This practice helps to increase overall security, minimize congestion, minimize latency, and simplify the overall configuration of your storage infrastructure.

Pin the LUN and prepopulate the blockstore

In specific circumstances, you should pin the LUN and prepopulate the blockstore. Additionally, you can have the write-reserve space resized accordingly; by default, the Edge has a write-reserve space that is 10 percent of the blockstore.

To resize the write-reserve space, contact your Riverbed representative.

We recommend that you pin the LUN in the following circumstances:

• Unoptimized file systems - Core supports intelligent prefetch optimization on NTFS and VMFS file systems. For unoptimized file systems such as FAT, FAT32, ext3, and others, Core cannot perform optimization techniques such as prediction and prefetch in the same way as it does for NTFS and VMFS. For best results, pin the LUN and prepopulate the blockstore.

• Database applications - If the LUN contains database applications that use raw disk file formats or proprietary file systems, pin the LUN and prepopulate the blockstore.

• WAN outages are likely or common - Ordinary operation of SteelFusion depends on WAN connectivity between the branch office and the data center. If WAN outages are likely or common, pin the LUN and prepopulate the blockstore.

Segregate data onto multiple LUNs

We recommend that you separate storage into three LUNs, as follows:

• Operating system - In case of recovery, the operating system LUN can be quickly restored from the Windows installation disk or ESX datastore, depending on the type of server used in the deployment.

• Production data - The production data LUN is hosted on the Edge and therefore safely backed up at the data center.

• Swap space - Data on the swap space LUN is transient and therefore not required in disaster recovery. We recommend that you use this LUN as an Edge local LUN.

Ports and type of traffic

You should only allow iSCSI traffic on primary and auxiliary interfaces. Riverbed does not recommend that you configure your external iSCSI initiators to use the IP address configured on the in-path interface. Some appliance models can optionally support an additional NIC to provide extra network interfaces. You can also configure these interfaces to provide iSCSI connectivity.

iSCSI port bindings

If iSCSI port bindings are enabled on the on-board hypervisor of the Edge appliance, a Fusion Edge HA failover operation can take too long and time out. iSCSI port bindings are disabled by default. If these port bindings are enabled, we recommend that you remove the port bindings because the internal interconnect interfaces are all on different network segments.

For more information on this scenario, see the Riverbed Knowledge Base article “After Fusion Edge HA failover, ESX (VSP) takes several minutes to re-establish connectivity to LUNs” at https://supportkb.riverbed.com/support/index?page=content&id=S28205.

Changing IP addresses on the Edge, ESXi host, and servers

When you have an Edge and ESXi running on the same converged platform, you must change IP addresses in a specific order to keep the task simple and fast. You can use this procedure when staging the Edges in the data center or moving them from one site to another.

This procedure assumes that the Edges are configured with IP addresses in a staged or production environment. You must test and verify all ESXi hosts, servers, and interfaces before making these changes.

To change the IP addresses on the Edge, ESXi host, and servers

1. Starting with the Windows server, use your vSphere client to connect to the console, log in, change the IP address to DHCP or the new destination IP address, and then shut down the Windows server from the console.

2. To change the IP address of the ESXi host, the procedure is different depending on whether the Edge is a SteelHead EX or a SteelFusion Edge.

SteelHead EX

Use a virtual network computing (VNC) client to connect to the ESXi console, change the IP address to the new destination IP address, and shut down ESXi from the console. If you did not configure VNC in the ESXi installation wizard, you can also use vSphere Client and change the address from Configuration > Networking > rvbd_vswitch_pri > Properties.

SteelFusion Edge

Connect to the ESXi console serial port or run the following command at the RiOS command line to show the ESXi console on the screen and allow you to change the IP address.

hypervisor console

3. On the Edge Management Console, choose Networking > Networking: In-Path Interfaces, and then change the IP address for inpath0_0 to the new destination IP address.

4. Use the included console cable to connect to the console port on the back of the Edge appliance and log in as the administrator.

5. Enter the following commands to change the IP address to your new destination IP address:

enable

config terminal

interface primary ip address 1.7.7.7 /24

ip default-gateway 1.7.7.1

write memory

6. Enter the following command to shut down the appliance:

reload halt

7. Move the Edge appliance to the new location.

8. Start your Windows server at the new location and open the iSCSI Initiator.

• Select the Discovery tab and remove the old portal.

• Click OK.

• Open the tab again and select Discover Portal.

• Add the new Edge appliance primary IP address.

This process restores the original data disk to a functioning state.

Disk management

You can specify the size of the local LUN during the hypervisor installation on the Edge. The installation wizard allows more flexible disk partitioning in which you can specify a percentage or the exact amount in gigabytes that you want to use for the local LUN. The rest of the disk space is allocated to the Edge blockstore. To streamline the ESXi configuration, run the hypervisor installer before connecting the Edge appliance to the Core to set up local storage. If local storage is configured during the hypervisor installation, all LUNs provisioned by the Core to the Edge are automatically made available to the ESXi of the SteelFusion Edge.

Rdisk traffic routing options

You can route Rdisk traffic out of the primary or the in-path interfaces.

For more information about Rdisk, see Network Quality of Service. For information about WAN redundancy, see Configuring WAN redundancy.

In-path interface

Select the in-path interface when you deploy the SteelFusion Edge W0 appliance. When you configure Edge to use the in-path interface, the Rdisk traffic is intercepted, optimized, and sent directly out of the WAN interface toward the Core deployed at the data center.

Use this option during proof-of-concept (POC) installations or if the primary interface is dedicated to management.

The drawback of this mode is the lack of redundancy in the event of WAN interface failure. In this configuration, only the WAN interface needs to be connected. Disable link state propagation.

Primary interface

We recommend that you select the primary interface when you deploy the SteelFusion Edge W1-W3 appliance. When you configure Edge to use the primary interface, the Rdisk traffic is sent unoptimized out of the primary interface to a switch or a router that in turn redirects the traffic back into the LAN interface of the Edge RiOS node to get optimized. The traffic is then sent out of the WAN interface toward the Core deployed at the data center.

This configuration offers more redundancy because you can have both in-path interfaces connected to different switches.

Deploying SteelFusion with third-party traffic optimization

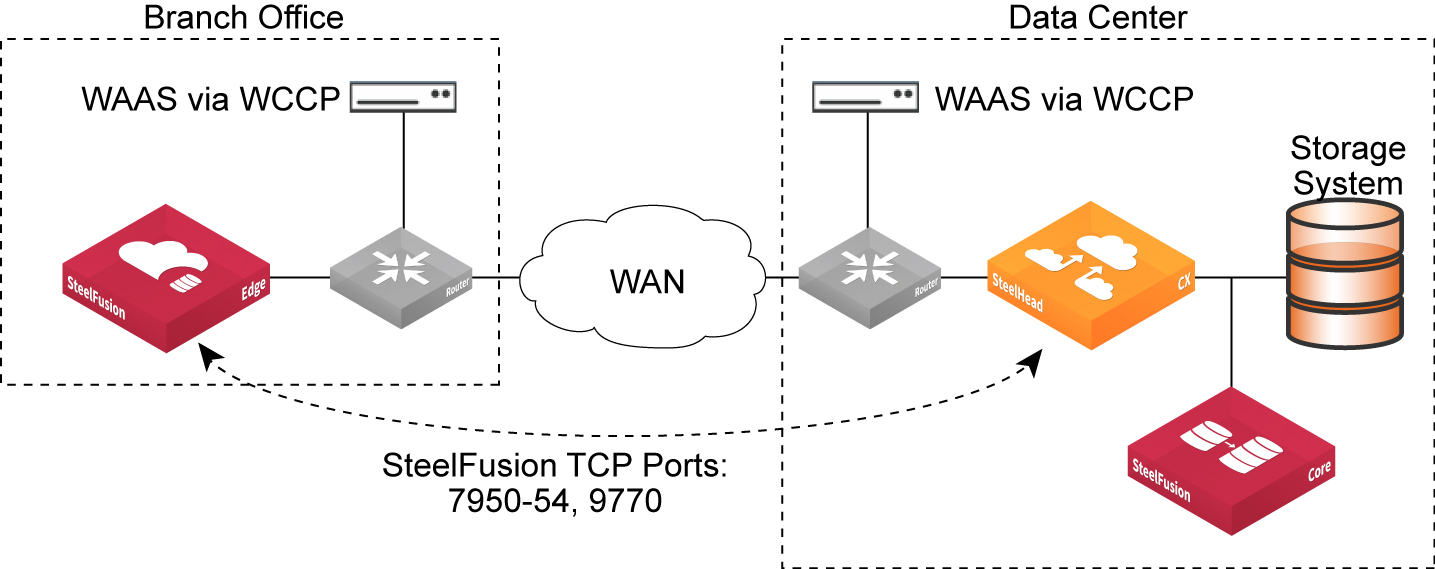

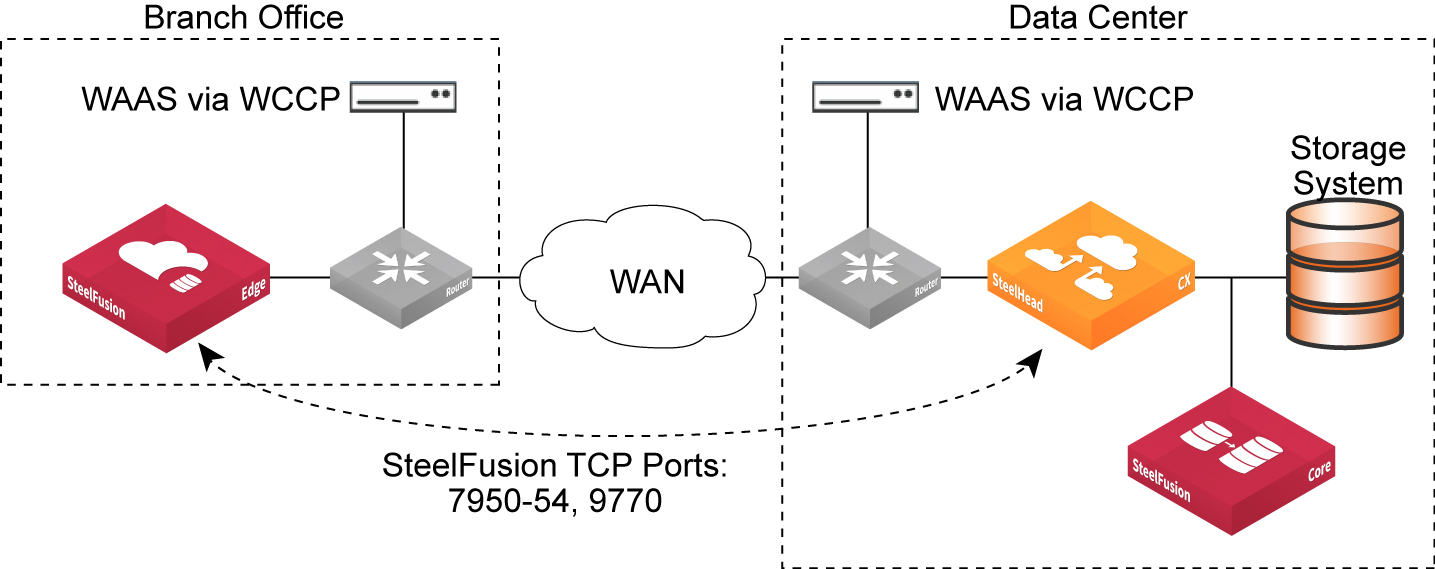

The Edges and Cores communicate with each other and transfer data-blocks over the WAN using six different TCP port numbers: 7950, 7951, 7952, 7953, 7954, and 7970.

Figure: SteelFusion behind a third-party deployment scenario shows a deployment in which the remote branch and data center third-party optimization appliances are configured through WCCP. You can optionally configure WCCP redirect lists on the router to redirect traffic belonging to the six different TCP ports of SteelFusion to the SteelHeads. Configure a fixed-target rule for the six different TCP ports of SteelFusion to the in-path interface of the data center SteelHead.

Figure: SteelFusion behind a third-party deployment scenario
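When third-party devices, redirect lists, or firewalls sit in the path, a quick reachability check of the six SteelFusion TCP ports can help confirm that none of them is being dropped. The following is a minimal sketch: the port numbers come from the text above, while the function name and the example hostname are hypothetical. A successful TCP connection shows only that the path is open; it does not prove that SteelFusion services are healthy.

```python
import socket

# TCP ports used between Edge and Core, as listed in this section.
STEELFUSION_TCP_PORTS = (7950, 7951, 7952, 7953, 7954, 7970)


def probe_ports(host, ports=STEELFUSION_TCP_PORTS, timeout=2.0):
    """Try a TCP connection to each port and report which ones accept it."""
    results = {}
    for port in ports:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            # Covers refused connections, timeouts, and name-resolution errors.
            results[port] = False
    return results


# Example (hypothetical hostname), run from a host at the branch:
# for port, reachable in probe_ports("core.example.com").items():
#     print(port, "open" if reachable else "blocked or closed")
```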

Windows and ESX server storage layout—SteelFusion-protected LUNs vs. local LUNs

This section describes different LUNs and storage layouts.

Note: SteelFusion-protected LUNs are also known as iSCSI LUNs. This section refers to iSCSI LUNs as SteelFusion-protected LUNs.

Transient and temporary server data is not required in the case of disaster recovery and therefore does not need to be replicated back to the data center. For this reason, we recommend that you separate transient and temporary data from the production data by implementing a layout that separates the two into multiple LUNs.

In general, plan to configure one LUN for the operating system, one LUN for the production data, and one LUN for the temporary swap or paging space. Configuring LUNs in this manner greatly enhances data protection and operations recovery in case of a disaster. This extra configuration also facilitates migration to server virtualization if you are using physical servers.

For more information about disaster recovery, see Data Resilience and Security.

In order to achieve these goals, SteelFusion implements two types of LUNs: SteelFusion-protected (iSCSI) LUNs and local LUNs. You can add LUNs by choosing Configure > Manage: LUNs.

Use SteelFusion-protected LUNs to store production data. They share the space of the blockstore cache. The data is continuously replicated and kept in sync with the associated LUN back at the data center. The Edge cache only keeps the working set of data blocks for these LUNs. The remaining data is kept at the data center and predictably retrieved at the edge when needed. During WAN outages, edge servers are not guaranteed to operate and function at 100 percent because some of the data that is needed can be at the data center and not locally present in the Edge blockstore cache.

One particular type of SteelFusion-protected LUN is the pinned LUN. Pinned LUNs are used to store production data, but they use dedicated space in the Edge. The space required and dedicated in the blockstore cache is equal to the size of the LUN provisioned at the data center. The pinned LUN enables the edge servers to continue to operate and function during WAN outages because 100 percent of data is kept in blockstore cache. Like regular SteelFusion LUNs, the data is replicated and kept in sync with the associated LUN at the data center.

For more information about pinned LUNs, see When to pin and prepopulate the LUN.

Use local LUNs to store transient and temporary data. Local LUNs also use dedicated space in the blockstore cache. The data is never replicated back to the data center because it is not required in the case of disaster recovery.

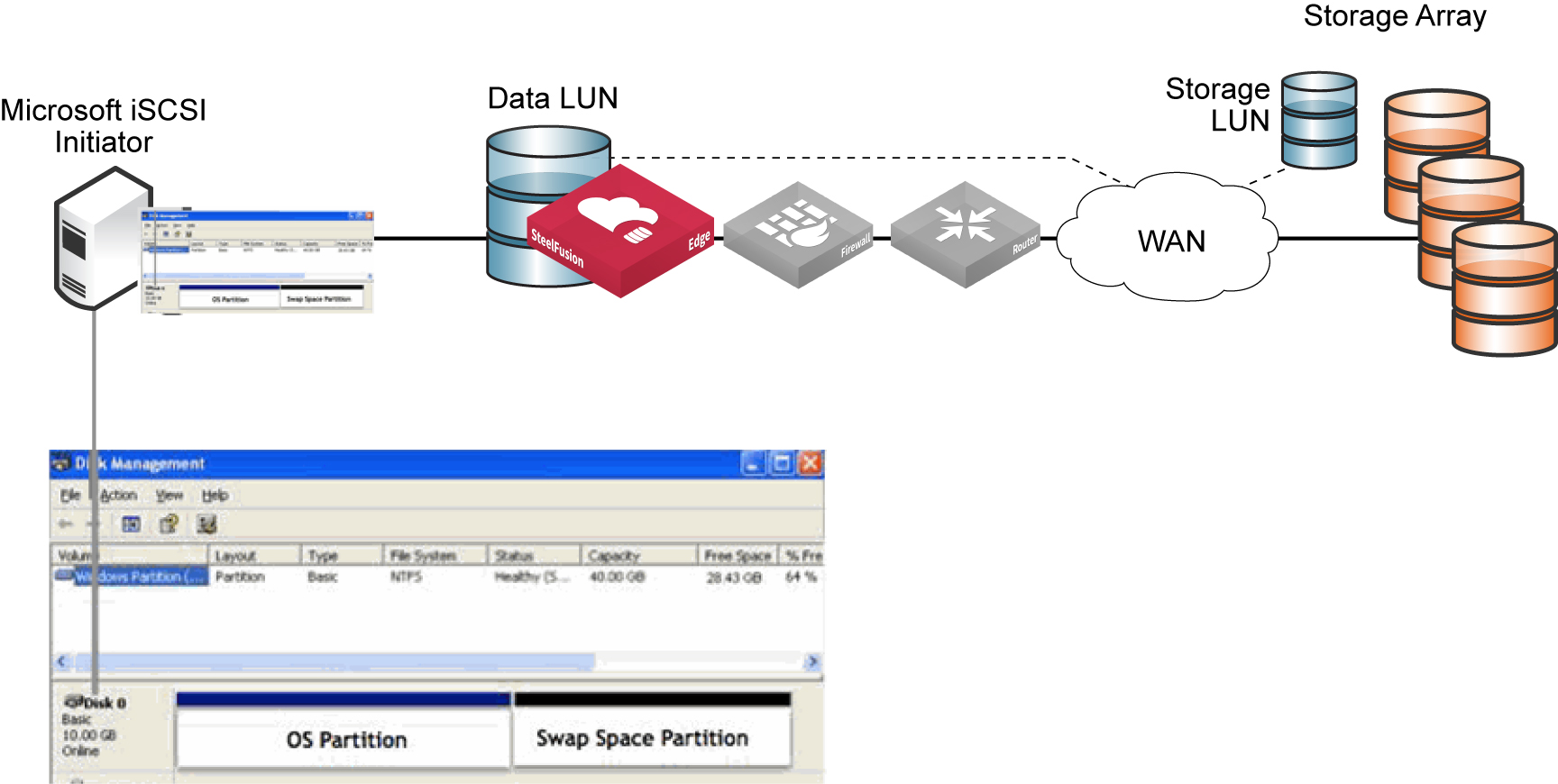

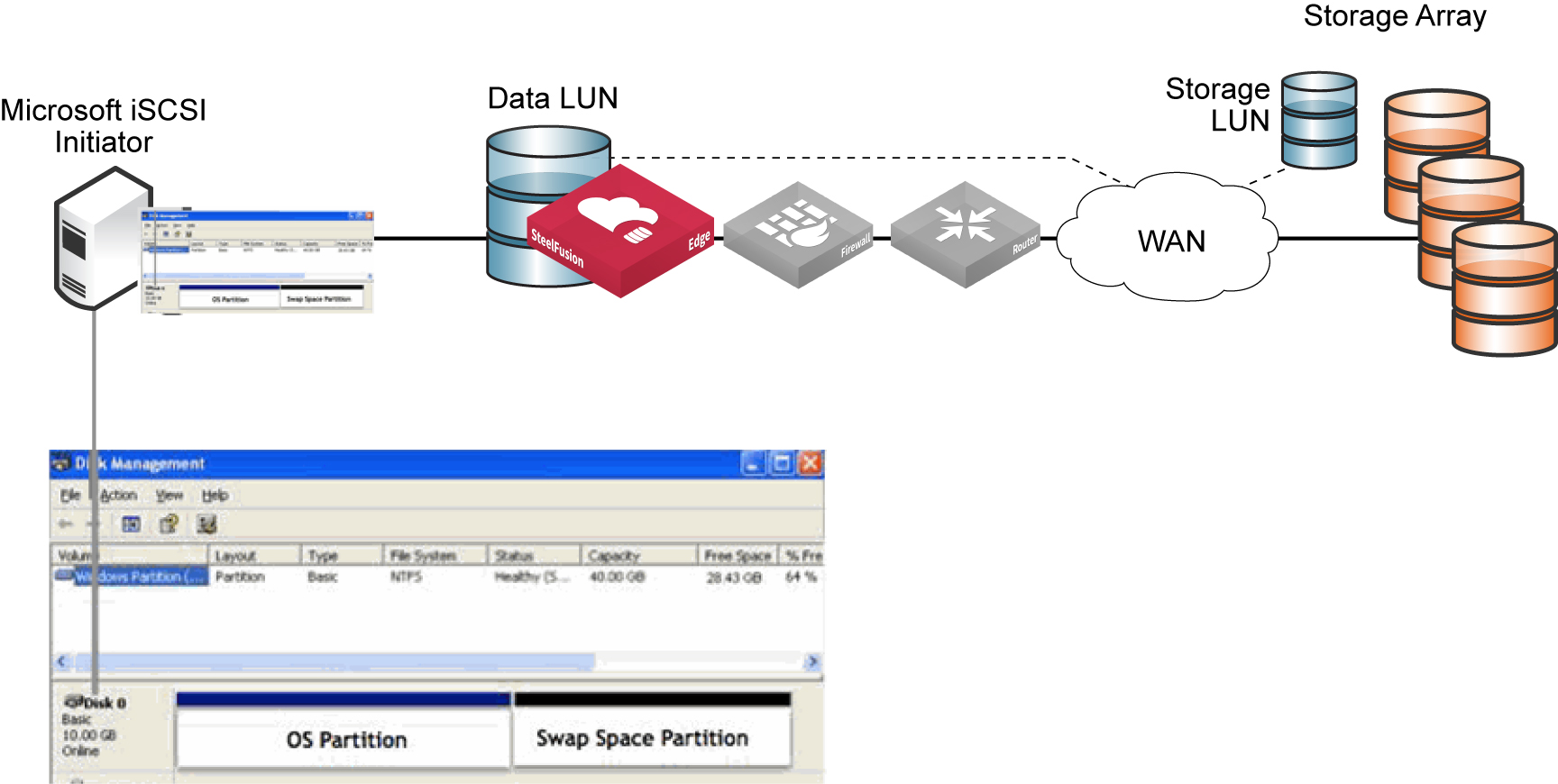

Physical Windows server storage layout

When deploying a physical Windows server, separate its storage into three different LUNs: the operating system and swap space (or page file) can reside in two partitions on the server internal hard drive (or two separate drives), while production data should reside on the SteelFusion-protected LUN (Figure: Physical server layout).

Figure: Physical server layout

This layout facilitates future server virtualization and service recovery in the case of hardware failure at the remote branch. The production data is hosted on a SteelFusion-protected LUN, which is safely stored and backed up at the data center. In case of a disaster, you can stream this data with little notice to a newly deployed Windows server without having to restore the entire dataset from backup.

Virtualized Windows server on ESX infrastructure with production data LUN on ESX datastore storage layout

When you deploy a virtual Windows server into an ESX infrastructure, you can also store the production data on an ESX datastore mapped to a SteelFusion-protected LUN (Figure: Virtual server layout 2). This deployment facilitates service recovery in the event of hardware failure at the remote branch because SteelFusion appliances optimize not only LUNs formatted directly with the NTFS file system but also LUNs that are first virtualized with VMFS and later formatted with NTFS.

Figure: Virtual server layout 2

VMFS datastores deployment on SteelFusion LUNs

When you deploy VMFS datastores on SteelFusion-protected LUNs, for best performance, choose the Thick Provision Lazy Zeroed disk format (VMware default). Because of the way we use blockstore in the Edge, this disk format is the most efficient option.

Thin provisioning is when you assign a LUN to be used by a device (in this case, a VMFS datastore for an ESXi host) and you tell the host how big the LUN is (for example, 10 GiB). However, as an administrator you can choose to pretend that the LUN is 10 GiB, and only assign the host 2 GiB. This fake number is useful if you know that the host needs only 2 GiB to begin with. As time goes by (days or months), and the host starts to write more data and needs more space, the storage array automatically grows the LUN until eventually it really is 10 GiB in size.

Thick provisioning means there is no pretending. You allocate all 10 GiB from the beginning whether the host needs it from day one or not.

Whether you choose thick or thin provisioning, you need to initialize (format) the LUN in the same way as any other new disk. The formatting is essentially a process of writing a pattern to the disk sectors (in this case zeros). You cannot write to a disk before you format it. Normally, you have to wait for the entire disk to be formatted before you can use it—for large disks, this process can take hours. Lazy Zeroed means the process works away slowly in the background and as soon as the first few sectors have been formatted the host can start using it. This immediate usage means the host does not have to wait until the entire disk (LUN) is formatted.

VMware ESXi 5.5 and later support the vStorage APIs for Array Integration (VAAI) feature. This feature uses the SCSI WRITE SAME command when creating or using a vmdk. When using thin-provisioned vmdk files, ESXi creates new extents in the vmdk, by first writing binary 0s and then the block device (filesystem) data. When using thick provisioned vmdk files, ESXi creates all extents by writing binary 0s.

Core and Edge software versions prior to SteelFusion 4.2 supported only the 10-byte and 16-byte versions of the command. With SteelFusion 4.2 and later, both the Core and Edge software support the SCSI WRITE SAME (32 byte) command. This support enables much faster provisioning and formatting of LUNs used for VMFS datastores.

Enable Windows persistent bindings for mounted iSCSI LUNs

Make iSCSI LUNs persistent across Windows server reboots; otherwise, you must manually reconnect them. To configure Windows servers to automatically connect to the iSCSI LUNs after system reboots, select the Add this connection to the list of Favorite Targets check box (Figure: Favorite targets) when you connect to the Edge iSCSI target.

Figure: Favorite targets

To make iSCSI LUNs persistent and ensure that Windows does not consider the iSCSI service fully started until connections are restored to all the SteelFusion volumes on the binding list, remember to add the Edge iSCSI target to the binding list of the iSCSI service. This addition is important particularly if you have data on an iSCSI LUN that other services depend on: for example, a Windows file server that is using the iSCSI LUN as a share.

The best way to do this is to select the Volumes and Devices tab in the iSCSI Initiator control panel and click Auto Configure (Figure: Target binding). This action binds all available iSCSI targets to the iSCSI startup process. If you want to choose individual targets to bind, click Add. To add individual targets, you must know the target drive letter or mount point.

Figure: Target binding

Set up memory reservation for VMs running on VMware ESXi in the VSP

By default, VMware ESXi dynamically tries to reclaim unused memory from guest virtual machines, while the Windows operating system uses free memory to perform caching and avoid swapping to disk.

To significantly improve performance of Windows virtual machines, configure memory reservation to the highest possible value of the ESXi memory available to the VM. This configuration applies whether the VMs are hosted within the hypervisor node of the Edge or on an external ESXi server in the branch that is using LUNs from SteelFusion.

Setting the memory reservation to the configured size of the virtual machine results in a per virtual machine vmkernel swap file of zero bytes, which consumes less storage and helps to increase performance by eliminating ESXi host-level swapping. The guest operating system within the virtual machine maintains its own separate swap and page file.
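The relationship between the reservation and the swap file can be sketched as follows. ESXi sizes the per-VM vmkernel swap file as the configured memory minus the reservation; the function name and the 8192 MB example are illustrative.

```python
def vmkernel_swap_mb(configured_mb: int, reserved_mb: int) -> int:
    """Size of the per-VM vmkernel swap file: configured memory minus reservation."""
    if reserved_mb < 0 or reserved_mb > configured_mb:
        raise ValueError("reservation must be between 0 and the configured memory")
    return configured_mb - reserved_mb


print(vmkernel_swap_mb(8192, 0))     # 8192: no reservation, full-size swap file
print(vmkernel_swap_mb(8192, 8192))  # 0: full reservation, zero-byte swap file
```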

Boot from an unpinned iSCSI LUN

If you are booting a Windows server or client from an unpinned iSCSI LUN, we recommend that you install the Riverbed Turbo Boot software on the Windows machine. The Riverbed Turbo Boot software greatly improves the performance of the boot process over the WAN because it allows Core to send to Edge only the files needed for the boot process.

Running antivirus software

There are two antivirus scanning modes:

• On-demand - Scans the entire LUN data files for viruses at scheduled intervals.

• On-access - Scans the data files dynamically as they are read or written to disk.

There are two common locations to perform the scanning:

• On-host - Antivirus software is installed on the application server.

• Off-host - Antivirus software is installed on dedicated servers that can directly access the application server data.

In typical SteelFusion deployments in which the LUNs at the data center contain the full amount of data and the remote branch cache contains the working set, run on-demand scan mode at the data center and on-access scan mode at the remote branch. Running on-demand full file system scan mode at the remote branch causes the blockstore to wrap and evict the working set of data, leading to slow performance.

However, if the LUNs are pinned, on-demand full file system scan mode can also be performed at the remote branch.

Whether scanning on-host or off-host, the SteelFusion solution does not dictate one way versus another, but in order to minimize the server load, we recommend off-host virus scans.

Running disk defragmentation software

Disk defragmentation software is another category of software that can possibly cause the SteelFusion blockstore cache to wrap and evict the working set of data. Do not run disk defragmentation software. Disable default-enabled disk defragmentation on Windows 7 or later.

Running data deduplication features

Some recent versions of server operating systems (for example, Microsoft Windows Server 2012 and Windows Server 2016) include a feature that can be enabled to deduplicate data on the disks used by the server. While this might be considered a useful space-saving tool, it is not relevant when the server is running in a branch as part of a SteelFusion Edge deployment. The deduplication process tends to run once a day as a batch job and rearranges blocks in storage so that deduplication can be performed. This rearrangement of blocks could cause the SteelFusion blockstore cache to wrap and evict the working set of data. It also causes the Edge to recognize the rearranged blocks as changed and therefore transfer them needlessly across the WAN to the backend storage in the data center. Check to ensure that this feature is not enabled for servers hosted within SteelFusion Edge deployments. Most storage systems in modern data centers already have some form of deduplication capability; use that capability instead if deduplication is required.

Running backup software

Backup software is another category of software that can cause the Edge blockstore cache to wrap and evict the working set of data, especially during the execution of full backups. In a SteelFusion deployment, run differential, incremental, synthetic full, and full backups at the data center.

Configure jumbo frames

If jumbo frames are supported by your network infrastructure, use jumbo frames between Core and storage arrays. We make the same recommendation for the Edge and any external application servers (not hosted within VSP) that are using LUNs from the Edge. The application server interfaces must support jumbo frames. For details, see Configuring Edge for jumbo frames.

Removing Core from Edge and re-adding Core

When a Core is removed from the Edge with the “preserve config” setting enabled, the Edge local LUNs are saved and the offline remote LUNs are removed from the Edge configuration. On the Core, there is no change, but the LUNs show as “Not connected.” In most scenarios, the reason for this procedure is replacement of the Core.

If a new Core is added to the Edge, the Edge local storage is merged from the Edge to the Core and normal operations are resumed.

However, if for some reason the same Core is added back, the Edge local storage information on the Core must be cleared from the Core by removing any entries for the specific Edge local LUNs, before the add operation is performed.

Note: As long as the Edge and Core are truly disconnected when the Edge local storage entries are removed, no Edge local storage is physically deleted. Only the entries themselves are cleared.

Once the same Core is added back again, the Edge local storage information, on the Edge, is merged to the Core configuration. At the same time, the remote offline storage information on the Core that is mapped to the Edge is merged across to the Edge.

Failure to clear the information prior to re-adding the Core will result in the Core rejecting the Edge connection and both the Core and Edge logfiles reporting a “Config mismatch.”

Further details are available on the Riverbed Support site, in Knowledge Base article S30272 - https://supportkb.riverbed.com/support/index?page=content&id=S30272.

iSCSI initiator timeouts


Microsoft iSCSI initiator timeouts

By default, the Microsoft iSCSI Initiator LinkDownTime timeout value is set to 15 seconds and the MaxRequestHoldTime timeout value is also 15 seconds. These timeout values determine how much time the initiator holds a request before reporting an iSCSI connection error. You can increase these values to accommodate longer outages, such as an Edge failover event or a power cycle in the case of a single appliance.

If MPIO is installed in the Microsoft iSCSI Initiator, the LinkDownTime value is used. If MPIO is not installed, MaxRequestHoldTime is used instead.

If you are using Edge in an HA configuration and MPIO is configured in the Microsoft iSCSI Initiator, change the LinkDownTime timeout value to 60 seconds to allow the failover to complete.
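The rule for which timeout applies, and the value suggested for HA, can be summarized in a short helper. This is a sketch of the logic described above only: the function names are hypothetical, it does not read or write any Windows settings, and for cases where this section gives no specific value it simply returns the 15-second default.

```python
DEFAULT_TIMEOUT_SECONDS = 15     # default for both LinkDownTime and MaxRequestHoldTime
HA_LINK_DOWN_TIME_SECONDS = 60   # value recommended for Edge HA with MPIO


def timeout_in_effect(mpio_installed: bool) -> str:
    """Name of the Microsoft iSCSI Initiator timeout that governs request holding."""
    return "LinkDownTime" if mpio_installed else "MaxRequestHoldTime"


def suggested_setting(mpio_installed: bool, edge_ha: bool):
    """Return (timeout name, seconds) following the guidance in this section."""
    name = timeout_in_effect(mpio_installed)
    if edge_ha and mpio_installed:
        return name, HA_LINK_DOWN_TIME_SECONDS
    # No specific value is given for other cases; start from the default
    # and increase it if longer outages must be tolerated.
    return name, DEFAULT_TIMEOUT_SECONDS


print(suggested_setting(mpio_installed=True, edge_ha=True))    # ('LinkDownTime', 60)
print(suggested_setting(mpio_installed=False, edge_ha=False))  # ('MaxRequestHoldTime', 15)
```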

ESX iSCSI initiator timeouts

By default, the VMware ESX iSCSI Initiator DefaultTimeToWait timeout is set to 2 seconds. This amount of time is the minimum to wait before attempting an explicit or implicit logout or active iSCSI task reassignment after an unexpected connection termination or a connection reset. You can increase this value to accommodate longer outages, such as an Edge failover event or a power cycle in case of a single appliance.

If you are using Edge in an HA configuration, change the DefaultTimeToWait timeout value to 60 seconds to allow the failover to complete.

For more information about iSCSI Initiator timeouts, see Configuring iSCSI initiator timeouts.

Operating system patching


Patching at the branch office for virtual servers installed on iSCSI LUNs

You can continue to use the same or existing methodologies and tools to perform patch management on physical or virtual branch servers booted over the WAN using SteelFusion appliances.

Patching at the data center for virtual servers installed on iSCSI LUNs

If you want to perform virtual server patching at the data center and save a round-trip of patch software from the data center to the branch office, use the following procedure.

To perform virtual server patching at the data center

1. At the branch office:

• Power down the virtual machine.

• Take the VMFS datastore offline.

2. At the data center:

• Take the LUN on the Core offline.

• Mount the LUN to a temporary ESX server.

• Power up the virtual machine, and apply patches and file system updates.

• Power down the virtual machine.

• Take the VMFS datastore offline.

• Bring the LUN on the Core online.

3. At the branch office:

• Bring the VMFS datastore online.

• Boot up the virtual machine.

If the LUN was previously pinned at the edge, patching at the data center can potentially invalidate the cache. If this is the case, you might need to prepopulate the LUN.

Related information

• SteelFusion Core User Guide

• SteelFusion Edge User Guide

• SteelFusion Core Installation and Configuration Guide

• SteelFusion Command-Line Interface Reference Manual

• Fibre Channel on SteelFusion Core Virtual Edition Solution Guide