Overview of SteelHead-v

This chapter provides an overview of SteelHead-v.

Product dependencies and compatibility

This section provides information about product dependencies and compatibility.

Third-party software dependencies

This table summarizes the software requirements for SteelHead-v.

Component | Software requirements |

Microsoft Hyper‑V Hypervisor | Legacy SteelHead-v models VCX255 through VCX1555 and performance tier-based models VCX10 through VCX110 are supported on Hyper‑V in Windows Server 2012 R2 and later, and on the standalone product Microsoft Hyper-V Server 2012 R2 and later. |

VMware ESXi Hypervisor | Legacy SteelHead-v models VCX255 through VCX7055 and performance tier-based models VCX10 through VCX90 are supported on ESXi 5.1 and later. VCX100 and VCX110 are supported on ESXi 6.5 and later. VCX100 and VCX110 models must use either the driver version Avago_bootbank_lsi-mr3_7.700.50.00-1OEM.600.0.0.2768847 or the latest version on the ESXi host. Only VMware hardware versions 14 and earlier are supported. |

Linux Kernel-based Virtual Machine (KVM) Hypervisor | Legacy SteelHead-v models VCX255 through VCX1555 and performance tier-based models VCX10 through VCX110 are supported on KVM. SteelHead-v has been tested on RHEL 7, CentOS 7, QEMU versions 1.7.91 to 2.5.0, and Ubuntu 13.10, 14.04 LTS, and 16.04.1 LTS with paravirtualized virtio device drivers. Host Linux kernel versions 3.13.0-24-generic through 4.4.0-51 are supported. |

Cisco 5000 series ENCS and NFVIS | SteelHead-v models VCX10 through VCX40 are supported on Cisco 5100 series ENCS and NFVIS using Linux Kernel-based virtual machines. See above for supported KVM dependencies and requirements. Legacy SteelHead-v models are not supported. |

SteelHead-v Management Console | Same requirements as physical SteelHead. See the SteelHead Installation and Configuration Guide. |
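The ESXi row of this table can be expressed as a small lookup, which may help when checking a planned deployment. The following Python sketch is illustrative only: the function and set names are hypothetical, the list of performance tier models assumes VCX10 through VCX110 in steps of 10, and the lsi-mr3 driver requirement for VCX100 and VCX110 is not checked.

```python
# Hypothetical compatibility check for the VMware ESXi row of the table above.
# Assumption: performance tier models are VCX10, VCX20, ..., VCX110 (steps of 10).
LEGACY_MODELS = {"VCX255", "VCX555", "VCX755", "VCX1555", "VCX5055", "VCX7055"}
TIER_MODELS_ESXI_5_1 = {f"VCX{n}" for n in range(10, 100, 10)}  # VCX10 through VCX90
TIER_MODELS_ESXI_6_5 = {"VCX100", "VCX110"}


def esxi_supported(model: str, esxi_version: tuple, hw_version: int) -> bool:
    """Return True if the model, ESXi release, and VM hardware version match the table.

    esxi_version is a (major, minor) tuple such as (6, 5). The lsi-mr3 driver
    requirement for VCX100 and VCX110 is not checked here.
    """
    if hw_version > 14:  # only VMware hardware versions 14 and earlier are supported
        return False
    if model in LEGACY_MODELS or model in TIER_MODELS_ESXI_5_1:
        return esxi_version >= (5, 1)
    if model in TIER_MODELS_ESXI_6_5:
        return esxi_version >= (6, 5)
    return False  # model not listed for ESXi


print(esxi_supported("VCX110", (6, 0), 13))  # False: VCX110 needs ESXi 6.5 or later
```

Comparing (major, minor) tuples keeps the version test numeric, so ESXi 6.7 correctly satisfies a 6.5 minimum.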

SNMP-based management compatibility

This product supports a proprietary Riverbed MIB accessible through SNMP. SNMPv1 (RFCs 1155, 1157, 1212, and 1215), SNMPv2c (RFCs 1901, 2578, 2579, 2580, 3416, 3417, and 3418), and SNMPv3 are supported, although some MIB items might be accessible only through SNMPv2c and SNMPv3.

SNMP support enables the product to be integrated into network management systems such as Hewlett-Packard OpenView Network Node Manager, BMC Patrol, and other SNMP-based network management tools.

SCC compatibility

To manage SteelHead 9.2 and later appliances, you need to use SCC 9.2 or later. Earlier versions of the SCC do not support 9.2 SteelHeads. For details about SCC compatibility across versions, see the SteelCentral Controller for SteelHead Installation Guide.

Understanding SteelHead-v

SteelHead-v is software that delivers the benefits of WAN optimization, similar to those offered by the SteelHead hardware, while also providing the flexibility of virtualization.

Built on the same RiOS technology as the SteelHead, SteelHead-v reduces bandwidth utilization, speeds up application delivery, and improves performance. SteelHead-v on VMware vSphere is certified for the Cisco Services-Ready Engine (SRE) module with Cisco Services-Ready Engine Virtualization (Cisco SRE-V).

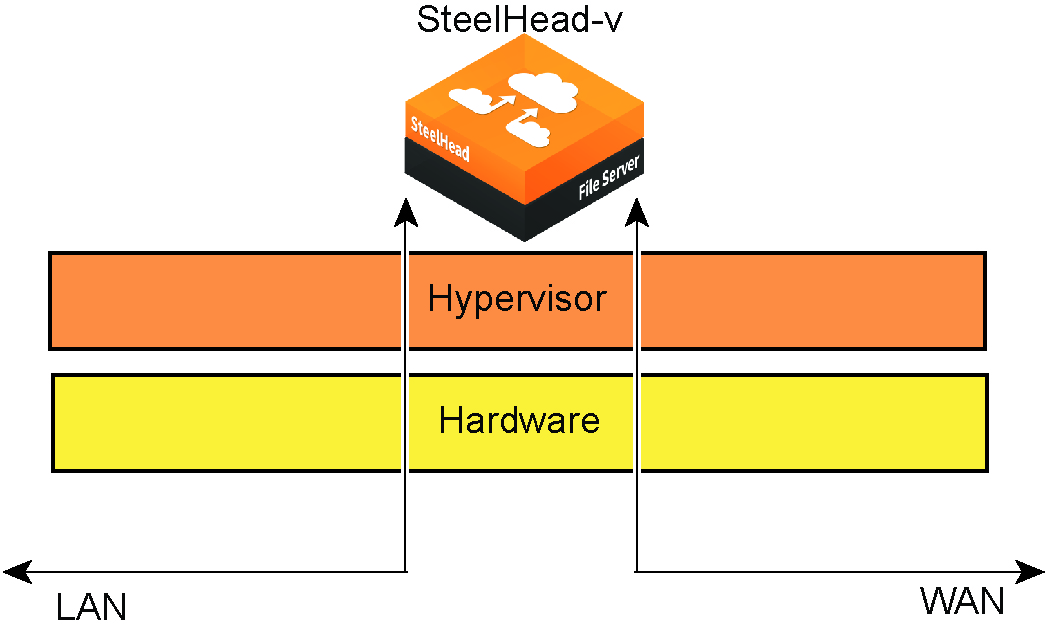

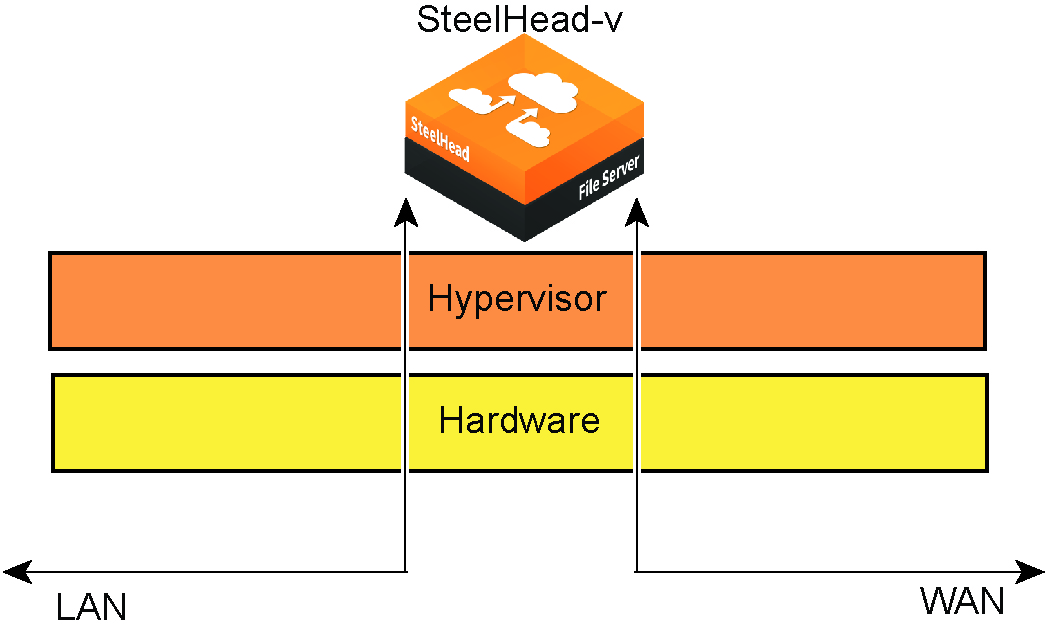

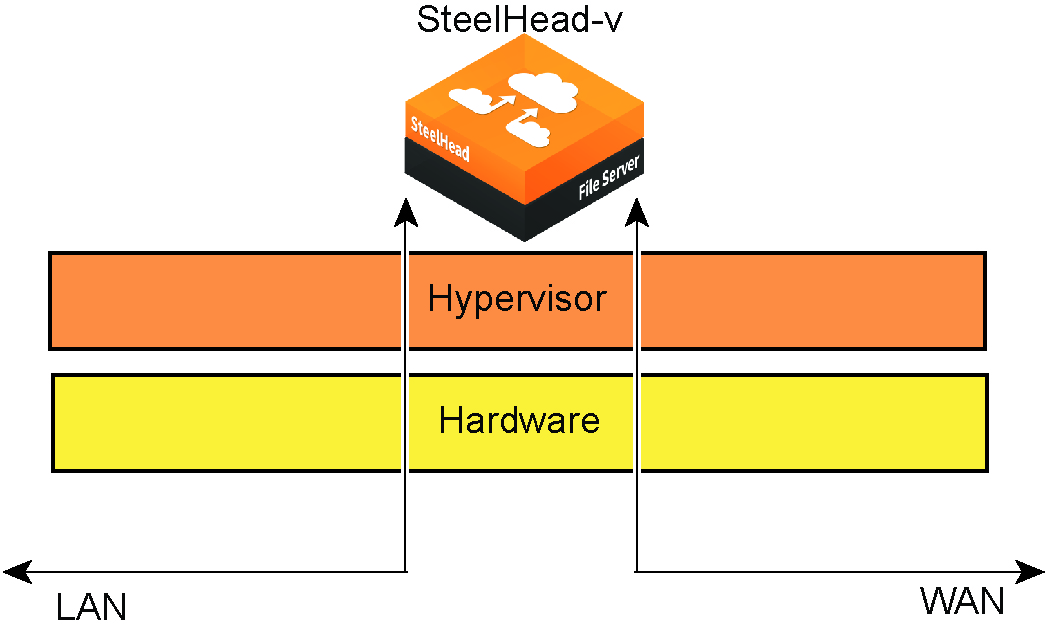

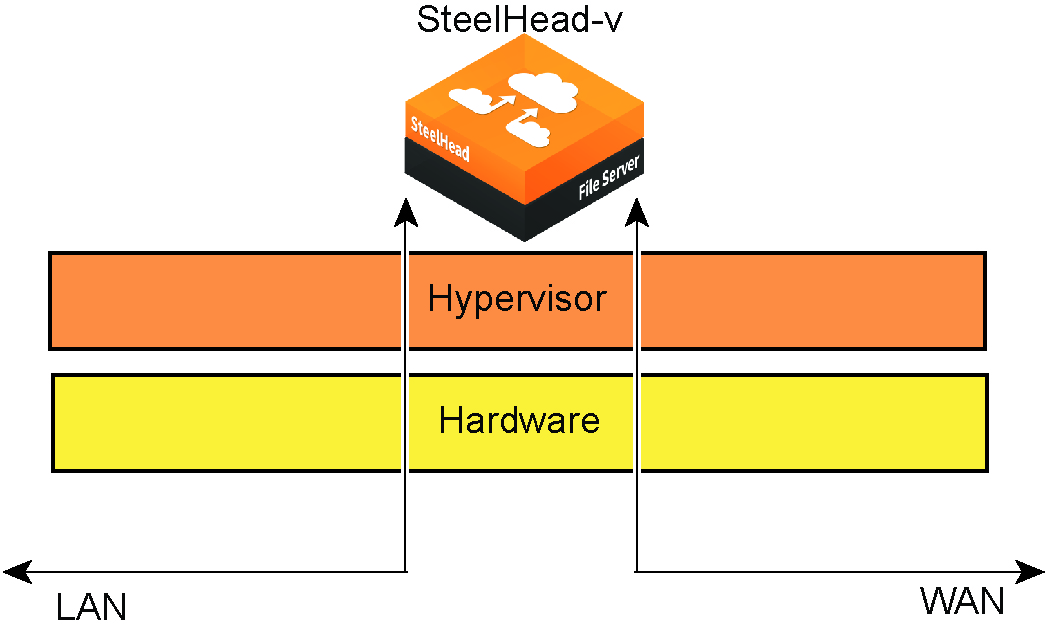

SteelHead-v runs on VMware ESXi, Microsoft Hyper‑V, and Linux KVM hypervisors installed on industry-standard hardware servers.

SteelHead-v and hypervisor architecture

SteelHead-v enables consolidation and high availability while providing most of the functionality of the physical SteelHead, with these exceptions:

• Virtual Services Platform (VSP) or Riverbed Services Platform (RSP)

• Proxy File Service (PFS)

• Fail-to-wire (unless deployed with a Riverbed NIC)

• Hardware reports such as the Disk Status report

• Hardware-based alerts and notifications, such as a RAID alarm

Starting with RiOS 9.7, bypass cards are supported, and you can deploy SteelHead-v in physical in-path, out-of-path, or virtual in-path modes, or using the Discovery Agent. Physical in-path modes require a bypass card. For information about configuring bypass cards, see the Network and Storage Card Installation Guide. Releases earlier than 9.7 do not support in-path deployments; however, all other modes and the Discovery Agent are supported.

SteelHead-v supports both asymmetric route detection and connection forwarding. You can make SteelHead-v highly available in active-active configurations with RiOS data store synchronization, or in serial clusters.

After you license and obtain a serial number for SteelHead-v appliances, you can manage them across the enterprise from a Riverbed SteelCentral Controller for SteelHead (SCC). For best results, we recommend that the version of SCC match, or be more recent than, the SteelHead-v version.
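The SCC guidance in this chapter (SCC 9.2 or later for 9.2 and later SteelHeads, and an SCC release that matches or is newer than the SteelHead-v release) can be checked mechanically. This Python sketch uses hypothetical helper names and assumes purely numeric dotted release strings. It compares versions as integer tuples because a plain string comparison would rank "9.10" below "9.2".

```python
def parse_version(text: str) -> tuple:
    """Turn a purely numeric dotted release such as "9.12.1" into (9, 12, 1)."""
    return tuple(int(part) for part in text.split("."))


def check_scc(scc_version: str, steelhead_version: str) -> str:
    """Classify an SCC/SteelHead-v pairing using the guidance in this chapter."""
    scc = parse_version(scc_version)
    steelhead = parse_version(steelhead_version)
    if steelhead >= (9, 2) and scc < (9, 2):
        return "unsupported"        # SCC releases before 9.2 cannot manage 9.2+ SteelHeads
    if scc < steelhead:
        return "not recommended"    # SCC should match or be newer than SteelHead-v
    return "ok"


print(check_scc("9.2", "9.10"))  # not recommended
```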

SteelHead-v supports up to 24 virtual CPUs and 10 interfaces.

SteelHead-v optimization

With SteelHead-v, you can solve a range of problems affecting WANs and application performance, including:

• Insufficient WAN bandwidth

• Inefficient transport protocols in high-latency environments

• Inefficient application protocols in high-latency environments

RiOS intercepts client-server connections without interfering with normal client-server interactions, file semantics, or protocols. All client requests are passed through to the server normally, while relevant traffic is optimized to improve performance.

RiOS uses these optimization techniques:

• Data streamlining - SteelHead products (SteelHead-v, SteelHeads, and Client Accelerator Controller) can reduce WAN bandwidth utilization by 65 to 98 percent for TCP-based applications using data streamlining. In addition to traditional techniques such as data compression, RiOS uses a Riverbed proprietary algorithm called Scalable Data Referencing (SDR). SDR breaks up TCP data streams into unique data chunks that are stored on the hard disk (RiOS data store) of the device running RiOS (a SteelHead or Client Accelerator Controller host system). Each data chunk is assigned a unique integer label (reference) before it is sent to a peer RiOS device across the WAN. When the same byte sequence is seen again in future transmissions from clients or servers, the reference is sent across the WAN instead of the raw data chunk. The peer RiOS device (SteelHead-v, SteelHead, or Client Accelerator Controller) uses this reference to find the original data chunk in its data store to reconstruct the original TCP data stream.

• Transport streamlining - SteelHead-v uses a generic latency optimization technique called transport streamlining. Transport streamlining uses a set of standards-based and proprietary techniques to optimize TCP traffic between SteelHeads. These techniques:

– ensure that efficient retransmission methods, such as TCP selective acknowledgments, are used.

– negotiate optimal TCP window sizes to minimize the impact of latency on throughput.

– maximize throughput across a wide range of WAN links.

• Application streamlining - In addition to data and transport streamlining optimizations, RiOS can apply application-specific optimizations for certain application protocols: for example, CIFS, MAPI, NFS, TDS, HTTP, and Oracle Forms.

• Management streamlining - These tools include:

– Autodiscovery process - Autodiscovery enables SteelHead-v, the SteelHead, and the Client Accelerator Controller to automatically find remote SteelHead installations and to optimize traffic using them. Autodiscovery relieves you of having to manually configure large amounts of network information. The autodiscovery process enables administrators to control and secure connections, specify which traffic is optimized, and specify peers for optimization.

Enhanced autodiscovery automatically discovers the last SteelHead in the network path of the TCP connection. In contrast, the original autodiscovery protocol automatically discovers the first SteelHead in the path. The difference is seen only in environments where there are three or more SteelHeads in the network path for connections to be optimized.

Enhanced autodiscovery works with SteelHeads running the original autodiscovery protocol, but it is not the default. When enhanced autodiscovery is enabled on a SteelHead that is peering with other appliances using the original autodiscovery method in a “mixed” environment, the determining factor for peering is whether the next SteelHead along the path uses original autodiscovery or enhanced autodiscovery (regardless of the setting on the first appliance).

If the next SteelHead along the path is using original autodiscovery, the peering terminates at that appliance (unless peering rules are configured to modify this behavior). Alternatively, if the next SteelHead along the path is using enhanced autodiscovery, the enhanced probing for a peer continues a step further to the next appliance in the path. If probing reaches the final SteelHead in the path, that appliance becomes the peer.

– SteelCentral Controller for SteelHead - The SCC enables remote SteelHeads to be automatically configured and monitored. It also gives you a single view of the data reduction and health of the SteelHead network.

– Client Accelerator Controller - The Client Accelerator Controller is the management appliance you use to track the individual health and performance of each deployed software client and to manage enterprise client licensing. It enables you to see who is connected, view their data reduction statistics, and perform support operations such as resetting connections, pulling logs, and automatically generating traces for troubleshooting. You can perform all of these management tasks without end-user input.
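SDR itself is proprietary, and this chapter does not describe how it chooses chunk boundaries. Purely as an illustration of the reference-substitution idea described under data streamlining, this Python sketch assumes fixed-size chunks and uses in-memory dictionaries in place of the RiOS data stores; the Encoder and Decoder classes are hypothetical names.

```python
from dataclasses import dataclass, field

CHUNK_SIZE = 8  # tiny fixed-size chunks for illustration only; SDR's chunking differs


@dataclass
class Encoder:
    """Sending side: remembers the integer label assigned to each chunk."""
    labels: dict = field(default_factory=dict)

    def encode(self, stream: bytes) -> list:
        tokens = []
        for i in range(0, len(stream), CHUNK_SIZE):
            chunk = stream[i:i + CHUNK_SIZE]
            if chunk in self.labels:
                tokens.append(("ref", self.labels[chunk]))  # seen before: send only the label
            else:
                label = len(self.labels)                   # next unused integer label
                self.labels[chunk] = label
                tokens.append(("raw", label, chunk))        # first sighting: send label and data
        return tokens


@dataclass
class Decoder:
    """Receiving side: a store mapping labels back to chunks."""
    store: dict = field(default_factory=dict)

    def decode(self, tokens: list) -> bytes:
        out = bytearray()
        for token in tokens:
            if token[0] == "raw":
                _, label, chunk = token
                self.store[label] = chunk  # learn the new chunk
                out += chunk
            else:
                out += self.store[token[1]]  # rebuild from a previously stored chunk
        return bytes(out)


enc, dec = Encoder(), Decoder()
data = b"ABCDEFGH" * 3 + b"12345678"
tokens = enc.encode(data)
print([t[0] for t in tokens])  # ['raw', 'ref', 'ref', 'raw']
```

Sending the same data a second time produces only references, which is where the bandwidth saving comes from.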
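The transport streamlining point about window sizes follows from standard TCP arithmetic rather than anything specific to RiOS: a single connection can have at most one window of unacknowledged data in flight per round trip, so throughput is bounded by window / RTT, and filling a link requires a window of at least bandwidth × RTT (the bandwidth-delay product). This Python sketch uses hypothetical helper names and integer milliseconds to avoid floating-point rounding; it does not model how RiOS negotiates windows.

```python
def bdp_bytes(bandwidth_bps: int, rtt_ms: int) -> int:
    """Bandwidth-delay product: bytes that must be in flight to keep the link full."""
    return bandwidth_bps * rtt_ms // (8 * 1000)


def max_throughput_bps(window_bytes: int, rtt_ms: int) -> float:
    """Upper bound on single-connection TCP throughput for a given window size."""
    return window_bytes * 8 * 1000 / rtt_ms


print(bdp_bytes(100_000_000, 80))        # 1000000 bytes needed on a 100 Mbps, 80 ms link
print(max_throughput_bps(65_535, 80))    # 6553500.0 bps with a classic 64 KB window
```

On that example link, an unscaled 65,535-byte window limits a connection to roughly 6.55 Mbps, well below the 100 Mbps available.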
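The peer-selection behavior of original and enhanced autodiscovery in a mixed environment can be simulated in a few lines. This Python sketch is a simplified model under stated assumptions: each remote SteelHead is reduced to its autodiscovery mode, peering rules are ignored, and find_peer is a hypothetical name.

```python
from typing import List, Optional


def find_peer(path_modes: List[str]) -> Optional[int]:
    """Return the index of the SteelHead that becomes the optimization peer.

    path_modes lists the autodiscovery mode ("original" or "enhanced") of each
    SteelHead after the initiating one, ordered from the client side toward the
    server. The initiating appliance's own setting is deliberately not an input,
    and peering rules are ignored in this sketch.
    """
    for index, mode in enumerate(path_modes):
        if mode == "original":
            return index              # original autodiscovery: peering stops here
        if index == len(path_modes) - 1:
            return index              # probing reached the final SteelHead
        # enhanced autodiscovery: probing continues to the next appliance
    return None                       # no remote SteelHead on the path


print(find_peer(["enhanced", "original", "enhanced"]))  # 1
```

With every appliance set to original the first remote SteelHead (index 0) is chosen, and with every appliance set to enhanced the last one is chosen, matching the two behaviors described above.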

SteelHead-v is typically deployed on a LAN, with communication between appliances occurring over a private WAN or VPN. Because optimization between SteelHeads typically occurs over a secure WAN, it is not necessary to configure company firewalls to support SteelHead-specific ports.

For detailed information about how SteelHead-v, the SteelHead, and the Client Accelerator Controller work, and about deployment design principles, see the SteelHead Deployment Guide.

Configuring optimization

You configure optimization of traffic using the Management Console or the Riverbed CLI. You configure the traffic that SteelHead-v optimizes and specify the type of action it performs using:

• In-path rules - In-path rules determine the action that a SteelHead-v takes when a connection is initiated, usually by a client. In-path rules are used only when a connection is initiated. Because connections are usually initiated by clients, in-path rules are configured for the initiating, or client-side, SteelHead-v. In-path rules determine SteelHead-v behavior with SYN packets. You configure one of these types of in-path rule actions:

– Auto - Use the autodiscovery process to determine if a remote SteelHead is able to optimize the connection attempted by this SYN packet.

– Pass-through - Allow the SYN packet to pass through the SteelHead. No optimization is performed on the TCP connection initiated by this SYN packet.

– Fixed-target - Skip the autodiscovery process and use a specified remote SteelHead as an optimization peer. Fixed-target rules require at least one remote target SteelHead; you can optionally specify a backup SteelHead.

– Deny - Drop the SYN packet and send a message back to its source.

– Discard - Drop the SYN packet silently.

• Peering rules - Peering rules determine how a SteelHead-v reacts to a probe query. Peering rules are an ordered list of fields that a SteelHead-v uses to match with incoming SYN packet fields (for example, source or destination subnet, IP address, VLAN, or TCP port) as well as the IP address of the probing SteelHead-v. Peering rules are useful in complex networks. Following are the types of peering rule actions:

– Pass - The receiving SteelHead does not respond to the probing SteelHead and allows the SYN+ probe packet to continue through the network.

– Accept - The receiving SteelHead responds to the probing SteelHead and becomes the remote-side SteelHead (the peer) for the optimized connection.

– Auto - If the receiving SteelHead is not using enhanced autodiscovery, Auto has the same effect as Accept. If enhanced autodiscovery is enabled, the SteelHead becomes the optimization peer only if it is the last SteelHead in the path to the server.
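In-path rule selection can be pictured as an ordered table lookup on each SYN. This chapter does not spell out the evaluation order for in-path rules, so this Python sketch assumes top-down, first-match processing and an Auto fallback when nothing matches; InPathRule and match_syn are hypothetical names, real rules can match on more fields than the destination subnet and port used here, and the addresses are documentation examples. The fixed-target entry mirrors the out-of-path caveat later in this chapter (port 7810 on the server-side primary interface).

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass
class InPathRule:
    action: str                     # "auto", "pass-through", "fixed-target", "deny", or "discard"
    dst_subnet: str = "0.0.0.0/0"   # destination subnet to match (default: any IPv4 address)
    dst_port: Optional[int] = None  # destination TCP port to match (None: any port)
    target: Optional[str] = None    # "ip:port" of the remote SteelHead for fixed-target rules


def match_syn(rules: List[InPathRule], dst_ip: str, dst_port: int) -> InPathRule:
    """Return the first rule whose fields match the SYN (assumed top-down, first match)."""
    for rule in rules:
        if ip_address(dst_ip) in ip_network(rule.dst_subnet) and rule.dst_port in (None, dst_port):
            return rule
    return InPathRule("auto")       # assumed fallback: use autodiscovery


rules = [
    InPathRule("pass-through", dst_port=22),
    # Server-side SteelHead is out-of-path, so autodiscovery cannot be used:
    # send connections to port 7810 on its primary interface instead.
    InPathRule("fixed-target", dst_subnet="10.2.0.0/16", target="10.2.0.5:7810"),
    InPathRule("discard", dst_subnet="192.0.2.0/24"),
]

print(match_syn(rules, "10.2.3.4", 445).action)  # fixed-target
```

Because the pass-through rule sits first, SSH to the same 10.2.0.0/16 servers is never optimized, which shows why rule order matters.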

For detailed information about in-path and peering rules and how to configure them, see the SteelHead User Guide.
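The three peering rule actions can be sketched as a decision on the receiving SteelHead. This Python example makes simplifying assumptions: rules match only on the probing SteelHead's subnet, the first matching rule wins, Auto is assumed to be the default when no rule matches, and whether the appliance is last in the path is passed in as a flag. The function names are hypothetical.

```python
from ipaddress import ip_address, ip_network
from typing import List, Tuple


def select_action(rules: List[Tuple[str, str]], probing_ip: str, default: str = "auto") -> str:
    """Walk the ordered (probing-SteelHead subnet, action) list; the first match wins."""
    for subnet, action in rules:
        if ip_address(probing_ip) in ip_network(subnet):
            return action
    return default  # assumed default action when no rule matches


def respond_to_probe(action: str, enhanced_ad: bool, is_last_in_path: bool) -> str:
    """Return "respond" (become the peer) or "forward" (let the SYN+ probe continue)."""
    if action == "accept":
        return "respond"
    if action == "pass":
        return "forward"
    if action == "auto":
        # Without enhanced autodiscovery, Auto behaves like Accept; with it,
        # only the last SteelHead in the path to the server becomes the peer.
        return "respond" if (not enhanced_ad or is_last_in_path) else "forward"
    raise ValueError(f"unknown peering action: {action}")


rules = [("10.1.0.0/16", "pass")]  # hypothetical: let probes from 10.1.x.x continue
action = select_action(rules, "10.9.8.7")
print(action, respond_to_probe(action, enhanced_ad=True, is_last_in_path=False))  # auto forward
```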

SteelHead-v deployment guidelines

You must follow these guidelines when deploying the SteelHead-v package on a hypervisor. If you do not follow the configuration guidelines, SteelHead-v might not function properly or might cause outages in your network.

Network configuration

When you deploy a hypervisor, follow this guideline:

• Ensure that a network loop does not form - An in-path interface is, essentially, a software connection between the lanX_Y and wanX_Y interfaces. Before deploying a SteelHead-v, we strongly recommend that you connect each LAN and WAN virtual interface to a distinct virtual switch and physical NIC.

Connecting LAN and WAN virtual NICs to the same vSwitch or physical NIC could create a loop in the system and might make your hypervisor unreachable.
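Before powering on a SteelHead-v, you could check a planned vNIC layout against this guideline. The following Python sketch works on a hypothetical inventory (the VNic class, loop_risks function, and vSwitch and vmnic names are illustrative, not a hypervisor API) and flags any lanX_Y/wanX_Y pair that shares a virtual switch or a physical NIC.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VNic:
    name: str     # SteelHead-v interface, for example "lan0_0" or "wan0_0"
    vswitch: str  # virtual switch the vNIC is attached to (hypothetical name)
    pnic: str     # physical NIC backing that vSwitch (hypothetical name)


def loop_risks(nics: List[VNic]) -> List[str]:
    """Flag lanX_Y/wanX_Y pairs that share a virtual switch or a physical NIC."""
    by_name = {nic.name: nic for nic in nics}
    problems = []
    for lan in nics:
        if not lan.name.startswith("lan"):
            continue
        wan = by_name.get("wan" + lan.name[3:])  # lanX_Y pairs with wanX_Y
        if wan is None:
            continue
        if lan.vswitch == wan.vswitch:
            problems.append(f"{lan.name}/{wan.name} share vSwitch {lan.vswitch}")
        if lan.pnic == wan.pnic:
            problems.append(f"{lan.name}/{wan.name} share physical NIC {lan.pnic}")
    return problems


print(loop_risks([VNic("lan0_0", "vSwitch1", "vmnic1"), VNic("wan0_0", "vSwitch1", "vmnic2")]))
# ['lan0_0/wan0_0 share vSwitch vSwitch1']
```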

When you deploy SteelHead-v on ESXi, follow these guidelines:

• Enable promiscuous mode for the LAN/WAN vSwitch - Promiscuous mode allows the LAN/WAN SteelHead-v NICs to intercept traffic not destined for the SteelHead installation and is mandatory for traffic optimization on in-path deployments. You must accept promiscuous mode on each in-path virtual NIC. You can enable promiscuous mode through the vSwitch properties in vSphere. For details, see “Installing SteelHead-v on an ESXi virtual machine.”

• Use distinct port groups for each LAN or WAN virtual NIC connected to a vSwitch for each SteelHead-v - If you are running multiple SteelHead-v virtual machines (VMs) on a single virtual host, you must add the LAN (or WAN) virtual NIC from each VM into a different port group (on each vSwitch). Using distinct port groups for each LAN or WAN virtual NIC prevents the formation of network loops.
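When several SteelHead-v VMs share one ESXi host, both ESXi guidelines can be checked together. This Python sketch runs over a hypothetical list of port bindings (the PortBinding fields, VM names, and port group names are illustrative assumptions, not vSphere API objects). It reports in-path vNICs whose effective policy rejects promiscuous mode, and LAN or WAN port groups that more than one SteelHead-v VM uses.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PortBinding:
    vm: str             # SteelHead-v VM name (hypothetical)
    nic: str            # SteelHead-v interface, for example "lan0_0" or "wan0_0"
    vswitch: str        # vSwitch the vNIC is attached to
    port_group: str     # port group on that vSwitch
    promiscuous: bool   # effective policy: True if promiscuous mode is accepted


def esxi_findings(bindings: List[PortBinding]) -> List[str]:
    findings = []
    users = defaultdict(set)  # (vswitch, port_group) -> VMs using it for LAN/WAN vNICs
    for b in bindings:
        if not b.nic.startswith(("lan", "wan")):
            continue  # primary and aux interfaces are not checked here
        if not b.promiscuous:
            findings.append(f"{b.vm}:{b.nic} port group {b.port_group} rejects promiscuous mode")
        users[(b.vswitch, b.port_group)].add(b.vm)
    for (vswitch, port_group), vms in users.items():
        if len(vms) > 1:
            findings.append(f"port group {port_group} on {vswitch} shared by {', '.join(sorted(vms))}")
    return findings


bindings = [
    PortBinding("shv-a", "lan0_0", "vSwitchLAN", "LAN-A", True),
    PortBinding("shv-b", "lan0_0", "vSwitchLAN", "LAN-A", True),  # should use its own group
    PortBinding("shv-a", "wan0_0", "vSwitchWAN", "WAN-A", False),
]
for finding in esxi_findings(bindings):
    print(finding)
```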

Network performance

Follow these configuration tips to improve performance:

• Use at least a gigabit link for LAN/WAN - For optimal performance, connect the LAN/WAN virtual interfaces to physical interfaces that are capable of at least 1 Gbps. For high-capacity models, such as VCX80 and higher, use a 25 Gbps interface.

• Do not share physical NICs - For optimal performance, assign a physical NIC to a single LAN or WAN interface. Do not share physical NICs destined for LAN/WAN virtual interfaces with other VMs running on the hypervisor. Doing so can create performance bottlenecks.

• Ensure that the host has resources for overhead - In addition to reserving the CPU resources needed for the SteelHead-v model, verify that additional unclaimed resources are available. Due to hypervisor overhead, VMs can exceed their configured reservation. For details about hypervisor resource reservation and calculating overhead, see “Licensing, upgrading, and downgrading legacy models.”

• Do not overprovision the physical CPUs - Do not provision more vCPUs across all VMs than there are physical CPU cores. For example, if a hypervisor is running on a quad-core CPU, all the VMs on the host combined should use no more than four vCPUs.

• Use a server-grade CPU for the hypervisor - For example, use a Xeon or Opteron CPU as opposed to an Intel Atom.

• Always reserve RAM - Memory is another very important factor in determining SteelHead-v performance. Reserve the RAM that is needed by the SteelHead-v model, but ensure there is extra RAM for overhead. This overhead can provide a performance boost if the hypervisor exceeds its reserved capacity.

• Virtual RAM should not exceed physical RAM - The total virtual RAM provisioned for all running VMs should not be greater than the physical RAM on the system.

• Do not use low-quality storage for the RiOS data store disk - Ensure that the SteelHead-v RiOS data store disk is backed by a physical storage medium that supports a high number of Input/Output Operations Per Second (IOPS). For example, use NAS, SAN, or dedicated SSD or SATA disk drives to back your virtual disks.

• Do not share host physical disks - To achieve near-native disk I/O performance, do not share host physical disks between VMs. When you deploy SteelHead-v, allocate an unshared disk for the RiOS data store disk.

• Do not use hyperthreading - Hyperthreading can cause contention among the virtual cores, resulting in significant loss of performance.

• Set BIOS power management for performance - If the BIOS power management settings are configurable, set them to maximize performance.
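The CPU and RAM guidelines above reduce to two sums per host. This Python sketch is a rough planning aid under stated assumptions: VmSpec and host_findings are hypothetical names, physical_cores counts real cores only (hyperthreading should not be used), and no specific overhead amount is assumed, so a host whose RAM is exactly fully provisioned is flagged as leaving nothing for overhead. Model-specific resource figures are not included.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VmSpec:
    name: str
    vcpus: int
    ram_gb: int


def host_findings(physical_cores: int, physical_ram_gb: int, vms: List[VmSpec]) -> List[str]:
    """Check the vCPU and RAM provisioning guidelines for one hypervisor host."""
    findings = []
    total_vcpus = sum(vm.vcpus for vm in vms)
    total_ram = sum(vm.ram_gb for vm in vms)
    if total_vcpus > physical_cores:  # do not overprovision the physical CPUs
        findings.append(f"{total_vcpus} vCPUs provisioned on {physical_cores} physical cores")
    if total_ram > physical_ram_gb:   # virtual RAM should not exceed physical RAM
        findings.append(f"{total_ram} GB virtual RAM exceeds {physical_ram_gb} GB physical RAM")
    elif total_ram == physical_ram_gb:
        findings.append("no physical RAM left for hypervisor overhead")
    return findings


print(host_findings(4, 32, [VmSpec("shv", 4, 16), VmSpec("dc", 2, 8)]))
# ['6 vCPUs provisioned on 4 physical cores']
```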

Deployment options

Typically you deploy SteelHead-v on a LAN with communication between appliances taking place over a private WAN or VPN. Because optimization between SteelHeads typically takes place over a secure WAN, it is not necessary to configure company firewalls to support SteelHead-specific ports.

For optimal performance, minimize latency between SteelHead-v appliances and their respective clients and servers. Place the SteelHead-v appliances as close as possible to your network endpoints: place client-side SteelHead-v appliances as close to your clients as possible, and place server-side SteelHead-v appliances as close to your servers as possible.

Ideally, SteelHead-v appliances optimize only traffic that is initiated or terminated at their local sites. The best and easiest way to achieve this traffic pattern is to deploy the SteelHead-v appliances where the LAN connects to the WAN, and not where any LAN-to-LAN or WAN-to-WAN traffic can pass through (or be redirected to) the SteelHead.

For detailed information about deployment options and best practices for deploying SteelHeads, see the SteelHead Deployment Guide.

Before you begin the installation and configuration process, you must select a network deployment.

In-path deployment

You can deploy SteelHead-v in the same scenarios as the SteelHead, with this exception: SteelHead-v software does not provide a failover mechanism like the SteelHead fail-to-wire. For full failover functionality, you must install a Riverbed NIC with SteelHead-v.

Riverbed NICs come in four-port and two-port models. For more information about NICs and SteelHead-v, see “NICs for SteelHead-v.” For information about installing Riverbed NICs, see the Network and Storage Card Installation Guide.

For deployments where a Riverbed bypass NIC is not an option (for example, in a Cisco SRE deployment), we recommend that you do not deploy your SteelHead-v in-path. If you are not using a bypass card, you can still have a failover mechanism by employing either a virtual in-path or an out-of-path deployment. These deployments allow a router using WCCP or PBR to handle failover.

Promiscuous mode is required for in-path deployments.

Virtual in-path deployment

In a virtual in-path deployment, SteelHead-v is virtually in the path between clients and servers. Traffic moves in and out of the same WAN interface, and the LAN interface is not used. This deployment differs from a physical in-path deployment in that a packet redirection mechanism, such as WCCP or PBR, directs packets to SteelHead-v appliances that are not in the physical path of the client or server. In this configuration, clients and servers continue to see client and server IP addresses.

On SteelHead-v models with multiple WAN ports, you can deploy WCCP and PBR with the same multiple interface options available on the SteelHead.

For a virtual in-path deployment, attach only the WAN virtual NIC to the physical NIC, and configure the router using WCCP or PBR to forward traffic to the SteelHead-v for optimization. You must also enable in-path out-of-path (OOP) deployment on SteelHead-v.

Out-of-path deployment

In an out-of-path deployment, SteelHead-v is not in the direct path between the client and the server. Servers see the IP address of the server-side SteelHead installation rather than the client IP address, which might affect security policies.

For a virtual OOP deployment, connect the primary interface to the physical in-path NIC and configure the router to forward traffic to this NIC. You must also enable OOP on SteelHead-v.

These caveats apply to server-side OOP SteelHead-v configuration:

• OOP configuration does not support autodiscovery. You must create a fixed-target rule on the client-side SteelHead.

• You must create an OOP connection from an in-path or logical in-path SteelHead and direct it to port 7810 on the primary interface of the server-side SteelHead. This setting is mandatory.

• Interception is not supported on the primary interface.

• An OOP configuration provides nontransparent optimization from the server perspective. Clients connect to servers, but servers see the connections as originating from the server-side SteelHead. This affects log files, server-side ACLs, and bidirectional applications such as rsh.

• You can use OOP configurations along with in-path or logical in-path configurations.

SteelHead-v models

Starting with RiOS 9.6, SteelHead-v models are based on performance tiers. Prior to RiOS 9.6, SteelHead-v models were based on the hardware capacities of equivalent physical SteelHead appliance models.

SteelHead-v model families are independent. You cannot upgrade an xx55 model to a performance tier model. The xx55 virtual models require RiOS 8.0 or later. Performance tier models require RiOS 9.6 or later.

Confirm that you have the physical resources required for the SteelHead-v model you are installing before you download and install SteelHead-v.

For information about the technical specifications for SteelHead-v models, go to this web address:

Flexible RiOS data store

Starting with RiOS 9.8, the flexible data store feature supports smaller data store sizes, down to a minimum of 12 GB.

To change the disk size of a running SteelHead-v, you must first power off the VM. From the Settings page, you can expand the RiOS data store disk, or remove it and replace it with a smaller disk. Attempting to shrink the existing disk in place does not work.

Modifying the disk size automatically clears the RiOS data store.

If you provide a disk size larger than the configured RiOS data store for the model, the entire disk is partitioned, but only the allotted amount for the model is used.

The memory and CPU requirements for each model are mandatory; the model does not run without them. Flexible RiOS data store is not supported for the older xx50 models.
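The sizing rules above can be summarized as: reject disks below 12 GB, use a smaller disk in full, and cap a larger disk at the model's configured data store size. This Python sketch assumes RiOS 9.8 or later and a performance tier or xx55 model (it does not check either); the function name and the 300 GB model size in the example are hypothetical, so take real values from the model specifications.

```python
MIN_DATASTORE_GB = 12  # flexible RiOS data store minimum (RiOS 9.8 and later)


def usable_datastore_gb(disk_gb: int, model_datastore_gb: int) -> int:
    """Return the portion of the data store disk that RiOS uses.

    A disk larger than the model's configured data store is fully partitioned,
    but only the model's allotment is used; a smaller disk is used in full.
    """
    if disk_gb < MIN_DATASTORE_GB:
        raise ValueError(f"data store disk must be at least {MIN_DATASTORE_GB} GB")
    return min(disk_gb, model_datastore_gb)


print(usable_datastore_gb(500, 300))  # 300: the extra 200 GB is partitioned but unused
```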

Multiple RiOS data stores

SteelHead-v models VCX5055 through VCX7055 running RiOS 8.6 or later, and VCX70 through VCX110 running RiOS 9.6 or later, support multiple RiOS data stores using Fault Tolerant Storage (FTS). We recommend that all RiOS data stores on an appliance be the same size.

To add additional data stores, you must power off the VM.

NICs for SteelHead-v

SteelHead-v models are not limited to a fixed number of NIC interfaces. However, the in-path pair limit is four (four LAN and four WAN interfaces), including bypass cards. If you want to use the SteelHead-v bypass feature, you are limited to the number of hardware bypass pairs the model can support. The SteelHead-v bypass feature is available on all supported virtualization platforms.

Each SteelHead-v requires a primary and an auxiliary interface, which are the first two interfaces added. If you add more interface pairs to the VM, they are added as in-path optimization interfaces. Total bandwidth and connection limits still apply.
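The interface rules above (primary and auxiliary first, then in-path pairs, at most four pairs) can be sketched as a mapping from VM network adapters, in the order they were added, to SteelHead-v roles. This Python example is hypothetical: the assign_roles name, the LAN-then-WAN order within each pair, and the lan0_N/wan0_N naming are assumptions for illustration, not confirmed adapter ordering.

```python
from typing import List

MAX_INPATH_PAIRS = 4  # four LAN plus four WAN interfaces, bypass cards included


def assign_roles(vnic_count: int) -> List[str]:
    """Map VM network adapters, in the order added, to SteelHead-v roles.

    Assumption: each added pair is ordered LAN then WAN and named lan0_N / wan0_N.
    """
    if vnic_count < 2:
        raise ValueError("a primary and an auxiliary interface are required")
    extra = vnic_count - 2
    if extra % 2:
        raise ValueError("in-path interfaces must be added in LAN/WAN pairs")
    if extra // 2 > MAX_INPATH_PAIRS:
        raise ValueError(f"at most {MAX_INPATH_PAIRS} in-path pairs are supported")
    roles = ["primary", "aux"]
    for n in range(extra // 2):
        roles += [f"lan0_{n}", f"wan0_{n}"]
    return roles


print(assign_roles(6))  # ['primary', 'aux', 'lan0_0', 'wan0_0', 'lan0_1', 'wan0_1']
```

The four-pair limit plus the primary and auxiliary interfaces gives a maximum of 2 + 4 × 2 = 10 interfaces, consistent with the 10-interface figure earlier in this chapter.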

Riverbed NICs provide hardware-based fail-to-wire and fail-to-block capabilities for SteelHead-v. The configured failure mode is triggered if the host loses power or is unable to run the SteelHead-v guest, if the SteelHead-v guest is powered off, or if the SteelHead-v guest experiences a significant fault (using the same logic as the physical SteelHead).

For procedures to install these cards, see the Network and Storage Card Installation Guide.

Riverbed NICs are available in two-port and four-port configurations. These cards are supported for SteelHead-v appliances running on ESXi only.

Riverbed NICs for SteelHead-v | Orderable part number | SteelHead-v models |

Two-Port 1-GbE TX Copper NIC | NIC-001-2TX | All |

Four-Port 1-GbE TX Copper NIC | NIC-002-4TX | 1050L, 1050M, 1050H, 2050L, 2050M, and 2050H; VCX255, VCX555, VCX755, VCX1555, VCX5055, and VCX7055; VCX10 through VCX110 |

Two-Port 10-GbE Multimode Fiber NIC (direct I/O only) | NIC-008-2SR | VCX5055 and VCX7055; VCX70 through VCX110 |

These cards are supported for SteelHead-v appliances running on Hyper‑V, KVM, and ESXi.

Riverbed NICs for SteelHead-v | Orderable part number | SteelHead-v models |

Four-Port 1-GbE Copper Base-T | NIC-1-001G-4TX-BP | VCX10 through VCX110 |

Four-Port 1-GbE Fiber SX | NIC-1-001G-4SX-BP | VCX10 through VCX110 |

You must use Riverbed NICs for fail-to-wire or fail-to-block with SteelHead-v. NICs from other vendors that lack a bypass feature are supported for functionality other than fail-to-wire and fail-to-block, if the hypervisor supports them.

Requirements for SteelHead-v deployment with a NIC (ESXi only)

To successfully install a NIC in an ESXi host for SteelHead-v, you need these items:

• ESXi host with a PCIe slot.

• vSphere client access to the ESXi host.

• VMware ESXi 5.1 or later and RiOS 8.0.3 or later.

• By default, ESXi does not include the Intel 82580 Gigabit Ethernet network interface driver needed for the Riverbed bypass card. If you do not have this driver installed, you can download it from the VMware website.

• SSH and SCP access to the ESXi host.

The installation procedure in this manual assumes you have already installed a Riverbed NIC following the instructions in the Network and Storage Card Installation Guide.

The number of hardware bypass pairs (that is, one LAN and one WAN port) supported is determined by the model of the SteelHead-v:

• Models V150, V250, and V550: one bypass pair

• Models V1050 and V2050: two bypass pairs (that is, two LAN and two WAN ports)

• Models VCX555, VCX755, VCX1555, VCX5055, and VCX7055: two bypass pairs

• Models VCX10 through VCX110: two bypass pairs

You can install a four-port card in an ESXi host for a SteelHead-v V150, V250, or V550. However, only one port pair is available because the SteelHead-v model determines the number of pairs.
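The bypass pair limits above combine two constraints: the model's limit and the physical ports assigned to the guest (two ports per pair). This Python sketch encodes the model list from this section; the usable_bypass_pairs name is hypothetical, card_ports is assumed to mean the bypass ports assigned to this guest on each card, and the VCX10 through VCX110 entries assume model numbers in steps of 10.

```python
BYPASS_PAIRS_BY_MODEL = {
    **{m: 1 for m in ("V150", "V250", "V550")},
    **{m: 2 for m in ("V1050", "V2050")},
    **{m: 2 for m in ("VCX555", "VCX755", "VCX1555", "VCX5055", "VCX7055")},
    **{f"VCX{n}": 2 for n in range(10, 120, 10)},  # VCX10 through VCX110 (assumed steps of 10)
}


def usable_bypass_pairs(model: str, card_ports: list) -> int:
    """Pairs a guest can use: capped by the model, then by the ports assigned to it."""
    physical_pairs = sum(ports // 2 for ports in card_ports)  # one LAN + one WAN port per pair
    return min(BYPASS_PAIRS_BY_MODEL[model], physical_pairs)


print(usable_bypass_pairs("V250", [4]))  # 1: a four-port card still yields one pair on a V250
```

The second example configuration tested above, one guest using bypass pairs on different NICs, corresponds to passing several entries in card_ports.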

These configurations have been tested:

• Two SteelHead-v guests, each using one physical pair on a single four-port Riverbed NIC

• Two SteelHead-v guests connecting to separate cards

• One SteelHead-v guest connecting to bypass pairs on different NICs

For more information about installing and configuring SteelHead-v with a Riverbed NIC, see “Completing the preconfiguration checklist.”

SteelHead-v on the Cisco SRE

In addition to standard ESXi, you can run SteelHead-v on a Cisco server blade using the SRE platform, which is based on ESXi 5.1. This table lists the SteelHead-v models supported on each Cisco SRE model, along with the required RiOS version, disk configuration, and RAM.

SRE model | SteelHead-v model | RiOS version | Disk configuration | RAM |

910 | V1050H, VCX755H | 6.5.4+, 7+, 8+ | RAID1 | 8 GB |

910 | V1050M, VCX755M | 6.5.4+, 7+, 8+ | RAID1 | 4 GB |

900 | V1050M, VCX755M | 6.5.4+, 7+, 8+ | RAID1 | 4 or 8 GB |

700/710 | V250H | 6.5.4+, 7+, 8+ | Single disk | 4 GB |

300 | Not Supported | | | |

For more information about deploying SteelHead-v on a Cisco SRE blade, search the Riverbed Knowledge Base at https://supportkb.riverbed.com/support/index?page=home.