Configuring the Edge

This chapter describes the process for configuring Edge at the branch office. It includes the following topics:

SteelFusion Edge appliance architecture

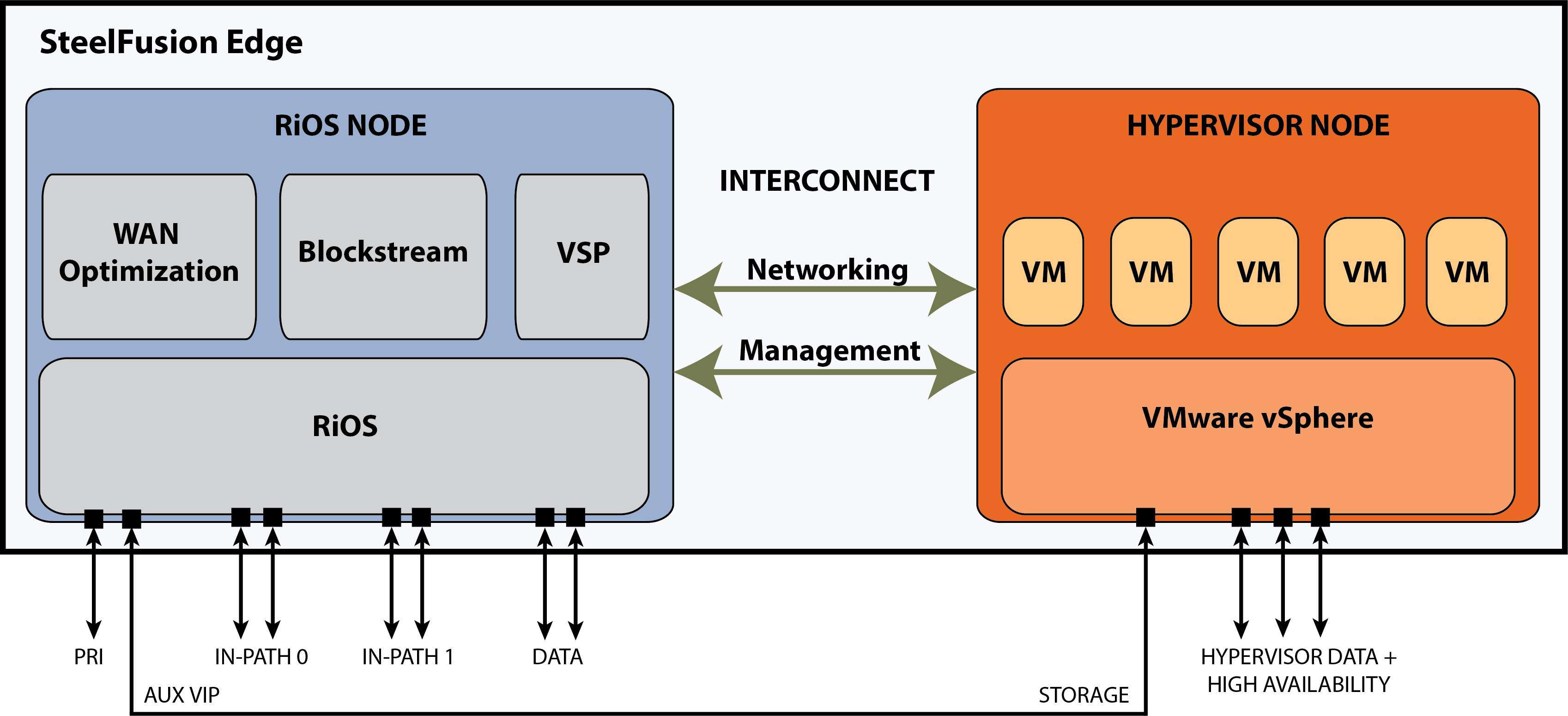

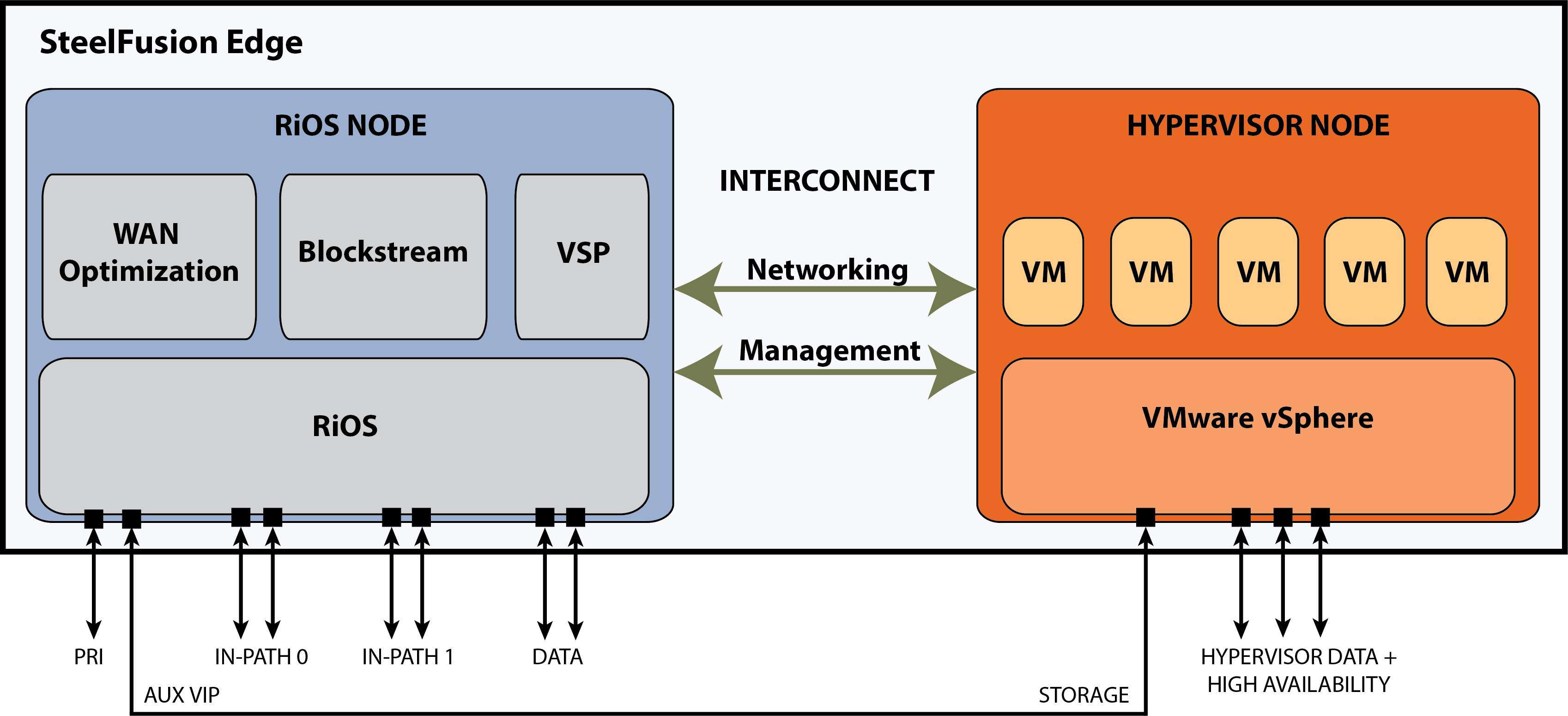

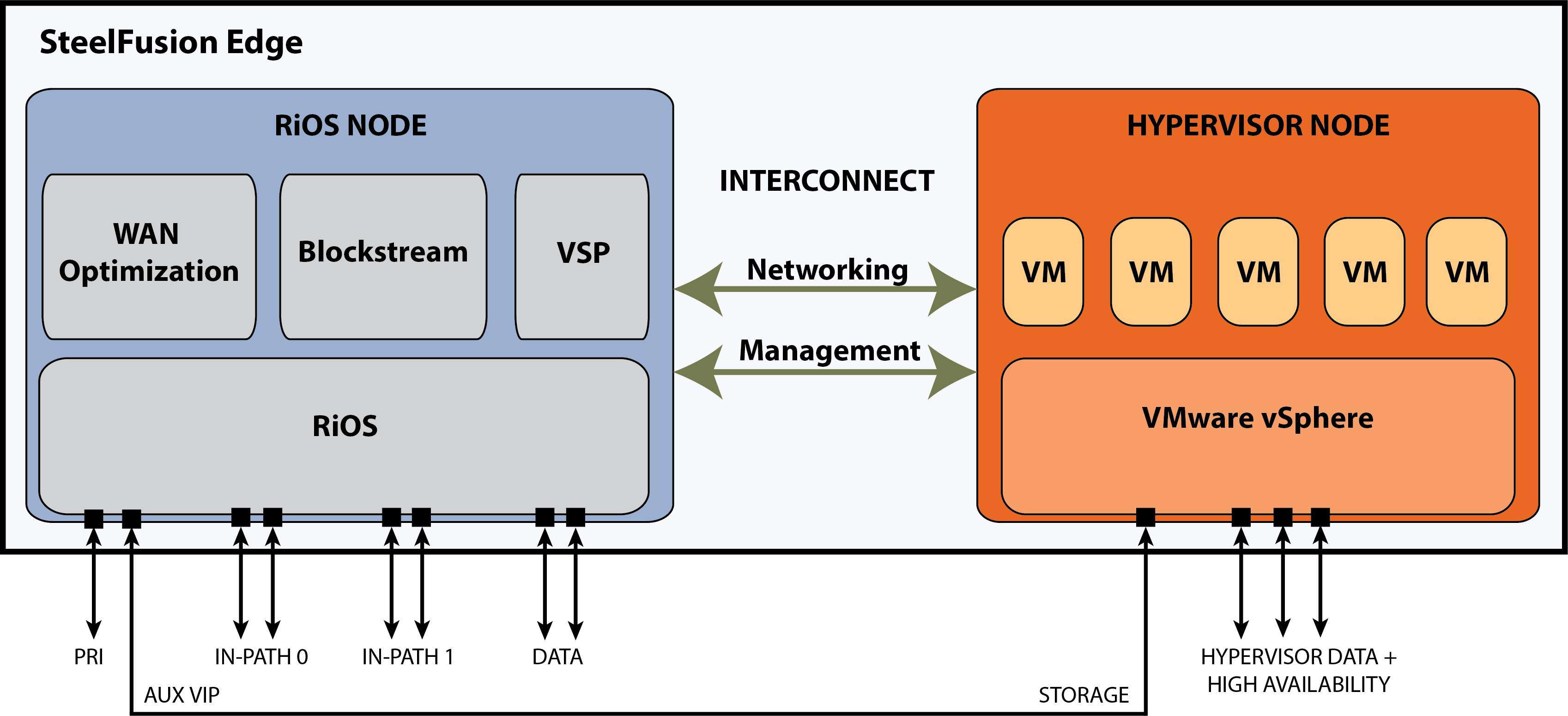

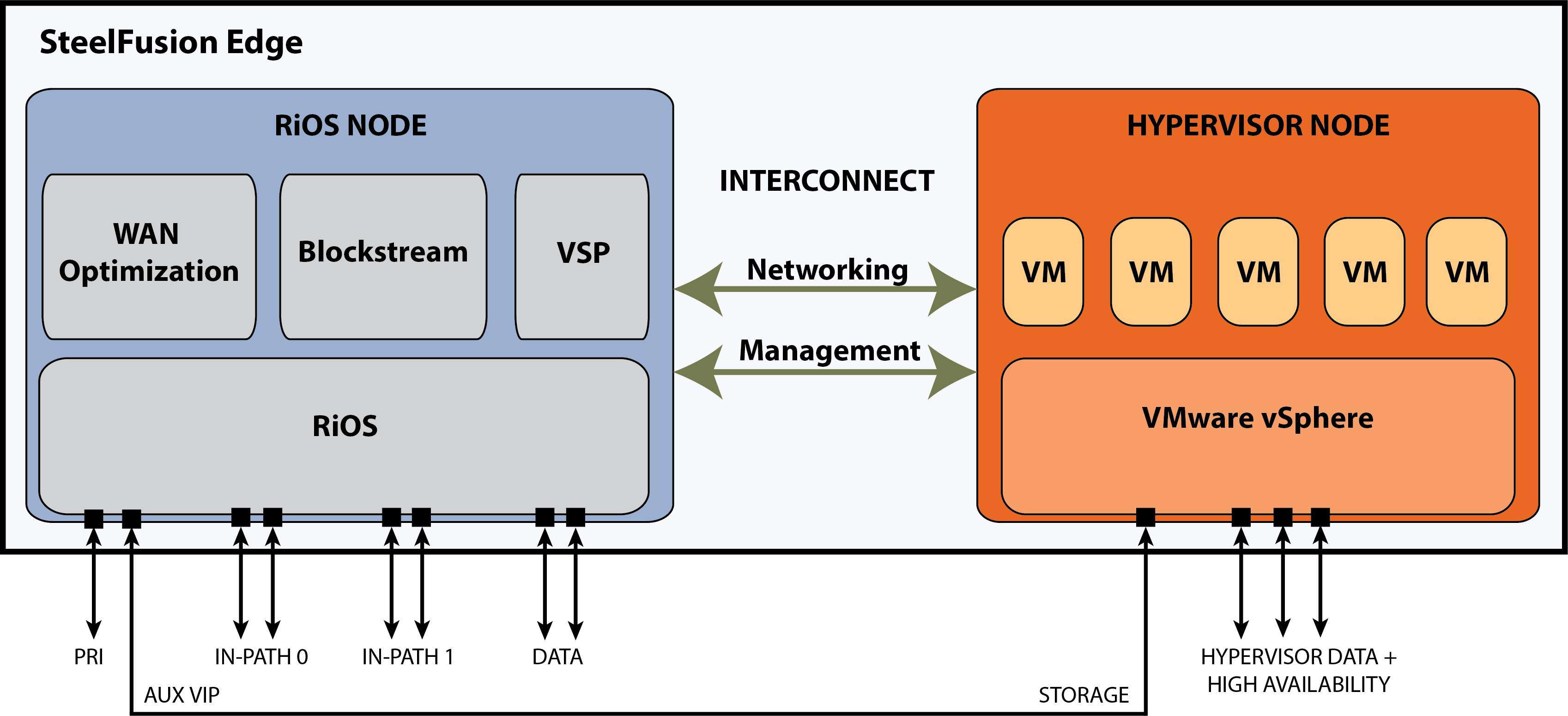

The Edge contains two distinct computing nodes within the same hardware chassis (Figure 4‑1). A SteelFusion Edge appliance configured for an NFS/file deployment has the same basic internal architecture as an Edge configured for a block storage deployment. As a result, most existing SteelFusion features and services, such as HA, prepopulation, local storage, pinned storage, and snapshots, continue to be supported. The two-node design provides hardware resource separation and isolation.

The two nodes are as follows:

• The RiOS node provides networking, WAN optimization, direct attached storage available for SteelFusion use, and VSP functionality.

• The hypervisor node provides hypervisor-based hardware resources and software virtualization.

For details on the RiOS and hypervisor nodes, see the SteelFusion Edge Installation and Configuration Guide and the SteelFusion Edge Hardware and Maintenance Guide.

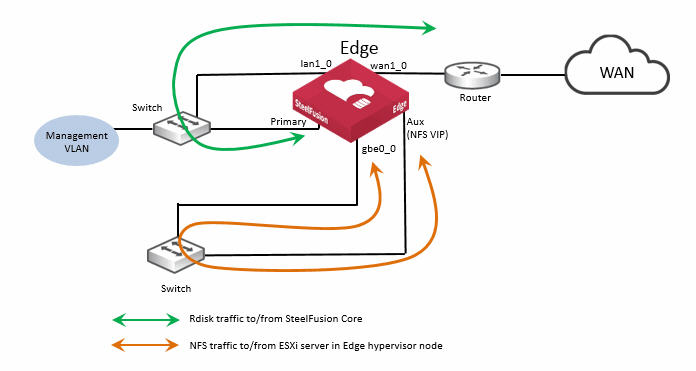

Figure: Edge architecture in NFS/file mode

Although not shown in the architecture diagram, with SteelFusion version 5.0 and later, there is additional functionality within the RiOS node to support an NFS file server. At a high level, the NFS file server in the RiOS node interacts with the existing BlockStream and VSP components to provide branch ESXi servers, including the Edge hypervisor node, with exported fileshares. These fileshares have been projected across the WAN by SteelFusion Core from centralized NFS storage. With SteelFusion Edge deployed in NFS/file mode, the internal interconnect between the RiOS node and hypervisor node is only used for management traffic related to health and status of the architecture. The storage interconnect is not available for use by the NFS file server; this is by design.

To enable the hypervisor node to mount exported fileshares from the NFS file server in RiOS, you must create external network connections. In Figure 4‑1, the external network connection is shown between the RiOS node auxiliary (AUX) network interface port and one of the hypervisor node data network ports. The AUX network interface port is configured with a virtual IP (VIP) address, which is used to mount the exports on the hypervisor. Depending on your requirements, it may be possible to use alternative network interfaces for this external connectivity. For more details, see “Configuring interface routing” on page 37.

Edge interface and port configurations

This section describes a typical port configuration for the Edge. You might require additional routing configuration depending on your deployment scenario.

This section includes the following topics:

Configuring Edge for jumbo frames

You can have one or more external application servers in the branch office that use the exports accessible from the Edge NFS file server. If your network infrastructure supports jumbo frames, we recommend that you configure the connection between the Edge and application servers as described below.

In addition to configuring Edge for jumbo frames, you must configure the external application servers and any switches, routers, or other network devices between Edge and the application server for jumbo frame support. The examples below describe the procedure for the Edge primary interface and Edge Ethernet interfaces. If you are using the auxiliary interface then follow the same guidance as for the primary interface but within the Auxiliary Interface box of the Edge Management Console.
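The requirement that every device between Edge and the application server supports jumbo frames can be illustrated with a short sketch. It treats the usable MTU of a path as the smallest MTU of any hop and reports the hops that would block 9000-byte frames. The device names and MTU values are hypothetical and are not taken from any SteelFusion configuration.

```python
JUMBO_MTU = 9000  # MTU value this guide specifies for jumbo frames


def path_mtu(hop_mtus):
    """Return the largest MTU that every hop on the path can carry."""
    if not hop_mtus:
        raise ValueError("at least one hop is required")
    return min(hop_mtus.values())


def hops_blocking_jumbo(hop_mtus, required=JUMBO_MTU):
    """List the hops whose MTU is below the required jumbo frame size."""
    return sorted(name for name, mtu in hop_mtus.items() if mtu < required)


# Hypothetical branch path: names and values are illustrative only.
branch_path = {
    "edge-primary": 9000,
    "branch-switch": 1500,
    "esxi-vmk1": 9000,
}

print(path_mtu(branch_path))
print(hops_blocking_jumbo(branch_path))
```

In this example a single switch left at a 1500-byte MTU limits the whole path, which is why the Edge setting alone is not sufficient.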

To configure Edge primary interface for jumbo frames

1. In the Edge Management Console, choose Networking > Networking: Base Interfaces.

2. In the Primary Interface box:

• Select Enable Primary Interface.

• Select the Specify IPv4 Address Manually option, and specify the correct values for your implementation.

• For the MTU setting, specify 9000 bytes.

3. Click Apply to apply the settings to the current configuration.

4. Click Save to save your changes permanently.

For more details about interface settings, see the SteelFusion Edge User Guide.

To configure Edge Ethernet interfaces for jumbo frames

1. In the Edge Management Console, choose Networking > Networking: Data Interfaces.

2. In the Data Interface Settings box:

• Select the required data interface (for example: eth1_0).

• Select Enable Interface.

• Select the Specify IPv4 Address Manually option and specify the correct values for your implementation.

• For the MTU setting, specify 9000 bytes.

3. Click Apply to apply the settings to the current configuration.

4. Click Save to save your changes permanently.

For more details about interface settings, see the SteelFusion Edge User Guide. For more information about jumbo frames, see “Configure jumbo frames” on page 118.

Moving the Edge to a new location

If you began your SteelFusion deployment by initially configuring and loading the Edge appliance in the data center, you might have to change the IP addresses of various network ports on the Edge after you move it to its final location in the remote office.

The Edge configuration includes the IP address of the Core and it initiates the connection to the Core when it is active. Because Core does not track the Edge by IP address, it is safe to change the IP addresses of the network ports on the Edge when you move it to its final location.
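The following conceptual sketch shows why an IP address change on the Edge is harmless when the Edge initiates the connection and the Core identifies it by something other than its IP address. The `CoreRegistry` class, the identifier, and the addresses are hypothetical; this is a toy model for illustration and does not describe how Core is implemented.

```python
class CoreRegistry:
    """Toy model of a Core that keys Edge sessions by identifier, not by IP."""

    def __init__(self):
        # Maps an Edge identifier to the source IP of its latest connection.
        self._sessions = {}

    def accept_connection(self, edge_id, source_ip):
        """Record an inbound connection; return True if the Edge was already known."""
        known = edge_id in self._sessions
        self._sessions[edge_id] = source_ip
        return known

    def current_ip(self, edge_id):
        """Return the source IP most recently seen for this Edge."""
        return self._sessions[edge_id]


core = CoreRegistry()
# First connection while the Edge is staged in the data center.
core.accept_connection("Branch-Edge-01", "10.1.1.50")
# Same Edge reconnects from the branch office with a new address.
still_known = core.accept_connection("Branch-Edge-01", "192.168.20.50")
```

Because the lookup key is the identifier, the second connection is recognized as the same Edge even though its source address changed.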

If the virtual IP (VIP) of the Edge needs to be reconfigured, you must unmount and then remount the datastores used by the hypervisor connected to this Edge to make them accessible again. For more details, see https://supportkb.riverbed.com/support/index?page=content&id=S30235.
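As a rough illustration of the unmount and remount step, the sketch below builds the command lines you might run on the ESXi host for each affected datastore. It assumes the standard VMware `esxcli storage nfs remove` and `esxcli storage nfs add` commands; check the options against your ESXi version and treat the knowledge base article as the authoritative procedure. The datastore name, export path, and VIP address are hypothetical.

```python
def remount_commands(datastores, new_vip):
    """Build esxcli command lines that unmount each NFS datastore and mount it via new_vip.

    datastores maps a datastore (volume) name to its exported share path.
    The commands are only constructed here, not executed.
    """
    commands = []
    for volume, share in datastores.items():
        # Unmount the datastore that still points at the old VIP.
        commands.append(["esxcli", "storage", "nfs", "remove", f"--volume-name={volume}"])
        # Mount the same export again using the new VIP.
        commands.append([
            "esxcli", "storage", "nfs", "add",
            f"--host={new_vip}", f"--share={share}", f"--volume-name={volume}",
        ])
    return commands


# Hypothetical datastore and address, for illustration only.
for command in remount_commands({"branch_ds1": "/exports/ds1"}, "192.168.20.60"):
    print(" ".join(command))
```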

SteelFusion Edge ports

This table summarizes the ports that connect the SteelFusion Edge appliance to your network. For more information about the Edge appliances, see the SteelFusion Edge Hardware and Maintenance Guide.

| Port | Description |
| --- | --- |
| Primary (PRI) | Connects Edge to a VLAN switch through which you can connect to the Management Console and the Edge CLI. This interface is also used to connect to the Core through the Edge RiOS node in-path interface. |
| Auxiliary (AUX) | Connects the Edge to the management VLAN. The IP address for the auxiliary interface must be on a subnet different from the primary interface subnet. You can connect a computer directly to the appliance with a crossover cable, enabling you to access the CLI or Management Console of the Edge RiOS node. |
| lan1_0 | The Edge RiOS node uses one or more in-path interfaces to provide Ethernet network connectivity for optimized traffic. Each in-path interface comprises two physical ports: the LAN port and the WAN port. Use the LAN port to connect the Edge RiOS node to the internal network of the branch office. You can also use this port for a connection to the Primary port. A connection to the Primary port enables the blockstore traffic sent between Edge and Core to transmit across the WAN link. |
| wan1_0 | The WAN port is the second of two ports that comprise the Edge RiOS node in-path interface. The WAN port is used to connect the Edge RiOS node toward WAN-facing devices such as a router, firewall, or other equipment located at the WAN boundary. If you need additional in-path interfaces, or different connectivity for in-path (for example, 10 GigE or Fiber), then you can install a bypass NIC in an Edge RiOS node expansion slot. |
| eth0_0 to eth0_1 | These ports are available as standard on the Edge appliance. When configured for use by Edge RiOS node, the ports can provide additional NFS interfaces for storage traffic to external servers. These ports can also provide redundancy for Edge high availability (HA). In such an HA design, we recommend that you use the ports for the heartbeat and BlockStream synchronization between the Edge HA peers. If additional NFS connectivity is required in an HA design, then install a nonbypass data NIC in the Edge RiOS node expansion slot. |
| gbe0_0 to gbe0_3 | These ports are available as standard on the Edge appliance. When configured for use by Edge hypervisor node, the ports provide LAN connectivity to external clients and also for management. The ports are connected to a LAN switch using a straight-through cable. If additional connectivity is required for the hypervisor node, then install a nonbypass data NIC in a hypervisor node expansion slot. There are no expansion slots available for the hypervisor node on the SFED 2100 and 2200 models. There are two expansion slots on the SFED 3100, 3200, and 5100 models. Note: Your hypervisor must have access to the Edge VIP via one of these interfaces. |

Note: All the above interfaces are gigabit capable. Where it is practical, use gigabit speeds on interface ports that are used for NFS traffic.

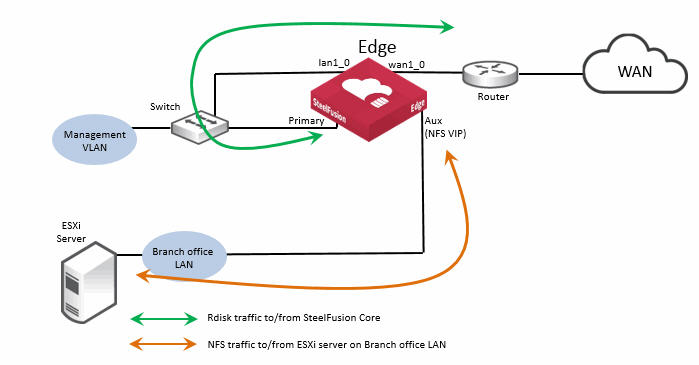

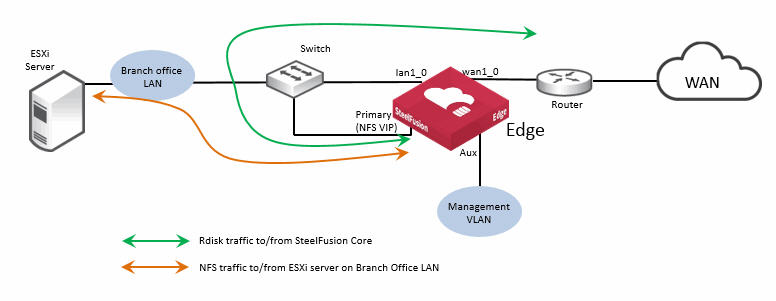

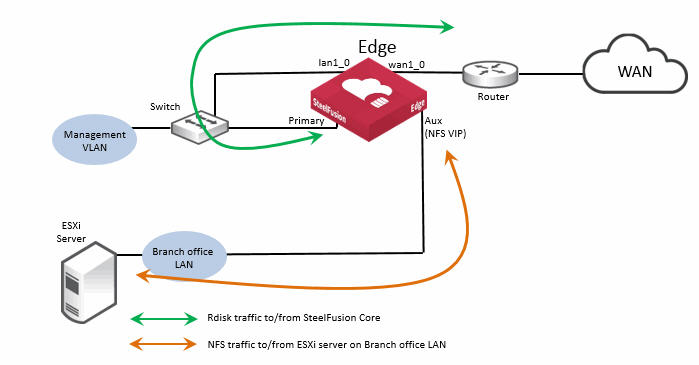

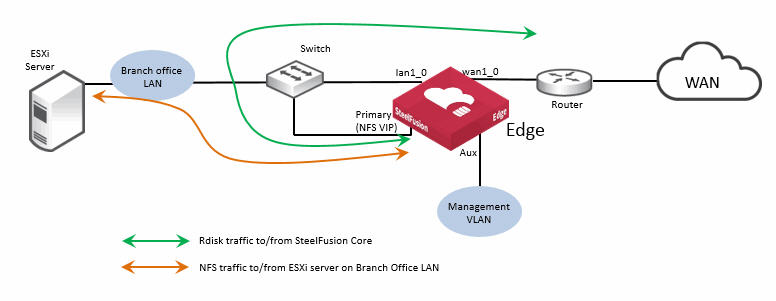

This figure shows a basic branch NFS/file deployment indicating some of the Edge network ports. In this scenario, the ESXi server is installed on the branch LAN, external to the Edge.

Figure: Edge ports used in branch NFS/file deployment - external ESXi server

Note that in this figure, the Edge auxiliary interface (Aux) is labeled NFS VIP. For details on Edge VIP, see “Edge Virtual IP address” on page 50.

Edge Virtual IP address

Unlike block storage, NFSv3 does not support the concept of multipath. To avoid a single point of failure in a high availability (HA) deployment, the general industry-standard solution for NFS file servers is to use a virtual IP (VIP) address. The VIP floats between the network interfaces on file servers that are part of an HA deployment. Clients that access file servers using NFS in an HA deployment are configured to use the VIP address. While a file server that is part of an HA configuration is active, only that server responds to client requests. If the active file server fails for some reason, the standby file server starts responding to client requests via the same VIP address.
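The floating behavior of a VIP can be pictured with a minimal model. The sketch below is a conceptual illustration only, with hypothetical class names, server names, and addresses; it does not represent the Edge HA implementation. Clients always address the VIP, and the model decides which server answers.

```python
class FileServer:
    """A file server with a simple health flag."""

    def __init__(self, name):
        self.name = name
        self.healthy = True


class VipPair:
    """Toy model of an active/standby NFS pair that shares one virtual IP."""

    def __init__(self, vip, active, standby):
        self.vip = vip
        self.active = active
        self.standby = standby

    def responder(self):
        """Return the name of the server that answers requests sent to the VIP."""
        if not self.active.healthy and self.standby.healthy:
            # Failover: the standby takes over the VIP and becomes active.
            self.active, self.standby = self.standby, self.active
        if not self.active.healthy:
            raise RuntimeError("no healthy file server holds the VIP")
        return self.active.name


edge_a = FileServer("edge-a")
edge_b = FileServer("edge-b")
pair = VipPair("192.168.20.60", edge_a, edge_b)

before = pair.responder()   # the active server answers
edge_a.healthy = False      # simulate a failure of the active server
after = pair.responder()    # the standby answers on the same VIP
```

The client-side configuration never changes in this model: only the server behind the VIP does.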

On a related note, if there are NFS file servers in the data center configured for HA, then it is their VIP address that is added to the Core configuration.

At the branch location, because Edge is the NFS file server, it is configured with a VIP address for the ESXi server(s) to access as NFS clients.

Note: This VIP address must be configured even if Edge is not expected to be part of an Edge HA configuration.

The Edge VIP address requires an underlying network interface with a configured IP address on the same IP subnet. The underlying network interface must be reachable by the NFS clients that require access to the fileshares exported by the SteelFusion Edge. The NFS clients in this case are one or more ESXi servers that mount fileshares exported from the Edge and use them as their datastores. The ESXi server in the Edge hypervisor node must also use an external network interface to connect to the configured VIP address. Unlike block storage mode, NFS/file mode does not support using the internal interconnect between the RiOS node and the hypervisor node for this traffic.
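A quick way to sanity-check a planned VIP against the same-subnet requirement is shown in this sketch, which uses the Python standard library `ipaddress` module. The function name and the addresses are hypothetical and serve only as an example.

```python
import ipaddress


def vip_matches_interface(vip, interface_cidr):
    """Return True if vip lies in the subnet of the underlying interface.

    interface_cidr is the interface address with its prefix length,
    for example "192.168.20.50/24".
    """
    interface = ipaddress.ip_interface(interface_cidr)
    return ipaddress.ip_address(vip) in interface.network


# Hypothetical addressing, for illustration only.
print(vip_matches_interface("192.168.20.60", "192.168.20.50/24"))
print(vip_matches_interface("192.168.21.60", "192.168.20.50/24"))
```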

Depending on the Edge appliance model, you may have several options available for network interfaces that could be used for NFS access with the VIP address. By default, these include any of the following: Primary, Auxiliary, eth0_0, or eth0_1. Remember that any of these four interfaces might already be needed for management of the RiOS node, rdisk traffic to and from the Edge in-path interface, or heartbeat and synchronization traffic as part of a SteelFusion Edge HA deployment. Therefore, select a suitable interface based on NFS connectivity requirements and traffic workload.
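The selection process can be sketched as a simple filter over the four default candidates. This example makes a simplifying assumption that an interface already committed to another role is not reused for NFS; in practice you may decide to share an interface based on workload. The lowercase interface labels and role names are hypothetical.

```python
DEFAULT_NFS_CANDIDATES = ("primary", "aux", "eth0_0", "eth0_1")


def free_nfs_interfaces(in_use, candidates=DEFAULT_NFS_CANDIDATES):
    """Return candidate interfaces that are not already committed to another role.

    in_use maps an interface label to the role it already serves.
    Assumption: a committed interface is treated as unavailable for NFS.
    """
    return [name for name in candidates if name not in in_use]


# Hypothetical HA branch: management on Primary, HA traffic on eth0_0 and eth0_1.
committed = {
    "primary": "management",
    "eth0_0": "ha-heartbeat",
    "eth0_1": "ha-sync",
}
print(free_nfs_interfaces(committed))
```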

If an additional nonbypass NIC is installed in the RiOS node expansion slot, then you may have additional ethX_Y interfaces available for use.

The Edge VIP address is configured in the Edge Management Console in the Storage Edge Configuration page. This figure shows a sample configuration setting with the available network interface options.

Figure: Edge VIP address configuration

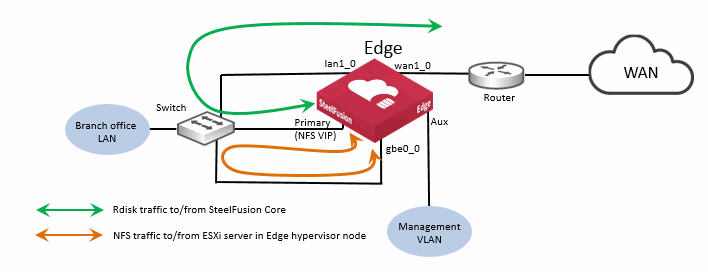

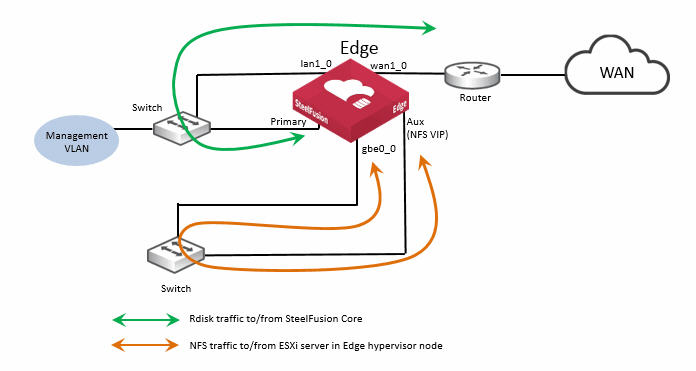

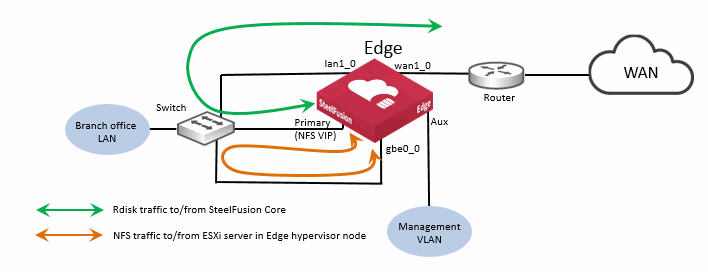

Figure 4‑4 shows a basic branch NFS/file deployment indicating some of the Edge network ports in use. In this scenario the ESXi server is internal to the Edge, in the hypervisor node. Note that the NFS traffic for the ESXi server in the hypervisor node does not use the internal connections between the RiOS node and the hypervisor node, because that path is not supported in NFS/file mode. Instead, the NFS traffic in this example is transported externally between the auxiliary port of the RiOS node and the gbe0_0 port of the hypervisor node. If there were no other connectivity requirements, these two ports could be connected via a standard Ethernet crossover cable. However, it is considered best practice to use straight-through cables and connect via a suitable switch.

Figure: Edge ports used in branch NFS/file deployment - ESXi server in Edge hypervisor node

Figure 4‑2 and Figure 4‑4 show a basic deployment example where the VIP interface is configured on the Edge auxiliary port. Depending on your requirements, it may be possible to use other interfaces on the Edge. For example, Figure 4‑5 and Figure 4‑6 show examples where the Edge Primary interface is configured with the NFS VIP address.

Figure: Edge ports used in branch NFS/file deployment - external ESXi server

Figure: Edge ports used in branch NFS/file deployment - ESXi server in Edge hypervisor node

Virtual Services Platform hypervisor installation

Before performing the initial installation of the Virtual Services Platform (VSP) hypervisor in the SteelFusion Edge hypervisor node, the SteelFusion Core must already be configured and connected. See “Core deployment process overview with NFS” on page 35 for an outline of the required tasks, and see the SteelFusion Core User Guide (NFS mode) for more detailed information.

Once Core is correctly configured, you can install the VSP hypervisor. The SteelFusion Edge Management Console includes an installer wizard that guides you through the specific steps of the installation process. For a successful installation, be aware that exported fileshares projected from the Core and mapped to the Edge are detected by the hypervisor only if they are configured with “VSP service” or “Everyone” access permissions. Once the VSP is installed, you can add additional mapped fileshares by mounting them manually with ESXi server configuration tools, such as vSphere client, and specifying the Edge VIP address. See the SteelFusion Edge User Guide for more details on the VSP hypervisor installation process.
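The access permission rule can be expressed as a small filter, which may help when you review a list of mapped fileshares before running the installer. The export paths and the “Custom” permission label in this sketch are hypothetical; only the “VSP service” and “Everyone” values come from this guide.

```python
DETECTABLE_ACCESS = {"VSP service", "Everyone"}


def detectable_fileshares(fileshares):
    """Return the names of mapped fileshares the hypervisor would detect.

    fileshares maps an export name to its access permission setting.
    """
    return sorted(name for name, access in fileshares.items() if access in DETECTABLE_ACCESS)


# Hypothetical mapped exports, for illustration only.
mapped = {
    "/exports/vsp_ds": "VSP service",
    "/exports/shared": "Everyone",
    "/exports/app": "Custom",
}
print(detectable_fileshares(mapped))
```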

Configuring SteelFusion storage

Complete the connection to the Core by choosing Storage > Storage Edge Configuration on the Edge Management Console, specifying the Core IP address, and defining the Edge Identifier (among other settings).

You need the following information to configure Edge storage:

• Hostname/IP address of the Core.

• Edge Identifier, the value of which is used in the Core-side configuration for mapping LUNs to specific Edge appliances. The Edge Identifier is case sensitive.

• Self Identifier. If you configure failover, both appliances must use the same self-identifier. In this case, you can use a value that represents the group of appliances.

• Port number of the Core. The default port is 7970.

• The interface for the current Edge to use when connecting with the Core.

• The virtual IP address of the Edge NFS server. This setting also requires you to specify the network interface to use and the subnet mask.
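It can help to gather these values in one place before you open the Management Console. The sketch below models a subset of the settings as a small data structure with basic checks. The class and field names are hypothetical and do not correspond to any SteelFusion API; the default port of 7970 and the case-sensitive identifier come from the list above.

```python
from dataclasses import dataclass
import ipaddress

DEFAULT_CORE_PORT = 7970  # default Core port stated in this guide


@dataclass(frozen=True)
class EdgeStorageSettings:
    core_host: str          # hostname or IP address of the Core
    edge_identifier: str    # case sensitive; must match the Core-side value exactly
    core_interface: str     # Edge interface used to reach the Core
    nfs_vip: str            # virtual IP address of the Edge NFS server
    nfs_vip_interface: str  # interface that carries the VIP
    nfs_vip_netmask: str    # subnet mask for the VIP
    core_port: int = DEFAULT_CORE_PORT

    def __post_init__(self):
        if not self.edge_identifier:
            raise ValueError("Edge Identifier must not be empty")
        if not 1 <= self.core_port <= 65535:
            raise ValueError("Core port must be between 1 and 65535")
        # Raises ValueError if the VIP or subnet mask is not valid IPv4.
        ipaddress.IPv4Network(f"{self.nfs_vip}/{self.nfs_vip_netmask}", strict=False)

    def identifier_matches(self, core_side_identifier):
        """Exact, case-sensitive comparison with the identifier set on the Core."""
        return self.edge_identifier == core_side_identifier


# Hypothetical values, for illustration only.
settings = EdgeStorageSettings(
    core_host="core.example.com",
    edge_identifier="Branch-Edge-01",
    core_interface="primary",
    nfs_vip="192.168.20.60",
    nfs_vip_interface="aux",
    nfs_vip_netmask="255.255.255.0",
)
```

An identifier that differs only in letter case, such as `branch-edge-01`, does not match in this model, which mirrors the case-sensitivity note above.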

For details about this procedure, see the SteelHead User Guide and the SteelFusion Edge User Guide.

Related information

• SteelFusion Core User Guide

• SteelFusion Edge User Guide

• SteelFusion Core Installation and Configuration Guide

• SteelFusion Command-Line Interface Reference Manual