HTTP Optimization

This chapter includes examples of techniques used by the Hypertext Transfer Protocol (HTTP) optimization module on the SteelHead to improve performance.

HTTP has become the accepted standard mechanism for transferring documents on the internet. The original version, HTTP 1.0, is documented in RFC 1945 but has since been superseded by HTTP 1.1, which was first documented in RFC 2068 and later revised in RFC 2616. TCP is the most common underlying transport protocol for HTTP.

By default, a web server listens for HTTP traffic on TCP port 80, although you can reconfigure the web server to listen on a different port number. HTTP uses a very simple client-server model: after the client has established the TCP connection with the server, it sends a request for information and the server replies. A web server can support many different types of requests, but the two most common types are as follows:

• GET request - asks for information from the server.

• POST request - submits information to the server.

A typical web page is not one file that downloads all at once. Web pages are composed of dozens of separate objects, including JPG and GIF images, JavaScript code, and cascading style sheets, each of which is requested and retrieved separately, one after the other. Given the presence of latency, this behavior is highly detrimental to the performance of web-based applications over the WAN. The higher the latency, the longer it takes to fetch each individual object and, ultimately, to display the entire page. Furthermore, the server might be protected and require authentication before delivering the objects. The authentication can be once per connection or once per request.

The HTTP optimization module addresses these challenges by using several techniques. For example:

• The HTTP optimization module learns about the objects within a web page and prefetches those objects in bulk before the client requests them. When the client requests those objects, the local SteelHead serves them locally without creating extra round trips across the WAN.

• The HTTP optimization module learns the authentication scheme that is configured on the server. It can inform the client that it needs to authenticate against the server without the client incurring an extra round trip to discover the authentication scheme on the server.

HTTP and Browser Behavior

HTTP uses a simple client-server model where the client transmits a message (also referred to as a request) to the server and the server replies with a response. Apart from the actual request, which is to download the information from the server, the request can contain additional embedded information for the server. For example, the request might contain a Referer header. The Referer header indicates the URL of the page that contains the hyperlink to the currently requested object. This information is very useful for the web server to trace where the requests originated. The following is an example of a GET request:

GET /wiki/List_of_HTTP_headers HTTP/1.1

Host: en.wikipedia.org

User-Agent: Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.2.7) Gecko/20100713 Firefox/3.6.7

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Language: en-us,en;q=0.5

Accept-Encoding: gzip,deflate

Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.7

Keep-Alive: 115

Connection: keep-alive

HTTP is a stateless protocol, which means that it does not have a mechanism to store the state of the browser at any given moment in time. Being able to store the state of the browser is particularly useful in situations where a user who is shopping online has added items to the shopping cart but was then interrupted and closed the browser. With a stateless protocol, when the user revisits the website, the user has to add all the items back into the shopping cart. To address this issue, the server can issue a cookie to the browser. The typical types of cookies are:

• Persistent cookie - remains on the user's PC after the user closes the browser.

• Nonpersistent cookie - discarded after the user closes the browser.

In the example earlier, assuming that the server issues a persistent cookie, when the user closes the browser and revisits the website, all the items from the previous visit are populated in the shopping cart. Cookies are also used to store user preferences. Each time the user visits the website, the website is personalized to the user's liking.

This example shows where to find the cookie in the client's GET request.

GET /cx/scripts/externalJavaScripts.js HTTP/1.1

Host: www.acme.com

User-Agent: Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.2.6) Gecko/20100625 Firefox/3.6.6

Accept: */*

Accept-Language: en-us,en;q=0.5

Accept-Encoding: gzip,deflate

Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.7

Keep-Alive: 115

Connection: keep-alive

Referer: http://www.acme.com/cpa/en_INTL/homepage

Cookie: TRACKER_CX=64.211.145.174.103141277175924680; CoreID6=94603422552512771759299&ci=90291278,90298215,90295525,90296369,90294155; _csoot=1277222719765; _csuid=4c20df4039584fb9; country_lang_cookie=SG-en

Web browser performance has improved considerably over the years. In the HTTP 1.0 specification, the client opened a connection to the server, downloaded the object, and then closed the connection. Although this was easy to understand and implement, it was very inefficient because each download triggered a separate TCP connection setup (TCP 3-way handshake). In the HTTP 1.1 specification, the browser has the ability to use the same connection to download multiple objects.

The next section discusses how the browser uses multiple connections and a technique known as pipelining to improve the browser's performance.

Multiple TCP Connections and Pipelining

Most modern browsers establish two or more TCP connections to the server for parallel downloads. The concept is simple—as the browser parses the web page, it knows what objects it needs to download. Instead of sending the requests serially over a single connection, the requests are sent over parallel connections resulting in a faster download of the web page.

Another technique used by browsers to improve performance is pipelining. Without pipelining, the client first sends a request to the server and waits for a reply before sending the next request. If there are two parallel connections, then a maximum of two requests are sent to the server concurrently. With pipelining, the browser sends the requests in batches instead of waiting for the server to respond to each individual object before sending the next request. Pipelining can be used with a single HTTP connection or with multiple HTTP connections. Although most servers support multiple HTTP connections, some servers do not support pipelining.

Figure: Pipelining illustrates the effect of pipelining.

Figure: Pipelining

HTTP Authentication

To provide access control to the contents on the web server, authentication is enabled on the server. There are many ways to perform authentication—for example, certificates and smartcards. The most common schemes are NT LAN Manager (NTLM) and Kerberos. Although the two authentication schemes are not specific to HTTP and are used by other protocols such as CIFS and MAPI, there are certain aspects that are specific to HTTP.

The following steps describe four-way NTLM authentication shown in Figure: Four-Way NTLM Authentication:

1. The client sends a GET request to the server.

2. The server, configured with NTLM authentication, sends back a 401 Unauthorized message to inform the client that it needs to authenticate. Embedded in the response is the authentication scheme supported by the server. This is indicated by the WWW-Authenticate line.

3. The client sends another GET request and attaches an NTLM Type-1 message to the request. The NTLM Type-1 message provides the set of capability flags of the client (for example, encryption key size).

4. The server responds with another 401 Unauthorized message, but this time it includes an NTLM Type-2 message in the response. The NTLM Type-2 message contains the server's NTLM challenge.

5. The client computes the response to the challenge and once again attaches this to another GET request. Assuming the server accepts the response, the server delivers the object to the client.

Assuming a network with 200 ms of round-trip latency, it takes at least 600 ms before the browser begins to download the object.
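The 600 ms figure follows from counting the request/response exchanges in the steps above. The following is a minimal Python sketch of that arithmetic. The function name and the list of exchanges are illustrative assumptions, and the result is a lower bound that ignores TCP setup, server processing time, and transfer time.

```python
# Worked arithmetic for the four-way NTLM exchange described above.
# Each request/response pair costs one WAN round trip.
RTT_MS = 200  # round-trip latency used in the example above

EXCHANGES = [
    "GET -> 401 Unauthorized (WWW-Authenticate)",
    "GET + NTLM Type-1 -> 401 Unauthorized + NTLM Type-2 challenge",
    "GET + challenge response -> server starts delivering the object",
]


def time_to_first_object_ms(rtt_ms: int, round_trips: int) -> int:
    """Lower bound on the delay before the object starts to download."""
    return rtt_ms * round_trips


total_ms = time_to_first_object_ms(RTT_MS, len(EXCHANGES))
print(total_ms)  # 3 round trips x 200 ms = 600
```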

Figure: Four-Way NTLM Authentication

For a more in-depth discussion on NTLM authentication, go to:

A detailed explanation of the Kerberos protocol is beyond the scope of this guide. For details, go to:

HTTP authentication can be performed per request or per connection. A server configured with per-request authentication requires the client to authenticate every single request before the server delivers the object to the client. If there are 100 objects (for example, .jpg images), the client authenticates 100 times with per-request authentication. With per-connection authentication, if the client opens only a single connection to the server, then the client needs to authenticate with the server only once. No further authentication is necessary. Using the same example, only a single authentication is required for the 100 objects. Whether the web server does per-request or per-connection authentication varies depending on the software. For Microsoft's Internet Information Services (IIS) server, the default is per-connection authentication when using NTLM authentication and per-request authentication when using Kerberos authentication.
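The difference between the two modes can be expressed as a simple count. The following Python sketch is illustrative only. The function name is hypothetical, and it assumes that every connection the client opens carries at least one request.

```python
def authentications_needed(objects: int, connections: int, per_request: bool) -> int:
    """Count how many times the client must authenticate.

    per_request=True  : every request is authenticated separately.
    per_request=False : each TCP connection is authenticated once, as long as
                        it carries at least one request.
    """
    if objects <= 0:
        return 0
    if per_request:
        return objects
    return min(connections, objects)


# The 100-object example from the text, over a single connection.
print(authentications_needed(100, 1, per_request=True))   # 100
print(authentications_needed(100, 1, per_request=False))  # 1
```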

When the browser first connects to the server, it does not know whether the server has authentication enabled. If the server requires authentication, the server responds with a 401 Unauthorized message. Within the headers of the response, the server indicates what kind of authentication scheme it supports in the WWW-Authenticate line. If the server supports more than one authentication scheme, then there are multiple WWW-Authenticate lines in the response. For example, if a server supports both Kerberos and NTLM, the following lines appear in the response headers:

WWW-Authenticate: Negotiate

WWW-Authenticate: NTLM

When the browser receives the Negotiate keyword in the WWW-Authenticate line, it first tries Kerberos authentication. If Kerberos authentication fails, it falls back to NTLM authentication.
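The fallback behavior described above can be sketched in a few lines of Python. This is a simplified illustration, not browser or RiOS code. The function names are hypothetical, and the kerberos_available flag stands in for whatever causes Kerberos authentication to succeed or fail on a real client.

```python
def offered_schemes(header_lines):
    """Return the authentication schemes named in WWW-Authenticate lines."""
    schemes = []
    for line in header_lines:
        name, _, value = line.partition(":")
        if name.strip().lower() == "www-authenticate" and value.strip():
            schemes.append(value.split()[0])
    return schemes


def pick_scheme(schemes, kerberos_available):
    """Try Kerberos when Negotiate is offered, otherwise fall back to NTLM."""
    if "Negotiate" in schemes and kerberos_available:
        return "Kerberos"
    if "NTLM" in schemes:
        return "NTLM"
    return None


response = [
    "HTTP/1.1 401 Unauthorized",
    "WWW-Authenticate: Negotiate",
    "WWW-Authenticate: NTLM",
]
print(pick_scheme(offered_schemes(response), kerberos_available=False))  # NTLM
```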

Assuming that there are multiple TCP connections in use, after the authentication succeeds on the first connection, the browser downloads and parses the base page. To improve performance, most browsers parse the web page and start to download the objects over parallel connections (for details, see Multiple TCP Connections and Pipelining).

Connection Jumping

When the browser establishes the second, or subsequent, TCP connections for parallel or pipelined downloads, it does not remember that the server requires authentication. Therefore, the browser sends multiple GET requests over the second, or additional, TCP connections without the authentication header. The server rejects the requests with 401 Unauthorized messages. When the browser receives the 401 Unauthorized message on the second connection, it is aware that the server requires authentication, and it initiates the authentication process. Yet, instead of keeping the authentication requests for all the previously requested objects on the second connection, some of the requests jump over to the first connection, which is already authenticated. This is known as connection jumping.

Note: Connection jumping is behavior specific to Internet Explorer, unless you are using Internet Explorer 8 with Windows 7.

Figure: Client Authenticates with the Server shows the client authenticating with the server and requesting the index.html web page. The client parses the web page and initiates a second connection for parallel download.

Figure: Client Authenticates with the Server

Figure: Rejected Second Connection shows the client pipelining the requests to download objects 1.jpg, 2.jpg, and 3.jpg on the second connection. However, because the server requires authentication, the requests for those objects are all rejected.

Figure: Rejected Second Connection

Figure: Connection Authentication Jumping shows the client sending the authentication request to the server. Instead of staying on the second connection, some of the requests have jumped over to the first, already authenticated, connection.

Figure: Connection Authentication Jumping

This connection jumping behavior causes the following problems, which impact performance:

• If an authentication request appears on an already authenticated connection, the server can reset the state of the connection and force it to go through the entire authentication process again.

• The browser has effectively turned this into a per-request authentication even though the server can support per-connection authentication.

HTTP Proxy Servers

Most enterprises have proxy servers deployed in the network. Proxy servers serve the following purposes:

• Access control

• Performance improvement

Many companies have some compliance policies that restrict what the users can or cannot access from their corporate network. Enterprises meet this requirement by deploying proxy servers. The proxy servers act as a single point through which all web traffic entering or exiting the network must traverse so the administrator can enforce the necessary policies. In addition to controlling access, the proxy server might act as a cache engine caching frequently accessed data. By doing this, it can eliminate the need to fetch the same content for different users.

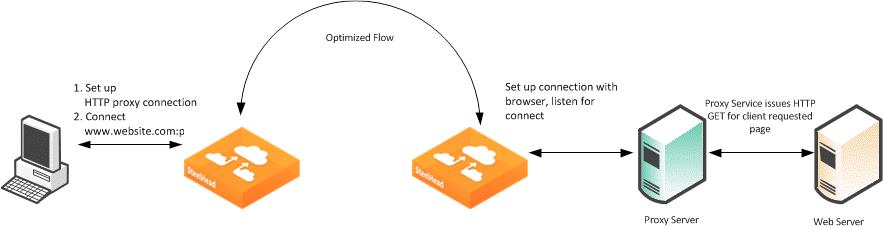

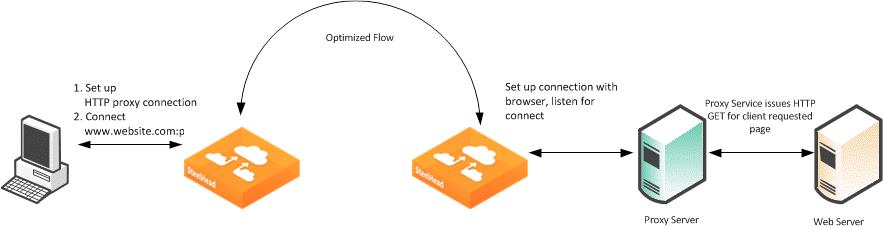

Communication between the browser and an HTTP proxy server differs from communication between the browser and the requested HTTP server. When you use a defined proxy server, the browser initiates a TCP connection with that defined proxy server. The initiation starts with a standard TCP three-way handshake. Next, the browser requests a web page and issues a CONNECT statement to the proxy server, indicating which web server it wants to connect to.

Figure: Connection to a Web Server Through a Proxy

In a standard HTTP request with an open proxy server, the proxy next opens a connection to the requested web server and returns the requested objects. However, most corporate environments use proxy servers as outbound firewall devices and require authentication by issuing a code 407 Proxy Authentication Required. After authorization completes successfully, the proxy returns the originally requested objects. Authorization by the proxy server can include verifying the username and password and checking whether the destination website is on the approved list.

Figure: Client Sending NTLM Authorization

SSL connections are different. In a standard HTTP request, the SteelHead optimizes proxy traffic without any issues: standard HTTP optimization applies, and both data reduction and latency reduction are achieved. Due to the nature of proxy server connections, an additional step is required to set up SSL connections. Prior to RiOS 7.0, SSL connections through a proxy were set up as pass-through connections and were not optimized because the SSL sessions were negotiated after the initial TCP setup. In RiOS 7.0 or later, RiOS can negotiate SSL after the setup of the session.

Figure: Setting Up an SSL Connection Through a Proxy Server

Figure: Setting Up an SSL Connection Through a Proxy Server shows an SSL connection through a proxy server. The steps are as follows:

1. The client completes a TCP three-way handshake with the proxy server. The HTTP request to the proxy is made on this connection.

2. The client issues a CONNECT statement to the host it wants to connect with, for example:

CONNECT www.riverbed.com:443 HTTP/1.0

User-Agent: Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.0.3705; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)

Host: www.riverbed.com

Content-Length: 0

Proxy-Connection: Keep-Alive

Pragma: no-cache

3. The proxy server forwards the connect request to the remote web server.

4. The remote HTTP server accepts and sends back an ACK.

5. The client sends an SSL Client Hello Message.

6. The server-side SteelHead intercepts the Client Hello Message and begins to set up an SSL inner-channel connection with the client. The SteelHead also begins to set up the SSL conversation with the original web server.

Note: The private key and certificate of the requested HTTP server must exist on the server-side SteelHead, along with all servers targeted for SSL optimization.

7. An entry is added to a host table on the server-side SteelHead.

The host table is how a SteelHead discerns which key is associated with each SSL connection, because each SSL session is to the same IP and port pair. The hostname becomes the key field for managing this connection.

8. The server-side SteelHead passes the session to the client-side SteelHead using SSL optimization. For information about SSL optimization, see SSL Deployments.
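The role of the host table in step 7 can be illustrated with a short Python sketch. This is not the RiOS data structure. The HostTable class, the connect_target function, and the certificate dictionary are hypothetical names used only to show why the hostname from the CONNECT statement, rather than the proxy IP address and port, identifies the correct key.

```python
def connect_target(request_line: str):
    """Parse 'CONNECT host:port HTTP/1.x' and return (host, port)."""
    method, target, _version = request_line.split()
    if method.upper() != "CONNECT":
        raise ValueError("not a CONNECT request")
    host, _, port = target.rpartition(":")
    return host.lower(), int(port)


class HostTable:
    """Hypothetical table that remembers the hostname requested on each connection."""

    def __init__(self):
        self._by_connection = {}

    def learn(self, connection_id, request_line):
        host, _port = connect_target(request_line)
        self._by_connection[connection_id] = host
        return host

    def certificate_for(self, connection_id, certificates):
        """Look up the certificate by hostname, because every session
        shares the same proxy IP address and port."""
        return certificates.get(self._by_connection.get(connection_id))


table = HostTable()
table.learn(("10.0.0.5", 51000), "CONNECT www.riverbed.com:443 HTTP/1.0")
print(table.certificate_for(("10.0.0.5", 51000), {"www.riverbed.com": "riverbed-cert"}))
```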

Configuring HTTP SSL Proxy Interception

In some HTTP proxy implementations, the proxy server terminates the SSL session to the web server to inspect the web payload for policy enforcement or surveillance. In this scenario, the SSL server that is defined in the SSL Main Settings page on the server-side SteelHead is the SSL proxy device, and not the server being requested by the browser. If you use self-signed certificates from the proxy server, you must add the CA from the proxy server to the SteelHead trusted certificate authority (CA) list.

Figure: SSL Session Setup Between the Proxy Server and the Client

Figure: SSL Session Setup Between the Proxy Server and the Client shows that the client requests a page from http://www.riverbed.com/. This request is sent to the proxy server as a CONNECT statement. Because this is an intercepted proxy device, the SSL session is set up between the proxy server and the client, typically with an internally trusted certificate. You must use this certificate on the server-side SteelHead for SSL optimization to function correctly.

Figure: Adding a CA

To configure RiOS SSL traffic interception

1. Before you begin:

• You must have a valid SSL license.

• The server-side and client-side SteelHeads must be running RiOS 7.0 or later.

2. Choose Optimization > SSL: SSL Main Settings and select Enable SSL Optimization.

The private key and the certificate of the requested web server, and all the servers targeted for SSL optimization, must exist on the server-side SteelHead.

3. Choose Optimization > SSL: Advanced Settings and select Enable SSL Proxy Support.

4. Create an in-path rule on the client-side SteelHead (shown in Figure: In-Path Rule) to identify the proxy server IP address and port number with the SSL preoptimization policy for the rule.

Figure: In-Path Rule

RiOS HTTP Optimization Techniques

The HTTP optimization module was first introduced in RiOS 4.x. At the time, the only feature available was URL learning. Subsequently, in RiOS 5.x, the parse-and-prefetch and metadata response features were added to the family. In RiOS 6.0, the metadata response feature was replaced with the object prefetch table. In RiOS 6.1, major enhancements were made specifically to optimize HTTP authentication.

RiOS 7.0 introduces HTTP automatic configuration. With HTTP automatic configuration you can:

• Enable HTTP automatic configuration to profile HTTP applications. In other words, HTTP automatic configuration collects statistics on applications, dynamically generates a configuration entry with the proper optimization settings, and self-tunes its HTTP parameter settings.

You can also store all objects permitted by the servers—you do not need to specify the extension type of specific objects to prefetch.

• Expand the HTTP authentication options by including end-to-end Kerberos authentication. All previous configurations are still available.

You must run RiOS 7.0 or later on both the client-side SteelHead and server-side SteelHead to use automatic HTTP configuration and end-to-end Kerberos. For more details, see HTTP Automatic Configuration and HTTP Authentication Optimization.

Primary Content Optimization Methods

The following list explains the primary content optimization methods:

• Strip compression - To conserve bandwidth, nearly all browsers support compression. The browser specifies what encoding schemes it supports in the Accept-Encoding line in the request. Before the server responds with a reply, it compresses the data with an encoding scheme that the client supports. To maximize the benefit of SDR, data coming from the server must be uncompressed so that SDR can deduplicate it. When Strip Compression is enabled, the HTTP optimization module removes the Accept-Encoding line from the request header before sending the request to the server. Because the modified request does not list any supported compression scheme, the server responds to the request without any compression. This allows SDR to deduplicate the data. With this option enabled, the amount of LAN-side traffic increases because the server no longer sends the traffic in a compressed format.

• Insert cookie - The HTTP optimization module relies on cookies to distinguish between different users. If the server does not support cookies, the HTTP optimization module inserts its own cookie so that it can distinguish between different users. Cookies that are inserted by the HTTP optimization module start with rbt-http= and are followed by a random number. Enable this option only if the server does not issue its own cookies.

The SteelHead removes the Riverbed cookie when it forwards the request to the server.

• Insert keep-alive - Keep-alive, or persistent connection, is required for the HTTP optimization module to perform prefetches. If the client does not support keep-alive and the server does, the client-side SteelHead inserts a Connection: keep-alive header into the HTTP/1.0 response, unless the server explicitly instructs the client to close the connection by adding Connection: close.

This option does not apply to situations where the client supports keep-alive but the server does not. If the server does not support keep-alive, then prefetching is not possible and changes must be made on the server to support keep-alive.

• URL learning - The SteelHead learns associations between a base request and a follow-on request. This feature is most effective for web applications with large amounts of static content, for example, images and style sheets. Instead of saving each object transaction, the SteelHead saves only the request URL of object transactions in a knowledge base, and then it generates related transactions from the list. URL learning uses the Referer header field to generate relationships between object requests and the base HTML page that referenced them and to group embedded objects. This information is stored in an internal HTTP database. The benefit of URL learning is faster page downloads for subsequent references to the same page, from the original browser of the requester or from other clients in the same location. When the SteelHead finds a URL in its database, it immediately sends requests for all of the objects referenced by that URL, saving round trips for the client browser. You can think of URL learning as an aggressive form of prefetching that benefits all users in a location, instead of just a single user, and that remembers what was fetched for subsequent accesses.

• Parse-and-prefetch - The SteelHead includes a specialized algorithm that determines which objects are going to be requested for a given web page, and it prefetches them so that they are available when the client makes a request. This feature complements URL learning by handling dynamically generated pages and URLs that include state information.

Parse-and-prefetch reads a page, finds HTML tags that it recognizes as containing a prefetchable object, and sends out prefetch requests for those objects. Typically, a client needs to request the base page, parse it, and then send out requests for each of these objects. This still occurs, but with parse-and-prefetch the SteelHead has quietly prefetched the page before the client receives it and has already sent out the requests. This allows the SteelHead to serve the objects as soon as the client requests them, rather than forcing the client to wait on a slow WAN link.

For example, when an HTML page contains the tag <img src="my_picture.gif">, the SteelHead prefetches the image my_picture.gif.

Like URL learning, parse-and-prefetch benefits many users in a location rather than just a single user. Unlike URL learning, parse-and-prefetch does not remember which objects were referenced in a base request, so it does not waste space on dynamic content that changes with each request for the same URL, or on dynamic URLs.

• Object prefetch table - The SteelHead stores objects per the metadata information contained in the HTTP response. The object prefetch table option helps the client-side SteelHead respond to If-Modified-Since (IMS) requests from the client, cutting back on round trips across the WAN. This feature is useful for applications that use a lot of cacheable content.

Although URL learning and parse-and-prefetch can request information from the HTTP server much sooner than the client would have requested the same information, the object prefetch table stores information to completely eliminate some requests on the WAN. The client browsers receive these objects much faster than they would if the object had to be fetched from the server.

In releases prior to RiOS 8.6, any HTTP response larger than 1 Mb caused RiOS HTTP optimization to bypass the remainder of the established HTTP session. In RiOS 8.6 and later, the 1-Mb response limit is removed in environments running both client-side and server-side SteelHeads. However, single HTTP objects larger than 1 Mb that are returned as part of the response are not stored in the HTTP object prefetch table by default.
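The Strip compression method in the list above amounts to a header rewrite on the request. The following Python sketch shows the idea on a raw request string. It is a minimal illustration, not the RiOS implementation, and the function name strip_accept_encoding is hypothetical.

```python
def strip_accept_encoding(raw_request: str) -> str:
    """Remove every Accept-Encoding header line from an HTTP request head."""
    head, sep, body = raw_request.partition("\r\n\r\n")
    lines = head.split("\r\n")
    kept = [lines[0]]  # the request line is never a header
    for line in lines[1:]:
        name = line.partition(":")[0].strip().lower()
        if name != "accept-encoding":
            kept.append(line)
    return "\r\n".join(kept) + sep + body


request = (
    "GET /index.html HTTP/1.1\r\n"
    "Host: www.acme.com\r\n"
    "Accept-Encoding: gzip,deflate\r\n"
    "Connection: keep-alive\r\n"
    "\r\n"
)
print(strip_accept_encoding(request))
```

Because the rewritten request no longer advertises gzip or deflate, a server that honors Accept-Encoding replies with uncompressed data.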

Although features such as URL learning, parse-and-prefetch, and the object prefetch table (the replacement to metadata response) can help speed up applications, other factors, such as authentication, can negatively impact the performance of applications.
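The difference between URL learning and parse-and-prefetch, as described in the list above, can be illustrated with a small Python sketch. The class and function names are hypothetical, and the set of tags treated as prefetchable (img, script, link) is an assumption made for this example. The source does not list which tags RiOS recognizes.

```python
from collections import defaultdict
from html.parser import HTMLParser


class UrlLearner:
    """URL learning: remember which object URLs arrived with a given Referer."""

    def __init__(self):
        self._objects = defaultdict(list)

    def observe(self, url, referer=None):
        if referer and url not in self._objects[referer]:
            self._objects[referer].append(url)

    def prefetch_list(self, base_url):
        return list(self._objects.get(base_url, []))


class PrefetchParser(HTMLParser):
    """Parse-and-prefetch: collect object URLs found in the page itself."""

    ATTRS = {"img": "src", "script": "src", "link": "href"}  # assumed tag set

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        wanted = self.ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value:
                self.urls.append(value)


def parse_and_prefetch(html):
    parser = PrefetchParser()
    parser.feed(html)
    return parser.urls


print(parse_and_prefetch('<html><img src="my_picture.gif"></html>'))
```

The learner keeps state between page loads, which suits static pages. The parser keeps nothing and works from each response as it arrives, which suits dynamically generated pages.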

HTTP Vary Headers

RFC 2616 gives HTTP 1.1 clients and servers the ability to determine whether they can retrieve HTTP responses from a local cache or must retrieve them from the origin server. The vary header lists the HTTP header field names that are evaluated to make this determination. In releases prior to RiOS 8.6, responses that contained an HTTP vary header were excluded from SteelHead HTTP optimization.

In RiOS 8.6 and later, if HTTP responses contain a vary HTTP header and are not compressed, RiOS HTTP optimization caches the response for client-side consumption. However, if RiOS receives a response with a vary HTTP header and the response is content-encoded, the local RiOS HTTP object cache does not store the HTTP object.

The following example shows an HTTP response with an HTTP vary header set and content encoding enabled (Content-Encoding: gzip). RiOS does not store this response in the local HTTP object cache:

HTTP/1.1 200 OK

Date: Sat, 01 Mar 2014 23:07:28 GMT

Server: Apache

Last-Modified: Fri, 28 Feb 2014 10:23:10 GMT

ETag: "183-4f374d3157f80-gzip"

Accept-Ranges: bytes

Vary: User-Agent

Content-Encoding: gzip

Content-Length: 265

Keep-Alive: timeout=15, max=98

Connection: Keep-Alive

Content-Type: text/css
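The example response above carries both a Vary header and a Content-Encoding: gzip header. The following Python sketch applies the RiOS 8.6 rule described earlier in this section to such a response. It models only the vary rule and ignores all other caching criteria, and the function names are hypothetical.

```python
def headers_from(lines):
    """Build a lowercase-keyed header dict from 'Name: value' lines."""
    headers = {}
    for line in lines:
        name, sep, value = line.partition(":")
        if sep:
            headers[name.strip().lower()] = value.strip()
    return headers


def vary_rule_allows_caching(headers):
    """Return False only when a response has a Vary header and is content-encoded."""
    has_vary = "vary" in headers
    encoded = headers.get("content-encoding", "identity").lower() != "identity"
    return not (has_vary and encoded)


example = [
    "HTTP/1.1 200 OK",
    "Vary: User-Agent",
    "Content-Encoding: gzip",
    "Content-Type: text/css",
]
print(vary_rule_allows_caching(headers_from(example)))  # False
```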

As a best practice, enable the HTTP Strip Compression option on the HTTP page. You can apply this as part of the HTTP automatic configuration settings or manually per HTTP server. For more information about HTTP automatic configuration, see HTTP Automatic Configuration.

Figure: HTTP Optimization Configuration

Connection Pooling

Connection pooling preestablishes 20 inner channel connections between each pair of SteelHeads. HTTP traffic benefits the most from connection pooling, although connection pooling is not specific to HTTP. When the SteelHead requires an inner channel, it picks one from the pool, which eliminates the time for the TCP three-way handshake. HTTP traffic benefits the most because its connections are typically short-lived.

Note: Connection pooling is available only when you use correct addressing as the WAN visibility mode. For details, see the SteelHead Deployment Guide.

HTTP Authentication Optimization

RiOS 6.1 or later has specific HTTP authentication optimization for handling the various inefficient browser authentication behaviors. The HTTP authentication optimization attempts to modify the client-to-server behavior so that it maximizes the benefit of the HTTP optimization module.

RiOS 7.0 or later supports Kerberos as an authentication mechanism, in addition to the NTLM-based authentication supported by previous RiOS versions. Kerberos authentication support is beneficial for access to SharePoint, Exchange, IIS, and other Microsoft applications that use Active Directory and Kerberos for authentication. With this feature, your system is capable of prefetching resources when the web server employs per-request Kerberos authentication.

Prior to RiOS 7.0, servers that required Kerberos authentication did not take advantage of the parse-and-prefetch optimization feature. RiOS 7.0 or later can decrypt the Kerberos service ticket and generate session keys to authenticate, on a per-request basis, with the web server. For more information about Kerberos, see Kerberos.

The following is a list of HTTP authentication optimization methods:

• Reuse auth - With URL learning and parse-and-prefetch, a particular request from the browser triggers the HTTP optimization module to prefetch the objects of a web page. The browser typically opens parallel TCP connections to the server to download the objects (for details, see Multiple TCP Connections and Pipelining). If the Reuse Auth feature is not enabled, the HTTP optimization module does not deliver the objects to the client through an unauthenticated connection, even though it might already have the objects in its database. With Reuse Auth enabled, the HTTP optimization module determines that the session has already been authenticated and that it is safe (in other words, without violating any permissions) to deliver the prefetched objects to the client regardless of whether the connection is authenticated or not. The effect of Reuse Auth is as if the client used only a single connection to download all the objects serially.

• Force NTLM - The default authentication behavior on Microsoft's IIS server is per-request authentication for Kerberos and per-connection authentication for NTLM. Thus, the HTTP optimization module is configured to change the client-to-server negotiation so that the client chooses an authentication that maximizes the benefit of the HTTP optimization module. When enabled, Force NTLM removes the WWW-Authenticate: Negotiate line from the 401 Unauthorized message from the server. When the 401 Unauthorized message arrives at the client, the only authentication option available is NTLM. The client has no choice but to use NTLM for authentication.

Do not use this feature if Kerberos authentication is required.

• Strip auth header - When you enable strip auth header, the HTTP optimization module detects an authentication request on an already authenticated connection. It removes the authentication header before sending the request to the server. Because the connection is already authenticated, the server delivers the object to the client without having to go through the entire authentication process again. If you require Kerberos authentication, do not use strip auth header.

• Gratuitous 401 - You can use this feature with both per-request and per-connection authentication, but it is most effective when used with per-request authentication. With per-request authentication, every request must be authenticated against the server before the server serves the object to the client. Most browsers do not cache the server response requiring authentication, which wastes one round trip for every GET request. With gratuitous 401, the client-side SteelHead caches the server response. When the client sends a GET request without any authentication headers, the client-side SteelHead responds locally with a 401 Unauthorized message and saves a round trip.

The HTTP optimization module does not participate in the actual authentication. The HTTP optimization module informs the client that the server requires authentication without requiring it to waste one round trip.
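The header rewrite that Force NTLM performs can be illustrated with a minimal Python sketch. It is not the SteelHead implementation: the function name force_ntlm and the sample header list are hypothetical, and the sketch only shows the effect described above, where the WWW-Authenticate: Negotiate line is removed from a 401 Unauthorized response so that NTLM is the only option left.

```python
def force_ntlm(header_lines):
    """Return the header lines without any 'WWW-Authenticate: Negotiate' line.

    Hypothetical helper for illustration; every other line is kept unchanged.
    """
    kept = []
    for line in header_lines:
        name, _, value = line.partition(":")
        is_negotiate = (
            name.strip().lower() == "www-authenticate"
            and value.strip().lower() == "negotiate"
        )
        if not is_negotiate:
            kept.append(line)
    return kept


# Hypothetical 401 response from a server that offers Kerberos and NTLM.
response_headers = [
    "HTTP/1.1 401 Unauthorized",
    "WWW-Authenticate: Negotiate",
    "WWW-Authenticate: NTLM",
    "Content-Length: 0",
]
filtered = force_ntlm(response_headers)
```

After the rewrite, the only WWW-Authenticate line that reaches the client is the NTLM one.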

To enable HTTP Kerberos authentication

1. Install RiOS 7.0 or later on the client-side and server-side SteelHead.

2. Join the server-side SteelHead to the Active Directory domain.

3. Enable Kerberos key replication on the server-side SteelHead.

4. On the HTTP page, select Enable Kerberos Authentication Support.

5. Click Apply.

HTTP Automatic Configuration

RiOS 7.0 or later reduces the complexity of configuring the HTTP optimization features by introducing an automatic configuration method. HTTP optimization can automatically detect new HTTP web hosts and evaluate the traffic statistics to determine the optimal settings. Rule sets are built automatically. Each HTTP application is analyzed and configured independently on each client-side SteelHead.

Riverbed recommends that both the client-side and server-side SteelHeads are running RiOS 7.0 or later for full statistics gathering and optimization benefits. For more details, go to https://supportkb.riverbed.com/support/index?page=content&id=S17039.

HTTP automatic configuration is enabled by default—the SteelHead starts to profile HTTP applications upon startup.

The phases of the HTTP automatic configuration process are as follows:

1. Identification phase - HTTP applications are identified by a hostname derived from the HTTP request header: for example, host: sharepoint.example.com or www.riverbed.com. This makes applications more easily identifiable when an HTTP application is represented by multiple servers. For example, http://sharepoint.example.com can resolve to multiple IP addresses, each belonging to a different server, for the purpose of load balancing. A single entry encompasses the multiple servers.

2. Evaluation phase - The SteelHead reads the metadata and builds latency and throughput-related statistics on a per-HTTP application basis. After the SteelHead detects a predetermined number of transactions, it moves to the next phase. During the evaluation phase, the web server host has an object prefetch table, insert keep alive, reuse authentication, strip authentication and gratuitous 401 enabled by default to provide optimization. Strip authentication might be disabled after the evaluation stage if the SteelHead determines that the HTTP server requires authentication.

3. Automatic phase - The HTTP application profiling is complete and the HTTP application is configured. At this phase, prefetch (URL learning and parse-and-prefetch), strip-compression, and insert-cookie optimization features are declared as viable configuration options for this application, in addition to the options from the earlier phase. Prefetching is evaluated by comparing the time between the server-side SteelHead and the server with the time between the server-side SteelHead and the client-side SteelHead. Prefetch is enabled if the latter is significantly greater. Stripping the compression is enabled if the server-side SteelHead LAN bandwidth is significantly greater than the WAN bandwidth of the client-side SteelHead. Insert-cookie optimization is automatically enabled only when the server does not use cookies.

4. Static phase - You can insert custom settings for specific hosts or subnets. You can also select an automatically configured rule and override the settings. In RiOS 7.0 or later, you can insert hostnames (for example, www.riverbed.com) along with specific IP hosts/subnets (for example, 10.1.1.1/32). If you use a subnet instead of a hostname, you must specify the subnet mask.

Figure: Subnet and Subnet Mask
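The identification phase described above can be pictured with a short Python sketch. It is an illustration only, not how RiOS stores its entries: the function name group_by_host and the sample IP addresses are hypothetical. The sketch shows how keying on the Host header collapses several load-balanced servers into a single application entry.

```python
from collections import defaultdict


def group_by_host(requests):
    """Map each Host header value to the set of server IPs seen for it.

    `requests` is an iterable of (server_ip, host_header) pairs.
    Hostnames are compared case-insensitively.
    """
    apps = defaultdict(set)
    for server_ip, host_header in requests:
        apps[host_header.strip().lower()].add(server_ip)
    return dict(apps)


# Hypothetical observations: three load-balanced SharePoint servers
# and one other web host.
observed = [
    ("10.1.1.10", "sharepoint.example.com"),
    ("10.1.1.11", "sharepoint.example.com"),
    ("10.1.1.12", "SharePoint.example.com"),
    ("10.2.2.20", "www.riverbed.com"),
]
apps = group_by_host(observed)
```

Four observed connections yield two application entries, one per hostname.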

RiOS 7.0 or later stores all cacheable objects, as long as the objects are allowed by the HTTP server and not prohibited by the headers in the HTTP request and response. The restriction to cache objects based on their extensions is removed. You can choose to cache all allowable objects, or revert to the pre-RiOS 7.0 behavior and explicitly list the extensions of the objects you want to store. You can also choose to not store any objects.

To enable HTTP automatic configuration

1. In the Management Console, choose Optimization > Protocols: HTTP.

2. Select Enable Per-Host Auto Configuration.

3. Select the appropriate options. All are enabled by default.

For more information about HTTP options, see the SteelHead Management Console User’s Guide.

4. Click Apply.

Figure: HTTP Configuration Page

You can filter between the discovered and statically added hosts. When you enable HTTP automatic configuration, you can select the row filters to see auto-configured hosts and evaluated hosts. To delete an automatically configured entry, you must first edit it, which changes its configuration to static. Only then can you remove the entry.

Note: Removal of an entry allows the SteelHead to relearn the HTTP application and begin the evaluation phase again.

You can select an automatically configured rule and edit the optimization settings. Upon completion, the rule set is now considered a static rule. This process is similar to previous RiOS versions in which manually adding subnets to optimize hosts was needed.

When you disable the automatic configuration option, only the static rules and the default optimization rule (0.0.0.0/0) apply. The automatic and evaluation rules no longer populate the table.

Note: If you restart the optimization process, you remove all automatic and evaluation configured entries. The SteelHead profiles the applications again.

To configure HTTP automatic configuration with the command line

• On the client-side SteelHead, connect to the CLI in configuration mode and enter the following command:

protocol http auto-config enable

To clear the HTTP automatic configuration statistics with the command line

• On the client-side SteelHead, connect to the CLI in configuration mode and enter one of the following commands:

protocol http auto-config clear-stats all

or

protocol http auto-config clear-stats hostname <http-hostname>

For more information about how to configure HTTP automatic configuration, see the SteelHead Management Console User’s Guide and the Riverbed Command-Line Interface Reference Manual.

HTTP Settings for Common Applications

Riverbed recommends that you test the HTTP optimization module in a real production environment. If you configure HTTP applications manually, the following table shows the recommended settings for common enterprise applications.

Application | URL Learning | Parse-and-Prefetch | OPT |

SAP/Netweaver | No | Yes | Yes |

Microsoft CRM | Yes | No | Yes |

Agile | No | No | Yes |

Pivotal CRM | No | Yes | Yes |

SharePoint | No | Yes | Yes |

As discussed in Primary Content Optimization Methods, URL learning accelerates environments with static URLs and content, parse-and-prefetch accelerates environments with dynamic content, and OPT saves round trips across the WAN and reduces WAN bandwidth consumption.

HTTP Optimization for SharePoint

You can configure Microsoft SharePoint-specific HTTP optimization options in RiOS 8.5 and later. The SharePoint optimization options are available on the Optimization > Protocols: HTTP page (Figure: HTTP Configuration Page):

• Microsoft Front Page Server Extensions (FPSE) protocol - The FPSE protocol enables the client application to display the contents of a website as a file system. FPSE supports uploading and downloading files, directory creation and listing, basic file locking, and file movement in the web server. The FPSE HTTP subprotocol is used by SharePoint 2007 and 2010 when opening documents in Microsoft Office. To increase performance, the following FPSE requests are cached:

– URL-to-Web URL request

– Server-version request

– Open-service request

One of the inherent issues with SharePoint communication is that after each request is complete, the web server closes the connection. Thus, each new request requires a new TCP handshake. If you are using SSL, additional round trips are required. Request caching eliminates the round trips and speeds up the connection.

FPSE supports SharePoint Office clients 2007 and 2010, installed on Windows 7 (SP1) and Windows 8. SharePoint 2013 does not support FPSE.

• Microsoft Web Distributed Authoring and Versioning (WebDAV) - WebDAV is a set of standardized extensions (RFC 4918) to the HTTP/1.1 protocol that enables users to collaborate, edit, and manage files on remote web servers. Specific to SharePoint, WebDAV is a protocol for manipulating the contents of the document management system. You can use the WebDAV protocol to map a SharePoint site to a drive on a Windows client machine. To set it up properly as a WebDAV drive, you must create it as a mapped drive and not as a new network folder location.

The WebDAV optimization option in RiOS caches often-repeated metadata and replies locally from the client-side SteelHead. The WebDAV protocol is used in both SharePoint 2010 and 2013.

• File Synchronization via SOAP over HTTP (FSSHTTP) Protocol - FSSHTTP is a protocol you can use for file data transfer in Microsoft Office and SharePoint. FSSHTTP enables one or more protocol clients to synchronize changes performed on shared files stored on a SharePoint server. FSSHTTP allows coauthoring and is supported with Microsoft Office 2010 and 2013 and SharePoint 2010 and 2013.

Currently, RiOS does not offer any latency optimization for FSSHTTP. Clients can continue to benefit from HTTP optimization, TCP streamlining, and data reduction.

• OneDrive for Business - OneDrive for Business is a personal library intended for storing and organizing work documents. As an integral part of Office 365 or SharePoint 2013, OneDrive for Business enables end users to work within the context of your organization, with features such as direct access to your company address book. OneDrive for Business is usually paired with Sync OneDrive for Business (sync client). This synchronization application enables you to synchronize OneDrive for Business library or other SharePoint site libraries to a local computer. OneDrive for Business is available with Office 2013 or with Office 365 subscriptions that include Microsoft Office 2013 applications.

RiOS 8.6 and later support optimization of traffic between OneDrive for Business and Sync OneDrive for Business. Office 365 OneDrive for Business requires a SteelHead SaaS. SteelHead SaaS runs in the Akamai SRIP network, and it optimizes both SharePoint and Exchange.

The following table shows a summary of SharePoint application visibility reporting.

SharePoint Version | HTTP | FPSE | WebDAV |

SharePoint 2007 | Yes | Yes | Yes |

SharePoint 2010 | Yes | Yes | Yes |

SharePoint 2013 | Yes | No | No |

For information about how to configure SharePoint optimization and more information about the SharePoint optimization options, see the SteelHead Management Console User’s Guide and the Riverbed Command-Line Interface Reference Manual. For information about AFE, see the SteelHead Deployment Guide and the SteelHead Management Console User’s Guide.

HTTP Optimization Module and Internet-Bound Traffic

Sometimes internet access from the branch offices is back-hauled to the data center first, before going out to the internet. Consequently, a significant portion of the WAN traffic is internet-bound traffic and you might be tempted to optimize that traffic.

For internet-bound traffic, Riverbed recommends that you enable HTTP automatic configuration.

Similar to other Layer-7 optimization modules, the HTTP optimization module expects the latency between the server and the server-side SteelHead to be LAN latency. With internet-bound traffic, the latency between the server-side SteelHead and the server is going to be far greater than typical LAN latency. In this case, the URL learning and parse-and-prefetch features are not very effective, and in certain cases they are slower than without optimization. Riverbed recommends that you do not disable HTTP automatic configuration—the SteelHead adjusts as necessary.

Figure: Internet Traffic Backhauled from the Branch Office to the Data Center Before Internet

HTTP and IPv6

HTTP enables you to specify settings for a particular server or subnet using an IPv6 address similar to how you configure HTTP for IPv4 addresses. The following shows an example from the CLI:

protocol http server-table subnet 2001:dddd::100/128 obj-pref-table no parse-prefetch no url-learning no reuse-auth no strip-auth-hdr no gratuitous-401 no force-nego-ntlm no strip-compress no insert-cookie yes insrt-keep-aliv no FPSE no WebDAV no FSSHTTP no

For more information about IPv6, see the SteelHead Deployment Guide.
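When many subnets need the same treatment, the server-table command can be generated rather than typed. The following Python sketch is a hypothetical helper, not a Riverbed tool. It uses only the option keywords and their order as they appear in the example above, and it assumes that every option is always written out with yes or no, as in that example. Check the Riverbed Command-Line Interface Reference Manual before relying on the generated command.

```python
import ipaddress

# Option keywords copied from the example command, in the same order.
OPTION_ORDER = [
    "obj-pref-table", "parse-prefetch", "url-learning", "reuse-auth",
    "strip-auth-hdr", "gratuitous-401", "force-nego-ntlm", "strip-compress",
    "insert-cookie", "insrt-keep-aliv", "FPSE", "WebDAV", "FSSHTTP",
]


def server_table_command(subnet, enabled=()):
    """Build a 'protocol http server-table subnet' command string.

    `subnet` is an IPv4 or IPv6 prefix such as '2001:dddd::100/128'.
    `enabled` lists the option keywords to set to 'yes'; all others get 'no'.
    """
    # Raises ValueError if the prefix is malformed.
    ipaddress.ip_network(subnet, strict=False)
    unknown = set(enabled) - set(OPTION_ORDER)
    if unknown:
        raise ValueError(f"unknown options: {sorted(unknown)}")
    parts = ["protocol http server-table subnet", subnet]
    for option in OPTION_ORDER:
        parts += [option, "yes" if option in enabled else "no"]
    return " ".join(parts)


command = server_table_command("2001:dddd::100/128", enabled={"insert-cookie"})
```

With insert-cookie as the only enabled option, the helper reproduces the example command shown above.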

Overview of the Web Proxy Feature

RiOS 9.1 and later include the web proxy feature. Web proxy uses the traditional single-ended internet HTTP-caching method enhanced by Riverbed for web browsing methodologies of today. The web proxy feature enables SteelHeads to provide a localized cache of web objects and files. The localized cache alleviates the cost of repeated downloads of the same data. Using the web proxy feature in the branch office provides a significant overall performance increase due to the localized serving of this traffic and content from the cache. Furthermore, multiple users accessing the same resources receive content at LAN speeds while freeing up valuable bandwidth.

Because this is a client-side feature, it is controlled and managed from an SCC. You can configure the in-path rule on the client-side SteelHead running the web proxy or on the SCC. You must also enable the web proxy globally on the SCC and add domains to the global HTTPs white list. For more information, see the SteelCentral Controller for SteelHead Deployment Guide, the SteelCentral Controller for SteelHead User’s Guide, and the SteelHead Management Console User’s Guide.

Note: The web proxy feature is currently supported in a physical in-path or a virtual in-path deployment model. The xx50 models, xx60 models, and the SteelHead-v do not support this feature.

Tuning Microsoft IIS Server

This section describes the steps required to modify the IIS server so that it can maximize the performance of the HTTP Authentication optimization module. In some instances, HTTP Authentication optimization requires the IIS server to behave in a certain way. For example, if NTLM authentication is in use, then the HTTP optimization module expects the NTLM authentication to use per-connection authentication.

Determining the Current Authentication Scheme on IIS

IIS 6 uses a metabase to store its authentication settings. Although you can manually modify the metabase, the supported method for querying or modifying the metabase is to use the built-in Visual Basic (VB) script adsutil.vbs. The adsutil.vbs script is located in the C:\inetpub\adminscripts directory on the IIS server.

To determine the current setting on the IIS server

• From the command prompt on the Windows server, enter the following command in the c:\inetpub\adminscripts directory:

cscript adsutil.vbs get w3svc/$WebsiteID$/root/NTAuthenticationProviders

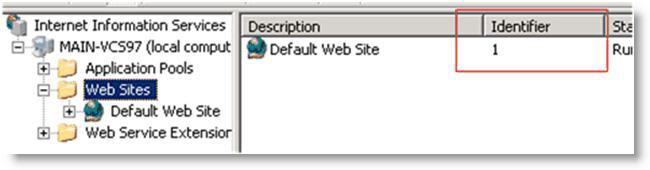

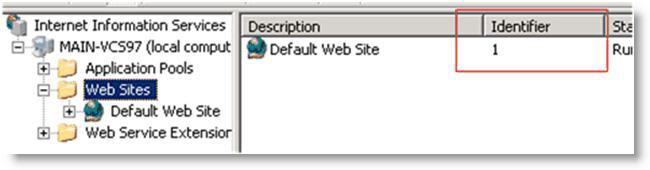

The $WebsiteID$ is the ID of the website hosted by the web server. You can find the $WebsiteID$ in the IIS Manager; it is the number in the Identifier column.

Figure: Web Site ID in the Identifier Column

If the output of the command is one of the following, it indicates that the server prefers Kerberos authentication and if that fails, it falls back to NTLM:

The parameter "NTAuthenticationProviders" is not set at this node.

NTAuthenticationProviders : (STRING) "Negotiate,NTLM"

If the output of the command is the following, it indicates that the server only supports NTLM authentication:

NTAuthenticationProviders : (STRING) "NTLM"
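If you need to check several websites, the interpretation rules above can be scripted. The following Python sketch is a hypothetical helper that classifies only the three outputs quoted in this section; anything else is reported as unknown. It does not run adsutil.vbs itself, so you pass it the text that the command printed.

```python
def classify_auth_scheme(adsutil_output):
    """Interpret the output of the NTAuthenticationProviders query.

    Returns 'Kerberos preferred, NTLM fallback', 'NTLM only', or 'unknown'.
    """
    text = adsutil_output.strip()
    # An unset parameter behaves like "Negotiate,NTLM".
    if "is not set at this node" in text:
        return "Kerberos preferred, NTLM fallback"
    # Take whatever follows the (STRING) type tag and drop the quotes.
    _, _, value = text.partition("(STRING)")
    providers = [p.strip() for p in value.strip().strip('"').split(",") if p.strip()]
    if providers == ["Negotiate", "NTLM"]:
        return "Kerberos preferred, NTLM fallback"
    if providers == ["NTLM"]:
        return "NTLM only"
    return "unknown"


scheme = classify_auth_scheme('NTAuthenticationProviders : (STRING) "NTLM"')
```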

Determining the Current Authentication Mode on IIS

Both NTLM and Kerberos authentication can support either per-connection or per-request authentication. To determine the current authentication mode on IIS, use the same VB script, adsutil.vbs, but query a different node:

cscript adsutil.vbs get w3svc/$WebsiteID$/root/AuthPersistSingleRequest

The output of the command should be similar to the following text:

The parameter "AuthPersistSingleRequest" is not set at this node; or

AuthPersistSingleRequest : (BOOLEAN) False; or

AuthPersistSingleRequest : (BOOLEAN) True

Per-Connection or Per-Request NTLM Authentication

If the authentication scheme is NTLM and the output of the authentication mode is either not set or false, then the server is configured with per-connection NTLM authentication. If the output of the authentication mode is true, then the server is configured with per-request NTLM authentication.
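The NTLM rule above can be expressed as a small Python function. This is a sketch with a hypothetical function name; it takes the text printed by the AuthPersistSingleRequest query and handles only the three output forms listed earlier.

```python
def ntlm_auth_mode(adsutil_output):
    """Map the AuthPersistSingleRequest query output to the NTLM mode.

    Not set or False means per-connection; True means per-request.
    """
    text = adsutil_output.strip()
    if "is not set at this node" in text:
        return "per-connection"
    # The value follows the (BOOLEAN) type tag.
    value = text.rsplit(")", 1)[-1].strip().lower()
    if value == "false":
        return "per-connection"
    if value == "true":
        return "per-request"
    raise ValueError(f"unrecognized output: {adsutil_output!r}")


mode = ntlm_auth_mode("AuthPersistSingleRequest : (BOOLEAN) True")
```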

Per-Connection or Per-Request Kerberos Authentication

If the authentication scheme is Kerberos, you must perform extra steps to determine whether the server is using per-connection or per-request authentication.

To determine whether Kerberos is using per-connection or per-request (applies to IIS 6.0)

1. Check the registry for a key named EnableKerbAuthPersist under HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\W3SVC\Parameters. If the key does not exist, or if the key exists but has a value of zero, then Kerberos is using per-request authentication.

Figure: Registry Editor Window

2. If the key exists but has a nonzero value, and the output from authentication mode is false, then Kerberos might be using per-connection authentication. Kerberos is using per-connection authentication if the server is running IIS 6.0 and has the patch installed per Microsoft's knowledge base article http://support.microsoft.com/kb/917557.

For information about IIS 7.0, go to http://support.microsoft.com/kb/954873.

The following table shows the different combinations and whether Kerberos would perform per-connection or per-request authentication.

Columns: value of EnableKerbAuthPersist/AuthPersistNonNTLM**. Rows: value of AuthPersistSingleRequest.

AuthPersistSingleRequest | Not-set | Nonzero | Zero |

Not-set | Per-request | Per-connection* | Per-request |

TRUE | Per-request | Per-request | Per-request |

FALSE | Per-request | Per-connection | Per-request |

* requires IIS patch

** AuthPersistNonNTLM of IIS 7 replaces EnableKerbAuthPersist of IIS 6
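The table above reduces to a simple rule, sketched here in Python. The function name and the use of None for a value that is not set are assumptions made for the illustration. The sketch does not check whether the IIS patch is installed, so treat a per-connection result for the not-set AuthPersistSingleRequest case as conditional on that patch.

```python
def kerberos_auth_mode(auth_persist_single_request, kerb_persist_value):
    """Look up the Kerberos authentication mode from the table.

    auth_persist_single_request: None (not set), True, or False.
    kerb_persist_value: None (not set) or the integer value of
        EnableKerbAuthPersist (IIS 6) / AuthPersistNonNTLM (IIS 7).
    """
    # A TRUE AuthPersistSingleRequest always yields per-request.
    if auth_persist_single_request is True:
        return "per-request"
    # A missing or zero registry value also yields per-request.
    if kerb_persist_value is None or kerb_persist_value == 0:
        return "per-request"
    # Nonzero value with AuthPersistSingleRequest not set or FALSE.
    # The not-set case requires the IIS patch (see the table footnote).
    return "per-connection"


mode = kerberos_auth_mode(False, 1)
```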

Changing the Authentication Scheme

You can change the authentication by using the adsutil.vbs script.

To modify the server so that it only supports NTLM authentication

• From the command prompt on the Windows server, enter the following command:

cscript adsutil.vbs set w3svc/$WebsiteID$/root/NTAuthenticationProviders "NTLM"

You can configure the server to attempt Kerberos authentication first before NTLM by changing the last parameter to "Negotiate,NTLM".

Remember to restart the IIS server after the changes have been made.

Changing the Per-Connection/Per-Request NTLM Authentication Mode

By default, NTLM uses per-connection authentication.

To change per-connection NTLM to per-request NTLM

• From the command prompt on the Windows server, enter the following command:

cscript adsutil.vbs set w3svc/$WebsiteID$/root/AuthPersistSingleRequest TRUE

To change NTLM back to its default, replace the word TRUE with FALSE and restart the IIS server.

Note: When you use NTLM, the HTTP optimization module works best when per-connection authentication is set.

Changing the Per-Connection/Per-Request Kerberos Authentication Mode

By default, Kerberos uses per-request authentication. There are several ways to configure Kerberos to use per-connection authentication. For details, see Per-Connection or Per-Request Kerberos Authentication.

HTTP Authentication Settings

The following table shows some of the recommended configurations for the HTTP Authentication optimization. In this instance, assume that it is not possible to make any modifications on the IIS server.

For example, if the authentication setting on the IIS server is per-request Kerberos, enabling Force NTLM forces the client to use NTLM and in turn, the HTTP optimization module can provide better optimization by using the URL learning and parse-and-prefetch features. If NTLM authentication is not an option, then the only possibility is to enable gratuitous 401.

If you modify the settings on the IIS server, then Force NTLM might not be necessary. In this case, only the first row of the following table is applicable.

The HTTP optimization module expects the default authentication behavior for both NTLM and Kerberos (in other words, per-connection authentication for NTLM and per-request authentication for Kerberos). Continuing with the earlier example, if the IIS server is configured with a nonstandard authentication scheme that uses per-request Kerberos and per-request NTLM, then Force NTLM does not help because it only changes per-request Kerberos to per-request NTLM. In this case, URL learning and parse-and-prefetch are not effective.

IIS Authentication | Recommended Configurations | Notes |

per-connection NTLM | Reuse Auth. + Strip Auth. Header + Grat. 401 | |

per-connection NTLM | Reuse Auth. + Strip Auth. Header | |

per-request Kerberos | Reuse Auth. + Force NTLM + Strip Auth. Header + Grat. 401 | N/A if NTLM is not an option |

per-request Kerberos | Grat. 401 | |

per-request NTLM | Grat. 401 | |

per-connection Kerberos | Reuse Auth. + Force NTLM + Strip Auth. Header | N/A if NTLM is not an option |

per-connection Kerberos | Reuse Auth. | |

per-connection Kerberos | Change to per-request Kerberos and turn on Grat. 401 | |

Don’t know | Reuse Auth. + Strip Auth. Header + Grat. 401 | |

Don’t know | Reuse Auth. + Strip Auth. Header | |

HTTP Optimization Module and Proxy Servers

Some network deployments might involve HTTP proxy servers to speed up resource retrieval, apply access control policies, or audit and filter contents. In general, the SteelHeads provide full optimization benefits even with proxy servers in place. Optimized connections can be limited or even hindered in the following circumstances:

• Proxy authentication - If the proxy server uses authentication, prefetching performance can be affected. As with web server authentication, when the proxy server uses per-connection authentication, prefetching can be limited on the connection over which prefetched resources are served. In per-request mode, prefetching is not possible because every request requires user authentication. HTTP authentication optimization features apply to both web server and proxy authentication.

• Proxy caching - A proxy server can maintain its own local cache to accelerate access to resources. Generally, proxy caching does not thwart HTTP optimization. However, if the proxy caches objects for an excessively long time, the object prefetch table (OPT) might not provide full benefits because the retrieved resource might not be sufficiently fresh.

• Selective proxying - If the same web server is accessed directly by a client and through a proxy server, URL learning might not work properly. URL learning builds a prefetch tree by observing ongoing requests. This is based on the assumption that the same URL is requested by the client if the same base page is requested again.

When a prefetch tree is created without a proxy, the tree consists of the observed partial URLs. If another client asks for the same page through a proxy, the proxy fails to forward the prefetched responses because it expects full URLs to be able to send the responses back to the client. Thus URL learning might not work well when a proxy is selectively employed for the same host. This issue affects only URL learning. Other prefetching schemes, such as parse-and-prefetch and object prefetch table, are not subject to it.

• Fat Client - Not all applications accessed through a web browser use the HTTP protocol. This is especially true for fat clients that run inside a web browser that might use proprietary protocols to communicate with a server. HTTP optimization does not improve performance in such cases.

Determining the Effectiveness of the HTTP Optimization Module

Figure: Response Header Contained in the Line X-RBT-Prefetched-By shows that when an object is optimized by the HTTP optimization module, the response header contains the line X-RBT-Prefetched-By. The X-RBT-Prefetched-By line also contains the name of the SteelHead, the system version, and the method of optimization. The different methods of optimization are:

• UL - URL learning.

• PP - parse-and-prefetch.

• MC - metadata response (pre-RiOS 6.0)

• PT - object prefetch table (RiOS 6.0 or later)

• AC - gratuitous 401

Figure: Response Header Contained in the Line X-RBT-Prefetched-By

The response header can be captured by using tcpdump, HTTPWatch, Fiddler, or similar tools. Because the HTTP optimization module is a client-side driven feature, the capture must be done on the client itself.
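Once you have captured the response headers, a short script can report whether an object was served by the HTTP optimization module. The following Python sketch is hypothetical. The exact layout of the X-RBT-Prefetched-By value is not specified here, so the sketch only looks for the header and scans its value from the end for one of the method codes listed above. The sample header value is invented for the illustration.

```python
import re

# Method codes as listed above.
METHODS = {
    "UL": "URL learning",
    "PP": "parse-and-prefetch",
    "MC": "metadata response",
    "PT": "object prefetch table",
    "AC": "gratuitous 401",
}


def prefetch_method(raw_headers):
    """Return the optimization method named in X-RBT-Prefetched-By.

    Returns None if the header is absent, or a generic string if the
    header is present but no known method code is found in its value.
    """
    for line in raw_headers.splitlines():
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == "x-rbt-prefetched-by":
            # Scan from the end, assuming the method code comes last.
            for token in reversed(re.findall(r"[A-Za-z0-9]+", value)):
                if token in METHODS:
                    return METHODS[token]
            return "optimized (method not recognized)"
    return None


# Hypothetical captured response; the header value format is an assumption.
sample = (
    "HTTP/1.1 200 OK\r\n"
    "Content-Type: image/gif\r\n"
    "X-RBT-Prefetched-By: branch-sh 9.1.0 (PT)\r\n"
)
method = prefetch_method(sample)
```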

Info-Level Logging

You can look at the client-side SteelHead log messages to determine whether or not the HTTP optimization module is functioning. The logging level on the SteelHead must be set at info level logging for the messages to appear in the log. If the HTTP optimization module is prefetching objects, a message similar to the following appears in the logs:

Sep 25 12:28:57 CSH sport[29969]: [http/client.INFO] 2354 {10.32.74.144:1051 10.32.74.143:80} Starting 40 Prefetches for Key->abs_path="/" host="10.32.74.143" port="65535" cookie="rbt-http=2354"

This applies only to URL learning and parse-and-prefetch. No log messages are displayed for metadata response or object prefetch table.

The key specified in the log message is not necessarily the object that triggered the prefetch operation. In RiOS 6.0 or later, the log message includes the object that triggered the prefetch operation.
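To count prefetch activity across a large log, you can extract the fields from messages of this form with a regular expression. The following Python sketch is based only on the sample message shown above; the function name is hypothetical, and other RiOS versions might format the message differently.

```python
import re

# Pattern derived from the sample log message in this section.
PREFETCH_RE = re.compile(
    r'Starting (?P<count>\d+) Prefetches for '
    r'Key->abs_path="(?P<path>[^"]*)" host="(?P<host>[^"]*)"'
)


def parse_prefetch_log(line):
    """Return the prefetch count, key path, and host, or None if no match."""
    match = PREFETCH_RE.search(line)
    if match is None:
        return None
    return {
        "count": int(match["count"]),
        "path": match["path"],
        "host": match["host"],
    }


log_line = (
    'Sep 25 12:28:57 CSH sport[29969]: [http/client.INFO] 2354 '
    '{10.32.74.144:1051 10.32.74.143:80} Starting 40 Prefetches for '
    'Key->abs_path="/" host="10.32.74.143" port="65535" cookie="rbt-http=2354"'
)
parsed = parse_prefetch_log(log_line)
```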

Use Case

While automatic configuration is typically the preferred method of configuration, you have the option of manual configuration. The following use case shows a manual configuration.

A customer has a 1.5 Mbps link with 100 ms latency between the branch office and the data center. The PCs in the remote office are running Microsoft Windows XP with Internet Explorer 7. Users in the remote offices are complaining of slow access for SAP Netweaver and Microsoft SharePoint. The SAP Netweaver server has an IP address of 172.30.1.10 and the Microsoft SharePoint server has an IP address of 172.16.2.20.

Because both SAP Netweaver and Microsoft SharePoint are well-known applications, the customer configured the following on the client-side SteelHead.

Figure: Two Subnet Server Settings Showing the New Recommended SharePoint Settings

After configuring the settings above, the customer noticed a significant improvement in response time for SAP Netweaver but no changes for Microsoft SharePoint—even though the connections are optimized with good data reduction. One of the users mentioned that the Microsoft SharePoint portal required authentication, which might be the reason why parse-and-prefetch did not work. Unfortunately, the system administrator in charge of the SharePoint portal cannot be reached at this moment and you cannot check the authentication setting on the server.

Instead of checking the authentication on the server, you can capture tcpdump traces and check for the authentication scheme in use.

Figure: TCP Dump Trace Confirming Kerberos Enabled shows the server has Kerberos enabled and hence the client attempts to authenticate using Kerberos first.

Figure: TCP Dump Trace Confirming Kerberos Enabled

Scrolling through the trace in Figure: TCP Dump Trace Showing Per-Request Kerberos confirms that per-request Kerberos is configured on the server.

Figure: TCP Dump Trace Showing Per-Request Kerberos

Figure: Enable Force NTLM shows that given this information, the best option is to enable Force NTLM for the SharePoint server.

Figure: Enable Force NTLM

Taking another trace on the client-side SteelHead confirms that the only authentication option available is NTLM. Because there is no other authentication option, the client is forced to authenticate via NTLM, and parse-and-prefetch can function again.

Figure: Trace Stream Showing NTLM as the Only Authentication Available

In this instance, it is not necessary to enable the other features, as the entire transaction took place over a single connection. If the client uses multiple TCP connections, then it might be necessary to enable reuse auth, strip auth header, and gratuitous 401. Enabling the rest of the features does not provide any benefit in this instance, but it does not cause any problems either.
