HTTP and Browser Behavior

The HTTP protocol uses a simple client-server model in which the client transmits a message (also referred to as a request) to the server and the server replies with a response. Apart from the actual request, which is to download information from the server, the request can carry additional embedded information for the server. For example, the request might contain a Referer header, which indicates the URL of the page that contains the hyperlink to the currently requested object. This information is very useful for the Web server to trace where requests originate. The following example shows a typical client request:

GET /wiki/List_of_HTTP_headers HTTP/1.1

Host: en.wikipedia.org

User-Agent: Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.2.7) Gecko/20100713 Firefox/3.6.7

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Language: en-us,en;q=0.5

Accept-Encoding: gzip,deflate

Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.7

Keep-Alive: 115

Connection: keep-alive

HTTP is a stateless protocol, which means that it has no mechanism to store the state of the browser at any given moment in time. Being able to store the state of the browser is particularly useful when, for example, a user who is shopping online has added items to the shopping cart but is then interrupted and closes the browser. With a stateless protocol, when the user revisits the Web site, the user has to add all the items back into the shopping cart. To address this issue, the server can issue a cookie to the browser. There are typically the following types of cookies:

Persistent cookie - remains on the user's PC after the user closes the browser.

Nonpersistent cookie - discarded after the user closes the browser.

In the earlier example, assuming that the server issues a persistent cookie, when the user closes the browser and later revisits the Web site, all the items from the previous visit are populated in the shopping cart. Cookies are also used to store user preferences, so that each time the user visits the Web site, the site is personalized to the user's liking.
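As a rough illustration of the two cookie types, the following sketch uses Python's standard http.cookies module to build two Set-Cookie values. The cookie names (cart_id, session_view) and the 30-day lifetime are hypothetical; the point is only that a cookie with an expiry attribute (here Max-Age) persists, while one without it is discarded when the browser closes.

```python
from http.cookies import SimpleCookie

def build_cookie(name, value, max_age_seconds=None):
    """Return a Set-Cookie value; adding Max-Age makes the cookie persistent."""
    cookie = SimpleCookie()
    cookie[name] = value
    if max_age_seconds is not None:
        cookie[name]["max-age"] = max_age_seconds
    return cookie[name].OutputString()

# Hypothetical names and lifetime, chosen only for illustration.
persistent = build_cookie("cart_id", "abc123", max_age_seconds=30 * 24 * 3600)
nonpersistent = build_cookie("session_view", "grid")

print(persistent)
print(nonpersistent)
```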

This example shows where to find the cookie in the client's GET request.

GET /cx/scripts/externalJavaScripts.js HTTP/1.1

Host: www.acme.com

User-Agent: Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.2.6) Gecko/20100625 Firefox/3.6.6

Accept: */*

Accept-Language: en-us,en;q=0.5

Accept-Encoding: gzip,deflate

Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.7

Keep-Alive: 115

Connection: keep-alive

Referer: http://www.acme.com/cpa/en_INTL/homepage

Cookie: TRACKER_CX=64.211.145.174.103141277175924680; CoreID6=94603422552512771759299&ci=90291278,90298215,90295525,90296369,90294155; _csoot=1277222719765; _csuid=4c20df4039584fb9; country_lang_cookie=SG-en
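To make the structure of such a request concrete, here is a minimal sketch of how a server-side tool might pull the Referer and Cookie headers out of a raw request. The function names are hypothetical, the request is an abbreviated copy of the example above, and the parser is deliberately simplified (it ignores message bodies and repeated headers).

```python
def parse_request(raw):
    """Split a raw HTTP request into its request line and a header dict.

    Simplification: blank lines are skipped and no body is expected.
    """
    lines = [line for line in raw.splitlines() if line.strip()]
    request_line = lines[0]
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return request_line, headers

def parse_cookie_header(value):
    """Turn 'a=1; b=2' into {'a': '1', 'b': '2'}."""
    cookies = {}
    for pair in value.split(";"):
        name, sep, val = pair.strip().partition("=")
        if sep:
            cookies[name] = val
    return cookies

# Abbreviated version of the request shown above.
RAW = (
    "GET /cx/scripts/externalJavaScripts.js HTTP/1.1\r\n"
    "Host: www.acme.com\r\n"
    "Referer: http://www.acme.com/cpa/en_INTL/homepage\r\n"
    "Cookie: _csoot=1277222719765; _csuid=4c20df4039584fb9; "
    "country_lang_cookie=SG-en\r\n"
    "\r\n"
)

request_line, headers = parse_request(RAW)
cookies = parse_cookie_header(headers["cookie"])
print(headers["referer"])
print(cookies["country_lang_cookie"])
```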

Web browser performance has improved considerably over the years. In the HTTP v1.0 specification, the client opened a connection to the server, downloaded the object, and then closed the connection. Although this was easy to understand and implement, it was very inefficient because each download triggered a separate TCP connection setup (TCP 3-way handshake). In the HTTP v1.1 specification, the browser has the ability to use the same connection to download multiple objects.
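The cost of the extra handshakes can be estimated with a back-of-the-envelope model. The sketch below assumes, purely for illustration, that a TCP handshake costs one round trip, that each request and response costs one round trip, that requests are sent serially, and that transfer time is negligible. The function names are hypothetical.

```python
def http10_time_ms(num_objects, rtt_ms):
    """One new connection per object: handshake + request for every object."""
    return num_objects * 2 * rtt_ms

def http11_time_ms(num_objects, rtt_ms):
    """One persistent connection: a single handshake, then one request per object."""
    return (1 + num_objects) * rtt_ms

# Ten objects over a link with 100 ms of round-trip latency.
print(http10_time_ms(10, 100))  # 2000
print(http11_time_ms(10, 100))  # 1100
```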

The next section discusses how the browser uses multiple connections, and a technique known as pipelining, to improve the browser's performance.

Multiple TCP Connections and Pipelining

Most modern browsers establish two or more TCP connections to the server for parallel downloads. The concept is simple—as the browser parses the Web page, it knows what objects it needs to download. Instead of sending the requests serially over a single connection, the requests are sent over parallel connections resulting in a faster download of the Web page.

Another technique used by browsers to improve performance is pipelining. Without pipelining, the client sends a request to the server and waits for the reply before sending the next request. If there are two parallel connections, a maximum of two requests are sent to the server concurrently. With pipelining, the browser sends the requests in batches instead of waiting for the server to respond to each individual request before sending the next one. Pipelining can be used with a single HTTP connection or with multiple HTTP connections. Although most servers support multiple HTTP connections, some servers do not support pipelining.

Figure 4‑1 illustrates the effect of pipelining.

Figure 4‑1. Pipelining
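To put rough numbers on parallel connections and pipelining, the following sketch counts request and response round trips under a simplified model: every connection is already open, a pipelined batch is answered within one round trip, and transfer time is ignored. The function name and the batch size are assumptions made for illustration.

```python
import math

def round_trips(num_objects, connections, batch_size=1):
    """Round trips needed when each connection sends batch_size requests at a time.

    batch_size=1 models a browser that does not pipeline.
    """
    per_round = connections * batch_size
    return math.ceil(num_objects / per_round)

print(round_trips(12, connections=1))                # serial, one connection
print(round_trips(12, connections=2))                # two parallel connections
print(round_trips(12, connections=2, batch_size=3))  # parallel and pipelined
```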

HTTP Authentication

To provide access control to the contents on the Web server, authentication is enabled on the server. There are many ways to perform authentication—for example, certificates and smartcards. The most common schemes are NT LAN Manager (NTLM) and Kerberos. Although the two authentication schemes are not specific to HTTP and are used by other protocols such as CIFS and MAPI, there are certain aspects that are specific to HTTP.

The following steps describe four-way NTLM authentication shown in Figure 4‑2:

The client sends a GET request to the server.

The server, configured with NTLM authentication, sends back a 401 Unauthorized message to inform the client that it needs to authenticate. Embedded in the response is the authentication scheme supported by the server. This is indicated by the WWW-Authenticate line.

The client sends another GET request and attaches an NTLM Type-1 message to the request. The NTLM Type-1 message provides the set of capability flags of the client (for example, encryption key size).

The server responds with another 401 Unauthorized message, but this time it includes an NTLM Type-2 message in the response. The NTLM Type-2 message contains the server's NTLM challenge.

The client computes the response to the challenge and once again attaches this to another GET request. Assuming the server accepts the response, the server delivers the object to the client.

Assuming a network with 200 ms of round-trip latency, the three request and response exchanges take at least 600 ms before the browser begins to download the object.

Figure 4‑2. Four-Way NTLM Authentication
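The exchange above can be walked through with a toy model. In this sketch the strings such as "NTLM <type-1>" are placeholders rather than real NTLM messages (which are Base64-encoded binary blobs), and the function names are hypothetical. Each loop iteration stands for one request and response round trip.

```python
def server_respond(auth_header):
    """Toy server: return (status, WWW-Authenticate value or None)."""
    if auth_header is None:
        return 401, "NTLM"                     # advertise the scheme
    if auth_header == "NTLM <type-1>":
        return 401, "NTLM <type-2 challenge>"  # send the challenge
    if auth_header == "NTLM <type-3 response>":
        return 200, None                       # deliver the object
    return 401, "NTLM"

def fetch_with_ntlm(rtt_ms):
    """Replay the client's three GET requests and total the latency."""
    auth_sequence = [None, "NTLM <type-1>", "NTLM <type-3 response>"]
    statuses = []
    elapsed_ms = 0
    for auth_header in auth_sequence:
        status, _challenge = server_respond(auth_header)
        statuses.append(status)
        elapsed_ms += rtt_ms  # one request/response round trip
    return statuses, elapsed_ms

statuses, elapsed_ms = fetch_with_ntlm(200)
print(statuses, elapsed_ms)  # [401, 401, 200] 600
```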

For a more in-depth discussion on NTLM authentication, go to:

http://www.innovation.ch/personal/ronald/ntlm.html

A detailed explanation of the Kerberos protocol is beyond the scope of this guide. For details, go to:

http://simple.wikipedia.org/wiki/Kerberos

http://technet.microsoft.com/en-us/library/cc772815(WS.10).aspx

HTTP authentication can be performed per request or per connection. A server configured with per-request authentication requires the client to authenticate every single request before the server delivers the object to the client. If there are 100 objects (for example, .jpg images), the client authenticates 100 times with per-request authentication. With per-connection authentication, if the client opens only a single connection to the server, the client needs to authenticate with the server only once. No further authentication is necessary. Using the same example, only a single authentication is required for the 100 objects. Whether the Web server performs per-request or per-connection authentication depends on the software. For Microsoft's IIS server, the default is per-connection authentication when using NTLM authentication and per-request authentication when using Kerberos authentication.
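The difference between the two modes comes down to simple counting. The sketch below is a minimal model with a hypothetical function name; it assumes that per-connection authentication happens exactly once on each connection that carries at least one request.

```python
def authentications_needed(num_objects, num_connections, per_request):
    """Count how many times the client must authenticate."""
    if per_request:
        return num_objects
    # Per-connection: once for each connection that is actually used.
    return min(num_objects, num_connections)

# The 100-object example over a single connection.
print(authentications_needed(100, 1, per_request=True))   # 100
print(authentications_needed(100, 1, per_request=False))  # 1
```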

When the browser first connects to the server, it does not know whether the server has authentication enabled. If the server requires authentication, the server responds with a 401 Unauthorized message. Within the headers of the response, the server indicates which authentication scheme it supports in the WWW-Authenticate line. If the server supports more than one authentication scheme, the response contains multiple WWW-Authenticate lines. For example, if a server supports both Kerberos and NTLM, the following lines appear in the response:

WWW-Authenticate: Negotiate

WWW-Authenticate: NTLM

When the browser receives the Negotiate keyword in the WWW-Authenticate line, it first tries Kerberos authentication. If Kerberos authentication fails, it falls back to NTLM authentication.
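The selection logic just described can be sketched as follows. The function name is hypothetical, and the kerberos_succeeds flag stands in for whatever determines whether the Kerberos attempt works (for example, whether the client can obtain a ticket); real browsers apply additional policy that is not modeled here.

```python
def choose_scheme(www_authenticate_values, kerberos_succeeds):
    """Pick an authentication scheme from the WWW-Authenticate lines of a 401."""
    offered = [value.split()[0] for value in www_authenticate_values if value.strip()]
    if "Negotiate" in offered and kerberos_succeeds:
        return "Kerberos"
    if "Negotiate" in offered or "NTLM" in offered:
        return "NTLM"  # fallback when Kerberos fails or was never offered
    return None        # no scheme this sketch knows about

offered = ["Negotiate", "NTLM"]
print(choose_scheme(offered, kerberos_succeeds=True))   # Kerberos
print(choose_scheme(offered, kerberos_succeeds=False))  # NTLM
```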

Assuming that multiple TCP connections are in use, after authentication succeeds on the first connection, the browser downloads and parses the base page. To improve performance, most browsers parse the Web page and start to download the objects over parallel connections (for details, see Multiple TCP Connections and Pipelining).

Connection Jumping

When the browser establishes the second, or a subsequent, TCP connection for parallel or pipelined downloads, it does not remember whether the server requires authentication. Therefore, the browser sends multiple GET requests over the second, or additional, TCP connection without the authentication header. The server rejects the requests with 401 Unauthorized messages. When the browser receives the 401 Unauthorized message on the second connection, it becomes aware that the server requires authentication, and it initiates the authentication process. However, instead of keeping the authentication requests for all the previously requested objects on the second connection, some of the requests jump over to the first connection, which is already authenticated. This is known as connection jumping.

Connection jumping is behavior specific to Internet Explorer. It does not occur when you use Internet Explorer 8 with Windows 7.

Figure 4‑3 shows the client authenticating with the server and requesting the index.html Web page. The client parses the Web page and initiates a second connection for parallel download.

Figure 4‑3. Client Authenticates with the Server

Figure 4‑4 shows the client pipelining the requests to download objects 1.jpg, 2.jpg, and 3.jpg on the second connection. However, because the server requires authentication, the requests for those objects are all rejected.

Figure 4‑4. Rejected Second Connection

Figure 4‑5 shows the client sending the authentication request to the server. Instead of staying on the second connection, some of the requests have jumped over to the first connection, which is already authenticated.

Figure 4‑5. Connection Authentication Jumping
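The sequence in Figures 4‑3 through 4‑5 can be mimicked with a toy model that counts full authentication handshakes. Everything here is an illustrative assumption: the class and function names are hypothetical, the server is assumed to restart the handshake whenever a request carries authentication, and the choice of which retries jump to the first connection is arbitrary.

```python
class ToyServer:
    """Per-connection authentication with a handshake counter."""

    def __init__(self):
        self.authenticated = set()
        self.handshakes = 0

    def request(self, conn_id, with_auth=False):
        if with_auth:
            # Assumption: an authentication request always restarts the
            # handshake, even on an already authenticated connection.
            self.authenticated.discard(conn_id)
            self.handshakes += 1
            self.authenticated.add(conn_id)
            return 200
        return 200 if conn_id in self.authenticated else 401

def replay(retries):
    """Replay the figures, then retry the three rejected objects as given."""
    server = ToyServer()
    server.request(1, with_auth=True)                 # index.html on connection 1
    rejected = [server.request(2) for _ in range(3)]  # 1.jpg, 2.jpg, 3.jpg pipelined
    for conn_id, with_auth in retries:
        server.request(conn_id, with_auth)
    return rejected, server.handshakes

# Retries stay on connection 2: one extra handshake covers all three objects.
no_jump = replay([(2, True), (2, False), (2, False)])
# Two retries jump to connection 1 and each one forces a new handshake.
jump = replay([(2, True), (1, True), (1, True)])

print(no_jump)  # ([401, 401, 401], 2)
print(jump)     # ([401, 401, 401], 4)
```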

This connection jumping behavior causes the following problems, both of which affect performance:

If an authentication request appears on an already authenticated connection, the server can reset the state of the connection and force it to go through the entire authentication process again.

The browser has effectively turned this into per-request authentication, even though the server can support per-connection authentication.

HTTP Proxy Servers

Most enterprises have proxy servers deployed in the network. Proxy servers serve the following purposes:

Access control

Performance improvement

Many companies have compliance policies that restrict what users can or cannot access from the corporate network. Enterprises meet this requirement by deploying proxy servers. The proxy servers act as a single point through which all Web traffic entering or exiting the network must pass, so the administrator can enforce the necessary policies. In addition to controlling access, the proxy server might act as a cache engine that caches frequently accessed data. By doing this, it can eliminate the need to fetch the same content repeatedly for different users.

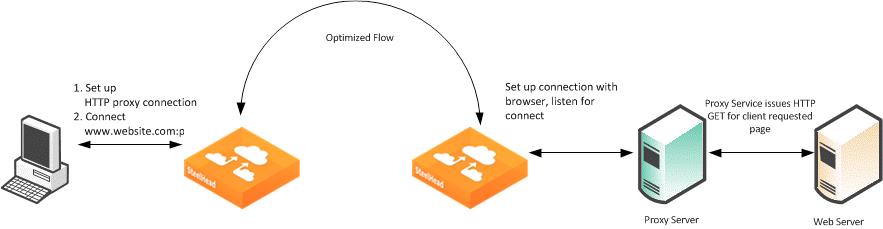

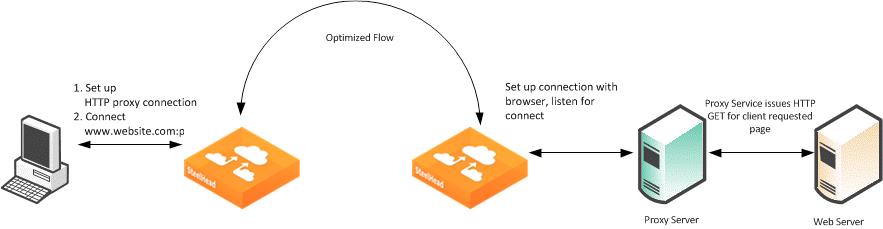

Communication between the browser and an HTTP proxy server differs from communication between the browser and the requested HTTP server. When you use a defined proxy server, the browser initiates a TCP connection with that proxy server. The initiation starts with a standard TCP three-way handshake. Next, the browser requests a Web page and issues a CONNECT statement to the proxy server, indicating which Web server it wants to connect to.

Figure 4‑6. Connection to a Web Server Through a Proxy

In a standard HTTP request with an open proxy server, the proxy next opens a connection to the requested Web server and returns the requested objects. However, most corporate environments use proxy servers as outbound firewall devices and require authentication by issuing a 407 Proxy Authentication Required code. After authorization completes successfully, the proxy returns the originally requested objects. Authorization by the proxy server can include verifying the username and password and checking whether the destination Web site is on the approved list.

Figure 4‑7. Client Sending NTLM Authorization
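A client distinguishes a proxy challenge from a Web server challenge by the status code, and answers each with a different request header. The sketch below captures only that mapping; the function name is hypothetical, while the header names are the standard HTTP ones.

```python
def credentials_header_for(status_code):
    """Return the request header a client uses to answer an auth challenge."""
    if status_code == 401:
        # Challenge from the Web server, sent in WWW-Authenticate.
        return "Authorization"
    if status_code == 407:
        # Challenge from the proxy, sent in Proxy-Authenticate.
        return "Proxy-Authorization"
    return None  # not an authentication challenge

print(credentials_header_for(407))  # Proxy-Authorization
print(credentials_header_for(401))  # Authorization
```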

SSL connections are different. For a standard HTTP request, the SteelHead optimizes proxy traffic without any issues: standard HTTP optimization applies, and the traffic benefits from both data reduction and latency reduction. Due to the nature of proxy server connections, an additional step is required to set up SSL connections. Prior to RiOS 7.0, SSL connections were set up as pass-through connections and were not optimized, because the SSL sessions were negotiated after the initial TCP setup. In RiOS 7.0 or later, RiOS can negotiate SSL after the setup of the session.

Figure 4‑8. Setting Up an SSL Connection Through a Proxy Server

Figure 4‑8 shows an SSL connection through a proxy server. The steps are as follows:

The client completes a TCP three-way handshake with the proxy server. The HTTP proxy connection is made on this TCP connection.

The client issues a CONNECT statement to the host it wants to connect with, for example:

CONNECT www.riverbed.com:443 HTTP/1.0

User-Agent: Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.0.3705; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)

Host: www.riverbed.com

Content-Length: 0

Proxy-Connection: Keep-Alive

Pragma: no-cache

The proxy server forwards the connect request to the remote Web server.

The remote HTTP server accepts the connection and sends back an ACK.

The client sends an SSL Client Hello Message.

The server-side SteelHead intercepts the Client Hello Message and begins to set up an SSL inner-channel connection with the client. The SteelHead also begins to set up the SSL conversation with the original Web server.

The private key and certificate of the requested HTTP server must exist on the server-side SteelHead, along with all servers targeted for SSL optimization.

An entry is added to a hosts table on the server-side SteelHead.

The host table is how a SteelHead discerns which key is associated with each SSL connection, because each SSL session is to the same IP and port pair. The hostname becomes the key field for managing this connection.

The server-side SteelHead passes the session to the client-side SteelHead using SSL optimization. For information about SSL optimization, see SSL Deployments.
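The role of the hostname as the lookup key in the steps above can be pictured with a small sketch. This is not how RiOS implements its hosts table; the table contents, the certificate label, and the function names are all hypothetical. The sketch only shows why the hostname from the CONNECT line, rather than the IP address and port of the proxy, identifies which key to use.

```python
def parse_connect_target(request_line):
    """Extract (hostname, port) from a line such as 'CONNECT host:443 HTTP/1.0'."""
    method, target, _version = request_line.split()
    if method != "CONNECT":
        raise ValueError("not a CONNECT request")
    hostname, _, port = target.rpartition(":")
    return hostname.lower(), int(port)

# Hypothetical hosts table: hostname -> label of the certificate and key to use.
HOSTS_TABLE = {"www.riverbed.com": "riverbed-web-cert"}

def lookup_certificate(request_line, hosts_table):
    """Return the certificate label for the requested host, or None if unknown."""
    hostname, _port = parse_connect_target(request_line)
    return hosts_table.get(hostname)

line = "CONNECT www.riverbed.com:443 HTTP/1.0"
print(parse_connect_target(line))
print(lookup_certificate(line, HOSTS_TABLE))
```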

Configuring HTTP SSL Proxy Interception

In some HTTP proxy implementations, the proxy server terminates the SSL session to the Web server to inspect the Web payload for policy enforcement or surveillance. In this scenario, the SSL server that is defined in the SSL Main Settings page on the server-side SteelHead is the SSL proxy device, and not the server being requested by the browser. If you use self-signed certificates from the proxy server, you must add the CA from the proxy server to the SteelHead trusted certificate authority (CA) list.

Figure 4‑9. SSL Session Setup Between the Proxy Server and the Client

Figure 4‑9 shows that the client requests a page from http://www.riverbed.com/. This request is sent to the proxy server as a CONNECT statement. Because this is an intercepting proxy device, the SSL session is set up between the proxy server and the client, typically with an internally trusted certificate. You must use this certificate on the server-side SteelHead for SSL optimization to function correctly.

Figure 4‑10. Adding a CA

To configure RiOS SSL traffic interception

Before you begin:

You must have a valid SSL license.

The server-side and client-side SteelHeads must be running RiOS 7.0 or later.

Choose Optimization > SSL: SSL Main Settings and select Enable SSL Optimization.

The private key and the certificate of the requested Web server, and all the servers targeted for SSL optimization, must exist on the server-side SteelHead.

Choose Optimization > SSL: Advanced Settings and select Enable SSL Proxy Support.

Create an in-path rule on the client-side SteelHead (shown in Figure 4‑11) to identify the proxy server IP address and port number with the SSL preoptimization policy for the rule.

Figure 4‑11. In-Path Rule