SteelFusion and Fibre Channel

This chapter provides general information about Fibre Channel LUNs and how they interact with SteelFusion.

Overview of Fibre Channel

Core-v can connect to Fibre Channel LUNs at the data center and export them to the branch office as iSCSI LUNs. At the branch, the iSCSI LUNs can be mounted by a VMware ESX or ESXi hypervisor (running either internally on VSP or on external ESX or ESXi servers), or directly by Microsoft Windows virtual servers through Microsoft iSCSI Initiator. A virtual Windows file server running on VSP can then share the mounted drive with branch office client PCs through the CIFS protocol (see Figure: SteelFusion solution with Fibre Channel).


Figure: SteelFusion solution with Fibre Channel

Fibre Channel is the predominant storage networking technology for enterprise business. Fibre Channel's share of storage connectivity is estimated at 78 percent, versus 22 percent for iSCSI. IT administrators continue to rely on the known, trusted, and robust Fibre Channel technology.

Fibre Channel is a set of integrated standards developed to provide a mechanism for transporting data at the fastest rate possible with the least delay. In storage networking, Fibre Channel is used to interconnect host and application servers with storage systems. Typically, servers and storage systems communicate using the SCSI protocol. In a storage area network (SAN), the SCSI protocol is encapsulated and transported through Fibre Channel frames.

The Fibre Channel (FC) protocol processing on the host servers and the storage systems is mostly carried out in hardware.

Figure: HBA FC protocol stack shows the layers of the FC protocol stack and which portions are implemented in hardware and which in software for an FC host bus adapter (HBA). FC HBA vendors include QLogic, Emulex, and LSI.

Figure: HBA FC protocol stack

Special switches are also required to transport Fibre Channel traffic; vendors in this market include Cisco and Brocade. Switches implement many of the FC protocol services, such as the name server, domain server, and zoning. Zoning is particularly important because, together with LUN masking on the storage systems, it implements storage access control: it limits which initiators (servers) can reach which LUNs, and through which targets. An initiator and a target are visible to each other only if they belong to the same zone.

LUN masking is an access control mechanism implemented on the storage systems. NetApp implements LUN masking through initiator groups, which enable you to define a list of worldwide names (WWNs) that are allowed to access a specific LUN. EMC implements LUN masking using masking views that contain storage groups, initiator groups, and port groups.

LUN masking is important because Windows-based servers, for example, attempt to write volume labels to all available LUNs. This attempt can make the LUNs unusable by other operating systems and can result in data loss.
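The two access controls described above can be modeled as a conjunction: the switch must place the initiator and target in a common zone, and the storage system must list the initiator in the group mapped to the LUN. The following is a minimal conceptual sketch, not any vendor's API; the class names, WWNs, and zone names are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Fabric:
    """FC switch view: zone name -> set of member port WWNs (hypothetical model)."""
    zones: dict[str, set[str]] = field(default_factory=dict)

    def can_see(self, initiator: str, target: str) -> bool:
        # An initiator and a target see each other only if some zone contains both.
        return any(initiator in members and target in members
                   for members in self.zones.values())

@dataclass
class StorageSystem:
    """Array view: LUN number -> initiator group (WWNs allowed to access the LUN)."""
    masking: dict[int, set[str]] = field(default_factory=dict)

    def is_unmasked(self, initiator: str, lun: int) -> bool:
        return initiator in self.masking.get(lun, set())

def can_access(fabric: Fabric, storage: StorageSystem,
               initiator: str, target: str, lun: int) -> bool:
    # Both controls must allow the path: zoning on the switch, masking on the array.
    return fabric.can_see(initiator, target) and storage.is_unmasked(initiator, lun)

# Hypothetical WWNs for illustration only.
ESXI_A = "21:00:00:24:ff:00:00:01"
ESXI_B = "21:00:00:24:ff:00:00:02"
ARRAY_PORT = "50:06:01:60:00:00:00:01"

fabric = Fabric(zones={"zone_esxi_a": {ESXI_A, ARRAY_PORT}})
storage = StorageSystem(masking={0: {ESXI_A, ESXI_B}})

print(can_access(fabric, storage, ESXI_A, ARRAY_PORT, 0))  # True: zoned and unmasked
print(can_access(fabric, storage, ESXI_B, ARRAY_PORT, 0))  # False: unmasked, but not zoned
print(can_access(fabric, storage, ESXI_A, ARRAY_PORT, 1))  # False: LUN 1 not mapped to ESXI_A
```

The ESXI_B case shows why both layers matter: adding a host to an initiator group on the array is not enough if the switch zoning does not also include it.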

Fibre Channel LUN considerations

Fibre Channel LUNs are distinct from iSCSI LUNs in several important ways:

• No MPIO configuration - Multipathing support is performed by the ESXi system.

• SCSI reservations - SCSI reservations are not taken on Fibre Channel LUNs.

• Additional HA configuration required - Configuring HA for Core-v failover peers requires that each appliance be deployed on a separate ESXi system.

• Maximum of 60 Fibre Channel LUNs per ESXi system - ESXi allows a maximum of 60 RDMs per VM. Within a VM, each RDM is represented by a virtual SCSI device, and a VM can have at most four virtual SCSI controllers with 15 virtual SCSI devices each.
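The 60-LUN limit is simple arithmetic: one RDM consumes one virtual SCSI device slot, so the ceiling is usable controllers times devices per controller. A minimal sketch, using the four-controller and 15-device figures stated above as defaults; the function name is hypothetical.

```python
def max_rdm_luns(controllers: int = 4, devices_per_controller: int = 15) -> int:
    """Upper bound on RDM LUNs one VM can attach.

    Each RDM occupies one virtual SCSI device slot, so the bound is the number
    of usable virtual SCSI controllers times the devices each one can hold.
    """
    if controllers < 0 or devices_per_controller < 0:
        raise ValueError("counts must be non-negative")
    return controllers * devices_per_controller

print(max_rdm_luns())  # 60
```

Passing a smaller `controllers` value shows how the ceiling shrinks when a controller is unavailable for RDMs.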

How VMware ESXi virtualizes Fibre Channel LUNs

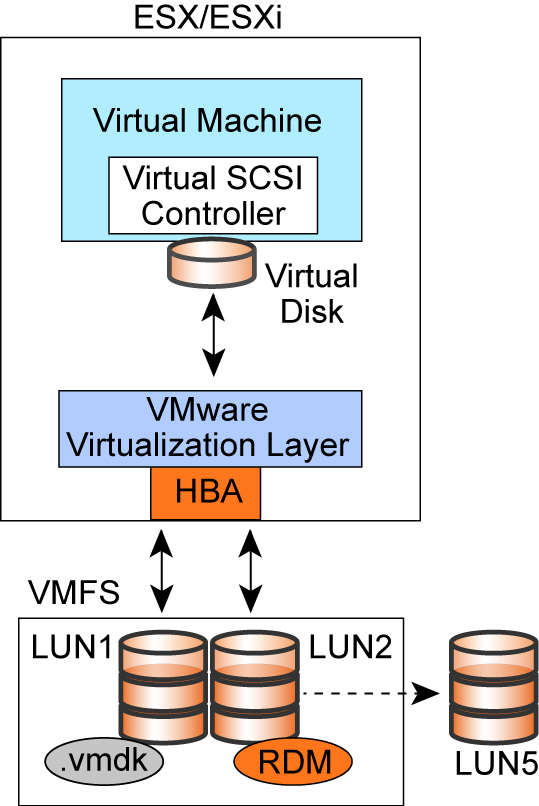

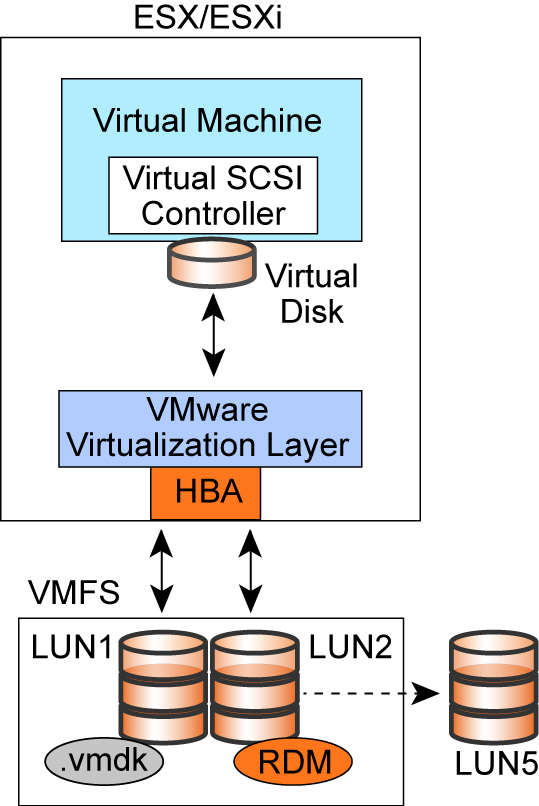

The VMware ESXi hypervisor provides not only CPU and memory virtualization but also host-level storage virtualization, which logically abstracts the physical storage layer from virtual machines. Virtual machines do not access the physical storage or LUNs directly, but instead use virtual disks. To access virtual disks, a virtual machine uses virtual SCSI controllers.

Each virtual disk that a virtual machine can access through one of the virtual SCSI controllers resides on a VMware Virtual Machine File System (VMFS) datastore or a raw disk. From the standpoint of the virtual machine, each virtual disk appears as if it were a SCSI drive connected to a SCSI controller. Whether the actual physical disk device is being accessed through parallel SCSI, iSCSI, network, or Fibre Channel adapters on the host is transparent to the guest operating system.

Virtual machine file system

In a simple configuration, the disks of virtual machines are stored as files on a Virtual Machine File System (VMFS). When guest operating systems issue SCSI commands to their virtual disks, the virtualization layer translates these commands to VMFS file operations.

Raw device mapping

A raw device mapping (RDM) is a special file in a VMFS volume that acts as a proxy for a raw device, such as a Fibre Channel LUN. With the RDM, an entire Fibre Channel LUN can be directly allocated to a virtual machine.

Figure: ESXi storage virtualization

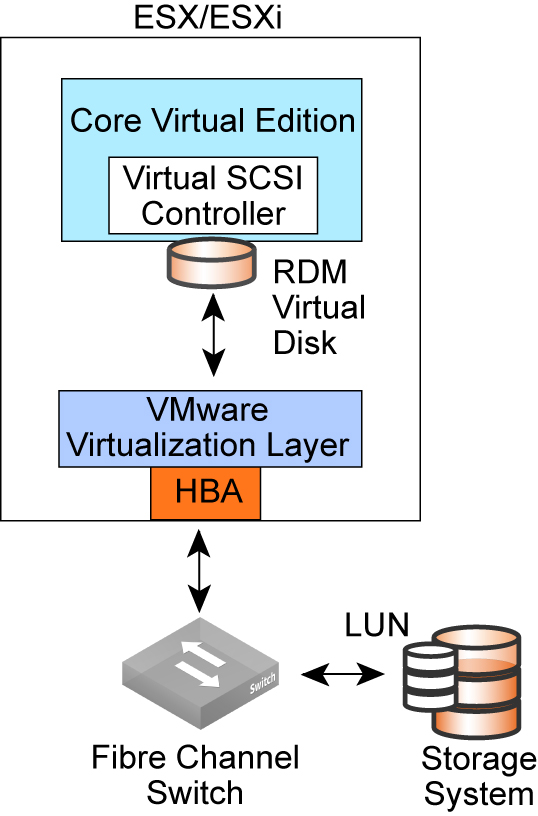

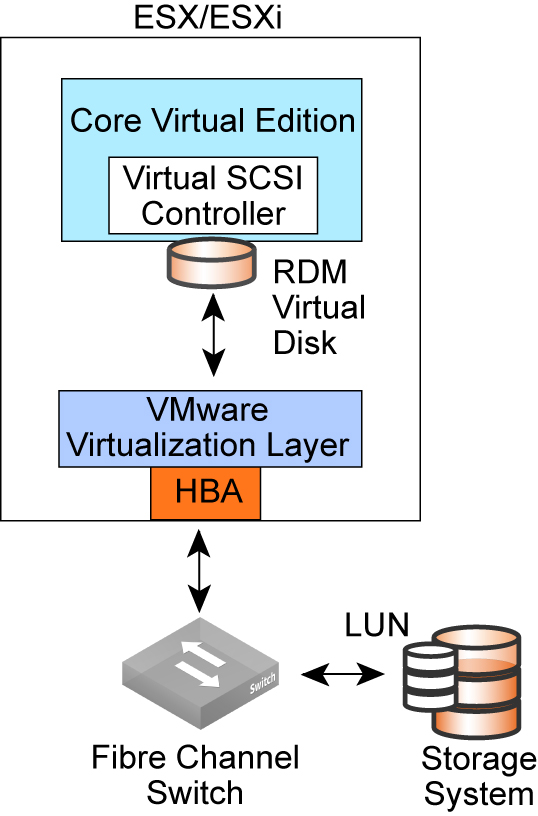

How Core-v connects to RDM Fibre Channel LUNs

Core-v uses RDM to mount Fibre Channel LUNs and export them to the Edge at the branch office. The Edge exposes those LUNs as iSCSI LUNs to the branch office clients.

Figure: Core-VM FC LUN to RDM Mapping

When Core-v interacts with an RDM Fibre Channel LUN, the following process takes place:

1. Core-v issues SCSI commands to the RDM disk.

2. The device driver in the Core-v operating system communicates with the virtual SCSI controller.

3. The virtual SCSI controller forwards the command to the ESXi virtualization layer (the VMkernel).

4. The VMkernel performs the following tasks:

• Locates the RDM file in the VMFS.

• Maps the SCSI requests for the blocks on the RDM virtual disk to blocks on the appropriate Fibre Channel LUN.

• Sends the modified I/O request from the device driver in the VMkernel to the HBA.

5. The HBA performs the following tasks:

• Packages the I/O request according to the rules of the FC protocol.

• Transmits the request to the storage system.

6. A Fibre Channel switch receives the request and forwards it to the storage system that the host wants to access.
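The VMkernel's role in this I/O path can be sketched as a lookup plus a bounds check. Because an RDM maps an entire LUN, block N of the RDM virtual disk is block N of the LUN; only the destination changes. This is a conceptual model with hypothetical names (`ScsiRequest`, `RdmMapping`, `vmkernel_translate`, the datastore path, and the LUN identifier), not VMkernel code.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ScsiRequest:
    device: str   # virtual device node seen by Core-v, e.g. "scsi1:0"
    lba: int      # starting logical block address
    blocks: int   # number of blocks to read or write

@dataclass(frozen=True)
class RdmMapping:
    mapping_file: str  # RDM proxy file on a VMFS datastore (hypothetical path)
    lun_id: str        # identifier of the backing FC LUN (hypothetical format)
    lun_blocks: int    # LUN size in blocks

def vmkernel_translate(req: ScsiRequest,
                       rdm_table: dict[str, RdmMapping]) -> tuple[str, int, int]:
    """Resolve a request on an RDM disk to (LUN, LBA, block count) for the HBA."""
    rdm = rdm_table[req.device]  # locate the RDM file for this virtual device
    if req.lba < 0 or req.blocks <= 0 or req.lba + req.blocks > rdm.lun_blocks:
        raise ValueError("request outside LUN bounds")
    # Whole-LUN mapping: the block address passes through unchanged.
    return (rdm.lun_id, req.lba, req.blocks)

rdm_table = {
    # Hypothetical: SCSI (1:0) is an RDM for a 1 TiB LUN with 512-byte blocks.
    "scsi1:0": RdmMapping("[san_ds] core-v/lun0_rdm.vmdk", "lun-0", 2 * 1024**3),
}
print(vmkernel_translate(ScsiRequest("scsi1:0", lba=2048, blocks=8), rdm_table))
# ('lun-0', 2048, 8)
```

The returned tuple stands in for the request the VMkernel hands to the HBA driver, which then frames it according to the FC protocol.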

Requirements for Core-v and Fibre Channel SANs

The following table describes the hardware and software requirements for deploying Core-v with Fibre Channel SANs.

Requirements | Notes |

SteelFusion Edge 4.0 and later | |

Core-v with SteelFusion 2.5 or later | |

VMware ESX/ESXi 4.1 or later | |

Storage system, HBA, and firmware combination supported in conjunction with ESX/ESXi systems | For details, see the VMware Compatibility Guide. |

Reserve CPU(s) and RAM on the ESX/ESXi system | Core model V1000U: 2 GB RAM, 2 CPUs; Core model V1000L: 4 GB RAM, 4 CPUs; Core model V1000H: 8 GB RAM, 8 CPUs; Core model V1500L: 32 GB RAM, 8 CPUs; Core model V1500H: 48 GB RAM, 12 CPUs |

Fibre Channel license on the storage system | In some storage systems, Fibre Channel is a licensed feature. |

Specifics about Fibre Channel LUNs versus iSCSI LUNs

Using Fibre Channel LUNs on Core-v in conjunction with VMware ESX/ESXi differs from using iSCSI LUNs directly on the Core in several ways, as listed in the following table.

Feature | Fibre Channel LUNs Versus iSCSI LUNs |

Multipathing | The ESX/ESXi system (not the Core) performs multipathing for the Fibre Channel LUNs. |

VSS snapshots | Snapshots created using the Microsoft Windows diskshadow command are not supported on Fibre Channel LUNs. |

SCSI reservations | SCSI reservations are not taken on Fibre Channel LUNs. |

Core HA deployment | Active and failover Core-vs must be deployed on separate ESX/ESXi systems. |

Max 60 Fibre Channel LUNs per ESX/ESXi system | ESX/ESXi allows a maximum of four virtual SCSI controllers per VM, and each controller supports a maximum of 15 SCSI devices. Hence, a maximum of 60 Fibre Channel LUNs are supported per ESX/ESXi system. |

VMware vMotion not supported | Core-vs cannot be moved to a different ESXi server using VMware vMotion. |

VMware HA not supported | A Core-v cannot be moved to another ESXi server through the VMware HA mechanism. To ensure that the Core-v stays on a specific ESXi server, create an affinity rule as described in this VMware Knowledge Base article: http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1005508 |

Deploying Fibre Channel LUNs on Core-v appliances

This section describes the process and procedures for deploying Fibre Channel LUNs on Core-v appliances.

Deployment prerequisites

Before you can deploy Fibre Channel LUNs on Core-v appliances, the following conditions must be met:

• The active Core-v must be deployed and powered up on the ESX/ESXi system.

• The failover Core-v must be deployed and powered up on the second ESX/ESXi system.

• A Fibre Channel LUN must be available on the storage system.

• Preconfigured initiator and storage groups for LUN mapping to the ESX/ESXi systems must be available.

• Preconfigured zoning on the Fibre Channel switch for LUN visibility to the ESX/ESXi systems across the SAN fabric must be available.

• You must have administrator access to the storage system, the ESX/ESXi system, and SteelFusion appliances.

For more information about how to set up Fibre Channel LUNs with the ESX/ESXi system, see the relevant edition of the VMware Fibre Channel SAN Configuration Guide and the VMware vSphere ESXi vCenter Server Storage Guide.

Configuring Fibre Channel LUNs

Perform the procedures in the following sections to configure the Fibre Channel LUNs:

To discover and configure Fibre Channel LUNs as Core RDM disks on an ESX/ESXi system

1. Navigate to the ESX system Configuration tab, click Storage Adapters, select the FC HBA, and click Rescan All to discover the Fibre Channel LUNs.

Figure: FC Disk Discovery

2. Right-click the name of the Core-v and select Edit Settings.

The virtual machine properties dialog box opens.

3. Click Add and select Hard Disk for device type.

4. Click Next and select Raw Device Mappings for type of disk to use.

Figure: Select Raw Device Mappings

5. Select the LUNs to expose to the Core-v.

6. Select the datastore on which you want to store the LUN mapping.

7. Select Store with Virtual Machine.

Figure: Store mappings with VM

8. For compatibility mode, select Physical.

9. For advanced options, use the default virtual device node setting.

10. Review the final options and click Finish.

The Fibre Channel LUN is now set up as an RDM and ready to be used by the Core-v.

To discover and configure exposed Fibre Channel LUNs through an ESX/ESXi system on the Core-v

1. In the Core Management Console, choose Configure > Manage: LUNs and select Add a LUN.

2. Select Block Disk.

3. Rescan for new block disks.

Figure: Rescan for new block disks

4. Select the LUN Serial Number.

5. Select Add Block Disk LUN to add it to the Core-v. Map the LUN to the desired Edge and configure the access lists of the initiators.

Figure: Add new block disk

Configuring Fibre Channel LUNs in a Core-v HA scenario

This section describes how to deploy Core-vs in HA environments.

When you deploy Core-v appliances in an HA environment, install the two appliances on separate ESX servers so that there is no single point of failure. You can deploy the Core-v appliances differently depending on whether the ESX servers hosting the Core-v appliances are managed by a vCenter or not. The methods described in this section are only relevant when Core-v appliances manage FC LUNs (also called block disk LUNs).

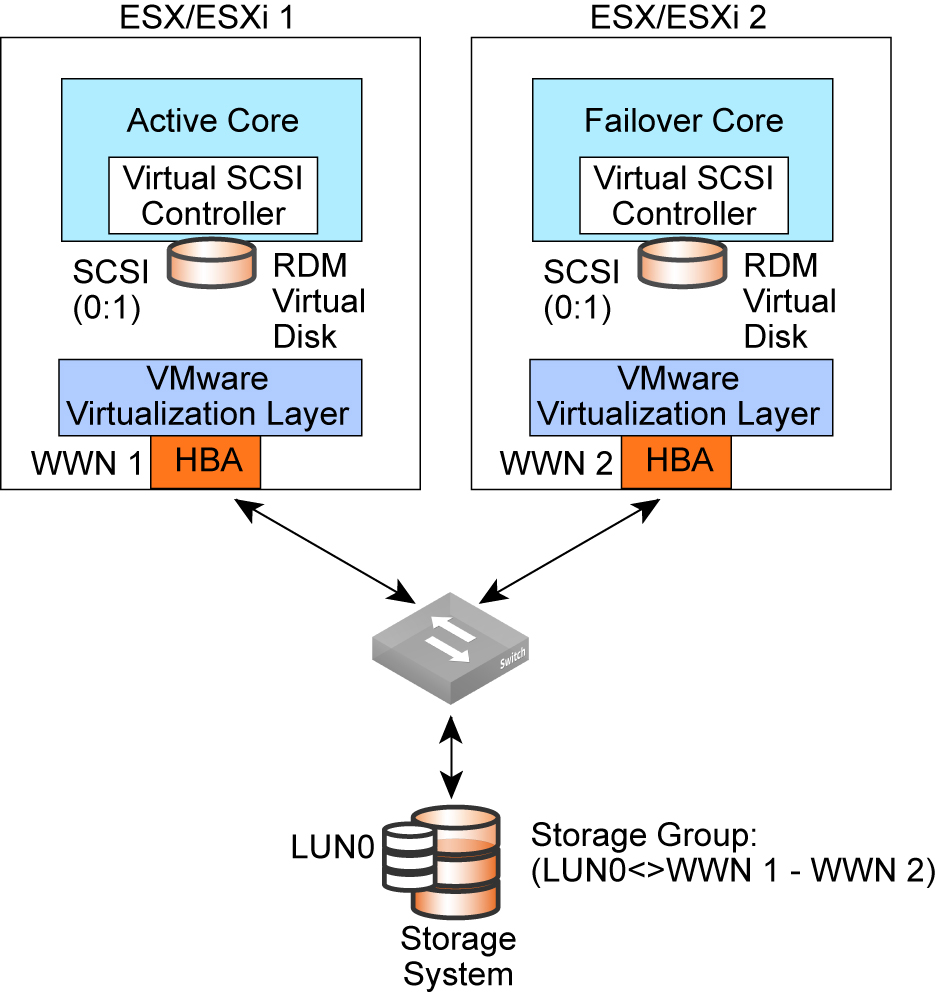

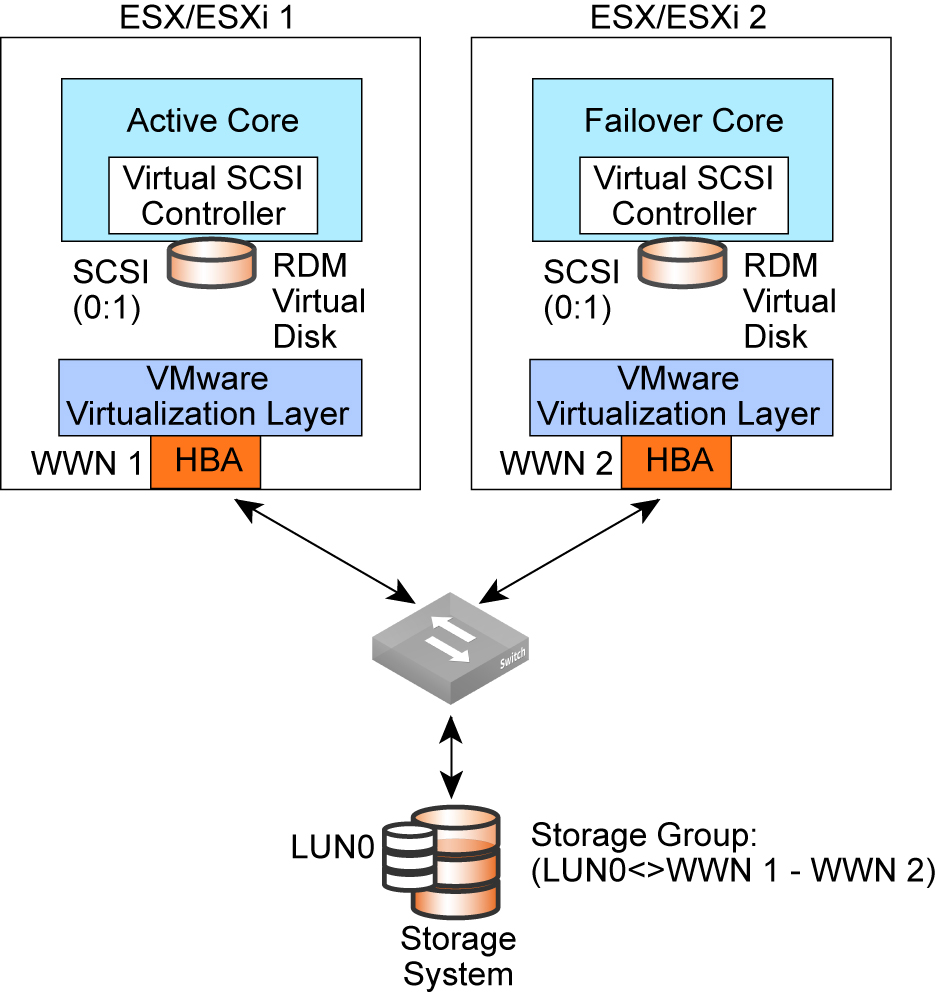

For both deployment methods, modify the storage system Storage Group to expose the LUN to both ESXi systems.

Figure: Core-v HA deployment shows that LUN 0 is assigned to the worldwide names (WWNs) of the HBAs on both ESXi systems.

Figure: Core-v HA deployment

When ESXi servers hosting the Core-v appliances are managed by vCenter

In this scenario, two Core-v appliances (Core-v1 and Core-v2) are deployed in HA on ESX servers managed by vCenter. After you add a LUN as an RDM to Core-v1, vCenter does not list that LUN among the LUNs available to add as an RDM to Core-v2. The LUN is hidden because vCenter's LUN filtering mechanism is turned on by default to help prevent LUN corruption.

One way to solve this problem without turning off LUN filtering is to add the LUNs to both Core-v appliances using the following procedures. This method also requires a shared datastore on a SAN, accessible to both ESXi hosts, to store the RDM files.

To add LUNs to the first Core-v

1. In the vSphere Client inventory, select the first Core-v and select Edit Settings.

The Virtual Machine Properties dialog box opens.

2. Click Add, select Hard Disk, and click Next.

3. Select Raw Device Mappings and click Next.

4. Select the LUN to be added and click Next.

5. Select a datastore and click Next.

This datastore must be on a SAN because you need a single shared RDM file for each shared LUN on the SAN.

6. Select Physical as the compatibility mode and click Next. A SCSI controller is created when the virtual hard disk is created.

7. Select a new virtual device node. For example, select SCSI (1:0), and click Next.

This node must be a new SCSI controller. You cannot use SCSI 0.

8. Click Finish to complete creating the disk.

9. In the Virtual Machine Properties dialog box, select the new SCSI controller, set SCSI Bus Sharing to Physical, and click OK.

To add LUNs to the second Core-v

1. In the vSphere Client inventory, select the second (failover) Core-v and select Edit Settings.

The Virtual Machine Properties dialog box appears.

2. Click Add, select Hard Disk, and click Next.

3. Select Use an existing virtual disk and click Next.

4. In Disk File Path, browse to the location of the disk specified for the first node. Select Physical as the compatibility mode and click Next.

A SCSI controller is created when the virtual hard disk is created.

5. Select the same virtual device node you chose for the first Core-v's LUN (for example, SCSI (1:0)), and click Next.

The location of the virtual device node for this LUN must match the corresponding virtual device node for the first Core-v.

6. Click Finish.

7. In the Virtual Machine Properties dialog box, select the new SCSI controller, set SCSI Bus Sharing to Physical, and click OK.

Keep in mind the following caveats:

• Because you cannot use SCSI controller 0, the number of RDM LUNs supported on a Core-v running on ESXi 5.x drops from 60 to 45 (three controllers with 15 devices each).

• You can change a SCSI controller's SCSI Bus Sharing setting only while the Core-v is powered down, so you must power down the Core-v each time you add a new controller. Each controller supports 15 disks.

• vMotion is not supported with Core-v.
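The two procedures above impose several invariants that are easy to get wrong by hand: each shared LUN uses the same RDM file on both Core-vs, at the same virtual device node, never on controller 0, in physical compatibility mode, with SCSI Bus Sharing set to Physical. The following minimal sketch checks those invariants against a hypothetical inventory record (`RdmDisk`); it is not a vSphere API, and you would need to fill the records from your own environment.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RdmDisk:
    """One RDM as configured on a Core-v (hypothetical inventory record)."""
    file_path: str      # RDM mapping file on the shared SAN datastore
    bus: int            # controller number, the "1" in SCSI (1:0)
    unit: int           # device number on that controller, the "0" in SCSI (1:0)
    compatibility: str  # "physical" or "virtual"
    bus_sharing: str    # controller SCSI Bus Sharing: "none", "virtual", or "physical"

def ha_rdm_problems(first: list[RdmDisk], second: list[RdmDisk]) -> list[str]:
    """List deviations from the two-Core-v RDM procedure; empty means consistent."""
    problems = []
    for vm, disks in (("first Core-v", first), ("second Core-v", second)):
        for d in disks:
            if d.bus == 0:
                problems.append(f"{vm}: {d.file_path} uses SCSI controller 0")
            if d.compatibility != "physical":
                problems.append(f"{vm}: {d.file_path} is not in physical compatibility mode")
            if d.bus_sharing != "physical":
                problems.append(f"{vm}: controller {d.bus} SCSI Bus Sharing is not Physical")
    first_by_file = {d.file_path: d for d in first}
    second_files = {d.file_path for d in second}
    for d in second:
        peer = first_by_file.get(d.file_path)
        if peer is None:
            problems.append(f"{d.file_path} is attached only to the second Core-v")
        elif (peer.bus, peer.unit) != (d.bus, d.unit):
            problems.append(f"{d.file_path}: SCSI ({peer.bus}:{peer.unit}) on first Core-v, "
                            f"SCSI ({d.bus}:{d.unit}) on second")
    for d in first:
        if d.file_path not in second_files:
            problems.append(f"{d.file_path} is attached only to the first Core-v")
    return problems

# Hypothetical shared RDM file, attached at SCSI (1:0) on both Core-vs.
shared = "[san_ds] core-v1/lun0_rdm.vmdk"
good = RdmDisk(shared, 1, 0, "physical", "physical")
print(ha_rdm_problems([good], [good]))  # []
moved = RdmDisk(shared, 1, 1, "physical", "physical")
print(ha_rdm_problems([good], [moved]))  # one node-mismatch problem
```

Returning a list of problems, rather than stopping at the first one, lets you fix every mismatch during a single power-down window.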

Another solution is to turn off LUN filtering (RDM filtering) on vCenter. Note that RDM filtering can only be turned off for the entire vCenter; you cannot disable it per data center or per LUN.

To turn off LUN filtering temporarily, complete the following steps:

1. Turn off RDM filtering on vCenter.

2. Add the LUNs as RDMs to both Core-vs.

3. Turn RDM filtering back on.

You must repeat these steps every time new LUNs are added to the Core-v appliances. However, VMware does not recommend turning LUN filtering off unless there are other methods to prevent LUN corruption. This method should be used with caution.
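If you script this temporary change, the key property is that filtering is always turned back on, even when adding a LUN fails partway through. A context manager with a `finally` clause expresses that directly. This is a minimal sketch: `set_rdm_filter` is a hypothetical callable that stands in for however you change the vCenter setting, and the example uses a stub rather than a real vCenter call.

```python
from contextlib import contextmanager
from typing import Callable, Iterator

@contextmanager
def rdm_filter_disabled(set_rdm_filter: Callable[[bool], None]) -> Iterator[None]:
    """Turn RDM filtering off for the body of the with-block, then back on.

    set_rdm_filter is a hypothetical callable that applies the vCenter setting
    (True = filtering on). The finally clause restores filtering even if adding
    a LUN raises, so the window without filtering stays as short as possible.
    """
    set_rdm_filter(False)
    try:
        yield
    finally:
        set_rdm_filter(True)

# Example with a stub in place of a real vCenter call.
state = {"rdm_filter": True}

def fake_set_rdm_filter(enabled: bool) -> None:
    state["rdm_filter"] = enabled

with rdm_filter_disabled(fake_set_rdm_filter):
    print(state["rdm_filter"])  # False while the LUNs are added to both Core-vs
print(state["rdm_filter"])      # True again afterwards
```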

When ESXi servers hosting the Core-v appliances are not managed by vCenter

When the ESX servers hosting the Core-v appliances in HA are not managed by the same vCenter, or are not managed by vCenter at all, you can add the LUNs as RDMs to both Core-vs without any special configuration.

Populating Fibre Channel LUNs

This section provides the basic steps you need to populate Fibre Channel LUNs prior to deploying them into the Core.

To populate a Fibre Channel LUN

1. Create a LUN (Volume) in the storage array and allow the ESXi host where the Core is installed to access it.

2. On the ESXi host, choose Configuration > Advanced Settings > RdmFilter and clear RdmFilter to disable it.

You must complete this step if you intend to deploy the Core in an HA configuration.

3. Navigate to the ESX system Configuration tab, click Storage Adapters, select the FC HBA, and click Rescan All… to discover the Fibre Channel LUNs (see Figure: FC Disk Discovery).

4. On the ESXi server, select Storage and click Add.

5. Select Disk/LUN for the storage type and click Next.

You might need to wait a few moments before the new Fibre Channel LUN appears in the list.

6. Select the Fibre Channel drive and click Next.

7. Select VMFS-5 for the file system version and click Next.

8. Click Next, enter a name for the datastore, and click Next.

9. For Capacity, use the default setting of Maximum available space and click Next.

10. Click Finish.

11. Copy files from an existing datastore to the datastore you just added.

12. Select the new datastore and unmount it.

You need to unmount and detach the device and rescan it, and then reattach it before you can proceed.

To unmount and detach a datastore

1. Right-click the device in the Devices list and choose Unmount.

2. Right-click the device in the Devices list and choose Detach.

3. Rescan the storage adapters twice.

4. Reattach the device by right-clicking the device in the Devices list and choosing Attach.

Do not rescan the device.
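The order of this procedure matters: unmount before detach, rescan twice while detached, reattach, and do not rescan afterwards. If you record the actions taken (for example, in a runbook log), a small check can confirm the order was followed. This is a minimal sketch; the action labels are hypothetical strings, not vSphere commands.

```python
# Hypothetical action labels for the documented order of operations.
REQUIRED = ["unmount", "detach", "rescan", "rescan", "attach"]

def check_detach_sequence(actions: list[str]) -> list[str]:
    """Compare a recorded list of actions with the documented order."""
    problems = []
    head, tail = actions[:len(REQUIRED)], actions[len(REQUIRED):]
    if head != REQUIRED:
        problems.append(f"expected {REQUIRED}, got {head}")
    if "rescan" in tail:
        problems.append("device was rescanned after it was reattached")
    return problems

print(check_detach_sequence(["unmount", "detach", "rescan", "rescan", "attach"]))  # []
print(check_detach_sequence(["unmount", "detach", "rescan", "attach", "rescan"]))
```

The second call reports one problem, because only one rescan happened before the device was reattached.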

To add the LUN to the Core-v

1. Right-click the Core-v and select Edit Settings.

2. Click Add and select Hard Disk.

3. Click Next, and when prompted to select a disk to use, select Raw Device Mappings.

4. Select the target LUN to use.

5. Select the datastore on which to store the LUN mapping and select Store with Virtual Machine.

6. Select Physical for compatibility mode.

7. For advanced options, use the default setting.

8. Review the final options and click Finish.

The Fibre Channel LUN is now set up as RDM and ready to be used by the Core-v.

When the LUN is projected to the branch site and attached to the branch ESXi server (VSP or another device), you are prompted to select VMFS mount options. Select Keep the existing signature.

Best practices for deploying Fibre Channel on Core-v

This section describes the best practices for deploying Fibre Channel on Core-v. Follow these suggestions because they lead to designs that are easier to configure and troubleshoot.


Best practices

The following table shows the Riverbed best practices for deploying Fibre Channel on Core-v.

Best Practice | Description |

Keep iSCSI and Fibre Channel LUNs on separate Cores | Do not mix iSCSI and Fibre Channel LUNs in the same Core-v. |

Use ESX/ESXi 4.1 or later | Make sure that the ESX/ESXi system is running version 4.1 or later. |

Use gigabit links | Make sure that you map the Core-v interfaces to gigabit links that are not shared with other traffic. |

Dedicate physical NICs | Use one-to-one mapping between physical and virtual NICs for the Core data interfaces. |

Reserve CPU(s) and RAM | Reserve CPU(s) and RAM for the Virtual Core appliance, following the guidelines listed in the following table. |

The following table shows the CPU and RAM guidelines for deployment.

Model | Memory reservation | Disk space | Recommended CPU reservation | Maximum data set size | Maximum number of branches |

VGC-1000-U | 2 GB | 25 GB | 2 @ 2.2 GHz | 2 TB | 5 |

VGC-1000-L | 4 GB | 25 GB | 4 @ 2.2 GHz | 5 TB | 10 |

VGC-1000-M | 8 GB | 25 GB | 8 @ 2.2 GHz | 10 TB | 20 |

VGC-1500-L | 32 GB | 350 GB | 8 @ 2.2 GHz | 20 TB | 30 |

VGC-1500-M | 48 GB | 350 GB | 12 @ 2.2 GHz | 35 TB | 30 |
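When sizing a deployment, the guideline table can be read as "pick the smallest model whose data set and branch limits both cover your needs." A minimal sketch with the table's values transcribed; it assumes the rows are ordered from smallest to largest, and `CoreModel` and `smallest_model` are hypothetical names.

```python
from typing import NamedTuple, Optional

class CoreModel(NamedTuple):
    name: str
    memory_gb: int      # memory reservation
    disk_gb: int        # disk space
    cpus: int           # recommended CPU reservation, each at 2.2 GHz
    max_data_tb: int    # maximum data set size
    max_branches: int   # maximum number of branches

# Values transcribed from the CPU and RAM guideline table, smallest model first.
MODELS = [
    CoreModel("VGC-1000-U", 2, 25, 2, 2, 5),
    CoreModel("VGC-1000-L", 4, 25, 4, 5, 10),
    CoreModel("VGC-1000-M", 8, 25, 8, 10, 20),
    CoreModel("VGC-1500-L", 32, 350, 8, 20, 30),
    CoreModel("VGC-1500-M", 48, 350, 12, 35, 30),
]

def smallest_model(data_tb: float, branches: int) -> Optional[CoreModel]:
    """Return the first model whose limits cover both requirements, or None."""
    for model in MODELS:
        if data_tb <= model.max_data_tb and branches <= model.max_branches:
            return model
    return None

choice = smallest_model(data_tb=8, branches=12)
print(choice.name, choice.memory_gb, choice.cpus)  # VGC-1000-M 8 8
```

A `None` result means the requirements exceed every model in the table.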

Recommendations

The following table shows the Riverbed recommendations for deploying Fibre Channel on the Core-v.

Recommendation | Description |

Deploy a dual-redundant FC HBA | The FC HBA connects the ESXi system to the SAN. Dual-redundant HBAs help keep an active path available at all times. ESXi multipathing software controls and monitors for HBA failure; if a path or HBA fails, the workload fails over to the working path. |

Use recommended practices for removing/deleting FC LUNs | Before deleting, offlining, or unmapping the LUNs from the storage system or removing the LUNs from the zoning configuration, remove the LUNs/block disks from the Core and unmount the LUNs from the ESXi system. ESXi might become unresponsive and sometimes might need to be rebooted if all paths to a LUN are lost. |

Do not use block disks on the physical Core | Fibre Channel LUNs (also known as block disks) are not supported on the physical Core. |

Troubleshooting

This section describes common deployment issues and solutions.

If the FC LUN is not detected on the ESXi system on which the Core-v is running, try performing these debugging steps:

1. Rescan the ESXi system storage adapters.

2. Make sure that you are looking at the right HBA on the ESXi system.

3. Make sure that the ESXi system has been allowed to access the FC LUN on the storage system, and check initiator and storage groups.

4. Make sure that the zoning configuration on the FC switch is correct.

5. Refer to VMware documentation and support for further assistance on troubleshooting FC connectivity issues.
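The checklist works from the ESXi host outward to the storage system and then the FC switch, so stopping at the first failing step points you at the layer to fix. A minimal sketch of that pattern; the check functions are hypothetical placeholders (in practice each is a manual inspection or a site-specific query), and the stub results are made up for illustration.

```python
from typing import Callable, Optional

Check = Callable[[], bool]  # returns True if the step's condition holds

def first_failed_step(checks: list[tuple[str, Check]]) -> Optional[str]:
    """Run the checks in list order and return the first failing step, or None."""
    for step, check in checks:
        if not check():
            return step
    return None

# Stub results for illustration; real checks are manual or site-specific.
checks = [
    ("Rescan the ESXi storage adapters", lambda: True),
    ("Confirm the right HBA is selected", lambda: True),
    ("Confirm initiator and storage groups on the storage system", lambda: False),
    ("Confirm zoning on the FC switch", lambda: True),
]
print(first_failed_step(checks))  # Confirm initiator and storage groups on the storage system
```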

If you deployed a VM on the LUN using the same ESXi host or ESXi cluster on which the Core-v is deployed, and the datastore is still mounted, the FC LUN might be detected on the ESXi system but not appear in the list of LUNs that can be presented as RDMs to the Core-v. In this case, perform the following procedure to unmount the datastore from the ESXi system.

To unmount the datastore from the ESXi system

1. To unmount the FC VMFS datastore, select the Configuration tab, select View: Datastores, right-click a datastore, and select Unmount.

Figure: Unmounting a datastore

2. To detach the corresponding device from ESXi, select View: Devices, right-click the device, and select Detach.

3. Rescan twice.

Figure: Rescanning a device

4. To reattach the device, select View: Devices, right-click the device, and select Attach.

5. Do not rescan. Instead, view Devices and verify that the datastore no longer appears in the datastore list.

6. Re-add the device as an RDM disk to the Core-v.

If the FC RDM LUN is not visible on the Core-v, try the following debugging actions:

• Run the Rescan for new LUNs process on the Core-v several times.

• Check the Core-v logs for failures.

Related information

• SteelFusion Core Management Console User’s Guide

• SteelFusion Edge Management Console User’s Guide

• SteelFusion Core Installation and Configuration Guide

• SteelFusion Command-Line Interface Reference Manual

• Fibre Channel on SteelFusion Core Virtual Edition Solution Guide