About policy settings for protocols

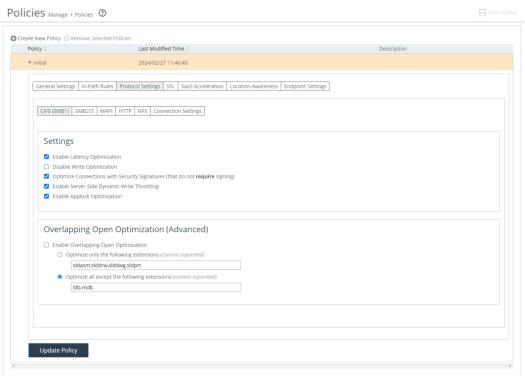

You configure these protocol settings in the Protocol Settings tab under Manage > Services: Policies.

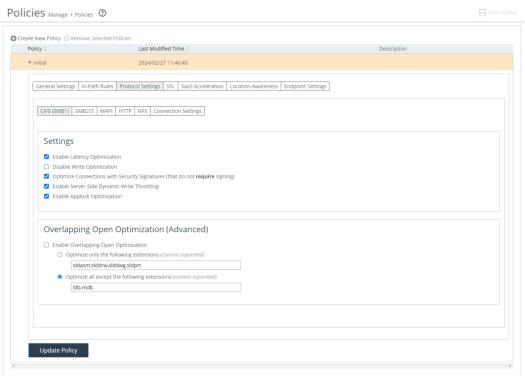

CIFS (SMB1) settings

About CIFS (SMB1) protocol settings

CIFS (SMB1) optimization performs latency and SDR optimizations on SMB1 traffic. Without this feature, the controller performs only SDR optimization without improving CIFS latency. Typically, you disable CIFS optimization only to troubleshoot the system.

The Enable Latency Optimization setting enables latency and SDR optimizations on SMB1 traffic. Latency optimization is enabled by default.

To disable CIFS optimization, you must also disable it on the server-side SteelHead.

The Disable Write Optimization setting disables write optimization. Disable write optimization only if you have applications that assume and require write-through in the network. If you disable write optimization, the controller still provides optimization for CIFS reads and for other protocols, but you might experience a slight decrease in overall optimization. Most applications operate safely with write optimization because CIFS allows you to explicitly specify write-through on each write operation. However, if you have an application that does not support explicit write-through operations, you must disable write optimization on the controller. If you don’t disable write optimization, the controller acknowledges writes before they are fully committed to disk to speed up write operations. The controller does not acknowledge the file close until the file is safely written.

The Optimize Connections with Security Signatures (that don’t require signing) setting prevents Windows SMB signing. This is the default setting. The Secure-CIFS feature enables you to automatically disable Windows SMB signing.

SMB signing prevents the appliance from applying full optimization on CIFS connections and significantly reduces the performance gain from a Client Accelerator deployment. Because many enterprises already take additional security precautions (such as firewalls and internal-only reachable servers), SMB signing adds minimal additional security at a significant performance cost (even without Riverbed optimization).

Before you enable Secure-CIFS, consider these factors:

• If the client machine has Required signing, enabling Secure-CIFS prevents the client from connecting to the server.

• If the server-side machine has Required signing, the client and server connect, but you can’t perform full latency optimization with the appliance. Domain controllers default to Required.

For details about SMB signing and the performance cost associated with it, see the SteelHead User Guide.

The Enable Server Side Dynamic Write Throttling setting enables the CIFS dynamic throttling mechanism, which replaces the current static buffer scheme. If you enable CIFS dynamic throttling, it is activated only when there are suboptimal conditions on the server side causing a backlog of write messages; it does not have a negative effect under normal network conditions.

The Enable Applock Optimization setting enables CIFS latency optimizations to improve read and write performance for Microsoft Word (.doc) and Excel (.xls) documents when multiple users have the file open. This setting is enabled by default in RiOS 6.0 and later. This feature enhances the Enable Overlapping Open Optimization feature by identifying and obtaining locks on read/write access at the application level. The overlapping open optimization feature handles locks at the file level.

Applock Optimization is a client-side setting only. To enable this feature on Client Accelerator endpoints, select Applock Optimization on the Client Accelerator policy assigned to the clients.

The Enable Overlapping Open Optimization setting is disabled by default. To prevent any compromise to data integrity, the appliance optimizes only data to which exclusive access is available (in other words, when locks are granted). When an oplock is not available, the controller does not perform application-level latency optimizations but still performs SDR and compression on the data, as well as TCP optimizations. Enabling this feature on applications that perform multiple opens of the same file to complete an operation results in a performance improvement (for example, CAD applications).

If a remote user opens a file that is optimized using the overlapping open feature and a second user opens the same file, the second user might receive an error if the file fails to go through an endpoint, or if it does not go through a SteelHead (for example, certain applications that are sent over the LAN). If this error occurs, disable overlapping opens for those applications.

The Optimize only these extensions setting specifies a list of extensions to optimize using overlapping opens.

The Optimize all except these extensions setting specifies a list of extensions not to optimize using overlapping opens.
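The two extension lists behave like an allow list and a deny list. The following Python sketch illustrates that decision only; the function name, the `mode` values, and the case-insensitive matching are assumptions made for illustration, not the product's implementation.

```python
from pathlib import PurePosixPath


def should_optimize(filename, mode, extensions):
    """Decide whether overlapping-open optimization applies to a file.

    mode is "only" (Optimize only these extensions) or "except"
    (Optimize all except these extensions). Both names are hypothetical.
    """
    # Normalize "plan.DWG" -> "dwg" and accept list entries with or without a dot.
    ext = PurePosixPath(filename).suffix.lower().lstrip(".")
    listed = ext in {e.lower().lstrip(".") for e in extensions}
    if mode == "only":
        return listed
    if mode == "except":
        return not listed
    raise ValueError(f"unknown mode: {mode!r}")
```

For example, `should_optimize("plan.DWG", "only", ["dwg"])` selects the file, while the same call with `"except"` skips it.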

About SMB2/3 protocol settings

These settings perform SMB2, SMB3, or SMB3.1.1 latency optimization in addition to the existing bandwidth optimization features. These optimizations include cross-connection caching, read-ahead, write-behind, and batch prediction among several other techniques to ensure low-latency transfers. The controller maintains the data integrity and the client always receives data directly from the servers.

Use the Down-negotiation setting so that connections that can be successfully down-negotiated will be optimized according to the settings in the CIFS (SMB1) section. If down-negotiation is enabled, select one of these options:

• None—Don’t down-negotiate connections. No connections can be down negotiated.

• SMB2 and SMB3 to SMB1—Down-negotiate SMB2, SMB3, or SMB3.1.1 connections to SMB1.

The Enable SMB2 Optimization setting enables SMB2 optimization in addition to the existing bandwidth optimization features. These optimizations include cross-connection caching, read-ahead, write-behind, and batch prediction among several other techniques to ensure low-latency transfers. The controller maintains the data integrity, and the client always receives data directly from the servers.

The Enable SMB3 Optimization setting enables SMB3 (or SMB3.1.1) optimization.

You must enable (or disable) SMB2, SMB3, or SMB3.1.1 (if applicable) optimization on both the controller and the server-side SteelHead. After enabling SMB2, SMB3, or SMB3.1.1 optimization, you must restart the optimization service.

MAPI protocol settings

MAPI does not require a separate license and is enabled by default. When encrypted MAPI support is enabled on the controller, it uses a secure inner channel to ensure that all MAPI traffic sent between Client Accelerator endpoints and the server-side SteelHeads is secure.

Disable MAPI optimization only if you’re experiencing issues with Outlook traffic.

• Exchange port—Specifies the MAPI Exchange port. The default value is 7830.

• Enable encrypted optimization.

• Enable Outlook Anywhere optimization. Outlook Anywhere is a feature of Microsoft Exchange Server 2003, 2007, and 2010 that allows Microsoft Office Outlook 2003, 2007, and 2010 clients to connect to their Exchange servers over the internet using the Microsoft RPC tunneling protocol. Outlook Anywhere allows for a VPN-less connection because the MAPI RPC protocol is tunneled over HTTP or HTTPS. RPC over HTTP can transport regular or encrypted MAPI. If you use encrypted MAPI, the server-side SteelHead must be a member of the Windows domain. By default, this feature is disabled. To use this feature, you must also enable HTTP Optimization on the controller and on the server-side SteelHeads (HTTP optimization is enabled by default). If you’re using Outlook Anywhere over HTTPS, you must enable TLS and install the IIS certificate on the server-side SteelHead:

– When using HTTP, Outlook can only use NTLM proxy authentication.

– When using HTTPS, Outlook can use NTLM or Basic proxy authentication.

– When using encrypted MAPI with HTTP or HTTPS, you must enable and configure encrypted MAPI in addition to this feature.

• Auto-detect Outlook Anywhere connections—Automatically detects the RPC over HTTPS protocol used by Outlook Anywhere. This feature is dimmed and unavailable until you enable Outlook Anywhere optimization. By default, this option is enabled. You can enable automatic detection of RPC over HTTPS using this option, or you can set in-path rules. Autodetect is best for simple Client Accelerator configurations and when the IIS server is also handling websites. If the IIS server is used only as an RPC Proxy, and for configurations with asymmetric routing, connection forwarding, or Interceptor installations, add in-path rules that identify the RPC Proxy server IP addresses and select the Outlook Anywhere latency optimization policy. After adding the in-path rule, disable the auto-detect option.

• Enable MAPI over HTTP optimization—optimizes MAPI-over-HTTP traffic. MAPI over HTTP is a transport mechanism that provides faster connections between Outlook and Exchange.

After you apply your settings, you can verify that the connections appear in the Endpoint report as a MAPI-OA or an eMAPI-OA (encrypted MAPI) application. The Outlook Anywhere connection entries appear in the system log with an RPCH prefix.

Outlook Anywhere can create twice as many connections on the SteelHead as regular MAPI (depending on the versions of the Outlook client and Exchange server). This effect results in the SteelHead entering admission control twice as fast with Outlook Anywhere as with regular MAPI. For details and troubleshooting information, see the SteelHead Deployment Guide.

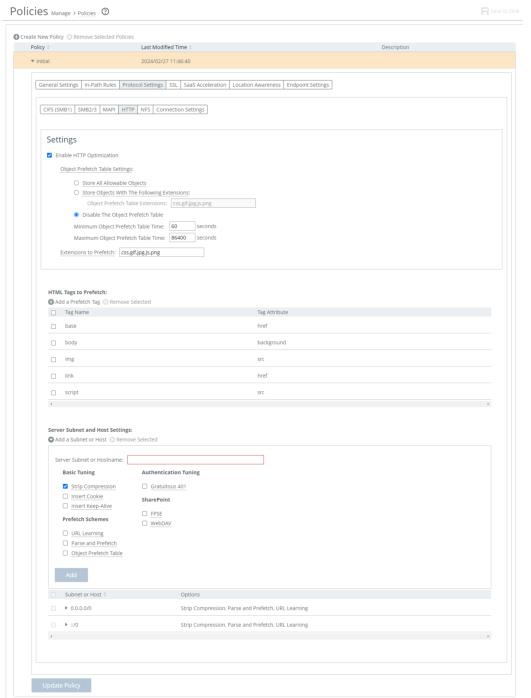

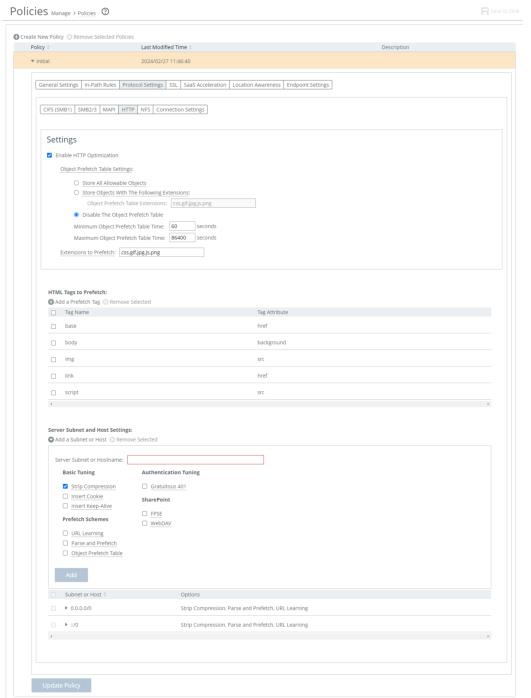

HTTP protocol settings

Select Enable HTTP Optimization to prefetch and store objects embedded in web pages to improve HTTP traffic performance. By default, HTTP Optimization is enabled. You can choose the extensions to store, such as .css, .gif, .jpg, .js, and .png, or configure the Client Accelerator endpoint to store all allowable objects.

HTTP settings (with HTML Tags to Prefetch and “Server Subnet and Host Settings” sections expanded)

You can configure these HTTP settings:

• Enable HTTP Optimization—Enables prefetching and storing objects embedded in web pages to improve HTTP traffic performance. By default, HTTP optimization is enabled.

• Store All Allowable Objects—Examines the control header to determine which objects to store. When enabled, the controller does not limit the objects to those listed in Extensions to prefetch but rather prefetches all objects that the control header indicates are storable. This option is useful to store web objects encoded into names without an object extension: for example, SharePoint objects. By default, Store All Allowable Objects is enabled.

• Disable the Object Prefetch Table—Stores nothing.

• Minimum Object Prefetch Table Time—Sets the minimum number of seconds the objects are stored in the local object prefetch table. The default is 60 seconds. This setting specifies the minimum lifetime of the stored object. During this lifetime, any qualified If-Modified-Since (IMS) request from the client receives an HTTP 304 response, indicating that the resource for the requested object has not changed since stored.

• Maximum Object Prefetch Table Time—Sets the maximum number of seconds the objects are stored in the local object prefetch table. The default is 86,400 seconds (24 hours). This setting specifies the maximum lifetime of the stored object. During this lifetime, any qualified If-Modified-Since (IMS) request from the client receives an HTTP 304 response, indicating that the resource for the requested object has not changed since stored.

• Extensions to Prefetch—Specifies object extensions to prefetch, separated by commas. By default, the SteelHead prefetches .jpg, .gif, .js, .png, and .css object extensions.
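The interaction between the minimum and maximum table times and IMS requests can be sketched in Python. This is an illustration under stated assumptions: the text does not say how the lifetime of an individual object is chosen, so the sketch assumes a suggested lifetime (for example, derived from response headers) that is clamped to the configured bounds. The function names are hypothetical.

```python
MIN_TABLE_TIME = 60        # seconds, default from the text
MAX_TABLE_TIME = 86_400    # seconds (24 hours), default from the text


def clamp_lifetime(suggested_seconds,
                   minimum=MIN_TABLE_TIME, maximum=MAX_TABLE_TIME):
    """Assumption: a suggested lifetime is clamped to [minimum, maximum]."""
    return max(minimum, min(suggested_seconds, maximum))


def respond_to_ims(stored_at, lifetime, now):
    """Return 304 while the stored entry is alive, else None.

    None means the request is not answered locally and goes to the server.
    All arguments are in seconds on the same clock.
    """
    if now - stored_at < lifetime:
        return 304
    return None
```

With the defaults, an object whose suggested lifetime is 10 seconds is kept for 60 seconds, and one suggested for a week is kept for 24 hours.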

HTML Tags to Prefetch

By default, these tags are prefetched: base/href, body/background, img/src, link/href, and script/src. The Add a prefetch tag option configures a new prefetch tag, for which you enter the tag name and attribute. These tags are for the Parse and Prefetch feature only and don’t affect other prefetch types, such as object extensions.
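To make the tag/attribute pairs concrete, the following Python sketch scans a base page for the default pairs and collects the URLs they reference. It uses only the standard library; the class and function names are hypothetical, and the sketch makes no claim about how the controller parses pages.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Default tag/attribute pairs listed in the text.
DEFAULT_PREFETCH_TAGS = frozenset({
    ("base", "href"), ("body", "background"), ("img", "src"),
    ("link", "href"), ("script", "src"),
})


class PrefetchTagParser(HTMLParser):
    """Collects URLs found in the configured tag/attribute pairs."""

    def __init__(self, base_url, tags=DEFAULT_PREFETCH_TAGS):
        super().__init__()
        self.base_url = base_url
        self.tags = set(tags)
        self.urls = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names before this call.
        for name, value in attrs:
            if value and (tag, name) in self.tags:
                self.urls.append(urljoin(self.base_url, value))


def prefetch_candidates(html, base_url, tags=DEFAULT_PREFETCH_TAGS):
    parser = PrefetchTagParser(base_url, tags)
    parser.feed(html)
    parser.close()
    return parser.urls
```

Adding a pair such as `("embed", "src")` to the set corresponds to adding a prefetch tag with a tag name and an attribute.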

Server Subnet and Host Settings

These settings enable URL Learning, Parse and Prefetch, and Object Prefetch Table in any combination for any server subnet. You can also enable authorization optimization to tune a particular subnet dynamically with no service restart required.

The default setting is URL Learning for all traffic with automatic configuration disabled. The default setting applies when HTTP optimization is enabled, regardless of whether there is an entry in the Subnet list. In the case of overlapping subnets, specific list entries override any default settings.

Suppose the majority of your web servers have dynamic content applications, but you also have several static content application servers. You could configure your entire server subnet to disable URL Learning and enable Parse and Prefetch and Object Prefetch Table, optimizing HTTP for the majority of your web servers. Next, you could configure your static content servers to use URL Learning only, disabling Parse and Prefetch and Object Prefetch Table.

Server subnet or hostname specifies an IP address and mask pattern for the server subnet on which to set up the HTTP optimization scheme.
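The override behavior for overlapping subnets can be illustrated with a short Python sketch. It assumes that "specific list entries override" means the most specific (longest-prefix) matching entry wins; that rule, the dictionary layout, and the names are assumptions for illustration.

```python
import ipaddress

# Default from the text: URL Learning only, for all traffic.
DEFAULT_SCHEME = {"url_learning": True,
                  "parse_and_prefetch": False,
                  "object_prefetch_table": False}


def scheme_for(server_ip, subnet_entries, default=DEFAULT_SCHEME):
    """Pick the scheme of the most specific subnet containing server_ip.

    subnet_entries maps CIDR strings to scheme dictionaries (hypothetical
    layout). Falls back to the default when no entry matches.
    """
    addr = ipaddress.ip_address(server_ip)
    best = None
    for cidr, scheme in subnet_entries.items():
        net = ipaddress.ip_network(cidr)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, scheme)
    return best[1] if best else default
```

In the scenario above, the whole server subnet would carry the dynamic-content scheme and a narrower entry for the static content servers would carry URL Learning only.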

Basic Tuning

• Strip Compression—Removes the Accept-Encoding lines from the HTTP request header. An Accept-Encoding directive asks the server to send compressed content rather than raw HTML. Enabling this option improves the performance of the Client Accelerator data reduction algorithms. By default, strip compression is enabled.

• Insert Cookie—Adds a cookie to HTTP applications that don’t already have one. HTTP applications frequently use cookies to keep track of sessions. The controller uses cookies to distinguish one user session from another. If an HTTP application does not use cookies, the controller inserts one so that it can track requests from the same client. By default, this setting is not selected.

• Insert Keep Alive—Uses the same TCP connection to send and receive multiple HTTP requests and responses, as opposed to opening a new one for every single request and response. Select this option when using the URL Learning or Parse and Prefetch features with HTTP 1.0 or HTTP 1.1 applications using the Connection Close method. By default, this setting is not selected.
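As a rough illustration of two of these options, the Python sketch below rewrites a list of request headers: it drops Accept-Encoding (Strip Compression) and, optionally, replaces any Connection header with keep-alive (Insert Keep Alive). The function name and the header-list representation are assumptions, and the real feature may act at a different point in the connection.

```python
def tune_request_headers(headers, strip_compression=True,
                         insert_keep_alive=False):
    """Return a new list of (name, value) headers with tuning applied."""
    tuned = []
    for name, value in headers:
        lname = name.lower()
        if strip_compression and lname == "accept-encoding":
            continue  # server then replies with uncompressed content
        if insert_keep_alive and lname == "connection":
            continue  # replaced by the keep-alive header appended below
        tuned.append((name, value))
    if insert_keep_alive:
        tuned.append(("Connection", "keep-alive"))
    return tuned
```

The defaults mirror the text: strip compression is on, and keep-alive insertion is off.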

Prefetch Schemes

• URL Learning—Enables URL Learning, which learns associations between a base URL request and a follow-on request. It stores information about which URLs have been requested and which URLs have generated a 200 OK response from the server. This option fetches the URLs embedded in style sheets or any JavaScript associated with the base page and located on the same host as the base URL. URL Learning works best with nondynamic content that does not contain session-specific information. URL Learning is enabled by default.

Your system must support cookies and persistent connections to benefit from URL Learning. If your system has cookies turned off and depends on URL rewriting for HTTP state management, or is using HTTP 1.0 (with no keepalives), you can force the use of cookies using the Insert Cookie option and force the use of persistent connections using the Insert Keep Alive option.

• Parse and Prefetch—Enables Parse and Prefetch, which parses the base HTML page received from the server and prefetches any embedded objects to the controller. This option complements URL Learning by handling dynamically generated pages and URLs that include state information. When the browser requests an embedded object, the controller serves the request from the prefetched results, eliminating the round-trip delay to the server.

The prefetched objects contained in the base HTML page can be images, style sheets, or any JavaScript associated with the base page and located on the same host as the base URL.

Parse and Prefetch requires cookies. If the application does not use cookies, you can insert one using the Insert Cookie option.

• Object Prefetch Table—Enables the Object Prefetch Table, which stores objects prefetched from HTTP GET requests for cascading style sheets, static images, and JavaScript files. When the browser performs If-Modified-Since (IMS) checks for stored content or sends regular HTTP requests, the controller responds to these IMS checks and HTTP requests, cutting back on round trips across the WAN.
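The idea behind URL Learning, associating a base URL with the follow-on requests that returned 200 OK from the same host, can be sketched in a few lines of Python. The class and method names are hypothetical, and the sketch ignores cookies, expiry, and persistence.

```python
from collections import defaultdict
from urllib.parse import urlsplit


class UrlLearner:
    """Remembers which follow-on URLs were fetched after a base URL."""

    def __init__(self):
        self._follow_ons = defaultdict(set)

    def record(self, base_url, follow_on_url, status):
        # Learn only successful responses from the same host as the base URL.
        same_host = urlsplit(base_url).netloc == urlsplit(follow_on_url).netloc
        if status == 200 and same_host:
            self._follow_ons[base_url].add(follow_on_url)

    def predict(self, base_url):
        """Return the learned follow-on URLs to prefetch, sorted."""
        return sorted(self._follow_ons.get(base_url, ()))
```

When the same base URL is requested again, `predict` returns the candidates to prefetch before the browser asks for them.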

Authentication Tuning

• Gratuitous 401—Prevents a WAN round trip by issuing the first 401 containing the realm choices from the controller. We recommend enabling Strip Auth Header along with this option. This option is most effective when the web server is configured to use per-connection NTLM authentication or per-request Kerberos authentication. If the web server is configured to use per-connection Kerberos authentication, enabling this option might cause additional delay.

SharePoint

• FPSE (FrontPage Server Extensions)—FPSE is an application-level protocol used by SharePoint. FPSE allows a website to be presented as a file share. FPSE initiates its communication with the server by requesting well-defined URLs for further communication and determining the version of the server.

• WebDAV (Web-based Distributed Authoring and Versioning)—WebDAV is a set of extensions to the HTTP/1.1 protocol that allows users to collaboratively edit and manage files on remote web servers. WebDAV is an IETF Proposed Standard (RFC 4918) that provides the ability to access the document management system as a network file system.

NFS settings

NFS settings provide latency optimization improvements for NFS operations by prefetching data, storing it on the client for a short amount of time, and using it to respond to client requests.

Connection settings

Connection pooling enhances network performance by reusing active connections instead of creating a new connection for every request. Connection pooling is useful for protocols that create a large number of short-lived TCP connections, such as HTTP.

To optimize such protocols, a connection pool manager maintains a pool of idle TCP connections, up to the maximum pool size. When a client requests a new connection to a previously visited server, the pool manager checks the pool for unused connections and returns one if available. Therefore, the controller and the SteelHead don’t have to wait for a three-way TCP handshake to finish across the WAN. If all connections currently in the pool are busy and the maximum pool size has not been reached, the new connection is created and added to the pool. When the pool reaches its maximum size, all new connection requests are queued until a connection in the pool becomes available or the connection attempt times out.

The default maximum pool size is 5. A value of 0 specifies no connection pool.
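The pool manager behavior described above (reuse an idle connection, create one while below the maximum, otherwise wait until one is released or the attempt times out) can be sketched in Python. This is a simplified illustration: `connect` is a hypothetical factory that opens a connection, and the class is not the product's implementation.

```python
import threading


class ConnectionPool:
    def __init__(self, connect, max_size=5):
        self._connect = connect      # hypothetical connection factory
        self._max_size = max_size    # 0 means no connection pool
        self._idle = []
        self._total = 0
        self._cond = threading.Condition()

    def acquire(self, timeout=None):
        if self._max_size == 0:
            return self._connect()   # pooling disabled: always connect
        with self._cond:
            # Wait until a connection is idle or the pool may still grow.
            ready = self._cond.wait_for(
                lambda: bool(self._idle) or self._total < self._max_size,
                timeout)
            if not ready:
                raise TimeoutError("no pooled connection became available")
            if self._idle:
                return self._idle.pop()
            self._total += 1
        return self._connect()

    def release(self, conn):
        if self._max_size == 0:
            return
        with self._cond:
            self._idle.append(conn)
            self._cond.notify()      # wake one queued request
```

A caller pairs each `acquire` with a `release`; a request made while all connections are busy and the pool is full blocks until a release or until `timeout` seconds elapse.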