Overview of the SteelHead Interceptor

This chapter introduces the SteelHead Interceptor. It includes the following sections:

Overview of the SteelHead Interceptor

The SteelHead Interceptor enables you to scale your deployments of SteelHeads at large central sites. Deployed in-path, SteelHead Interceptors provide virtual in-path clustering and load balancing for SteelHeads that are physically deployed out-of-path.

The SteelHead Interceptor is typically deployed in a data center or hub network where any in-path devices need to be high performing and highly available.

The SteelHead Interceptor directs incoming traffic to its clustered SteelHeads according to in-path, service, and load-balancing rules.

The SteelHead Interceptor is an in-path clustering solution you can use to distribute optimized traffic to a local group of SteelHeads. The SteelHead Interceptor does not perform optimization itself. Therefore, you can use it in demanding network environments with extremely high throughput requirements. The SteelHead Interceptor works in conjunction with the SteelHead and offers several benefits over other clustering techniques, such as Web Cache Communication Protocol (WCCP) or Layer-4 switching, including native support for asymmetrically routed traffic flows.

Note: For details on in-path, service, and load-balancing rules, see the SteelHead Interceptor User’s Guide.
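The processing order that this chapter describes for in-path rules can be sketched as follows. In-path rules run first, forwarded traffic moves on to load-balancing rules, and pass-through traffic moves on to service rules when Path Selection is enabled. This is an illustrative model only: the `Action` enum and the `next_stage` function are hypothetical names, not part of the SteelHead Interceptor software or CLI.

```python
from enum import Enum


class Action(Enum):
    """In-path rule outcomes named in this chapter (hypothetical model)."""
    FORWARD = "forward"            # redirect to a cluster SteelHead for optimization
    PASS_THROUGH = "pass-through"
    DISCARD = "discard"
    DENY = "deny"


def next_stage(action: Action, path_selection_enabled: bool) -> str:
    """Return the rule stage that follows the in-path rule decision."""
    if action is Action.FORWARD:
        # Only forwarded traffic reaches load-balancing rules.
        return "load-balancing rules"
    if action is Action.PASS_THROUGH and path_selection_enabled:
        # Pass-through traffic reaches service rules only with Path Selection.
        return "service rules"
    return "no further rule processing"


for action in Action:
    print(action.value, "->", next_stage(action, path_selection_enabled=True))
```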

The SteelHead Interceptor includes the following features:

Feature | Description |

Connection Tracing | Connection traces enable you to identify the SteelHeads to which the SteelHead Interceptor has forwarded specific connections. Connection traces also enable users to debug failing or unoptimized connections or failing pass-through path-selection connections (if the Path Selection feature is enabled). Note: For details on setting connection tracing, see the SteelHead Interceptor User’s Guide. |

EtherChannel Deployment | The SteelHead Interceptor can operate within an EtherChannel deployment. In an EtherChannel deployment, all the links in the channel must pass through the same SteelHead Interceptor. These two configurations are supported: up to four channels with two links per channel group, or up to two channels with four links per channel group. |

Failover | You can configure a pair of SteelHead Interceptors for failover. In the event that one SteelHead Interceptor goes down or requires maintenance, the second SteelHead Interceptor configured for failover ensures uninterrupted service. Note: For details on setting failover, see the SteelHead Interceptor User’s Guide. You can configure a SteelHead Interceptor 9350 as the failover appliance for a SteelHead Interceptor 9600 if you enable the 9350-mode on the SteelHead Interceptor 9600. The 9350-mode is enabled by using the appliance operating-mode 9350 command. Note: For more information about the appliance operating-mode 9350 command, see the Riverbed Command-Line Interface Reference Manual. |

In-Path Rules | When the SteelHead Interceptor intercepts a SYN request to a server, the in-path rules you configure determine the subnets and ports for traffic to be optimized. You can specify in-path rules to pass through, discard, or deny traffic, or to forward and optimize it. In the case of a data center, the SteelHead Interceptor intercepts SYN requests when a data center server establishes a connection with a client that resides outside of the data center. In the connection-processing decision tree, in-path rules are processed before load-balancing rules. Only traffic selected for forwarding proceeds to load-balancing rules processing. Traffic selected for pass-through proceeds to service rule processing if Path Selection is enabled. Note: For details on setting in-path rules and Path Selection, see the SteelHead Interceptor User’s Guide. |

Intelligent Forwarding | Intelligent Forwarding prevents the SteelHead Interceptor from forwarding the same packet to the SteelHead more than once. This feature prevents loops in case the packet that was already processed is routed through the SteelHead Interceptor again. |

Interceptor Monitoring | Cluster SteelHead Interceptors include both failover pairs deployed in a serial configuration and SteelHead Interceptors deployed in a parallel configuration to handle asymmetric routes. Asymmetric routing can cause the response from the server to be routed along a different physical network path from the original request, and a different SteelHead Interceptor can be on each of these paths. When you deploy SteelHead Interceptors in parallel, the first SteelHead Interceptor that receives a packet forwards it to the appropriate SteelHead. |

Link State Detection and Link State Propagation | The SteelHead Interceptor monitors the link state of devices in its path, including routers, switches, interfaces, and in-path interfaces. When the link state changes for a device that is part of a bridge, the link state change is propagated to the other devices on the bridge as well. |

Load-Balancing Rules | For connections selected by an in-path redirect rule, the SteelHead Interceptor distributes the connection to the most appropriate SteelHead based on rules you configure, intelligence from monitoring cluster SteelHeads, and the RiOS connection distribution algorithm. The SteelHead Interceptor combines two approaches to load balancing: • Peer affinity - The SteelHead Interceptor sends the connection to the local SteelHead with the most affinity (that is, the local SteelHead that has received the greatest number of connections from the remote SteelHead). • Round-robin distribution - Instead of checking the SteelHeads in order of most to least peer affinity, the SteelHeads are checked for availability in round-robin order starting with the one after the SteelHead that received the last connection from that rule. Note: Round-robin distribution can be enabled using the CLI only. It cannot be configured using the GUI. Note: For details on setting load-balancing rules (including information on Fair Peering V2 as it relates to load balancing), see the SteelHead Interceptor User’s Guide. |

Peer Affinity | Peer affinity refers to an established connection relationship between remote and cluster SteelHeads. Affinity-based load balancing matches traffic from a remote SteelHead to a specific cluster SteelHead based on a previous connection between them. Note: If no local SteelHead is currently handling the remote SteelHead, then the SteelHead Interceptor directs the traffic to the SteelHead with the lowest connection count. If the SteelHead with the best peer affinity is unavailable, the SteelHead Interceptor attempts to use the SteelHead with the next best affinity. |

Port Labels | Port labels enable you to apply rules to a range of ports. The SteelHead Interceptor provides port labels for secure and interactive ports, and for Riverbed Technology (RBT) ports. You can create additional labels as needed. |

Path Selection | RiOS 9.1.0 and later extend path selection to operate in SteelHead Interceptor cluster deployments, providing high-scale and high-availability deployment options. A SteelHead Interceptor cluster is one or more SteelHead Interceptors and one or more SteelHead appliances that work together as a single solution to select paths dynamically in complex architectures. You can configure service rules to specify whether UDP traffic flows should be subject to path selection (that is, redirected to a SteelHead appliance) or relayed without being path selected. Note: For details on enabling path selection and configuring service rules, see the SteelHead Interceptor User’s Guide. |

Reporting | The SteelHead Interceptor reporting function allows you to generate interface counter reports, diagnostic reports (to check CPU utilization, memory paging, and system logs), and report export data. |

SteelHead Monitoring | Cluster SteelHeads are the pool of SteelHeads for which the SteelHead Interceptor monitors capacity and balances load. To assist in deployment tuning and troubleshooting, the SteelHead Interceptor can monitor SteelHeads for connectivity, health, and load balancing (if Pressure Monitoring is enabled). |

System Status | The Dashboard displays system status information such as the system uptime, service uptime, the available SteelHead Interceptors, and a list of SteelHead connections. |

VLAN Tagging | The SteelHead Interceptor supports VLAN-tagged connections in VLAN-trunked links, using IEEE 802.1Q tagging. Note: The SteelHead Interceptor does not support the Cisco Inter-Switch Link (ISL) protocol. |
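The two load-balancing approaches in the feature table, peer affinity and round-robin distribution, can be illustrated with a minimal sketch. It assumes a simple in-memory model: the `SteelHead` class, its fields, and both function names are hypothetical. It ignores configured load-balancing rules, capacity, and Pressure Monitoring, and it is not the RiOS connection distribution algorithm itself.

```python
from dataclasses import dataclass, field


@dataclass
class SteelHead:
    """Hypothetical model of a cluster SteelHead."""
    name: str
    available: bool = True
    connection_count: int = 0
    # Connections received so far from each remote SteelHead (assumed bookkeeping).
    affinity: dict = field(default_factory=dict)


def pick_by_affinity(cluster, remote):
    """Prefer the available SteelHead with the most affinity for `remote`."""
    candidates = [sh for sh in cluster if sh.available]
    if not candidates:
        return None
    with_affinity = [sh for sh in candidates if sh.affinity.get(remote, 0) > 0]
    if with_affinity:
        # Unavailable appliances are already filtered out, so this yields
        # the best affinity first, then the next best, and so on.
        return max(with_affinity, key=lambda sh: sh.affinity[remote])
    # No local SteelHead is handling this remote peer: lowest connection count.
    return min(candidates, key=lambda sh: sh.connection_count)


def pick_round_robin(cluster, last_index):
    """Return the index of the next available SteelHead after `last_index`."""
    n = len(cluster)
    for offset in range(1, n + 1):
        index = (last_index + offset) % n
        if cluster[index].available:
            return index
    return None
```

In this model, affinity keeps traffic from one remote SteelHead on the same cluster SteelHead, while round-robin spreads new connections evenly regardless of history.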

New Features in Version 5.5

The following features are available in SteelHead Interceptor 5.5:

• Network services throughput performance improvement - Network services, such as path selection, Quality of Service (QoS) marking, and SteelFlow export, can now operate at higher throughputs in SteelHead and SteelHead Interceptor clusters.

The SteelHeads in the appliance cluster provide NetFlow or SteelFlow export services. The network services on the SteelHead Interceptor redirect the pass-through traffic to the SteelHead for QoS and flow export services. In SteelHead Interceptor 5.5, the network services were enhanced to handle higher throughputs by using a feature called Receive Packet Steering (RPS).

To achieve the higher throughputs in SteelHead Interceptor clusters, complete these tasks:

– First, enable path selection on all of the SteelHeads and SteelHead Interceptors in the cluster.

– Second, enable RPS by entering the rps enable command on each SteelHead and each SteelHead Interceptor in the cluster. Enabling RPS on each SteelHead and each SteelHead Interceptor uses the multiple CPU cores more effectively, resulting in higher throughputs.

For more information about enabling path selection, see the SteelHead Management Console User’s Guide and the SteelCentral Controller for SteelHead User’s Guide.

For more information about the rps enable command, see the Riverbed Command-Line Interface Reference Manual.

• Path Selection with Interceptor Cluster (PSIC) autochannel configuration - To operate efficiently, PSIC requires that cluster channels be set up between the SteelHead and SteelHead Interceptors. Cluster channels are traditionally configured using the GUI on the appliance. However, when the cluster configuration is pushed using the SteelCentral Controller for SteelHead (SCC), the PSIC cluster channels are autogenerated by the SCC and pushed to the appliances. No additional configuration tasks are required.

For more information, see the SteelHead Management Console User’s Guide and the SteelCentral Controller for SteelHead User’s Guide.

• Octal SteelHead Interceptor deployments - The SteelHead Interceptors can be configured in octal cluster deployments. For more information about SteelHead Interceptor deployments, see the SteelHead Interceptor Deployment Guide.
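The two throughput prerequisites listed above (path selection enabled and RPS enabled on every SteelHead and SteelHead Interceptor in the cluster) lend themselves to a simple checklist. The sketch below assumes you record each appliance's state by hand in a dictionary. The appliance names, the `path_selection` and `rps` keys, and the `missing_prerequisites` function are all hypothetical, and nothing here queries a real appliance.

```python
def missing_prerequisites(appliances):
    """Return {appliance name: [missing settings]} for appliances that are not ready."""
    required = ("path_selection", "rps")
    gaps = {}
    for name, settings in appliances.items():
        missing = [key for key in required if not settings.get(key, False)]
        if missing:
            gaps[name] = missing
    return gaps


# Hypothetical inventory, filled in manually after checking each appliance.
cluster = {
    "interceptor-1": {"path_selection": True, "rps": True},
    "steelhead-1": {"path_selection": True, "rps": False},
}
print(missing_prerequisites(cluster))
```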

Note: SteelHead Interceptor 5.5 supports automatic licensing. Automatic licensing allows the SteelHead Interceptor appliance, once connected to the network, to automatically contact the Riverbed Licensing Portal to retrieve and install license keys onto the appliance. For more information about automatic licensing, see the SteelHead Interceptor User’s Guide.

Note: SteelHead Interceptor 5.5 supports the following SteelHead features: QoS marking, SteelFlow, and NetFlow. These features are enabled and performed on the SteelHead appliance. For more information about enabling these features on the SteelHead (for use in cluster deployments), see the SteelHead Management Console User’s Guide.

Upgrading to SteelHead Interceptor 5.5

This section describes how to upgrade to SteelHead Interceptor 5.5.

To upgrade SteelHead Interceptor software

1. Download the software image from Riverbed Support to a location such as your desktop.

2. Log in to the SteelHead Interceptor appliance using the Administrator account (admin).

3. Choose Administration > Maintenance: Software Upgrade to display the Software Upgrade page.

Figure: Software Upgrade Page

4. Under Install Upgrade, choose one of the following options:

– From URL - Type the URL that points to the software image that you want to upgrade to.

– From Riverbed Support Site - Select this option to upgrade from the support site. Before you select this option, check the Support page to ensure that your Riverbed Support credentials have been set.

Note: Installing directly from the Riverbed Support site is not supported.

– From Local File - Browse to your file system and select the software image.

– Schedule Upgrade for Later - Type the date and time using the following format:

yyyy/mm/dd hh:mm:ss

– Click Install to upgrade your SteelHead Interceptor software.

5. Choose Administration > Maintenance: Reboot/Shutdown to display the Reboot/Shutdown page.

Figure: Reboot/Shutdown Page

6. Click Reboot to restart the SteelHead Interceptor.
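If you prepare the value for Schedule Upgrade for Later in a script, the yyyy/mm/dd hh:mm:ss format from step 4 can be produced and checked as sketched below. The sketch assumes a 24-hour clock, which this guide does not state explicitly, and the helper names are hypothetical.

```python
from datetime import datetime

# yyyy/mm/dd hh:mm:ss, assuming hh is a 24-hour value
SCHEDULE_FORMAT = "%Y/%m/%d %H:%M:%S"


def format_schedule(when: datetime) -> str:
    """Render a datetime in the schedule format shown in step 4."""
    return when.strftime(SCHEDULE_FORMAT)


def parse_schedule(text: str) -> datetime:
    """Parse a schedule string; raises ValueError if it does not match the format."""
    return datetime.strptime(text, SCHEDULE_FORMAT)


print(format_schedule(datetime(2016, 3, 5, 22, 30, 0)))  # 2016/03/05 22:30:00
```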

To view software version history

• Under Software Version History on the Software Upgrade page, view the history.

Product Dependencies and Compatibility

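This section provides information about product dependencies and compatibility. It includes the following sections:

• Software and Configuration Requirements

• SteelHead Compatibility

• Ethernet Network Compatibility

• SNMP-Based Management Compatibility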

Software and Configuration Requirements

The following tables summarize the software and configuration requirements for the Riverbed CLI and the SteelHead Interceptor.

Riverbed CLI Configuration Requirements | Software System Requirements |

One of the following: • An ASCII terminal or emulator that can connect to the serial console (9600 baud, 8 bits, no parity, 1 stop bit, and no flow control) • A computer with a Secure Shell (SSH) client that is connected by an IP network to the appliance primary interface | Secure Shell (SSH). Free SSH clients include PuTTY for Windows computers, OpenSSH for many UNIX and UNIX-like operating systems, and Cygwin. |

Riverbed Component | Software Requirements |

Interceptor Management Console | Any computer that supports a web browser with a color image display. JavaScript and cookies must be enabled in your web browser. The Management Console has been tested with the following browsers: • Mozilla Firefox 10.0 and 31 ESR • Microsoft Internet Explorer 7.0, 8.0, and 9.0 • Google Chrome |

SteelHead Compatibility

The SteelHead Interceptor with VLAN segregation enabled is fully compatible with SteelHead 8.0.1 and later and supports up to 24 total interfaces in SteelHead 6.5.6 and later.

Ethernet Network Compatibility

The SteelHead Interceptor supports the following Ethernet networking standards:

• Ethernet Logical Link Control (LLC) (IEEE 802.2 - 1998)

• Fast Ethernet 100 Base-TX (IEEE 802.3 - 2008)

• Gigabit Ethernet over Copper 1000 Base-T and Fiber 1000 Base-SX (LC connector) and Fiber 1000 Base-LX (IEEE 802.3 - 2008)

• 10 Gigabit Ethernet over Fiber 10GBase-LR Single Mode and 10GBase-SR Multimode (IEEE 802.3 - 2008)

The SteelHead Interceptor ports support the following connection types and speeds:

• Primary - 10/100/1000 Base-T, autonegotiating

• Auxiliary - 10/100/1000 Base-T, autonegotiating

• LAN - 10/100/1000 Base-TX or 1000 Base-SX or 1000 Base-LX or 10GBase-LR or 10GBase-SR, depending on configuration

• WAN - 10/100/1000 Base-TX or 1000 Base-SX or 1000 Base-LX or 10GBase-LR or 10GBase-SR, depending on configuration

The SteelHead Interceptor supports VLAN Tagging (IEEE 802.1Q - 2005). It does not support the ISL protocol.

All copper interfaces are autosensing for speed and duplex (IEEE 802.3 - 2008).

The SteelHead Interceptor autonegotiates speed and duplex mode for all data rates and supports full duplex mode and flow control (IEEE 802.3 - 2008).

The SteelHead Interceptor with a Gigabit Ethernet card supports jumbo frames on in-path and primary ports.

SNMP-Based Management Compatibility

The SteelHead Interceptor supports a proprietary Riverbed MIB accessible through SNMP. SNMPv1 (RFCs 1155, 1157, 1212, and 1215), SNMPv2c (RFCs 1901, 2578, 2579, 2580, 3416, 3417, and 3418), and SNMPv3 are supported, although some MIB items might only be accessible through SNMPv2 and SNMPv3.

SNMP support enables the SteelHead Interceptor to be integrated into network management systems such as Hewlett-Packard OpenView Network Node Manager, BMC Patrol, and other SNMP-based network management tools.

Safety Guidelines

Follow the safety precautions outlined in the Safety and Compliance Guide when installing and setting up your equipment.

Caution: Failure to follow these safety guidelines can result in injury or damage to the equipment. Mishandling of the equipment voids all warranties. Read and follow safety guidelines and installation instructions carefully.

Many countries require the safety information to be presented in their national languages. If this requirement applies to your country, consult the Safety and Compliance Guide. The guide contains the safety information in your national language. Before you install, operate, or service Riverbed products, you must be familiar with the safety information associated with them. Refer to the Safety and Compliance Guide if you do not clearly understand the safety information provided in the product documentation.

SteelHead Interceptor Deployment Features

This section describes the SteelHead Interceptor features used in SteelHead Interceptor deployments.

The feature configurations vary depending on the deployment, as described in the following table.

Feature | Description |

Allow failure | Allow failure ensures optimized traffic. When contact between two designated SteelHead Interceptors is lost, the remaining SteelHead Interceptor keeps forwarding connections to ensure that optimization continues. In a parallel configuration, consider enabling this feature if no asymmetric routing is expected in the network. Also note that in a parallel configuration using Path Selection, pass-through traffic will not be able to use path selection if the SteelHead Interceptor is disconnected. In a serial configuration, redundancy is provided by default. This feature is configured in the Networking > Network Services: In-Path Interfaces page. For details, see the SteelHead Interceptor User’s Guide. |

Cluster SteelHead Interceptor | When this feature is enabled, SteelHead Interceptors notify each other when they intercept specific packets. For example, during a connection setup phase, when SteelHead Interceptor A receives a SYN packet, it notifies SteelHead Interceptor B, so that when SteelHead Interceptor B sees the SYN/ACK, it forwards it to the appropriate SteelHead. (During the data phase, both SteelHead Interceptors pass subsequent connections directly to the appropriate SteelHeads.) |

Fail-to-block | When fail-to-block is enabled, a failed SteelHead Interceptor blocks any network traffic on its path, as opposed to passing it through. In a parallel configuration, fail-to-block should be enabled to force all traffic through a cluster SteelHead Interceptor, thereby enabling optimization to continue. This feature is configured in the Networking > Networking: In-Path Interfaces page. For details, see the SteelHead Interceptor User’s Guide. |

Fail-to-wire (bypass) | When fail-to-wire (bypass) is enabled, a failed SteelHead Interceptor passes through network traffic. In a serial or quad configuration, fail-to-wire should be enabled to pass all traffic through to the cluster or failover SteelHead Interceptor, thereby enabling optimization to continue. This feature is configured in the Networking > Networking: In-Path Interfaces page. For details, see the SteelHead Interceptor User’s Guide. |

Failover | Failover enables you to specify a failover counterpart. When failover is configured, a failover SteelHead Interceptor takes over for a failed SteelHead Interceptor. In a serial configuration, you configure the serial SteelHead Interceptors for mutual failover. For details, see the SteelHead Interceptor User’s Guide. |

Deployment Scenarios

You can deploy the SteelHead Interceptor using different deployment scenarios.

Typical SteelHead Interceptor deployments have multiple SteelHead Interceptors and SteelHeads, and usually all appliances have more than one enabled and configured in-path interface. For more details, see the SteelHead Interceptor Deployment Guide.

You can configure the SteelHead Interceptor to be either a serially connected failover SteelHead Interceptor acting as a backup for the same network paths, or a SteelHead Interceptor cluster that covers different network paths or is used in a virtual in-path cluster.

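The SteelHead Interceptor relationships and failure reaction features are typically combined in several ways for actual SteelHead Interceptor deployments. You can deploy SteelHead Interceptor clusters in the following ways:

• Deploying Single SteelHead Interceptors

• Deploying Serial SteelHead Interceptors

• Deploying Parallel SteelHead Interceptors

• Deploying Quad SteelHead Interceptors

• Deploying Octal SteelHead Interceptors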

For more details, see the SteelHead Interceptor Deployment Guide.

Deploying Single SteelHead Interceptors

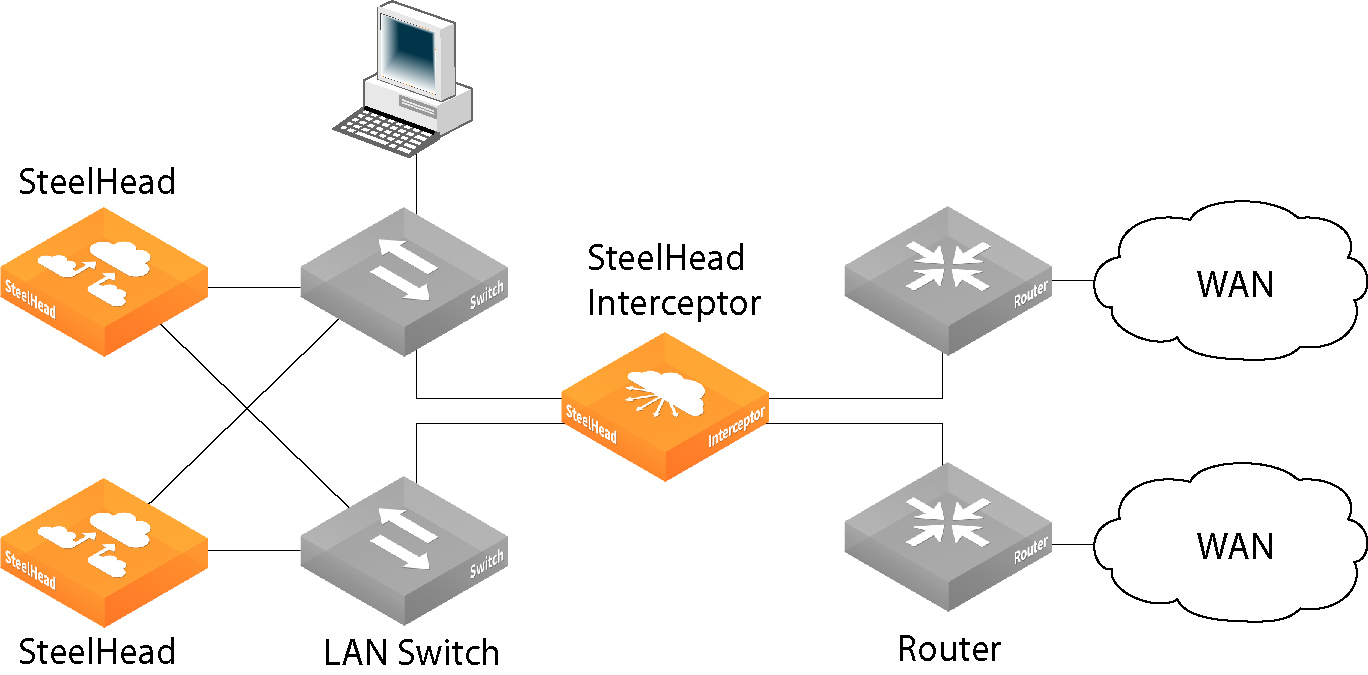

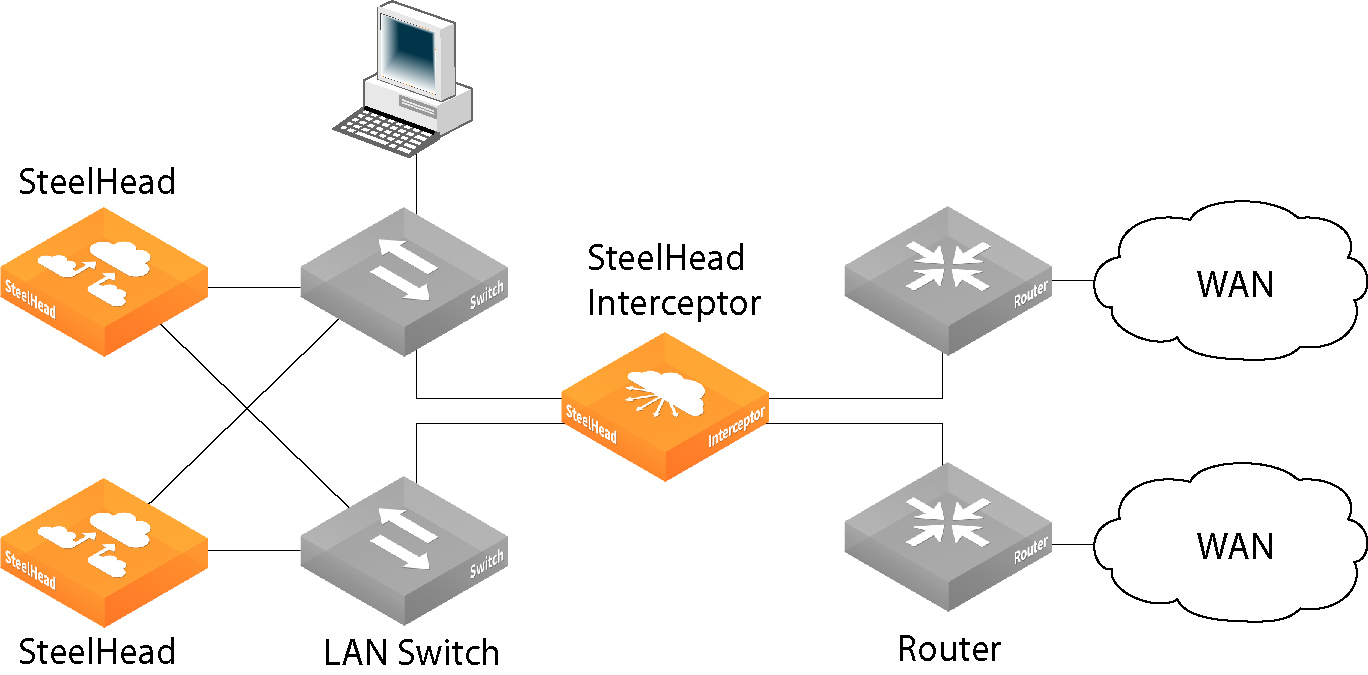

Figure: Basic Single SteelHead Interceptor Deployment shows a basic single SteelHead Interceptor deployment.

Caution: To enable full transparency, do not position any router that might generate ICMP “fragmentation needed” or “forward” messages between the SteelHead Interceptor and SteelHeads. Such messages, if not routed through the SteelHead Interceptor, are not received by the SteelHeads, resulting in a possible connection failure.

Figure: Basic Single SteelHead Interceptor Deployment

In this deployment, the following elements apply:

• SteelHead Interceptor - This deployment features one SteelHead Interceptor.

• Cluster SteelHeads - This deployment features two SteelHeads.

Deploying Serial SteelHead Interceptors

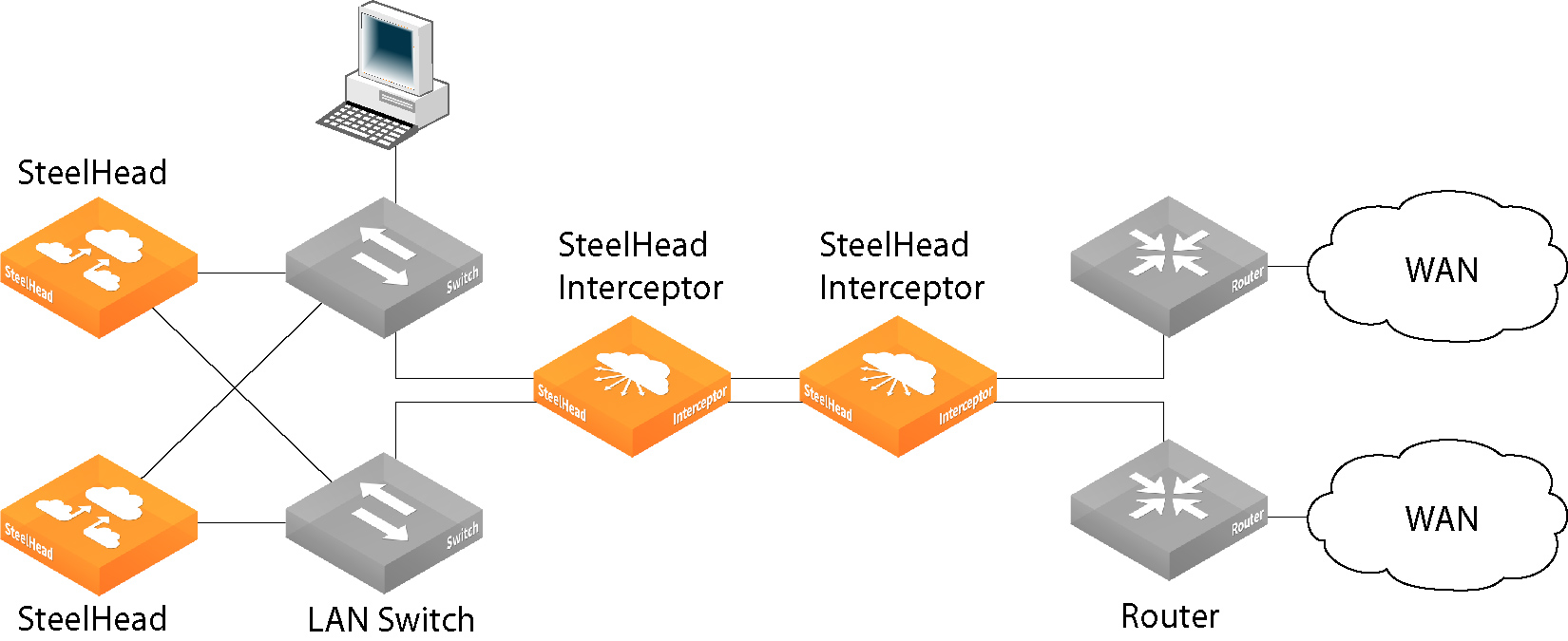

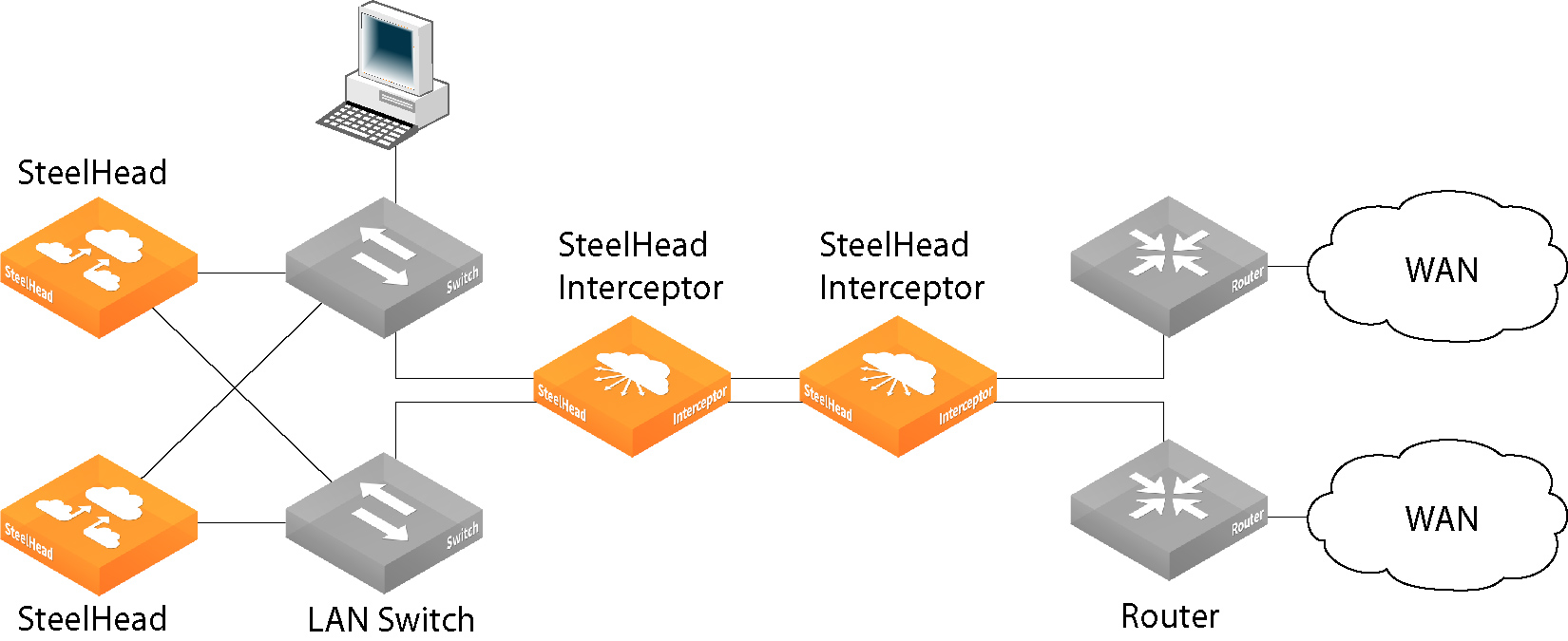

Figure: Serial SteelHead Interceptors, Fail-to-Wire Dual-Connected SteelHeads

In this deployment, the following element applies:

• Fail-to-wire - If either SteelHead Interceptor fails, traffic passes through to the other SteelHead Interceptor.

Failure Handling

In Figure: Serial SteelHead Interceptors, Fail-to-Wire Dual-Connected SteelHeads, each in-line SteelHead Interceptor is configured for mutual failover to ensure high availability. If either SteelHead Interceptor fails, the other appliance directs all network traffic.

Deploying Parallel SteelHead Interceptors

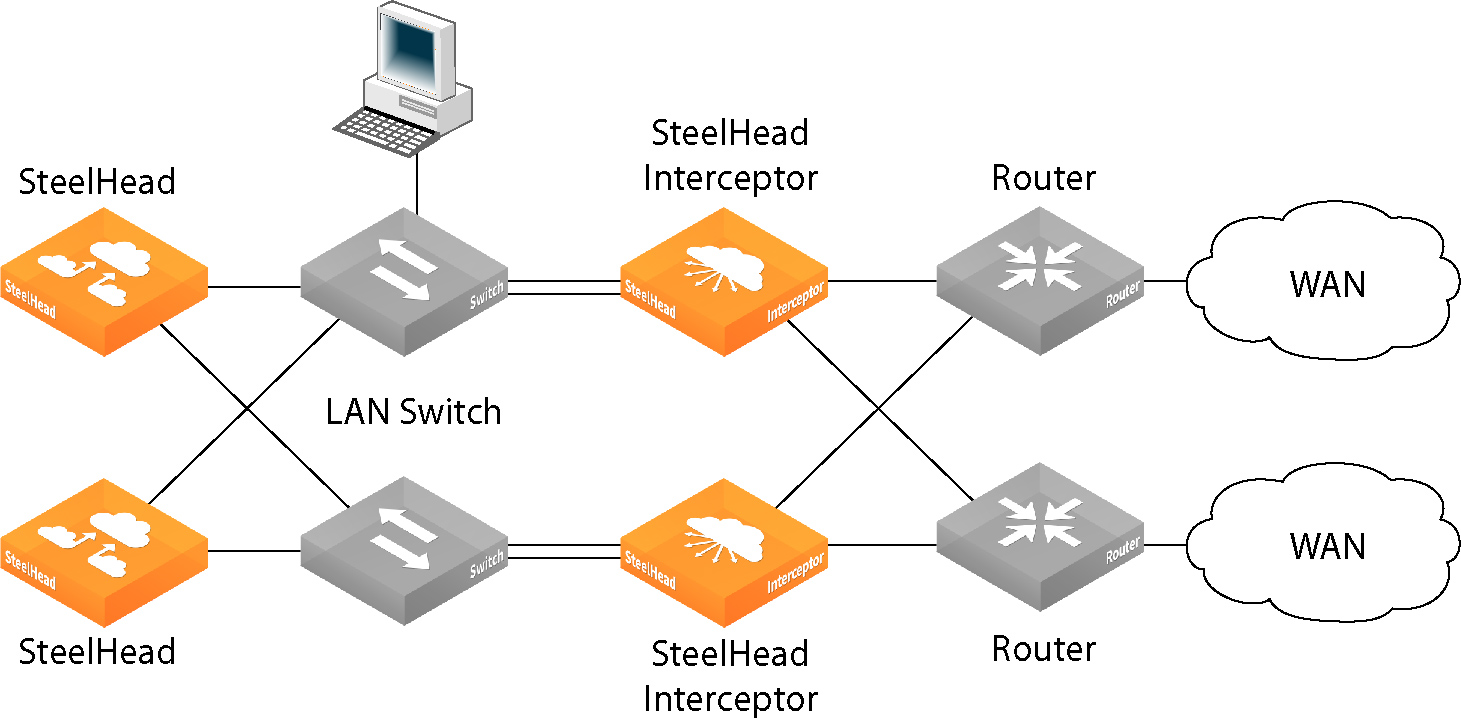

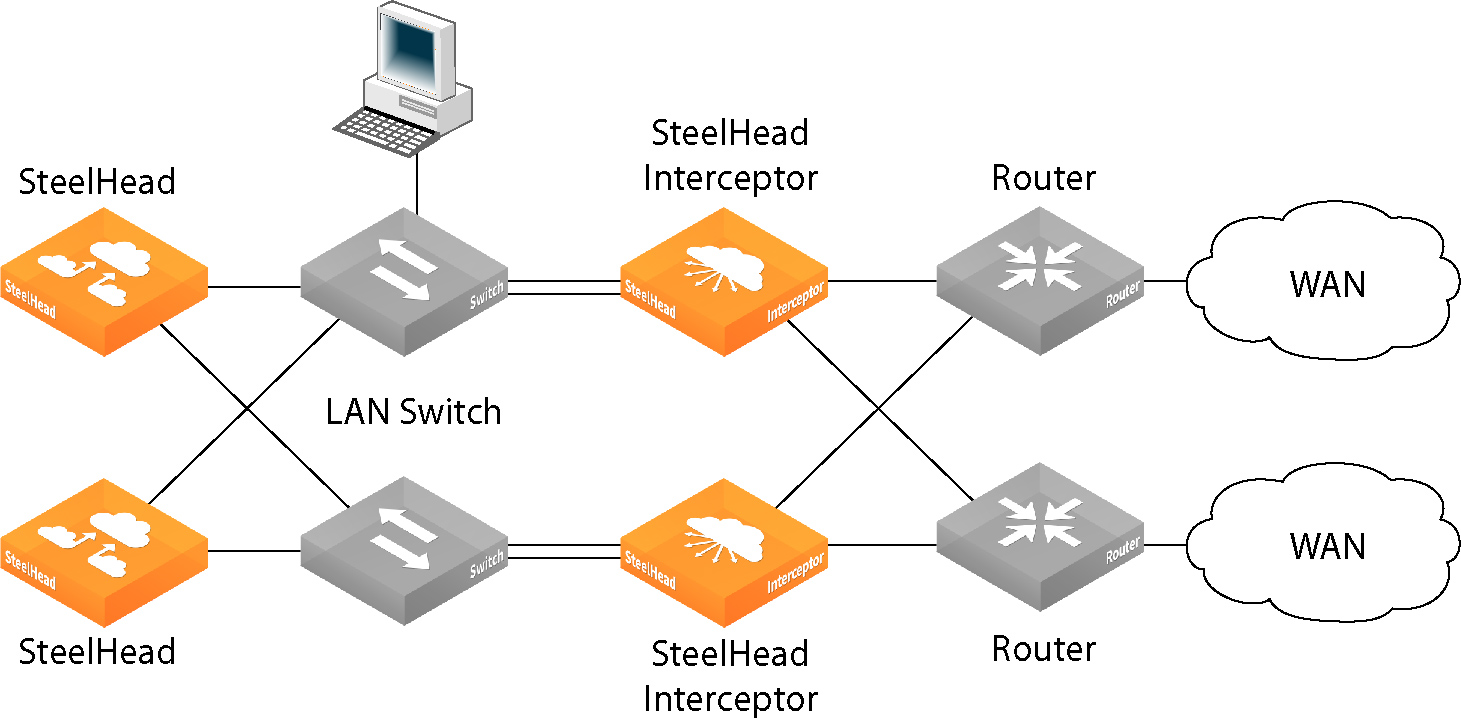

Figure: Parallel SteelHead Interceptors, Fail-to-Block with Dual-Connected SteelHeads

In this deployment, the following elements apply:

• Fail-to-block - This feature is enabled. If either SteelHead Interceptor fails, it blocks traffic, and the network automatically reroutes traffic through the remaining SteelHead Interceptor.

• Connection-forwarding counterpart - This feature is enabled when the SteelHead Interceptors can notify each other about intercepted connections.

• Allow failure - This feature is optional (but recommended as a best practice). If contact is lost between the cluster SteelHead Interceptors, the remaining SteelHead Interceptor continues directing all connections, ensuring that optimization continues.

Failure Handling

In Figure: Parallel SteelHead Interceptors, Fail-to-Block with Dual-Connected SteelHeads, if a SteelHead Interceptor fails, fail-to-block causes connections to route through the other SteelHead Interceptor, thereby allowing optimization to continue. However, delays might result from traffic reconvergence.

Deploying Quad SteelHead Interceptors

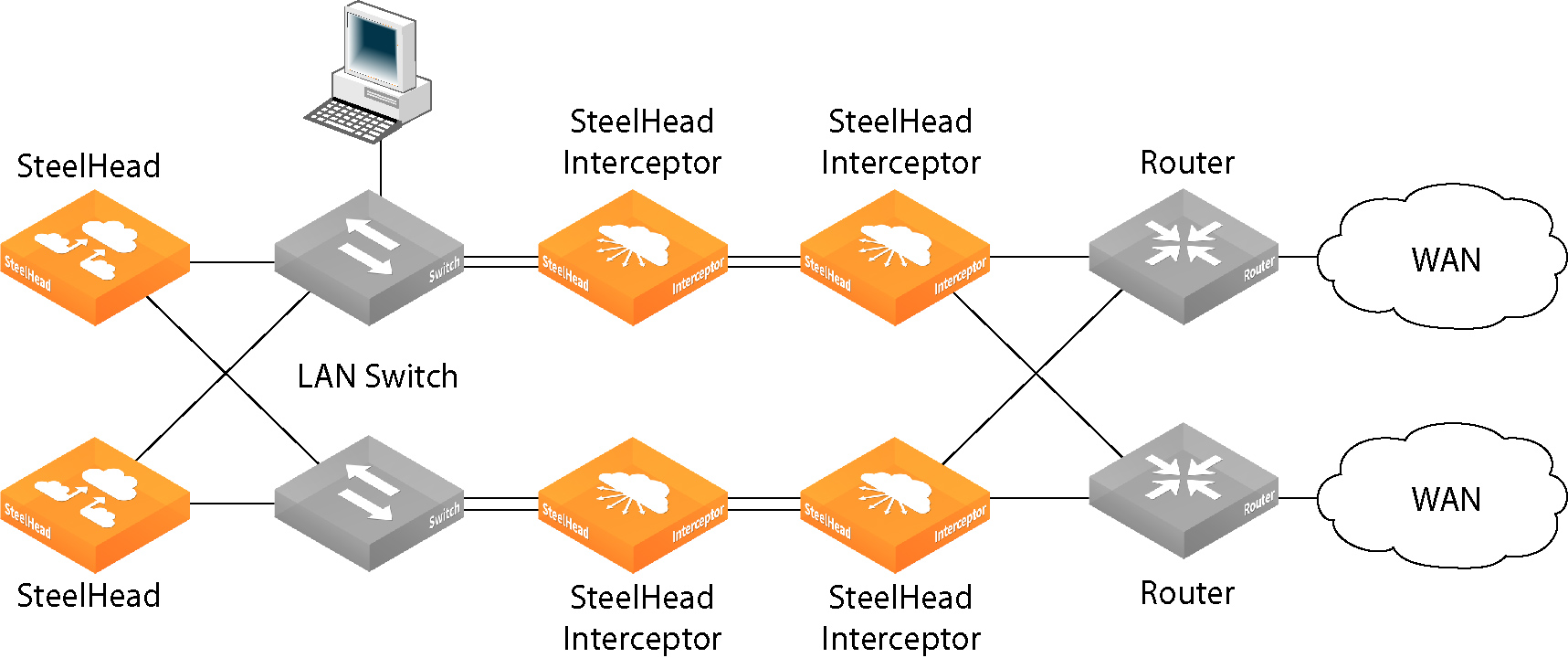

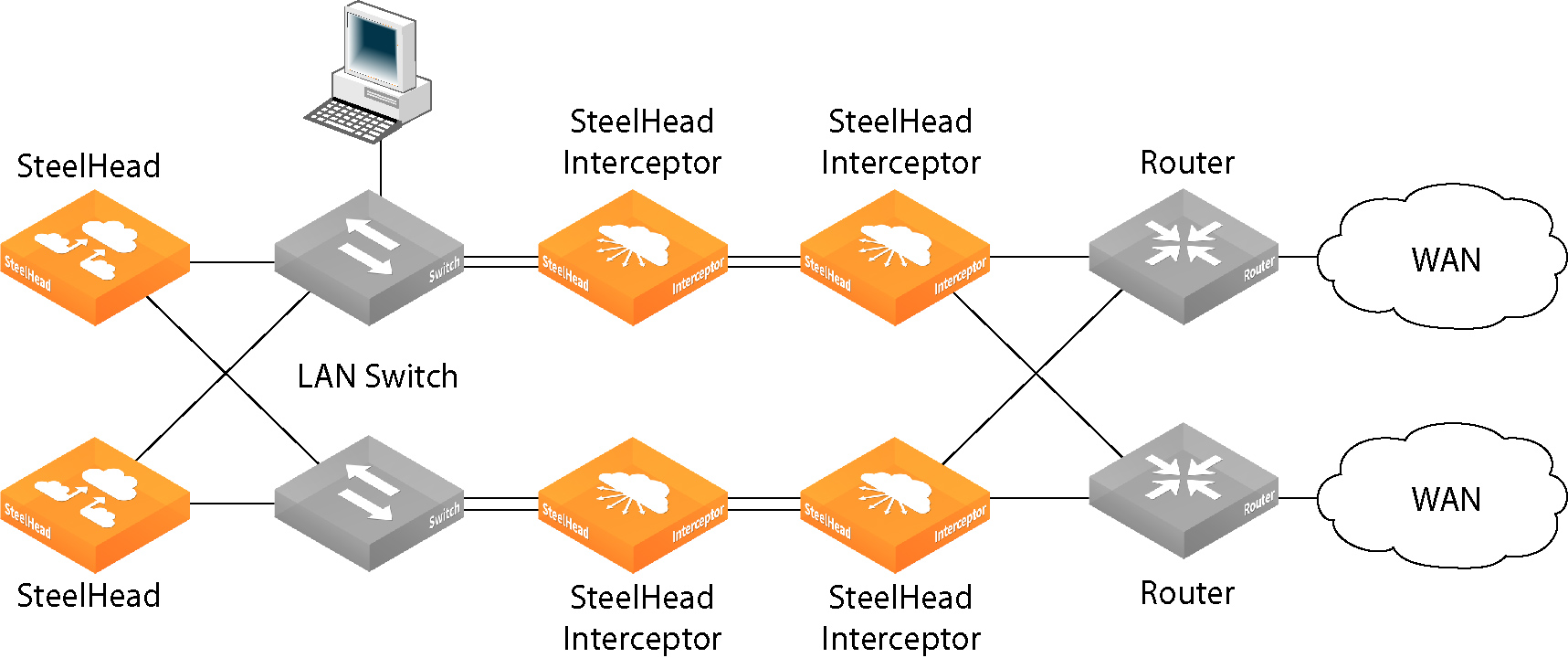

Figure: Quad SteelHead Interceptors with Dual-Connected SteelHeads

In this deployment, the following elements apply:

• Failover Appliance - Each in-line SteelHead Interceptor is configured to provide failover support for the other.

• Fail-to-Wire - If either in-line SteelHead Interceptor fails, traffic passes through to the other SteelHead Interceptor.

• Clusters - This feature is enabled where clustered SteelHead Interceptors can notify each other about intercepted connections.

Failure Scenarios

In Figure: Quad SteelHead Interceptors with Dual-Connected SteelHeads, each parallel network uses two in-line SteelHead Interceptors. Each in-line SteelHead Interceptor is configured as fail-to-wire, so if one fails, network traffic passes through to its in-line counterpart, which is configured for failover.

This scenario is distinct from the parallel network configuration shown in Deploying Parallel SteelHead Interceptors because the in-line redundancy eliminates the need for fail-to-block and allow failure configurations.
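As a rough summary of the serial, parallel, and quad deployments described above, the following sketch maps each deployment type to the failure-related features this chapter associates with it, and reports what happens to traffic when one SteelHead Interceptor fails. The dictionary, its keys, and the `on_failure` function are hypothetical names used for illustration, not configuration syntax.

```python
# Failure-related features per deployment type, as described in this chapter.
DEPLOYMENT_FEATURES = {
    "serial": {"fail-to-wire", "failover"},
    "parallel": {"fail-to-block", "cluster", "allow failure (optional)"},
    "quad": {"fail-to-wire", "failover", "cluster"},
}


def on_failure(deployment: str) -> str:
    """Describe where traffic goes when one SteelHead Interceptor fails."""
    try:
        features = DEPLOYMENT_FEATURES[deployment]
    except KeyError:
        raise ValueError(f"unknown deployment type: {deployment}") from None
    if "fail-to-wire" in features:
        return "passes through to the in-line failover counterpart"
    # The remaining case in this table is fail-to-block.
    return "is blocked, so the network reroutes it through the remaining SteelHead Interceptor"


for name in DEPLOYMENT_FEATURES:
    print(f"{name}: traffic {on_failure(name)}")
```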

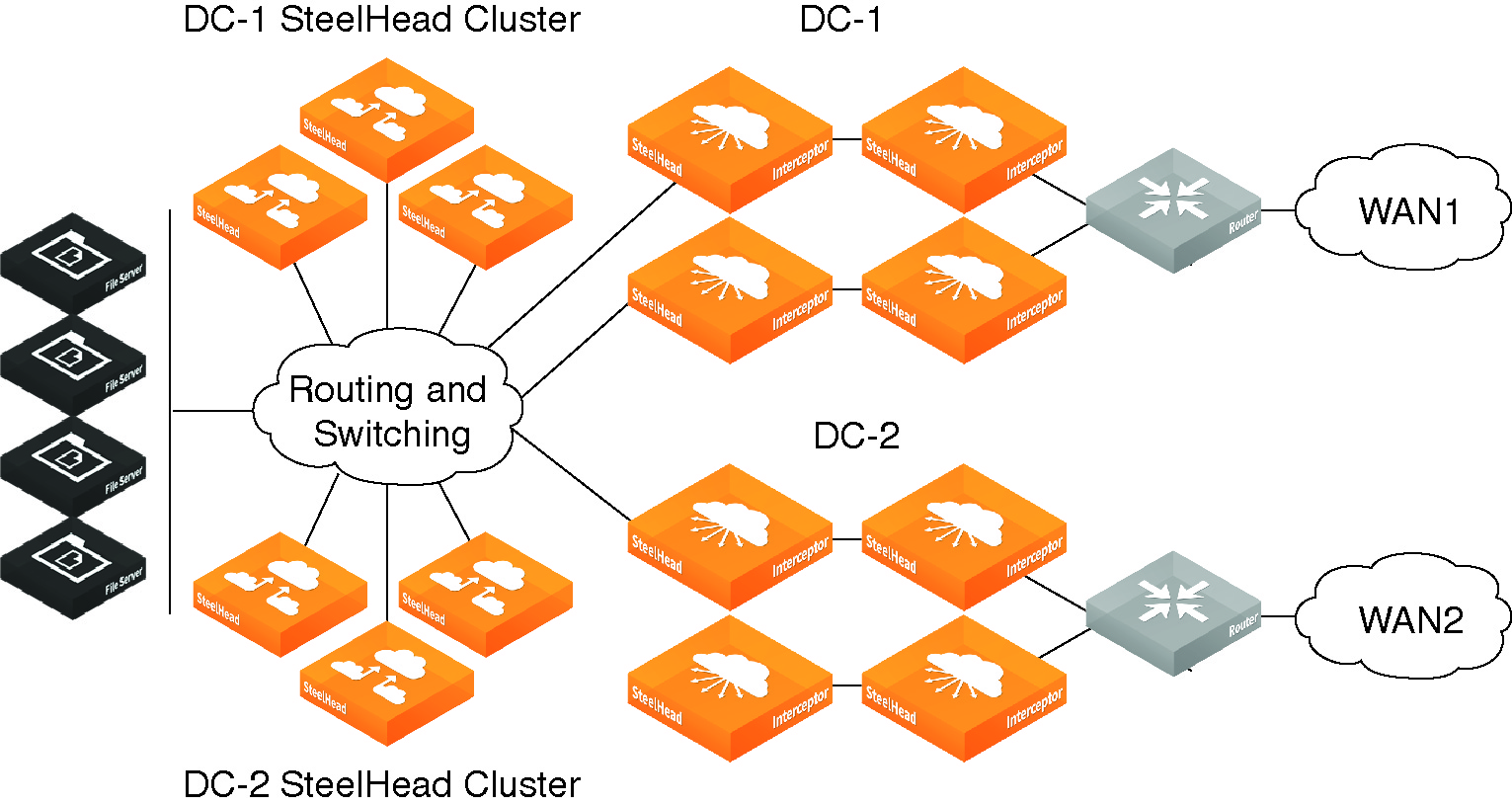

Deploying Octal SteelHead Interceptors

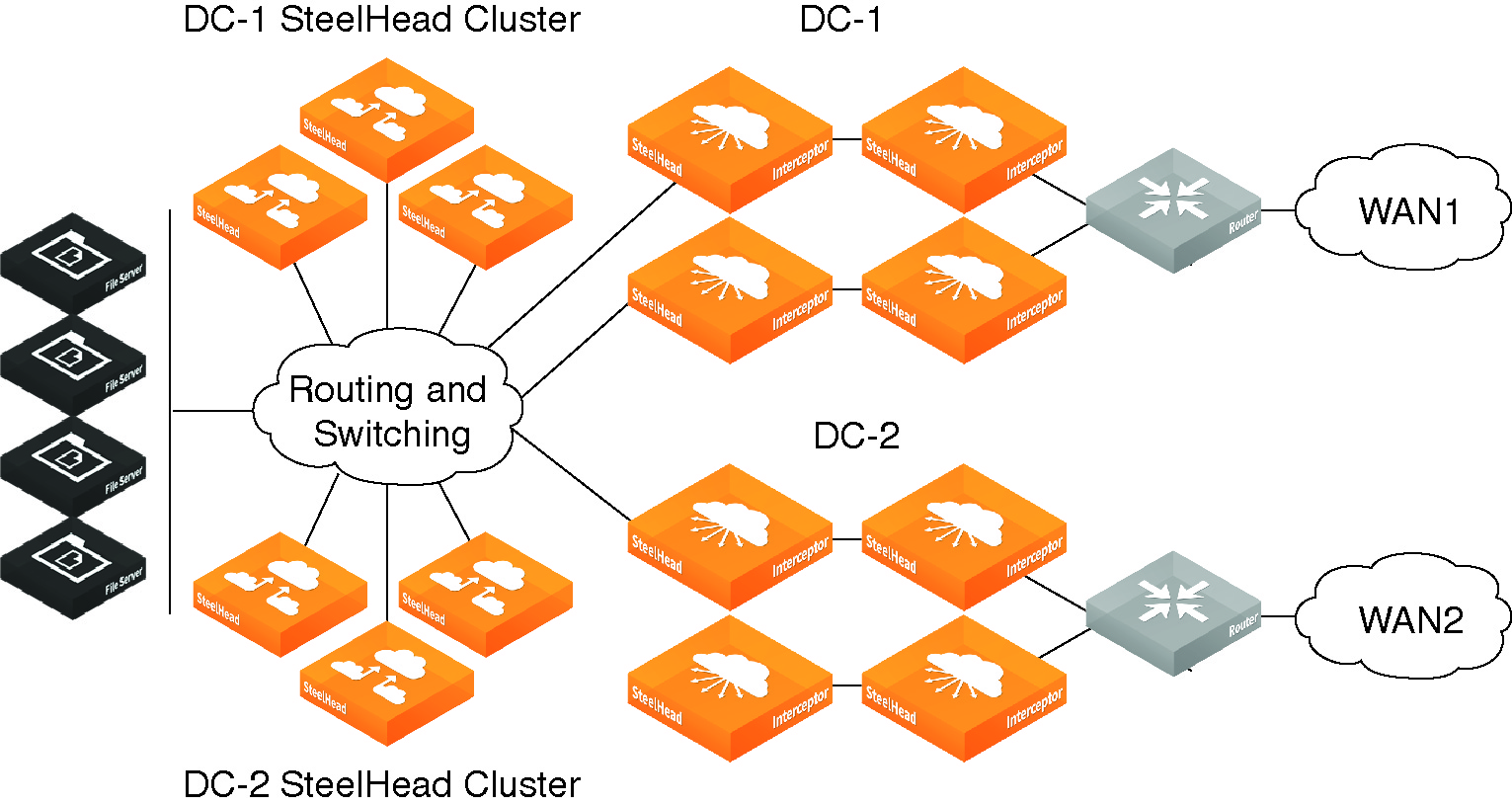

Figure: Octal SteelHead Interceptor Deployment shows an octal SteelHead Interceptor deployment. An octal SteelHead Interceptor deployment consists of two quad deployments split across a data center.

Figure: Octal SteelHead Interceptor Deployment