About policy settings for in-path rules

You configure in-path optimization rules for your Client Accelerator endpoint in the In-Path Rules tab of the Manage > Services: Policies page.

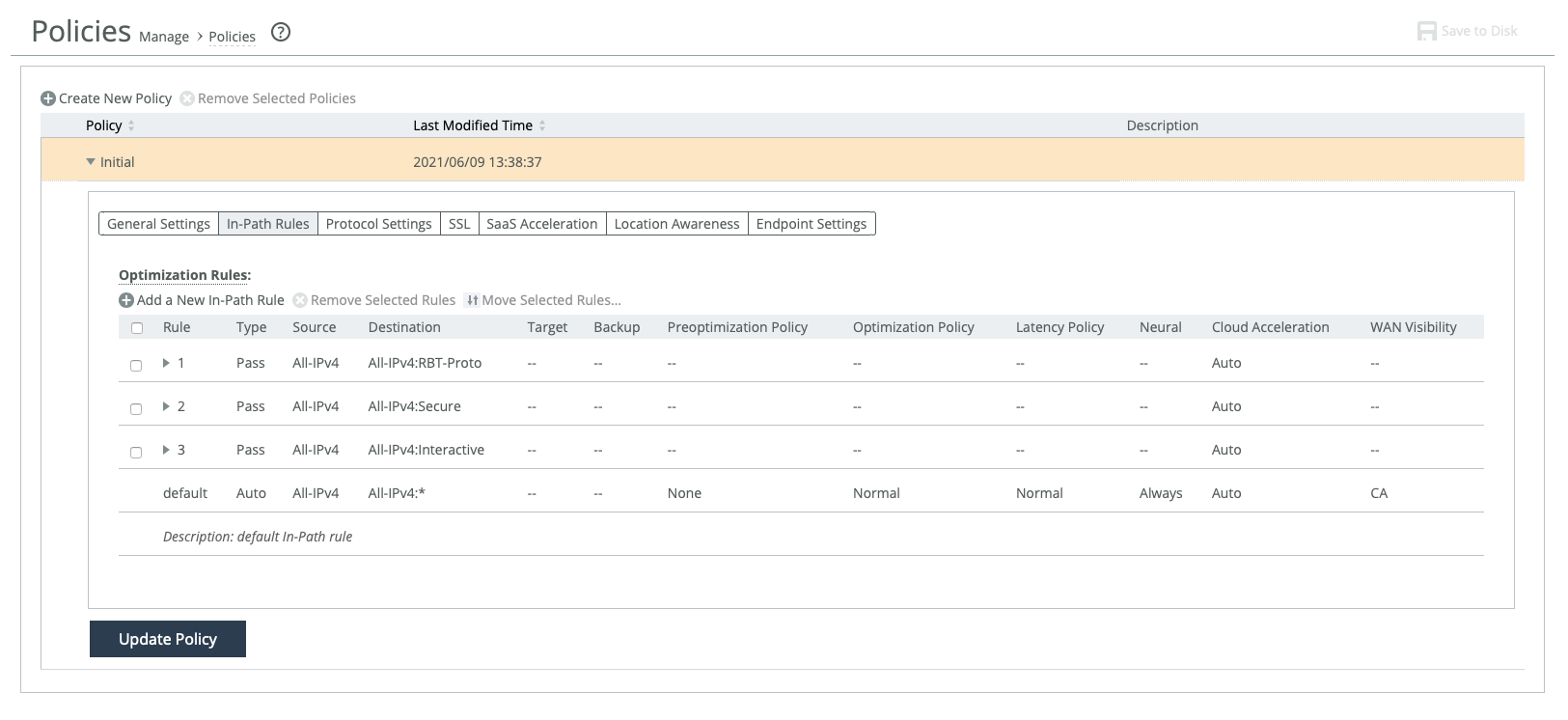

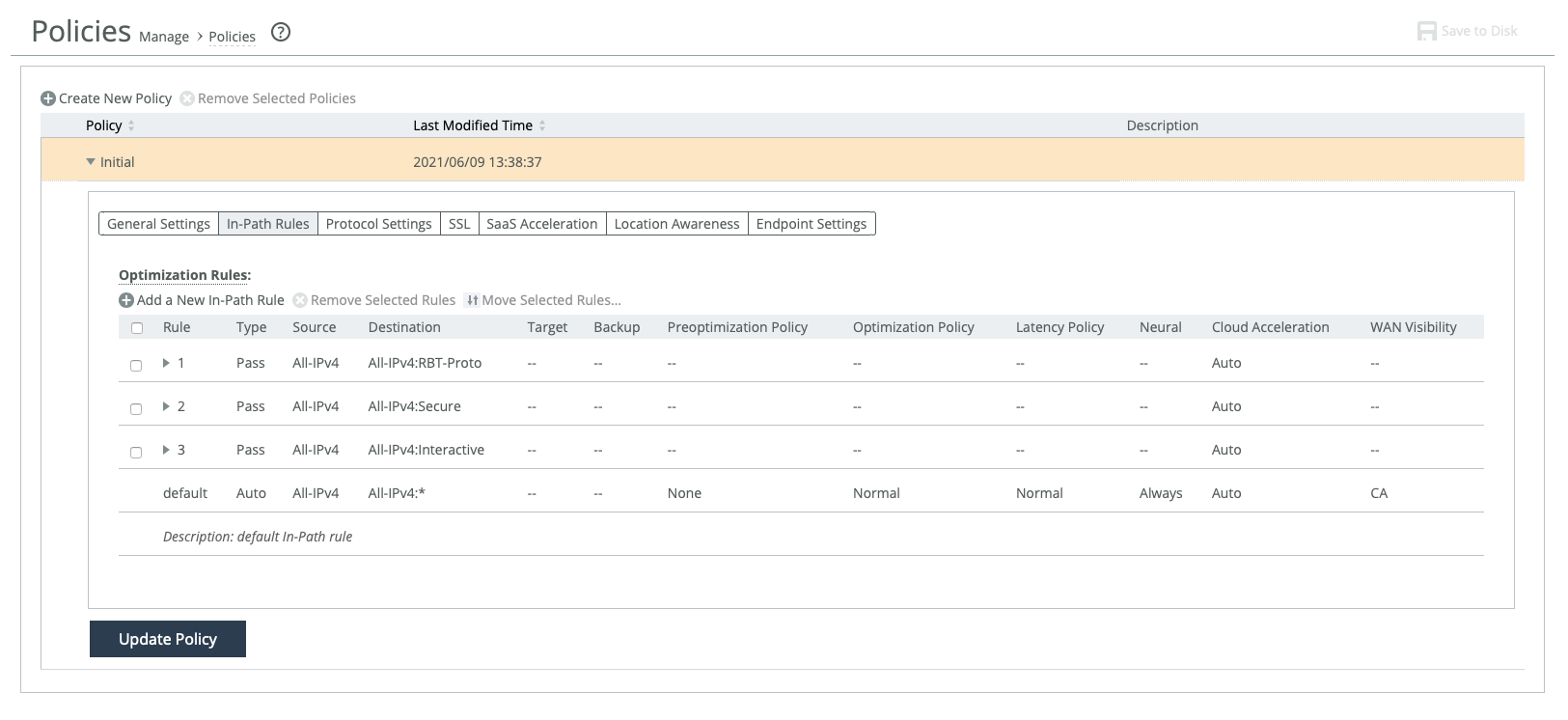

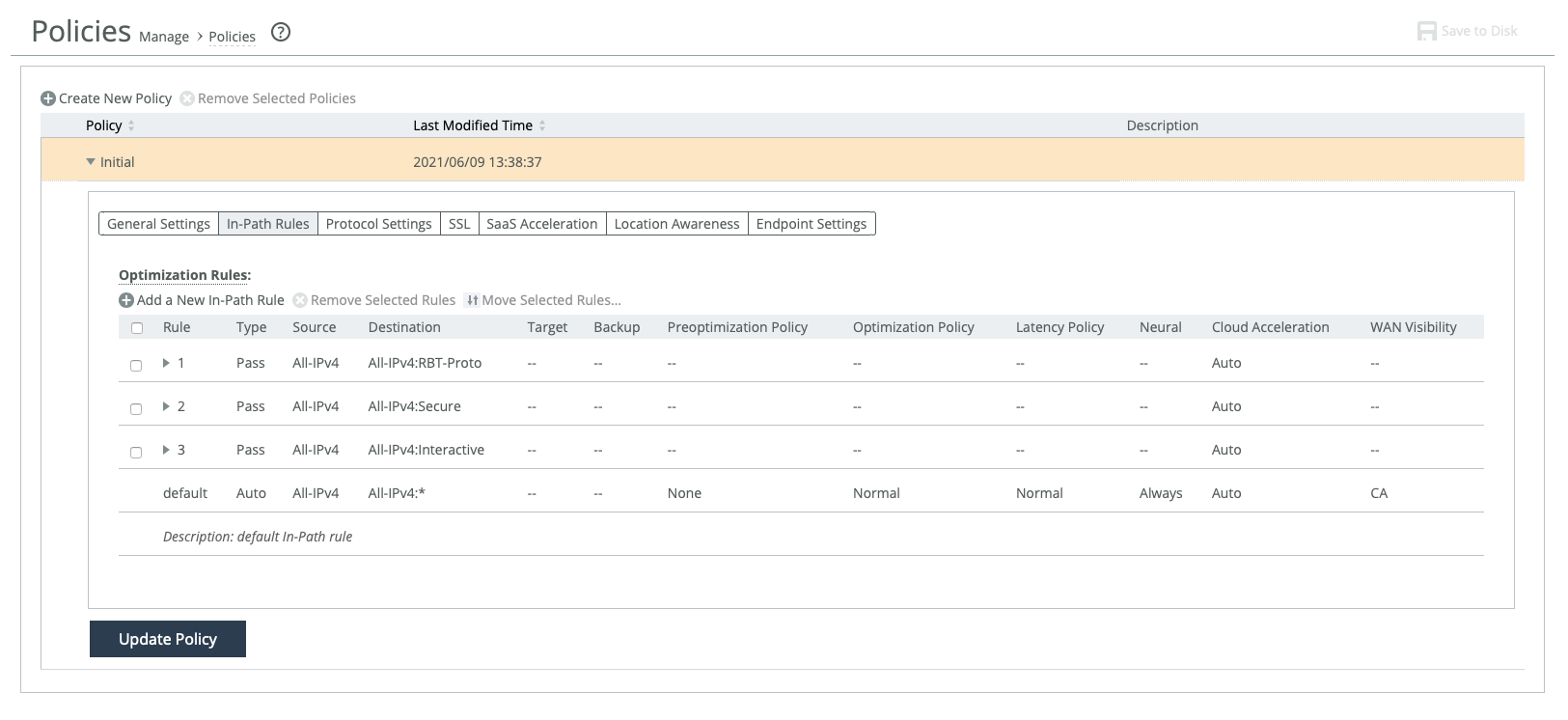

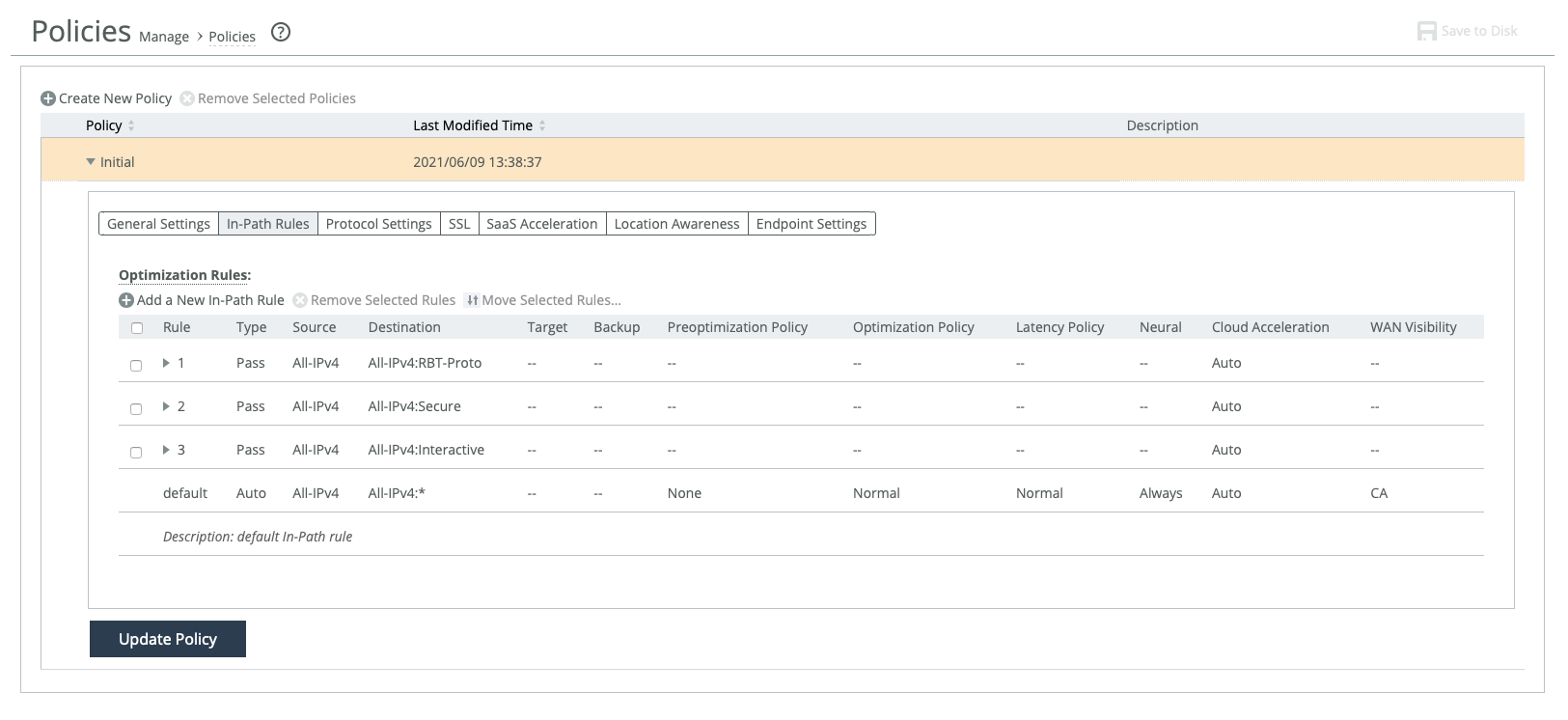

Policies—In-Path Rules page

In-path rules determine the Client Accelerator endpoint behavior with SYN packets. In-path rules are an ordered list of fields that a controller matches against SYN packet fields (for example, source or destination subnet, IP address, VLAN, or TCP port). Each in-path rule has an action field. When a controller finds a matching in-path rule for a SYN packet, the controller treats the packet according to the action specified in the in-path rule.

In-path rule configurations differ depending on the action. For example, both the fixed-target and the autodiscovery actions allow you to choose what type of optimization is applied, what type of data reduction is used, what type of latency optimization is applied, and so on.

For details about in-path rules, see the SteelHead User Guide.

Type

Autodiscover rules enable the controller to automatically intercept and optimize traffic on all IP addresses and ports. By default, autodiscover is applied to all IP addresses and ports that are not secure, interactive, or default Riverbed ports. Defining in-path rules overrides this setting. For details, see the SteelHead User Guide.

Fixed-target rules specify that a controller always goes to a specific SteelHead first. Fixed-target rules can be used if the SteelHead is located out-of-path, or for troubleshooting purposes. When you select this option, you also must set a target SteelHead and, optionally, a backup SteelHead.

• Target Appliance IP Address—Specify the IP address and port number for your target SteelHead.

• Backup Appliance IP Address—Specify the IP address and port number for your backup SteelHead.

Pass-through rules identify traffic that is passed through the network unoptimized. You define pass-through rules to exclude subnets from optimization. Traffic is also passed through when the SteelHead is in bypass mode. The controller might pass traffic through because of a pass-through rule, or because the connection was established before the controller was put in place or before the service was enabled.

Discard rules drop the SYN packets silently. The controller filters out traffic that matches the discard rules. This process is similar to how routers and firewalls drop disallowed packets: the connection-initiating application has no knowledge of the fact that its packets were dropped until the connection times out.

Deny rules drop the SYN packets, send a message back to their source, and reset the TCP connection being attempted. Using an active reset process rather than a silent discard allows the connection initiator to know that its connection is disallowed.

Position

Select Start, End, or a rule number from the drop-down list. The controller evaluates rules in numerical order starting with rule 1. If the conditions set in a rule match, the rule is applied and the system moves on to the next packet. If the conditions set in a rule don’t match, the system consults the next rule. For example, if the conditions of rule 1 don’t match, rule 2 is consulted. If the conditions of rule 2 match, rule 2 is applied and no further rules are consulted.

The default rule, Auto-Discover, which optimizes all remaining traffic that has not been selected by another rule, can’t be removed and is always listed last.
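The first-match evaluation described above can be sketched in a few lines of Python. This is only an illustration of the ordering logic, not the controller's implementation: the `InPathRule` class, its fields, and the `select_action` function are hypothetical names, and real rules match on more fields than destination subnet and port.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass(frozen=True)
class InPathRule:
    """Hypothetical, simplified rule; real rules carry many more fields."""
    action: str                      # for example "auto-discover" or "deny"
    dest_subnet: str = "0.0.0.0/0"   # 0.0.0.0/0 matches every IPv4 destination
    dest_port: Optional[int] = None  # None stands for "all ports"

    def matches(self, dest_ip: str, dest_port: int) -> bool:
        in_subnet = ip_address(dest_ip) in ip_network(self.dest_subnet)
        port_ok = self.dest_port is None or self.dest_port == dest_port
        return in_subnet and port_ok


# The default rule is always listed last and matches all remaining traffic.
DEFAULT_RULE = InPathRule(action="auto-discover")


def select_action(rules: List[InPathRule], dest_ip: str, dest_port: int) -> str:
    """Return the action of the first matching rule, in list order."""
    for rule in rules + [DEFAULT_RULE]:
        if rule.matches(dest_ip, dest_port):
            return rule.action  # first match wins; later rules are not consulted
    raise AssertionError("unreachable: the default rule matches all traffic")


# Illustrative rule list: rule 1 is consulted before rule 2.
rules = [
    InPathRule(action="pass-through", dest_subnet="10.1.0.0/16"),
    InPathRule(action="deny", dest_subnet="10.0.0.0/8", dest_port=23),
]
```

With this list, a connection to 10.1.2.3 on port 23 is handled by rule 1 even though rule 2 also matches it, which is why rule position matters.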

Source subnet or source label

Specify the subnet IP address and netmask for the source network. Use this IPv4 format for an individual subnet IP address and netmask: xxx.xxx.xxx.xxx/xx. You can enter 0.0.0.0/0 as the wildcard for all traffic.

The All-IPv4 option is the default. It specifies all IPv4 traffic subnets as source.

The IPv4 option prompts you to enter IPv4 subnets. You can enter up to 64 subnets.
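As a rough illustration of the subnet format and the 64-subnet limit, the following Python sketch validates a list of entries with the standard `ipaddress` module. The function name `parse_source_subnets` is hypothetical, and rejecting entries with host bits set is an assumption about strictness, not documented product behavior.

```python
from ipaddress import IPv4Network, ip_address
from typing import List

MAX_SUBNETS = 64  # limit stated above for the IPv4 option


def parse_source_subnets(entries: List[str]) -> List[IPv4Network]:
    """Validate entries written as xxx.xxx.xxx.xxx/xx and return networks."""
    if len(entries) > MAX_SUBNETS:
        raise ValueError(f"at most {MAX_SUBNETS} subnets are allowed")
    networks = []
    for entry in entries:
        if "/" not in entry:
            raise ValueError(f"missing netmask in {entry!r}")
        # strict=True rejects entries whose host bits are set, such as
        # 10.1.2.3/16. Whether the product does the same is an assumption.
        networks.append(IPv4Network(entry, strict=True))
    return networks


nets = parse_source_subnets(["0.0.0.0/0", "192.168.10.0/24"])
wildcard, office = nets
```

Here `wildcard` contains every IPv4 address, so a rule that uses 0.0.0.0/0 as its source matches traffic from any source.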

The Source Label option is active only if source labels are configured on the appliance. You can configure either a source label or a destination port label for a rule, but not both. See About source labels.

Destination subnet, or host or app label

The All IPv4 option maps to 0.0.0.0/0.

The IPv4 option prompts you for a specific IPv4 address. Use this format for an individual subnet IP address and netmask: xxx.xxx.xxx.xxx/xx

The Host Label option prompts you to select a host label. Host labels enable you to selectively optimize connections to specific services. A host label includes a fully qualified domain name (hostname) and/or a list of subnets. Host labels replace the destination. When you select a host label, Client Accelerator ignores any destination IP address specified within the in-path rule. See About host labels. The Management Console dims the host label selection when there aren’t any host labels.

The SaaS Application option defines an in-path rule for SaaS optimization through SaaS Accelerator. When you choose SaaS Application, you also need to specify an application (such as SharePoint for Business) or an application bundle (such as Microsoft Office 365). Only applications and application bundles set up for SaaS acceleration on SAM appear in the list. For detailed information on SaaS Accelerator, see Configuring Interoperability with SaaS Accelerator and the SaaS Accelerator Manager User Guide.

Destination port or port label

This setting specifies a destination port number, port label, or all ports for the rule. You can configure either a source label or a destination port label for a rule, but not both.

Destination domain label

This setting specifies a domain label to optimize a specific service or application with an autodiscover, passthrough, or fixed-target rule. See About domain labels.

An in-path rule with a domain label uses two layers of match conditions. The in-path rule still sets a destination IP address and subnet (or uses a host label or port). Any traffic that matches the destination first must also be going to a domain that matches the entries in the domain label. The connection must match both the destination and the domain label. When the entries in the domain label don’t match, the system looks to the next matching rule. Domain labels are compatible with IPv4 only.
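The two layers of match conditions can be sketched as follows. This is a simplified model under stated assumptions: the `Rule` class and function names are hypothetical, domain label entries are treated as shell-style wildcard patterns (the actual entry syntax is not described here), and the sketch does not model the fallback behavior for specific rule types.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from ipaddress import ip_address, ip_network
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule with a destination and an optional domain label."""
    name: str
    dest_subnet: str
    domain_label: Optional[Tuple[str, ...]] = None  # None: no domain label


def rule_matches(rule: Rule, dest_ip: str, domain: str) -> bool:
    # Layer 1: the destination must match first.
    if ip_address(dest_ip) not in ip_network(rule.dest_subnet):
        return False
    # Layer 2: if the rule has a domain label, the domain must match too.
    if rule.domain_label is None:
        return True
    name = domain.lower()
    # Wildcard matching is an assumption made for this sketch.
    return any(fnmatchcase(name, p.lower()) for p in rule.domain_label)


def first_match(rules: List[Rule], dest_ip: str, domain: str) -> Optional[str]:
    """Return the name of the first rule matching both layers, if any."""
    for rule in rules:
        if rule_matches(rule, dest_ip, domain):
            return rule.name
    return None


rules = [
    Rule("rule-1", "0.0.0.0/0", domain_label=("*.example.com",)),
    Rule("rule-2", "0.0.0.0/0"),
]
```

A connection to `app.example.com` is handled by `rule-1`. A connection to `app.example.org` matches the destination of `rule-1` but not its domain label, so evaluation continues and `rule-2` applies.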

Configuring an in-path rule with the domain-label set to DISCARD/DENY/FORWARD as the first rule causes traffic to drop or pass through. This is because the rule matching terminates on the first rule. These rules must always be configured as a rule that falls back to an autodiscover or fixed-target rule to limit optimization that is dynamically based on an HNBI decision. When HNBI fails to match the domain label tied with the current matched rule, it will attempt to match the domain label tied to other rules, if present. If it hits an autodiscover rule after it first hits a fixed-target rule, the system passes through traffic.

Target appliance IP address

This setting specifies the target appliance’s IP address and port number for a fixed-target rule.

Backup appliance IP address

This setting specifies the target backup appliance’s IP address and port number for a fixed-target rule.

Preoptimization policy

None is the default setting. Select None if the Oracle Forms, SSL, or Oracle Forms-over-SSL preoptimization policy is enabled and you want to disable it for a port.

Port 443 always uses a preoptimization policy of SSL even if an in-path rule on the client-side SteelHead sets the preoptimization policy to None. To disable the SSL preoptimization for traffic to port 443, you can either:

• disable the SSL optimization on the client-side or server-side SteelHead.

—or—

• modify the peering rule on the server-side SteelHead by setting the SSL Capability control to No Check.

Oracle Forms enables preoptimization processing for Oracle Forms. This policy is not compatible with IPv6.

Oracle Forms over SSL enables preoptimization processing for both the Oracle Forms and SSL encrypted traffic through SSL secure ports on the client-side SteelHead. You must also set the Latency Optimization Policy to HTTP. This policy is not compatible with IPv6. If the server is running over a standard secure port—for example, port 443—the Oracle Forms over SSL in-path rule needs to be before the default secure port pass-through rule in the in-path rule list.

SSL enables preoptimization processing for SSL encrypted traffic through SSL secure ports on the client-side SteelHead.

Optimization policy

Optionally, if the rule type is Auto-Discover or Fixed Target, you can configure these types of data reduction policies:

• Normal—Performs LZ compression and SDR.

• SDR-Only—Performs SDR; doesn’t perform LZ compression.

• Compression-Only—Performs LZ compression; doesn’t perform SDR.

• None—Doesn’t perform SDR or LZ compression.
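The four data reduction policies are the four combinations of two independent techniques, LZ compression and SDR. A small Python enumeration makes that mapping explicit. The class and property names are illustrative only and do not correspond to any product API.

```python
from enum import Enum


class OptimizationPolicy(Enum):
    """Each value is (performs LZ compression, performs SDR)."""
    NORMAL = (True, True)
    SDR_ONLY = (False, True)
    COMPRESSION_ONLY = (True, False)
    NONE = (False, False)

    @property
    def lz_compression(self) -> bool:
        return self.value[0]

    @property
    def sdr(self) -> bool:
        return self.value[1]


# Example lookup: which policies still perform SDR?
sdr_policies = [p.name for p in OptimizationPolicy if p.sdr]
```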

Latency optimization policy

Normal performs all latency optimizations (HTTP is activated for ports 80 and 8080). This is the default setting.

HTTP activates HTTP optimization on connections matching this rule.

Outlook Anywhere enables Outlook Anywhere latency optimization. Outlook Anywhere is a feature of Microsoft Exchange Server that allows Microsoft Office Outlook clients to connect to their Exchange servers over the internet using the Microsoft RPC tunneling protocol. For details about Outlook Anywhere, see the SteelHead User Guide.

Citrix activates Citrix-over-SSL optimization on connections matching this rule. This policy is not compatible with IPv6.

Add an in-path rule to the server-side SteelHead that specifies the Citrix Access Gateway IP address, select this latency optimization policy on the server-side SteelHeads, and set the preoptimization policy to SSL. The server-side SteelHeads must be running RiOS 7.0 or later. SSL must be enabled on the Citrix Access Gateway. On the server-side SteelHead, enable SSL and install the SSL server certificate for the Citrix Access Gateway.

The server-side SteelHeads establish an SSL channel between themselves to secure the optimized Independent Computing Architecture (ICA) traffic. End users log in to the Access Gateway through a browser (HTTPS) and access applications through the web interface. Clicking an application icon starts the Online Plug-in, which establishes an SSL connection to the Access Gateway. The ICA connection is tunneled through the SSL connection. The SteelHead decrypts the SSL connection from the user device, applies ICA latency optimization, and reencrypts the traffic over the internet. The server-side SteelHead decrypts the optimized ICA traffic and reencrypts the ICA traffic into the original SSL connection destined to the Access Gateway.

Exchange Autodetect automatically detects MAPI transport protocols (Autodiscover, Outlook Anywhere, and MAPI over HTTP) and HTTP traffic. For MAPI transport protocol optimization, enable SSL and install the SSL server certificate for the Exchange Server on the server-side SteelHead. To activate MAPI-over-HTTP bandwidth and latency optimization, you must also choose Manage > Services: Policies and select the Protocol Settings tab, select the MAPI tab, and click Enable MAPI over HTTP optimization on the server-side SteelHead. The server-side SteelHeads must be running RiOS 9.2 or later for MAPI-over-HTTP latency optimization.

None does not activate latency optimization on connections matching this rule. Setting the Latency Optimization Policy to None excludes all latency optimizations, such as HTTP, MAPI, and SMB. For Oracle Forms-over-SSL encrypted traffic, you must set the Latency Optimization Policy to HTTP instead.

Neural framing mode

Optionally, if you have selected Auto-Discover or Fixed Target, you can select a neural framing mode for the in-path rule. Neural framing enables the system to select the optimal packet framing boundaries for Scalable Data Referencing (SDR). Neural framing creates a set of heuristics to intelligently determine the optimal moment to flush TCP buffers. The system continuously evaluates these heuristics and uses the optimal heuristic to maximize the amount of buffered data transmitted in each flush, while minimizing the amount of idle time that the data sits in the buffer.

You can specify these neural framing settings:

• Never—Don’t use the Nagle algorithm. The Nagle algorithm is a means of improving the efficiency of TCP/IP networks by reducing the number of packets that need to be sent over the network. It works by combining a number of small outgoing messages and sending them all at once. All the data is immediately encoded without waiting for timers to fire or application buffers to fill past a specified threshold. Neural heuristics are computed in this mode but are not used. In general, this setting works well with time-sensitive and chatty or real-time traffic.

• Always—Use the Nagle algorithm. This is the default setting. All data is passed to the codec, which attempts to coalesce consume calls (if needed) to achieve better fingerprinting. A timer (6 ms) backs up the codec and causes leftover data to be consumed. Neural heuristics are computed in this mode but are not used.

For different types of traffic, one algorithm might be better than others. The considerations include latency added to the connection, compression, and SDR performance.

To configure neural framing for an FTP data channel, define an in-path rule with the destination port 20 and set its data reduction policy. To configure neural framing for a MAPI data channel, define an in-path rule with the destination port 7830 and set its data reduction policy.

WAN visibility mode

This setting enables WAN visibility, which pertains to how packets traversing the WAN are addressed.

WAN visibility mode is configurable for Auto-Discover and Fixed-Target rules. To configure WAN Visibility for Fixed-Target rules, you must use CLI commands. For details on WAN Visibility CLI commands, see the Riverbed Command-Line Interface Reference Manual.

You configure WAN visibility on the client-side controller (where the connection is initiated). The server-side SteelHead must also support WAN visibility.

• Correct Addressing—Turns WAN visibility off. Correct addressing uses SteelHead IP addresses and port numbers in the TCP/IP packet header fields for optimized traffic in both directions across the WAN. This is the default setting.

• Port Transparency—Preserves your server port numbers in the TCP/IP header fields for optimized traffic in both directions across the WAN. Traffic is optimized while the server port number in the TCP/IP header field appears to be unchanged. Routers and network monitoring devices deployed in the WAN segment between the communicating SteelHeads can view these preserved fields.

Use port transparency if you want to manage and enforce policies that are based on destination ports. If your WAN router is following traffic classification rules written in terms of client and network addresses, port transparency enables your routers to use existing rules to classify the traffic without any changes.

Port transparency enables network analyzers deployed within the WAN (between the SteelHeads) to monitor network activity and to capture statistics for reporting by inspecting traffic according to its original TCP port number.

Port transparency does not require dedicated port configurations on your controllers.

Port transparency only provides server port visibility. It does not provide server IP address visibility. For the Client Accelerator Controller, the client IP address and port numbers are preserved.

• Full Transparency—Preserves your client and server IP addresses and port numbers in the TCP/IP header fields for optimized traffic in both directions across the WAN. It also preserves VLAN tags. Traffic is optimized while these TCP/IP header fields appear to be unchanged. Routers and network monitoring devices deployed in the WAN segment between the communicating SteelHeads can view these preserved fields.

If both port transparency and full address transparency are acceptable solutions, port transparency is preferable. Port transparency avoids potential networking risks that are inherent to enabling full address transparency. For details, see the SteelHead Deployment Guide.

However, if you must see your client or server IP addresses across the WAN, full transparency is your only configuration option.

Enabling full address transparency requires symmetrical traffic flows between the client and server. If any asymmetry exists on the network, enabling full address transparency might yield unexpected results, up to and including loss of connectivity. For details, see the SteelHead Deployment Guide.

You can use Full Transparency with a stateful firewall. A stateful firewall examines packet headers, stores information, and then validates subsequent packets against this information. If your system uses a stateful firewall, this option is available:

• Full Transparency with Reset—Enables full address and port transparency and also sends a forward reset between receiving the probe response and sending the transparent inner channel SYN. This option ensures the firewall does not block inner transparent connections because of information stored in the probe connection. The forward reset is necessary because the probe connection and inner connection use the same IP addresses and ports and both map to the same firewall connection. The reset clears the probe connection created by the SteelHead and allows for the full transparent inner connection to traverse the firewall.
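To compare the modes side by side, the following Python sketch shows which addresses and ports would appear in the TCP/IP header on the WAN for client-to-server traffic. It is a simplified model built only from the descriptions above: the type and function names are hypothetical, the addresses and port numbers are example values, and the source port under port transparency is assumed to stay with the client-side optimizer. In a Client Accelerator deployment the client-side optimizer runs on the endpoint itself, which is consistent with the client IP address being preserved.

```python
from typing import NamedTuple


class Endpoint(NamedTuple):
    ip: str
    port: int


class WanHeader(NamedTuple):
    src: Endpoint
    dst: Endpoint


def wan_header(mode: str, client: Endpoint, server: Endpoint,
               client_side: Endpoint, server_side: Endpoint) -> WanHeader:
    """Header fields seen on the WAN for client-to-server optimized traffic."""
    if mode == "correct":
        # WAN visibility off: optimizer addresses and ports are used.
        return WanHeader(client_side, server_side)
    if mode == "port":
        # Only the server port is preserved, not the server IP address.
        return WanHeader(client_side, Endpoint(server_side.ip, server.port))
    if mode in ("full", "full-reset"):
        # Client and server IP addresses and ports are preserved.
        return WanHeader(client, server)
    raise ValueError(f"unknown WAN visibility mode: {mode!r}")


# Example values only; none of these come from a real deployment.
client = Endpoint("10.0.0.5", 51000)
server = Endpoint("172.16.1.10", 445)
client_side = Endpoint("10.0.0.5", 40001)
server_side = Endpoint("172.16.1.2", 7800)

port_mode = wan_header("port", client, server, client_side, server_side)
full_mode = wan_header("full", client, server, client_side, server_side)
```

In this model, a WAN router classifying on destination port 445 sees that port under both port and full transparency, but sees the server IP address 172.16.1.10 only under full transparency.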

For details on configuring WAN visibility and its implications, see the SteelHead Deployment Guide. To turn full transparency on globally by default, create an in-path auto-discover rule, select Full, and place it above the default in-path rule and after the Secure, Interactive, and RBT-Proto rules. You can configure a SteelHead for WAN visibility even if the server-side SteelHead does not support it, but the connection is not transparent. You can enable full transparency for servers in a specific IP address range and you can enable port transparency on a specific server. For details, see the SteelHead Deployment Guide.

The Top Talkers report displays statistics on the most active, heaviest users of WAN bandwidth, providing some WAN visibility without enabling a WAN Visibility Mode.