SteelCentral™ NetIM

2.4.0 System & Deployment Requirements

Supported Platforms

| Vendor | Hypervisor |
| --- | --- |
| VMware | ESXi 6.5 (\*), ESXi 6.7 (\*), ESXi 7.0 (\*) |
| Azure | Azure Hypervisor |
| Amazon Web Services | AWS Hypervisor |

(*) Note: The NetIM Virtual Appliance OVA is certified for deployment on VMware ESXi. In many cases, NetIM may be successfully deployed on other major hypervisors (e.g., KVM, AHV); however, these hypervisors have not been QA-tested or certified. Riverbed reserves the right to deny support, or to provide support only on a best-effort basis, for deployments on uncertified hypervisors.

Deployment Requirements

- Each VM requires a minimum of 4 virtual CPUs, 16 GB of RAM, and 175 GB of storage (75 GB for the OS partition and 100 GB for the application and persistence partition).
- VM snapshots are known to affect performance but can be enabled with appropriate caution.
  - VM snapshots are supported while NetIM services are down.
  - VM snapshots are supported while NetIM services are up, provided that the underlying storage is SSD-based, no hierarchy of snapshots is present, memory is not included in the snapshot, and the snapshot completes in 7 seconds or less.
  - Note: No adverse effects from snapshots were observed in internal testing using higher-performance VM infrastructure and the above recommendations; hence, snapshots are supported with the following limitation:
    - Riverbed Global Support reserves the right to ask you to disable snapshots if snapshots are suspected of causing instability in your NetIM implementation.
- vMotion can be enabled with appropriate caution.
  - Note: No adverse effects from vMotion were observed in internal testing using higher-performance VM infrastructure; hence, vMotion is supported with the following limitation:
    - Riverbed Global Support reserves the right to ask you to disable vMotion if vMotion is suspected of causing instability in your NetIM implementation.

- NetIM Manager and Data Managers must be allocated enterprise-class, high-performance storage (Premium SSD, Standard/General Purpose SSD).
  - Sustained throughput per physical host: 8,000 IOPS / 800 MBps
  - Average response time: 2-4 ms
- NetIM Worker and NetIM Core must be allocated enterprise-class storage (Standard/General Purpose SSD, SSD Accelerated).
  - Sustained throughput per physical host: 6,000 IOPS / 600 MBps
  - Average response time: 3-5 ms

- NetIM Core must be provisioned with a network interface capable of sustaining the throughput required for CLI/SNMP collection and trap/syslog reception.
- NetIM Worker(s) must be provisioned with a network interface capable of sustaining the throughput required for polling and alert notification.
- By default, NetIM uses the following IPv4 subnets for internal communication: 10.255.0.0/16, 10.50.0.0/16, 10.60.0.0/16, 172.17.0.0/16, and 172.18.0.0/16. During setup, however, NetIM modifies its subnet usage if it suspects or detects that one of these subnets is already in use in your network. The Advanced Docker Configuration step of NetIM setup lets you view and manually select alternative subnets, if required.
- Access to a Network Time Protocol (NTP) server is required for proper time synchronization between components.
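Before running setup, it can be useful to check whether any of your own networks overlap NetIM's default internal subnets. The following is a minimal sketch using Python's standard `ipaddress` module; the function name `find_conflicts` and the sample site networks are illustrative assumptions and are not part of NetIM.

```python
import ipaddress

# Default internal subnets listed in the requirements above.
NETIM_DEFAULT_SUBNETS = [
    "10.255.0.0/16",
    "10.50.0.0/16",
    "10.60.0.0/16",
    "172.17.0.0/16",
    "172.18.0.0/16",
]


def find_conflicts(site_networks):
    """Return (site_network, netim_subnet) pairs whose address ranges overlap.

    `site_networks` is a list of CIDR strings describing networks already
    in use at your site (hypothetical input, not something NetIM provides).
    """
    defaults = [ipaddress.ip_network(s) for s in NETIM_DEFAULT_SUBNETS]
    conflicts = []
    for cidr in site_networks:
        # strict=False tolerates host bits being set, e.g. "10.50.12.7/24".
        net = ipaddress.ip_network(cidr, strict=False)
        for default in defaults:
            if net.version == default.version and net.overlaps(default):
                conflicts.append((str(net), str(default)))
    return conflicts


if __name__ == "__main__":
    # Example site networks (assumed values for illustration only).
    for site, netim in find_conflicts(["10.50.12.0/24", "192.168.1.0/24"]):
        print(f"{site} overlaps NetIM default subnet {netim}")
```

Any reported overlap indicates a subnet you may want to change in the Advanced Docker Configuration step.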

Deployment Guidelines

| Device Count | Interface Count / Polled Interface Count (\*) | NetIM Manager (\*\*) | NetIM Data Manager (\*\*) | NetIM Worker | NetIM Core | Managers | Data Managers (\*\*\*) | Workers (\*\*\*\*) | Core |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 2.5K | 100K / 50K | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 1 TB Storage (App) | N/A | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 100 GB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 100 GB Storage (App) | 1 | 0 | 1 | 1 |
| 5K | 200K / 100K | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 2 TB Storage (App) | N/A | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 100 GB Storage (App) | 6 vCPUs, 32 GB Memory, 75 GB Storage (OS), 200 GB Storage (App) | 1 | 0 | 2 | 1 |
| 10K | 400K / 200K | 6 vCPUs, 24 GB Memory, 75 GB Storage (OS), 2 TB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 2 TB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 100 GB Storage (App) | 8 vCPUs, 48 GB Memory, 75 GB Storage (OS), 250 GB Storage (App) | 1 | 1 | 4 | 1 |
| 15K | 600K / 300K | 8 vCPUs, 32 GB Memory, 75 GB Storage (OS), 2 TB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 2 TB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 100 GB Storage (App) | 8 vCPUs, 64 GB Memory, 75 GB Storage (OS), 300 GB Storage (App) | 1 | 1 | 6 | 1 |
| 20K | 800K / 400K | 8 vCPUs, 40 GB Memory, 75 GB Storage (OS), 2 TB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 2 TB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 100 GB Storage (App) | 8 vCPUs, 80 GB Memory, 75 GB Storage (OS), 350 GB Storage (App) | 1 | 2 | 8 | 1 |
| 25K | 1M / 500K | 10 vCPUs, 48 GB Memory, 75 GB Storage (OS), 3 TB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 3 TB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 100 GB Storage (App) | 8 vCPUs, 96 GB Memory, 75 GB Storage (OS), 400 GB Storage (App) | 1 | 2 | 10 | 1 |
| 30K | 1.2M / 600K | 10 vCPUs, 56 GB Memory, 75 GB Storage (OS), 3 TB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 3 TB Storage (App) | 4 vCPUs, 16 GB Memory, 75 GB Storage (OS), 100 GB Storage (App) | 8 vCPUs, 112 GB Memory, 75 GB Storage (OS), 450 GB Storage (App) | 1 | 3 | 12 | 1 |

(* Assuming 5-minute polling and minimal latency between workers and polled elements. If CoS metrics are polled, each CoS definition applied to a polled interface counts as an additional logical interface, thereby increasing the overall polled interface count.)

(** Manager & Data Manager Application Storage requirements are approximate and primarily dependent on metric retention and roll-up settings. For proof-of-concepts, the default App Storage of 100GB may be sufficient if metric retention settings are reduced from the system defaults.)

(*** Write and query performance may improve with additional Data Managers.)

(**** Use the netimsh “scale” command on the Manager to scale the poller, alerting, and thresholding services to equal the number of deployed Workers. See the NetIM Installation Guide or Upgrade Guide for instructions.)
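As a planning aid, the VM counts in the Deployment Guidelines table can be encoded as a small lookup. The sketch below assumes that each row is read as an upper bound on device count and polled interface count, which is an interpretation of the table rather than a documented rule; the names `Tier` and `pick_tier` are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    max_devices: int
    max_polled_interfaces: int
    managers: int
    data_managers: int
    workers: int
    cores: int


# VM counts copied from the Deployment Guidelines table (one entry per row).
TIERS = [
    Tier(2_500, 50_000, 1, 0, 1, 1),
    Tier(5_000, 100_000, 1, 0, 2, 1),
    Tier(10_000, 200_000, 1, 1, 4, 1),
    Tier(15_000, 300_000, 1, 1, 6, 1),
    Tier(20_000, 400_000, 1, 2, 8, 1),
    Tier(25_000, 500_000, 1, 2, 10, 1),
    Tier(30_000, 600_000, 1, 3, 12, 1),
]


def pick_tier(devices: int, polled_interfaces: int) -> Optional[Tier]:
    """Return the smallest table row covering both counts, or None.

    Assumption: a row applies when both the device count and the polled
    interface count are at or below that row's values.
    """
    for tier in TIERS:
        if (devices <= tier.max_devices
                and polled_interfaces <= tier.max_polled_interfaces):
            return tier
    return None  # Larger than the biggest documented configuration.


if __name__ == "__main__":
    print(pick_tier(4_000, 60_000))
```

Remember that polled interface counts should include CoS logical interfaces, as described in footnote (*).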

NetIM Test Engine Requirements

NetIM’s Test Engine is supported on 64-bit versions of Windows 10, Windows Server 2012, Windows Server 2016, Red Hat Enterprise Linux 6, Red Hat Enterprise Linux 7, and Ubuntu 18.04. The Test Engine requires a 2.0 GHz processor with 2 or more cores (4 cores recommended) and a minimum of 4 GB of memory. Each Test Engine is expected to support up to 1,000 simple tests, each executing every 5 minutes. Complex Selenium tests will limit Test Engine scalability.

Supported Display Resolutions

Recommended - 1280 x 1024 and higher

Polling & SNMP/WMI Support

SNMP

A NetIM Worker supports polling up to 2500 devices and 50,000 interfaces via SNMP and ping at a 5-minute polling interval. (Note: Each CoS definition applied to a polled interface acts as an additional logical interface, thereby increasing the overall polled interface count. For example, an interface with 5 CoS classes being polled counts as 6 interfaces – 1 physical interface and 5 logical interfaces).
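The CoS counting rule above lends itself to a quick estimate. The following sketch computes an effective polled interface count and a rough Worker count from the per-Worker limits stated in this section. It assumes, for simplicity, that every polled interface carries the same number of CoS classes; the function names are illustrative, and the result is an estimate rather than official sizing guidance.

```python
import math

# Per-Worker limits at a 5-minute polling interval, as stated above.
DEVICES_PER_WORKER = 2_500
INTERFACES_PER_WORKER = 50_000


def effective_interface_count(physical_interfaces, cos_classes_per_interface=0):
    """Each CoS class on a polled interface counts as one extra logical interface."""
    return physical_interfaces * (1 + cos_classes_per_interface)


def workers_needed(devices, effective_interfaces):
    """Rough Worker estimate: whichever limit (devices or interfaces) binds first."""
    by_devices = math.ceil(devices / DEVICES_PER_WORKER)
    by_interfaces = math.ceil(effective_interfaces / INTERFACES_PER_WORKER)
    return max(1, by_devices, by_interfaces)


if __name__ == "__main__":
    # One interface with 5 CoS classes counts as 6 interfaces.
    print(effective_interface_count(1, 5))
    # 20,000 polled interfaces with 5 CoS classes each on 1,000 devices.
    total = effective_interface_count(20_000, 5)
    print(total, workers_needed(1_000, total))
```

In the second example the interface limit, not the device limit, determines the Worker count.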

NetIM supports SNMPv1, SNMPv2c, and SNMPv3 polling. SNMPv3 autodiscovery and trap sending are supported.

WMI

A NetIM Worker supports polling approximately 300 Windows systems via PowerShell remoting (PowerShell 5.1 or higher) at a 5-minute polling interval. For information on WMI and PowerShell configuration and troubleshooting, see the NetIM User Guide on the support site.

User Authentication

NetIM supports local authentication and integration with Security Assertion Markup Language 2.0 (SAML 2.0) compliant identity providers and TACACS+ servers.

Supporting Software

Web Browser:

Google Chrome 92 (64-bit Edition) and higher (Chrome does not cache content from self-signed servers. For best Web interface performance, replace NetIM’s self-signed certificate with a signed certificate from a trusted CA.)

Mozilla Firefox 90 (64-bit Edition) and higher

Microsoft Edge 92 and higher

Safari 13 and higher

Networking:

TCP/IP networking software is required.

![]()

NetIM supports integration with SteelCentral Portal, NetPlanner, NetAuditor, UCExpert, and NetProfiler (see Knowledge Base Article S27459 on SteelCentral Interoperability).

Custom integrations using NetIM’s RESTful APIs are also supported. Each integration places additional load on NetIM services; hence, a dedicated NetIM may be required per product integration.

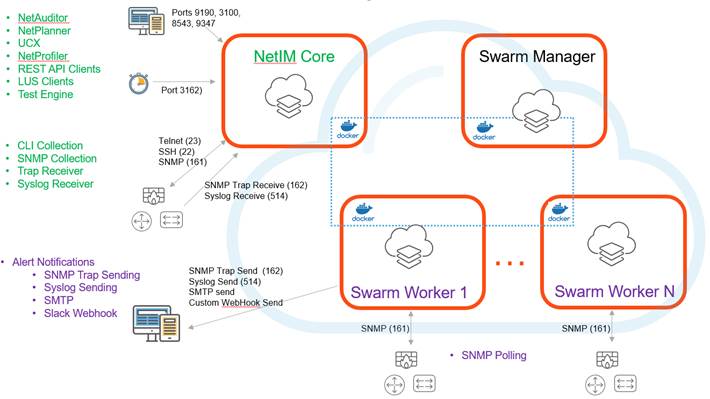

High-Level Architectural Diagram

Access to Network

Ensure that the following ports are open:

| Component | Outbound Ports | Inbound Ports |
| --- | --- | --- |
| NetIM Core | TCP/22 (SSH) | TCP/22 (SSH) |
| | | TCP/9190 (HTTP web interface) |
| | | TCP/3389 (RDP) |
| | | TCP/3100 (LUS API Clients) |
| | | TCP/8543 (HTTPS web interface) |
| | UDP/8162 (SNMP Trap); see KBA S33800 | |
| | UDP/8514 (Syslog); see KBA S33800 | |
| | TCP/25 or other (SMTP) | |
| | UDP/161 (SNMP) | |
| | TCP/3162 (Test Engine Controller) | |
| | TCP/9191 (API Gateway) | |
| | TCP/23 (Telnet) | |
| | UDP/123 (NTP) | |
| | | TCP/9347 (Portal DCL) |
| | | TCP/8085 (cAdvisor) |
| | | TCP/9001 (Portainer) |
| Swarm Manager & Workers | UDP/123 (NTP) | |
| | TCP-UDP/7946 (Docker) | TCP-UDP/7946 (Docker) |
| | TCP-UDP/4789 (Docker) | TCP-UDP/4789 (Docker) |
| | TCP/2377 (Docker) | TCP/2377 (Docker) |
| | TCP/22 (SSH) | TCP/22 (SSH) |
| | | TCP/3389 (RDP) |
| | UDP/162 (SNMP Trap) | |
| | UDP/514 (Syslog) | |
| | | TCP/9100 (Portainer) |
| | | TCP/9001 (Portainer) |
| | | TCP/8901 (Job Service Monitor) |
| | | TCP/8919 (Service Monitor) |
| | | TCP/9000 (Kafka Manager) |
| | | TCP/8088 (Redis Commander) |
| | TCP/80 (Internet Hosts) | TCP/80 (PgAdmin4) |
| | | TCP/3000 (Grafana) |
| | | TCP/8085 (cAdvisor) |
| | | TCP/5100 (Elastic HQ) |
| | | TCP/9143 (API Gateway) |
| | TCP/443 (Internet Hosts) | |
| | TCP/25 or other (SMTP) | |
| | UDP/161 (SNMP) | |
| | Slack Custom Webhook port | |
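To verify that the TCP ports listed above are reachable, a short script can attempt a connection to each one. The sketch below uses only the Python standard library. The hostname `netim-core.example.com` is a placeholder, the helper names are illustrative, and the check covers TCP only: a UDP port (SNMP, syslog, NTP) cannot be confirmed open with a simple connect test.

```python
import re
import socket

# Matches entries written like "TCP/22 (ssh)" or "TCP-UDP/7946 (Docker)".
PORT_SPEC = re.compile(r"^(TCP-UDP|TCP|UDP)/(\d+)")


def parse_port_spec(spec):
    """Return (protocols, port) for a table entry, or None if it has no port."""
    match = PORT_SPEC.match(spec.strip())
    if match is None:
        return None
    protocols = match.group(1).lower().split("-")
    return protocols, int(match.group(2))


def tcp_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    host = "netim-core.example.com"  # placeholder hostname (assumption)
    inbound = ["TCP/22 (SSH)", "TCP/8543 (HTTPS web interface)", "TCP/3100 (LUS API Clients)"]
    for entry in inbound:
        parsed = parse_port_spec(entry)
        if parsed and "tcp" in parsed[0]:
            state = "open" if tcp_port_open(host, parsed[1]) else "unreachable"
            print(f"{entry}: {state}")
```

Run the check from a host on the same side of the firewall as the clients that need access, since results depend on the network path being tested.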

Cloud Images

Deploying NetIM in Amazon Web Services

Native Amazon Web Services (AWS) AMI images are available for deploying NetIM in AWS. Contact Riverbed Product Management for fulfillment via sc-netimcloud-setup@riverbed.com.

- Instance type
  - We recommend the m5 series.
  - See AWS Instance Types for all instance types.
  - Refer to the NetIM Deployment Guidelines table for the appropriate number of vCPUs and memory size.
- Volume type
  - We recommend "General Purpose SSD (gp2)" or "Provisioned IOPS SSD (io1)."
  - See AWS Volume Types for all volume types.
  - Refer to the NetIM Deployment Guidelines table for the required volume sizes.

Note: Storage-optimized instance types, such as the i3 series, cannot be used as-is; additional configuration is required post-installation. With storage-optimized instance types, high-performance volumes are attached in addition to the regular EBS volumes for root and data1. Without manual post-deployment modifications, NetIM will not take advantage of the high-performance volumes. If you plan to use storage-optimized instance types, you must follow additional deployment instructions.
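To map a row of the NetIM Deployment Guidelines table to an m5 instance size, the vCPU and memory requirements can be compared against the published m5 sizes. The sketch below hard-codes the m5 vCPU and memory figures as commonly published by AWS; verify them against the AWS Instance Types page before relying on them. It also treats GB and GiB as interchangeable, which is a simplification, and the function name `smallest_m5` is illustrative.

```python
# (name, vCPUs, memory in GiB) for the m5 series; confirm against AWS documentation.
M5_SIZES = [
    ("m5.large", 2, 8),
    ("m5.xlarge", 4, 16),
    ("m5.2xlarge", 8, 32),
    ("m5.4xlarge", 16, 64),
    ("m5.8xlarge", 32, 128),
    ("m5.12xlarge", 48, 192),
    ("m5.16xlarge", 64, 256),
    ("m5.24xlarge", 96, 384),
]


def smallest_m5(vcpus, memory_gib):
    """Return the smallest m5 size meeting both requirements, or None."""
    for name, cpu, mem in M5_SIZES:
        if cpu >= vcpus and mem >= memory_gib:
            return name
    return None


if __name__ == "__main__":
    # Minimum VM from the Deployment Requirements: 4 vCPUs, 16 GB.
    print(smallest_m5(4, 16))
    # NetIM Core at the 30K-device tier: 8 vCPUs, 112 GB.
    print(smallest_m5(8, 112))
```

Note that memory, rather than vCPU count, drives the result for the larger NetIM Core configurations.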

Deploying NetIM in Azure

Native Azure images are available on the Riverbed Support site for deploying NetIM in Azure.

- VM instance type
  - We recommend the Dsv3 series and Dasv4 series.
  - See Azure Dv3-series and Dsv3-series.
  - See Azure Dav4-series and Dasv4-series.
  - Refer to the NetIM Deployment Guidelines table for the appropriate number of vCPUs and memory size.
- Disk type
  - We recommend Premium SSD.
    - Note: The VM instance type must be compatible with Premium SSD; more specifically, it should be an “s” type.
  - See Azure Disk Types.
  - Refer to the NetIM Deployment Guidelines table for the required volume sizes.

Documentation Suite

The following NetIM product documentation is available in both HTML and PDF formats on the Support Site:

- Backing Up and Restoring NetIM v2.4.0 Using SAVE_RESTORE
- Migrating to SteelCentral NetIM v2.4.0
- SteelCentral NetIM v2.4.0 Installation Guide
- SteelCentral NetIM v2.4.0 ISO Update Guide
- SteelCentral NetIM v2.4.0 Release Notes
- SteelCentral NetIM v2.4.0 System Requirements
- SteelCentral NetIM v2.4.0 User Guide

©2021 Riverbed Technology. All rights reserved. Riverbed and any Riverbed product or service name or logo used herein are trademarks of Riverbed Technology. All other trademarks used herein belong to their respective owners. The trademarks and logos displayed herein may not be used without the prior written consent of Riverbed Technology or their respective owners.